大数据毕设-基于hadoop+spark+echarts+机器学习的豆瓣图书数据可视化分析系统设计实现(附开发文档+部署)

作者:雨晨源码

简介:java、微信小程序、安卓;定制开发,远程调试 代码讲解,文档指导,ppt制作

精彩专栏推荐订阅:在下方专栏

Java精彩实战毕设项目案例

小程序精彩项目案例

Python实战项目案例

文末获取源码

文章目录

- 豆瓣图书数据可视化分析系统-系统前言简介

- Hadoop豆瓣图书数据可视化分析系统-开发技术与环境

- Hadoop豆瓣图书数据可视化分析系统-功能介绍

- Hadoop豆瓣图书数据可视化分析系统-演示图片

- Hadoop豆瓣图书数据可视化分析系统-代码展示

- Hadoop豆瓣图书数据可视化分析系统-结语(文末获取源码)

本次文章主要是介绍基于hadoop+spark+echarts+机器学习的豆瓣图书数据可视化分析系统的功能,系统分为二个角色,分别是用户和管理员

豆瓣图书数据可视化分析系统-系统前言简介

- 基于大数据的豆瓣图书数据可视化分析系统旨在挖掘和分析海量图书数据背后的规律和趋势,为读者、出版商和数据分析师提供更深入的洞察和辅助决策。本系统依托于豆瓣庞大的图书数据库,通过收集和分析图书的各项指标,如分类、评分、评论数量等,使用先进的数据可视化技术,直观地展示数据中的信息,帮助用户更好地理解和把握图书市场的动态和趋势。

- 随着信息技术的发展,大数据已经成为各行各业重要的资源之一,而数据可视化则是将海量数据转化为有价值信息的重要手段。本系统将通过可视化的方式展示豆瓣图书数据中的模式和趋势,帮助用户发现和分析隐藏在数据中的规律和信息。同时,本系统还将提供强大的数据分析功能,通过运用数据挖掘和机器学习等技术,对数据进行更深层次的挖掘和分析,为读者、出版商和数据分析师提供更加准确、深入的决策支持。

- 通过本系统,用户可以方便地查询和筛选图书数据,观察图书的分类分布、评分分布、热门图书、评论数量等指标,同时还可以对数据进行深入的分析和挖掘,了解读者的阅读喜好、市场趋势和预测未来的发展。本系统的设计和实现旨在为用户提供更加全面、准确、便捷的数据可视化分析服务,帮助用户更好地把握市场动态和趋势,提高决策的准确性和效率。

Hadoop豆瓣图书数据可视化分析系统-开发技术与环境

- 开发语言:Python

- 后端框架:Django、hadoop分布式框架、spark分析

- 前端:Vue

- 数据库:MySQL

- 系统架构:B/S

- 开发工具:Python环境,pycharm,mysql(5.7或者8.0)

Hadoop豆瓣图书数据可视化分析系统-功能介绍

用户和管理员(亮点:大数据、hadoop分布式框架爱、大屏可视化分析)

用户:各作者占比、所有图书数据、评分最高书籍前十、评分请假占比、各时间段的书籍数量、评论最多书籍前十、词云等。

管理员:登录、用户管理、认证和授权、豆瓣数据分析等。

Hadoop豆瓣图书数据可视化分析系统-演示图片

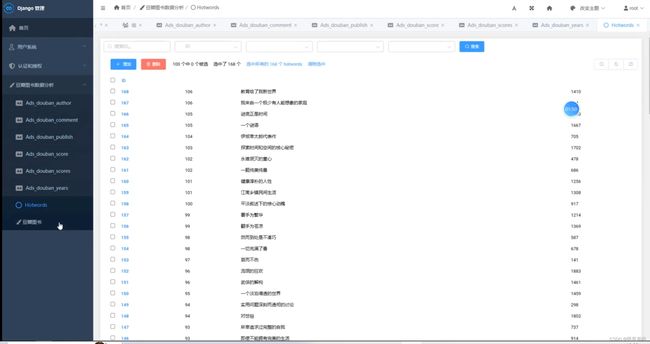

☀️豆瓣图书列表☀️

Hadoop豆瓣图书数据可视化分析系统-代码展示

1.数据清洗【代码如下(示例):】

if __name__ == '__main__':

# urllib3.contrib.pyopenssl.inject_into_urllib3()

html_re = re.compile(r'<[^>]+>', re.S)

db = pymysql.connect(user=tools.Config.MYSQL_USER,

password=tools.Config.MYSQL_PWD,

host=tools.Config.MYSQL_HOST,

database=tools.Config.MYSQL_DB,

charset=tools.Config.MYSQL_CHARSET)

db_cursor = db.cursor(cursor=pymysql.cursors.DictCursor)

db_cursor.execute("TRUNCATE TABLE article")

host = "www.hsnc.edu.cn"

# url="https://www.hsnc.edu.cn/index/xyxx/xyxw/162.htm"

url = "https://www.hsnc.edu.cn/index/xyxx/xyxw.htm"

resp = get_page_data(host, url)

sp = BeautifulSoup(resp, 'lxml')

div_titles = sp.find_all("div", "biaoti")

for div_title in div_titles:

a = div_title.find_all("a")[1]

title = a.string # 新闻标题

link = a.attrs["href"]

link = "https://" + host + link.replace("../..", "").replace("/..", "") # 详细内容的链接

print(link)

resp_cnt = get_page_data(host, link)

sp_cnt = BeautifulSoup(resp_cnt, 'lxml')

div_author = sp_cnt.find("div", "auther")

html_author = div_author.__str__()

html_author = html_re.sub("", html_author).split(" ")

author = html_author[0].replace("来源:", "").strip() # 来源

if html_author[1].strip() != "":

ptime = html_author[1].replace("发布日期:", "").strip() # 发布时间

else:

ptime = html_author[2].replace("发布日期:", "").strip() # 发布时间

div_cnt = sp_cnt.find("div", "v_news_content")

cnt = html_re.sub("", div_cnt.__str__()).replace("\n", "

") # 内容

cnt = cnt.strip("

")

insert_sql = f"INSERT INTO article (title,content,source,t) " \

f"VALUES ('" + addslashes(title) + "','" + addslashes(cnt) + "','" + addslashes(

author) + "','" + addslashes(ptime) + "')"

try:

db_cursor.execute(insert_sql)

db.commit()

except:

print("insert error")

print(insert_sql)

print("休眠1秒")

time.sleep(1)

print("休眠10秒")

time.sleep(10)

2.数据分析【代码如下(示例):】

# 进入下N页的列表

cur_page = 162

url = "https://www.hsnc.edu.cn/index/xyxx/xyxw/" + str(cur_page) + ".htm"

while cur_page >= 140:

resp = get_page_data(host, url)

sp = BeautifulSoup(resp, 'lxml')

div_titles = sp.find_all("div", "biaoti")

for div_title in div_titles:

a = div_title.find_all("a")[1]

title = a.string # 新闻标题

link = a.attrs["href"]

link = "https://" + host + link.replace("../..", "").replace("/..", "") # 详细内容的链接

print(link)

resp_cnt = get_page_data(host, link)

sp_cnt = BeautifulSoup(resp_cnt, 'lxml')

div_author = sp_cnt.find("div", "auther")

html_author = div_author.__str__()

html_author = html_re.sub("", html_author).split(" ")

author = html_author[0].replace("来源:", "").strip() # 来源

if html_author[1].strip() != "":

ptime = html_author[1].replace("发布日期:", "").strip() # 发布时间

else:

ptime = html_author[2].replace("发布日期:", "").strip() # 发布时间

div_cnt = sp_cnt.find("div", "v_news_content")

cnt = html_re.sub("", div_cnt.__str__()).replace("\n", "

") # 内容

cnt = cnt.strip("

")

insert_sql = f"INSERT INTO article (title,content,source,t) " \

f"VALUES ('" + addslashes(title) + "','" + addslashes(cnt) + "','" + addslashes(

author) + "','" + addslashes(ptime) + "')"

try:

db_cursor.execute(insert_sql)

db.commit()

except:

print("insert error")

print(insert_sql)

print("休眠1秒")

time.sleep(1)

print("休眠10秒")

time.sleep(10)

cur_page -= 1

url = "https://www.hsnc.edu.cn/index/xyxx/xyxw/" + str(cur_page) + ".htm"

db_cursor.close()

db.close()

def main():

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'stfeel.settings')

try:

from django.core.management import execute_from_command_line

except ImportError as exc:

raise ImportError(

"Couldn't import Django. Are you sure it's installed and "

"available on your PYTHONPATH environment variable? Did you "

"forget to activate a virtual environment?"

) from exc

Hadoop豆瓣图书数据可视化分析系统-结语(文末获取源码)

Java精彩实战毕设项目案例

小程序精彩项目案例

Python实战项目集

如果大家有任何疑虑,或者对这个系统感兴趣,欢迎点赞收藏、留言交流啦!

欢迎在下方位置详细交流。