Image Processing with OpenCV in C++

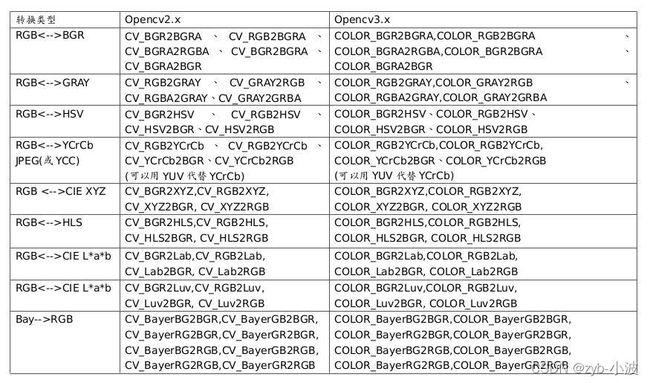

1. Channel conversion

void cvtColor(InputArray src, OutputArray dst, int code, int dstCn=0);

2. Thresholding

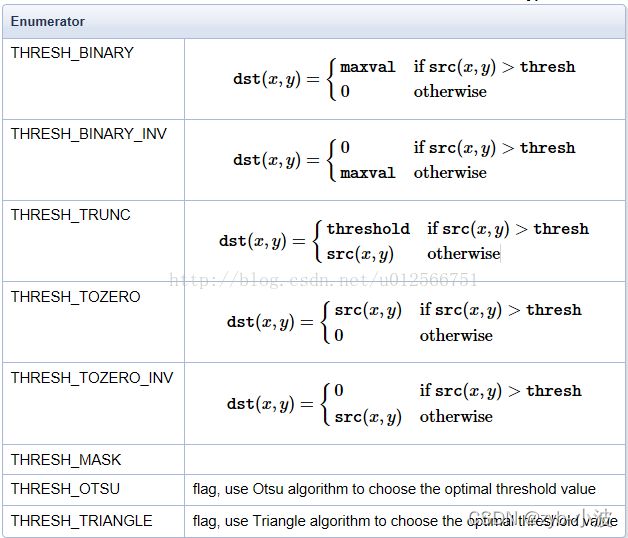

double cv::threshold(InputArray src, OutputArray dst, double thresh, double maxval, int type);

3. Morphological operations

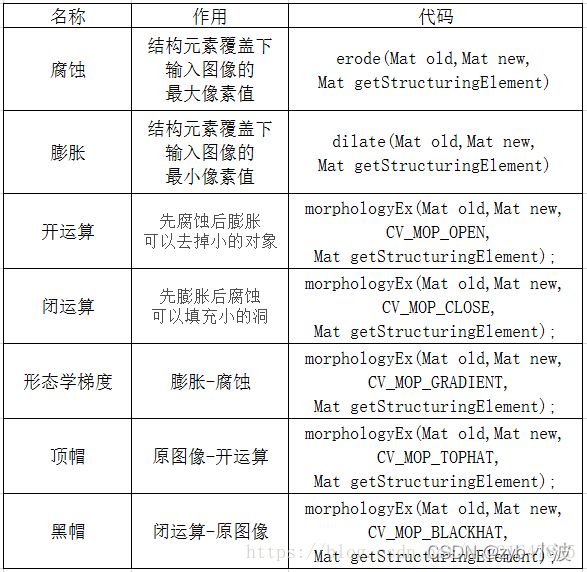

void morphologyEx(InputArray src, OutputArray dst, int op, InputArray kernel, Point anchor=Point(-1,-1), int iterations=1, int borderType=BORDER_CONSTANT, const Scalar& borderValue=morphologyDefaultBorderValue());

4. Filters

// Mean (box) filter

void blur(InputArray src, OutputArray dst, Size ksize, Point anchor=Point(-1, -1), int borderType=BORDER_DEFAULT);

// Gaussian filter

void GaussianBlur(InputArray src, OutputArray dst, Size ksize, double sigmaX, double sigmaY=0, int borderType=BORDER_DEFAULT);

// Median filter

void medianBlur(InputArray src, OutputArray dst, int ksize);

// Bilateral filter

void bilateralFilter(InputArray src, OutputArray dst, int d, double sigmaColor, double sigmaSpace, int borderType=BORDER_DEFAULT);

5. Edge detection

// Canny operator

void Canny(InputArray image, OutputArray edges, double threshold1, double threshold2, int apertureSize=3, bool L2gradient=false);

// Sobel operator

void Sobel(InputArray src, OutputArray dst, int ddepth, int dx, int dy, int ksize=3, double scale=1, double delta=0, int borderType=BORDER_DEFAULT);

// Laplacian operator

void Laplacian( InputArray src, OutputArray dst, int ddepth,int ksize = 1, double scale =1, double delta = 0, int borderType = BORDER_DEFAULT);

6. Contour extraction

// Contour extraction

void findContours(InputOutputArray image, OutputArrayOfArrays contours, OutputArray hierarchy, int mode, int method, Point offset=Point());

// Contour drawing

void drawContours(InputOutputArray image, InputArrayOfArrays contours, int contourIdx, const Scalar& color, int thickness=1, int lineType=8, InputArray hierarchy=noArray(), int maxLevel=INT_MAX, Point offset=Point());

// Connected-component analysis

int connectedComponentsWithStats(InputArray image, OutputArray labels, OutputArray stats, OutputArray centroids, int connectivity=8, int ltype=CV_32S);

7. Line extraction and polygon fitting

// Line detection (standard Hough transform)

void HoughLines(InputArray image, OutputArray lines, double rho, double theta, int threshold, double srn=0, double stn=0);

// Line-segment detection (probabilistic Hough transform)

void HoughLinesP(InputArray image, OutputArray lines, double rho, double theta, int threshold, double minLineLength=0, double maxLineGap=0);

// Polygon approximation

void approxPolyDP(InputArray curve, OutputArray approxCurve, double epsilon, bool closed);

// Ellipse fitting: fitEllipse computes the fit; the ellipse overloads only draw the result

RotatedRect fitEllipse(InputArray points);

void ellipse(Mat& img, Point center, Size axes, double angle, double startAngle, double endAngle, const Scalar& color, int thickness=1, int lineType=8, int shift=0);

void ellipse(Mat& img, const RotatedRect& box, const Scalar& color, int thickness=1, int lineType=8);

// Line fitting

void fitLine(InputArray points, OutputArray line, int distType, double param, double reps, double aeps);

8. Corner detection

// Harris corner detection

void cornerHarris(InputArray src, OutputArray dst, int blockSize, int ksize, double k, int borderType=BORDER_DEFAULT);

// Shi-Tomasi corner detection

void goodFeaturesToTrack(InputArray image, OutputArray corners, int maxCorners, double qualityLevel, double minDistance, InputArray mask=noArray(), int blockSize=3, bool useHarrisDetector=false, double k=0.04);

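9. Feature point matching

// FAST

static Ptr<FastFeatureDetector> FastFeatureDetector::create(int threshold=10, bool nonmaxSuppression=true, int type=FastFeatureDetector::TYPE_9_16);

// SURF (opencv_contrib xfeatures2d module)

typedef SURF cv::xfeatures2d::SurfDescriptorExtractor;

typedef SURF cv::xfeatures2d::SurfFeatureDetector;

// SIFT (xfeatures2d module; available as cv::SIFT in the main features2d module since OpenCV 4.4)

static Ptr<SIFT> cv::xfeatures2d::SIFT::create(int nfeatures=0, int nOctaveLayers=3, double contrastThreshold=0.04, double edgeThreshold=10, double sigma=1.6);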

10. Image enhancement

- Histogram equalization

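#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char *argv[])
{
    // Load the file as a 3-channel color image
    Mat image = imread("Test.jpg", IMREAD_COLOR);
    if (image.empty())
    {
        std::cout << "Failed to open the image; please check the path" << std::endl;
        return -1;
    }
    imshow("Original image", image);

    // Split into channels (OpenCV stores them in B, G, R order),
    // equalize each channel independently, then merge them back
    Mat imageRGB[3];
    split(image, imageRGB);
    for (int i = 0; i < 3; i++)
    {
        equalizeHist(imageRGB[i], imageRGB[i]);
    }
    merge(imageRGB, 3, image);

    imshow("Histogram equalization result", image);
    waitKey();
    return 0;
}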

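- Laplacian operator

Convolving the image with a 4-neighborhood Laplacian sharpening kernel whose center value is 5 sharpens and enhances the image. The kernel rows are (0, -1, 0), (-1, 5, -1), (0, -1, 0).

This kernel increases local image contrast:

#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char *argv[])
{
    Mat image = imread("Test.jpg", IMREAD_COLOR);
    if (image.empty())
    {
        std::cout << "Failed to open the image; please check the path" << std::endl;
        return -1;
    }
    imshow("Original image", image);

    Mat imageEnhance;
    // Sharpening kernel: identity plus the 4-neighborhood Laplacian (coefficients sum to 1)
    Mat kernel = (Mat_<float>(3, 3) << 0, -1, 0, -1, 5, -1, 0, -1, 0);
    // The fourth argument is the output depth; keep it equal to the input depth
    filter2D(image, imageEnhance, image.depth(), kernel);

    imshow("Laplacian enhancement result", imageEnhance);
    waitKey();
    return 0;
}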

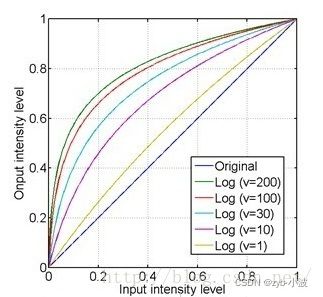

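- Image enhancement with the logarithmic (log) transform

The log transform expands the low-gray range of an image, revealing more detail there, and compresses the high-gray range, reducing detail there, so it emphasizes the dark parts of the image. In the parameterized form s = log_{v+1}(1 + v·r), with r normalized to [0, 1], a larger base v + 1 expands the low-gray range and compresses the high-gray range more strongly. The program below applies the natural logarithm to the raw 0-255 values and then rescales the result to 0-255 with min-max normalization.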

#include <cmath>
#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char *argv[])
{
    Mat image = imread("Test.jpg");
    if (image.empty())
        return -1;

    Mat imageLog(image.size(), CV_32FC3);
    for (int i = 0; i < image.rows; i++)
    {
        for (int j = 0; j < image.cols; j++)
        {
            // s = log(1 + r) on each of the B, G, R channels
            imageLog.at<Vec3f>(i, j)[0] = std::log(1.0f + image.at<Vec3b>(i, j)[0]);
            imageLog.at<Vec3f>(i, j)[1] = std::log(1.0f + image.at<Vec3b>(i, j)[1]);
            imageLog.at<Vec3f>(i, j)[2] = std::log(1.0f + image.at<Vec3b>(i, j)[2]);
        }
    }

    // Normalize to 0~255
    normalize(imageLog, imageLog, 0, 255, NORM_MINMAX);
    // Convert to an 8-bit image for display
    convertScaleAbs(imageLog, imageLog);

    imshow("Source", image);
    imshow("after", imageLog);
    waitKey();
    return 0;
}

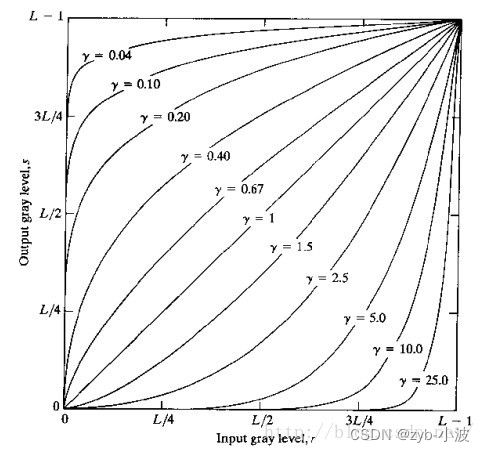

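- Image enhancement with the gamma transform

The gamma transform corrects an image by enhancing detail in either the low or the high gray levels. With γ = 1 as the dividing line, the smaller the value, the more strongly the low-gray range is expanded; the larger the value, the more strongly the high-gray range is expanded. Choosing γ therefore selects which end of the gray range gains detail. The program below uses γ = 3.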

#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char *argv[])
{
    Mat image = imread("Test.jpg");
    if (image.empty())
        return -1;

    Mat imageGamma(image.size(), CV_32FC3);
    for (int i = 0; i < image.rows; i++)
    {
        for (int j = 0; j < image.cols; j++)
        {
            for (int c = 0; c < 3; c++)
            {
                // Cube each channel value, i.e. gamma = 3
                float v = image.at<Vec3b>(i, j)[c];
                imageGamma.at<Vec3f>(i, j)[c] = v * v * v;
            }
        }
    }

    // Normalize to 0~255
    normalize(imageGamma, imageGamma, 0, 255, NORM_MINMAX);
    // Convert to an 8-bit image for display
    convertScaleAbs(imageGamma, imageGamma);

    imshow("Original image", image);
    imshow("Gamma transform enhancement result", imageGamma);
    waitKey();
    return 0;
}

11. Calibration

// Chessboard corner detection (C++ counterpart of the legacy C function cvFindChessboardCorners)

bool findChessboardCorners(InputArray image, Size patternSize, OutputArray corners, int flags=CALIB_CB_ADAPTIVE_THRESH+CALIB_CB_NORMALIZE_IMAGE);

// Sub-pixel corner refinement

void cornerSubPix(InputArray image, InputOutputArray corners, Size winSize, Size zeroZone, TermCriteria criteria);

// Corner drawing

void drawChessboardCorners(InputOutputArray image, Size patternSize, InputArray corners, bool patternWasFound);

// Intrinsic and extrinsic parameter estimation

double cv::calibrateCamera(InputArrayOfArrays objectPoints,
    InputArrayOfArrays imagePoints,
    Size imageSize,
    InputOutputArray cameraMatrix,
    InputOutputArray distCoeffs,
    OutputArrayOfArrays rvecs,
    OutputArrayOfArrays tvecs,
    OutputArray stdDeviationsIntrinsics,
    OutputArray stdDeviationsExtrinsics,
    OutputArray perViewErrors,
    int flags = 0,
    TermCriteria criteria = TermCriteria(TermCriteria::COUNT+TermCriteria::EPS, 30, DBL_EPSILON));

// Distortion correction

void undistort(InputArray src, OutputArray dst, InputArray cameraMatrix, InputArray distCoeffs, InputArray newCameraMatrix=noArray());

void initUndistortRectifyMap(InputArray cameraMatrix, InputArray distCoeffs, InputArray R, InputArray newCameraMatrix, Size size, int m1type, OutputArray map1,OutputArray map2);

void remap(InputArray src, OutputArray dst, InputArray map1, InputArray map2, int interpolation, int borderMode=BORDER_CONSTANT, const Scalar& borderValue=Scalar());

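// Nine-point calibration (estimateRigidTransform was removed in OpenCV 4; estimateAffine2D and estimateAffinePartial2D replace it)

Mat estimateRigidTransform(InputArray src, InputArray dst, bool fullAffine);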

// Hand-eye calibration

void cv::calibrateHandEye(InputArrayOfArrays R_gripper2base,
    InputArrayOfArrays t_gripper2base,
    InputArrayOfArrays R_target2cam,
    InputArrayOfArrays t_target2cam,
    OutputArray R_cam2gripper,
    OutputArray t_cam2gripper,
    HandEyeCalibrationMethod method = CALIB_HAND_EYE_TSAI);

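Typical processing workflow:

- Select the channel to process: use the grayscale image for general processing and the H (hue) channel for color information

- Binarize, usually with adaptive thresholding, which generalizes better

- Apply morphological operations or filtering to remove interference

- Extract contours or edges, or fit polygons, then locate the target using its actual size and position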