k8s basics: hostPath vs. local volume (which requires creating an SC/PV/PVC), with examples

To quote the official explanation:

Both use local disks available on a machine. But! Imagine you have a cluster of three machines and have a Deployment with a replica of 1. If your pod is scheduled on node A, writes to a host path, then the pod is destroyed. At this point the scheduler will need to create a new pod, and this pod might be scheduled to node C which doesn't have the data. Oops!

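1. Put simply: when a Deployment uses hostPath, its Pods can be scheduled onto different nodes. Unless you pin the Pod to a node, a replacement Pod may land on a node that does not have the data written to hostPath earlier, because hostPath data lives on one specific node.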

Example:

```
(weops) [root@node201 volume]# cat deployment_hostpathvolume.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-hostpath-volume
  labels:
    project: k8s
    app: hostpath-volume
spec:
  replicas: 1
  selector:
    matchLabels:
      project: k8s
      app: hostpath-volume
  template:
    metadata:
      labels:
        project: k8s
        app: hostpath-volume
    spec:
      containers:
      - name: pod-hostpathvolume
        image: nginx
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: hostpath-dir-volume     # mount the hostPath directory
          mountPath: /usr/share/nginx/html
        - name: hostpath-file-volume    # mount a file inside the hostPath directory
          mountPath: /usr/share/test.conf
      - image: busybox
        name: busybox
        command: [ "sleep", "3600" ]
        volumeMounts:
        - name: hostpath-dir-volume
          mountPath: /tmp               # mount hostpath-dir-volume at /tmp in the busybox container
        - name: hostpath-file-volume
          mountPath: /tmp/test.conf
      volumes:
      - name: hostpath-dir-volume
        hostPath:
          # directory on the host
          path: /root/k8s/volume/hostpath_dir
          type: DirectoryOrCreate   # create the directory (including parent directories) if it does not exist
      - name: hostpath-file-volume
        hostPath:
          # file on the host
          path: /root/k8s/volume/hostpath_dir/test.conf
          type: FileOrCreate        # create the file if it does not exist; its parent directory must already exist
```
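Point 1 says a replacement Pod loses access to the data unless the Pod is pinned to a node. A minimal sketch of that pinning, assuming the data lives on node201 (the host used throughout this post) and that the node's `kubernetes.io/hostname` label equals its name, as it does by default: add a `nodeSelector` to the Pod template of the Deployment above. Only the added field is shown.

```yaml
# Partial Deployment spec: only the field that pins the Pods is shown.
# Assumption: the hostPath data was written on node201.
spec:
  template:
    spec:
      nodeSelector:
        kubernetes.io/hostname: node201   # every new or replacement Pod is scheduled onto node201
      # containers and volumes stay exactly as in deployment_hostpathvolume.yaml
```

The trade-off: if node201 goes down, the replacement Pod stays Pending instead of starting on another node without its data.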


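2. If you create a bare Pod instead, this problem does not arise while the Pod exists: a bare Pod is not managed by a controller, so it is never recreated on another node and stays on the node it was scheduled to until it is deleted. (If you delete it and create it again without pinning it to a node, it can still land on a different node.)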

Example:

```
(weops) [root@node201 volume]# cat hostpathvolume.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-hostpath-volume
spec:
  containers:
  - name: pod-hostpathvolume
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: hostpath-dir-volume     # mount the hostPath directory
      mountPath: /usr/share/nginx/html
    - name: hostpath-file-volume    # mount a file inside the hostPath directory
      mountPath: /usr/share/test.conf
  - image: busybox
    name: busybox
    command: [ "sleep", "3600" ]
    volumeMounts:
    - name: hostpath-dir-volume
      mountPath: /tmp               # mount hostpath-dir-volume at /tmp in the busybox container
    - name: hostpath-file-volume
      mountPath: /tmp/test.conf
  volumes:
  - name: hostpath-dir-volume
    hostPath:
      # directory on the host
      path: /root/k8s/volume/hostpath_dir
      type: DirectoryOrCreate   # create the directory (including parent directories) if it does not exist
  - name: hostpath-file-volume
    hostPath:
      # file on the host
      path: /root/k8s/volume/hostpath_dir/test.conf
      type: FileOrCreate        # create the file if it does not exist; its parent directory must already exist
```
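A bare Pod is safe from rescheduling only while it exists; if it is deleted and created again, the scheduler may place it on another node. A minimal sketch of pinning the Pod above, assuming the data is on node201 and that this is also the Node object's name: `spec.nodeName` binds the Pod directly to that node. Only the added field is shown.

```yaml
# Partial Pod spec: only the pinning field is shown.
# Assumption: the hostPath data lives on the node named node201.
apiVersion: v1
kind: Pod
metadata:
  name: pod-hostpath-volume
spec:
  nodeName: node201   # bind directly to node201; the scheduler is skipped
  # containers and volumes stay exactly as in hostpathvolume.yaml
```

`nodeName` bypasses the scheduler entirely; a `nodeSelector` on the `kubernetes.io/hostname` label achieves the same pinning while still going through the scheduler.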

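3. If a Deployment still needs to use data stored on a node's own disk (rather than on shared storage such as NFS), Kubernetes provides local volumes for exactly this scenario. When a Deployment uses a local volume, its Pods are scheduled onto the fixed node that holds the volume.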

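Before local volumes existed, StatefulSets could also use local SSDs by configuring hostPath and pinning the Pods to a specific node with nodeSelector or nodeAffinity. The problem with hostPath is that the administrator has to manage the directories on every node in the cluster by hand, which is inconvenient.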
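A sketch of that pre-local-volume approach, under stated assumptions: the StatefulSet name `hostpath-sts`, the image, the mount path, and the host directory `/mnt/ssd/data` are hypothetical, and the headless Service named by `serviceName` is assumed to exist (not shown). `type: Directory` makes the mount fail if the directory is missing on the node, which is exactly the per-node bookkeeping the administrator has to do by hand.

```yaml
# Hypothetical StatefulSet: hostPath for the data plus required nodeAffinity to pin the Pod.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: hostpath-sts            # hypothetical name
spec:
  serviceName: hostpath-sts     # assumed headless Service, not shown
  replicas: 1
  selector:
    matchLabels:
      app: hostpath-sts
  template:
    metadata:
      labels:
        app: hostpath-sts
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - node201       # the node whose local SSD holds the data
      containers:
      - name: app
        image: nginx
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
      volumes:
      - name: data
        hostPath:
          path: /mnt/ssd/data   # hypothetical path; must be created on node201 by the administrator
          type: Directory       # the directory must already exist, otherwise the mount fails
```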

The steps to create a local volume on host node201 and use it from a Deployment:

a. First, create a StorageClass

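```
(weops) [root@node201 localvolume]# cat storage_class.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner   # local volumes are not provisioned dynamically; PVs are created by hand
volumeBindingMode: WaitForFirstConsumer     # do not bind a PV to the PVC right away; wait until a Pod that uses the PVC is scheduled
```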

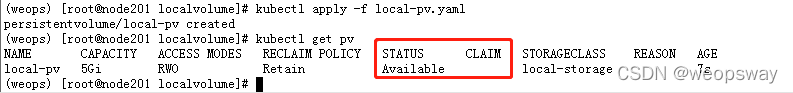

b. Create the PV

The PV points to a local path on node201 and uses the local-storage StorageClass created above.

```
(weops) [root@node201 localvolume]# cat local-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: local-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage   # the StorageClass created above
  local:
    path: /root/k8s/volume/localvolume/data   # local directory on node201; must already exist
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node201                 # the node that holds this local volume
```

c. Create the PVC

```
(weops) [root@node201 localvolume]# cat local-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: local-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-storage   # the StorageClass created above
```

d. Configure the Deployment to use the PVC:

```
(weops) [root@node201 localvolume]# cat local-pvc-bind-pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-volumen-deployment
  labels:
    app: local-volume-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: local-volume-deployment
  template:
    metadata:
      labels:
        app: local-volume-deployment
    spec:
      containers:
      - name: local-volume-container
        image: busybox
        imagePullPolicy: IfNotPresent
        command:
        - "/bin/sh"
        args:
        - "-c"
        - "sleep 100000"
        volumeMounts:
        - name: local-data
          mountPath: /usr/test-pod
      volumes:
      - name: local-data
        persistentVolumeClaim:
          claimName: local-pvc
```

Notes:

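Deleting the Deployment does not unbind the PVC from the PV: once the first Pod has been scheduled, the PVC stays bound to that PV.

You can simply create the Deployment again and keep using the same volume.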

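After the PVC is deleted, the PV stays in the Released state and cannot be reused; an administrator has to delete and recreate the PV manually before it can be used again. From the Kubernetes documentation: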

When local volumes are manually created like this, the only supported persistentVolumeReclaimPolicy is “Retain”. When the PersistentVolume is released from the PersistentVolumeClaim, an administrator must manually clean up and set up the local volume again for reuse.