HBase High-Availability Cluster Setup Manual

HBase Cluster Deployment Manual

Author: lizhonglin

github: https://github.com/Leezhonglin/

blog: https://leezhonglin.github.io/

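This tutorial covers building a ZooKeeper cluster, a Hadoop cluster and an HBase cluster, and then using them with the OpenTSDB time-series database. It is a complete walkthrough that also shares lessons learned along the way.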

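1. Cluster Plan

The cluster has five nodes in total: one master node (master1), one backup master node (master2) and three slave nodes (slave1, slave2, slave3).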

2. Prerequisites

2.1 Software List

| Software | Package file |
|---|---|
| JDK | jdk-8u211-linux-x64.tar.gz |
| Hadoop | hadoop-3.1.4.tar.gz |
| HBase | hbase-2.2.6-bin.tar.gz |
| ZooKeeper | apache-zookeeper-3.7.0-bin.tar.gz |
| OpenTSDB | opentsdb2.4.0.zip |
| Docker | docker.rar |
| nginx | nginx.tar.gz |

These are the packages used to deploy the cluster.

3. Base Environment Setup

3.1 Disable the Firewall

```shell
systemctl stop firewalld
systemctl disable firewalld
systemctl status firewalld
```

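3.2 Set the Hostname

In /etc/sysconfig/network, change the existing HOSTNAME=localhost.localdomain to HOSTNAME=master1. Make the corresponding change on every other host.

Then edit /etc/hostname so that it contains the host's name. On CentOS 7/8, `hostnamectl set-hostname master1` writes this file in one step. The hostnames are:

| Host IP | Hostname |
|---|---|
| 192.168.21.104 | master1 |
| 192.168.21.105 | master2 |
| 192.168.21.106 | slave1 |
| 192.168.21.107 | slave2 |
| 192.168.21.108 | slave3 |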

3.3 Add Host Entries

Edit /etc/hosts on every node and add the following:

```
192.168.21.104 master1
192.168.21.105 master2
192.168.21.106 slave1
192.168.21.107 slave2
192.168.21.108 slave3
```
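The same entries must be added on all five nodes, so a small helper can make the edit repeatable. This is a minimal sketch and not part of the original procedure. `add_host_entry` and the `HOSTS_FILE` override are hypothetical names. The function appends an `IP hostname` line only when that exact mapping is not already present, so running it twice is harmless.

```shell
#!/bin/sh
# Hypothetical helper: add an "IP hostname" line to a hosts file only if
# that exact mapping is not already there, so re-running it is harmless.
# HOSTS_FILE (hypothetical override) defaults to /etc/hosts.

add_host_entry() {
  ip="$1"
  name="$2"
  file="${HOSTS_FILE:-/etc/hosts}"
  # awk exits 0 when some line maps this IP to this name, 1 otherwise
  if ! awk -v ip="$ip" -v n="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On each node, call it once per row of the table, for example `add_host_entry 192.168.21.104 master1`. To check the result before touching /etc/hosts, first point `HOSTS_FILE` at a scratch copy.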

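3.4 Passwordless SSH Between Nodes

3.4.1 Enable Key-Based Login

Edit /etc/ssh/sshd_config and set the following options:

```
# SSH protocol 1 option; current OpenSSH ignores it with a deprecation warning
RSAAuthentication yes
PubkeyAuthentication yes
# Allow root to log in over SSH; set this to no to forbid remote root login
PermitRootLogin yes
```

Afterwards, restart sshd (`systemctl restart sshd`) so the changes take effect.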

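3.4.2 Generate Key Pairs

How it works (simplified):

1. Generate a key pair on serverA with ssh-keygen.
2. Append the public key to ~/.ssh/authorized_keys on serverB.
3. When serverA connects to serverB as some user, serverB checks whether the public key that serverA offers is listed in that user's authorized_keys.
4. If it is, serverA proves that it holds the matching private key by signing data bound to the session.
5. serverB verifies the signature with the stored public key. If verification succeeds, serverB allows the login without a password.

On each of master1, master2, slave1, slave2 and slave3, generate a key pair:

```shell
ssh-keygen -t rsa
```

Press Enter at every prompt to accept the defaults. This creates the key pair (id_rsa and id_rsa.pub) in /root/.ssh.

Then run the following commands on master1. When they finish, /root/.ssh/authorized_keys on master1 holds the RSA public keys of all hosts.

Run the commands one line at a time. For each remote host, type yes and press Enter to accept its host key, then enter that server's password.

```shell
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
ssh root@master2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
ssh root@slave1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
ssh root@slave2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
ssh root@slave3 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
```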

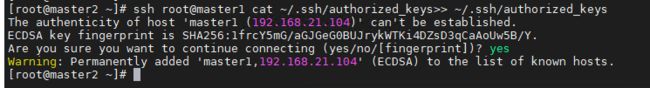

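3.4.3 Run on the Other Nodes

On each of master2, slave1, slave2 and slave3, run:

```shell
ssh root@master1 cat ~/.ssh/authorized_keys >> ~/.ssh/authorized_keys
```

This copies the authorized_keys file from master1 to the local node.

The result after running it is shown below.

Every host can now log in to every other host without a password.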
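Before moving on, you can confirm the key exchange worked by having each node attempt a non-interactive login to every node. This is a sketch under stated assumptions: the function name and the `NODES`/`DRY_RUN` variables are hypothetical. `-o BatchMode=yes` makes ssh fail instead of prompting. Any host that still asks for a password, or whose host key has not been accepted yet, is therefore reported as FAIL.

```shell
#!/bin/sh
# Hypothetical helper: try a non-interactive login to every node.
# BatchMode=yes makes ssh fail instead of asking for a password or for
# confirmation of an unknown host key.
# NODES and DRY_RUN are hypothetical overrides; DRY_RUN=1 only prints commands.

check_passwordless_ssh() {
  failed=0
  for host in ${NODES:-master1 master2 slave1 slave2 slave3}; do
    if [ -n "$DRY_RUN" ]; then
      echo "ssh -o BatchMode=yes -o ConnectTimeout=5 root@$host true"
      continue
    fi
    # "true" runs remotely and exits 0, so success means login worked
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Run `check_passwordless_ssh` on each node. A non-zero return status means at least one host could not be reached without a password.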

3.5 Time Synchronization

Set the time zone:

```shell
sudo timedatectl set-timezone Asia/Shanghai
```

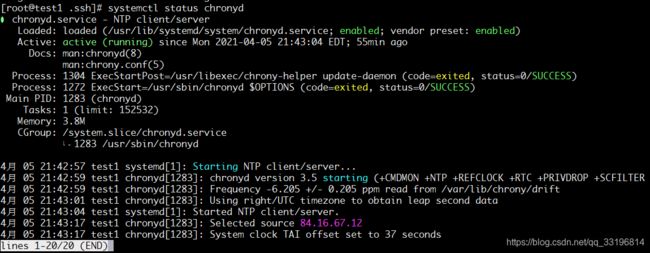

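Use chrony, the time synchronization tool that ships with CentOS 8. First check that it is present:

```shell
systemctl status chronyd
```

Server configuration

Edit /etc/chrony.conf. It already contains a pool line like the first line below. Add an allow line so that hosts on the cluster subnet may synchronize from this server:

```
pool 2.centos.pool.ntp.org iburst
allow 192.168.21.0/24
```

Client configuration

On each client, edit /etc/chrony.conf and point the pool line at the server (192.168.21.1 in this setup):

```
pool 192.168.21.1 iburst
```

On both the server and the clients, run:

```shell
systemctl start chronyd
systemctl enable chronyd
systemctl status chronyd
```

Show the current time sources:

```shell
chronyc sources -v
```

Show per-source synchronization statistics:

```shell
chronyc sourcestats -v
```

Force an immediate clock correction (manual sync):

```shell
chronyc -a makestep
```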

4. Cluster Setup

4.1 Create Base Directories

```shell
mkdir -p /home/work
mkdir -p /home/hbase
mkdir -p /home/javajdk
```

4.2 Install the JDK

4.2.1 Configure Environment Variables

Edit /etc/profile and add:

```shell
#JAVA_HOME
export JAVA_HOME=/home/javajdk/jdk1.8.0_211
export PATH=$PATH:$JAVA_HOME/bin
```

4.2.2 Extract the Archive

Extract the JDK 8 package listed in the software list into /home/javajdk:

```shell
tar -zxvf jdk-8u211-linux-x64.tar.gz -C /home/javajdk
```

4.2.3 Apply the Configuration

```shell
source /etc/profile
```

Repeat the same steps on the other machines.

4.2.4 Copy to the Other Machines

```shell
scp -r /home/javajdk/jdk1.8.0_211 root@master2:/home/javajdk
scp -r /home/javajdk/jdk1.8.0_211 root@slave1:/home/javajdk
scp -r /home/javajdk/jdk1.8.0_211 root@slave2:/home/javajdk
scp -r /home/javajdk/jdk1.8.0_211 root@slave3:/home/javajdk
```
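The same `scp -r` pattern repeats below for ZooKeeper, Hadoop, HBase and OpenTSDB. A minimal helper that loops over the target hosts might look like the sketch below. `distribute`, `TARGETS` and `DRY_RUN` are hypothetical names, and the command it runs is exactly the `scp -r` used above. The remote parent directory (here /home/javajdk) must already exist on each target. Otherwise scp creates it as a copy of the source directory under the parent's name.

```shell
#!/bin/sh
# Hypothetical helper: copy a file or directory from this node to the other
# nodes with the same scp -r command used in this manual.
# TARGETS and DRY_RUN are hypothetical overrides; DRY_RUN=1 only prints commands.

distribute() {
  src="$1"        # local path, e.g. /home/javajdk/jdk1.8.0_211
  dest_dir="$2"   # existing remote parent directory, e.g. /home/javajdk
  for host in ${TARGETS:-master2 slave1 slave2 slave3}; do
    if [ -n "$DRY_RUN" ]; then
      echo "scp -r $src root@$host:$dest_dir"
    else
      # Stop at the first failed copy so the problem is easy to spot
      scp -r "$src" "root@$host:$dest_dir" || return 1
    fi
  done
}
```

For example, `distribute /home/javajdk/jdk1.8.0_211 /home/javajdk` copies the JDK to the four other nodes. With `DRY_RUN=1` set, it only prints the four scp commands.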

4.3 Install the ZooKeeper Cluster

4.3.1 Extract the Package

Extract the apache-zookeeper-3.7.0-bin.tar.gz package:

```shell
tar -zvxf apache-zookeeper-3.7.0-bin.tar.gz -C /home/work
```

4.3.2 Edit the Configuration

In /home/work/apache-zookeeper-3.7.0-bin/conf/, create the file zoo.cfg:

```
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/home/work/zkdata
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1

## Metrics Providers
#
# https://prometheus.io Metrics Exporter
#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider
#metricsProvider.httpPort=7000
#metricsProvider.exportJvmInfo=true

server.1=master1:2888:3888
server.2=master2:2888:3888
server.3=slave1:2888:3888
server.4=slave2:2888:3888
server.5=slave3:2888:3888
```
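The server.N lines follow a simple pattern:

- N is the server's ID, which is later written to that host's myid file.
- 2888 is the port followers use to connect to the leader.
- 3888 is the port used for leader election.

With five servers, a majority (quorum) is three, so the ensemble keeps working with up to two nodes down. The sketch below generates these lines from an ordered host list. `gen_zk_servers` is a hypothetical helper, not a ZooKeeper tool.

```shell
#!/bin/sh
# Hypothetical helper: print zoo.cfg server.N lines for an ordered host list.
# N starts at 1 and must equal the myid written on that host;
# 2888 = follower-to-leader port, 3888 = leader-election port.

gen_zk_servers() {
  id=1
  for host in "$@"; do
    echo "server.${id}=${host}:2888:3888"
    id=$((id + 1))
  done
}
```

`gen_zk_servers master1 master2 slave1 slave2 slave3` prints the five server lines shown above, in the same order.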

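4.3.3 Distribute Files and Configuration

```shell
scp -r /home/work/apache-zookeeper-3.7.0-bin root@master2:/home/work
scp -r /home/work/apache-zookeeper-3.7.0-bin root@slave1:/home/work
scp -r /home/work/apache-zookeeper-3.7.0-bin root@slave2:/home/work
scp -r /home/work/apache-zookeeper-3.7.0-bin root@slave3:/home/work
```

4.3.4 Create the ZooKeeper Data Directory

On every node (master1, master2, slave1, slave2, slave3), create the directory:

```shell
mkdir -p /home/work/zkdata
```

In that directory, create a file named myid that contains the node's ID. The ID must match the N of that host's server.N line in zoo.cfg. For example, on master1 run `echo 1 > /home/work/zkdata/myid`. The IDs are:

| Host | myid |
|---|---|
| master1 | 1 |
| master2 | 2 |
| slave1 | 3 |
| slave2 | 4 |
| slave3 | 5 |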
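Each myid must agree with the server.N line for that host, so it can help to derive the value from the hostname instead of typing it by hand. This sketch assumes the hostnames from the host table above. `write_myid` and `ZK_DATA_DIR` are hypothetical names, and the default directory is the dataDir from zoo.cfg.

```shell
#!/bin/sh
# Hypothetical helper: write the ZooKeeper myid for a host, using the same
# order as the server.N lines in zoo.cfg (master1=1 ... slave3=5).
# ZK_DATA_DIR (hypothetical override) defaults to dataDir from zoo.cfg.

write_myid() {
  host="${1:-$(hostname)}"          # default: this machine's hostname
  dir="${ZK_DATA_DIR:-/home/work/zkdata}"
  case "$host" in
    master1) id=1 ;;
    master2) id=2 ;;
    slave1)  id=3 ;;
    slave2)  id=4 ;;
    slave3)  id=5 ;;
    *) echo "unknown host: $host" >&2; return 1 ;;
  esac
  mkdir -p "$dir" && echo "$id" > "$dir/myid"
}
```

Run `write_myid` on each node, where it defaults to the output of `hostname`. You can also pass the hostname explicitly, for example `write_myid slave1`.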

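4.3.5 Start the ZooKeeper Cluster

Run the start command on master1, master2, slave1, slave2 and slave3 in turn:

```shell
/home/work/apache-zookeeper-3.7.0-bin/bin/zkServer.sh start
```

4.3.6 Check the Status

```shell
/home/work/apache-zookeeper-3.7.0-bin/bin/zkServer.sh status
```

Once all five nodes are up, exactly one should report Mode: leader and the others Mode: follower.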
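Instead of logging in to each node, you can run the status check from master1 over the passwordless SSH set up earlier. The remote command sources /etc/profile first. A non-interactive SSH session usually does not load that file, and zkServer.sh needs JAVA_HOME or java on the PATH. `zk_mode` and `check_zk_cluster` are hypothetical helper names. `zk_mode` only extracts the value of the `Mode:` line that `zkServer.sh status` prints, and reports `down` when there is no such line.

```shell
#!/bin/sh
# Hypothetical helpers for checking every ZooKeeper node from master1.
ZK_BIN=/home/work/apache-zookeeper-3.7.0-bin/bin

# Reads `zkServer.sh status` output on stdin and prints the value of its
# "Mode:" line (leader, follower, ...), or "down" if there is no such line.
zk_mode() {
  mode=$(awk -F': ' '/^Mode:/ { print $2 }')
  echo "${mode:-down}"
}

# Runs the status command on each node over SSH; /etc/profile is sourced
# so that JAVA_HOME is set in the non-interactive remote shell.
check_zk_cluster() {
  for host in master1 master2 slave1 slave2 slave3; do
    status=$(ssh "root@$host" ". /etc/profile; $ZK_BIN/zkServer.sh status" 2>&1)
    echo "$host: $(printf '%s\n' "$status" | zk_mode)"
  done
}
```

Run `check_zk_cluster` on master1. In a healthy ensemble it prints one `leader` line and four `follower` lines.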

4.4 Install the Hadoop Cluster

4.4.1 Extract the Archive

```shell
tar -zxvf hadoop-3.1.4.tar.gz -C /home/hbase
```

4.4.2 Configure Hadoop

In /home/hbase/hadoop-3.1.4/etc/hadoop/hadoop-env.sh, set:

```shell
export JAVA_HOME=/home/javajdk/jdk1.8.0_211
```

Open /home/hbase/hadoop-3.1.4/etc/hadoop/yarn-env.sh and add the following below the YARN_CONF_DIR setting:

```shell
export JAVA_HOME=/home/javajdk/jdk1.8.0_211
```

Open /home/hbase/hadoop-3.1.4/etc/hadoop/core-site.xml and change the configuration to:

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hbase/hadoop-3.1.4/temp</value>
  </property>
</configuration>
```

Configure /home/hbase/hadoop-3.1.4/etc/hadoop/hdfs-site.xml as follows:

```xml
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/home/hbase/hadoop-3.1.4/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/home/hbase/hadoop-3.1.4/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>master1:9001</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
```

These are the HDFS settings.

Configure /home/hbase/hadoop-3.1.4/etc/hadoop/mapred-site.xml as follows:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master1:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master1:19888</value>
  </property>
</configuration>
```

Configure /home/hbase/hadoop-3.1.4/etc/hadoop/yarn-site.xml as follows. The shuffle class property name includes the service name `mapreduce_shuffle`, with an underscore.

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master1:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master1:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master1:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master1:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master1:8088</value>
  </property>
</configuration>
```

The /home/hbase/hadoop-3.1.4/etc/hadoop/workers file lists the worker (slave) nodes of the Hadoop cluster:

```
master2
slave1
slave2
slave3
```

4.4.3 Distribute the Installation

```shell
scp -r /home/hbase/hadoop-3.1.4 root@master2:/home/hbase
scp -r /home/hbase/hadoop-3.1.4 root@slave1:/home/hbase
scp -r /home/hbase/hadoop-3.1.4 root@slave2:/home/hbase
scp -r /home/hbase/hadoop-3.1.4 root@slave3:/home/hbase
```

4.4.4 Configure Hadoop Environment Variables

On every node (master1, master2, slave1, slave2, slave3), add the following to /etc/profile:

```shell
export JAVA_HOME=/home/javajdk/jdk1.8.0_211
export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export PATH=$PATH:$JAVA_HOME/bin
export HADOOP_HOME=/home/hbase/hadoop-3.1.4
export PATH=$PATH:$HADOOP_HOME/sbin
export PATH=$PATH:$HADOOP_HOME/bin
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
```

Then run `source /etc/profile` to apply it.

4.4.5 Start Hadoop

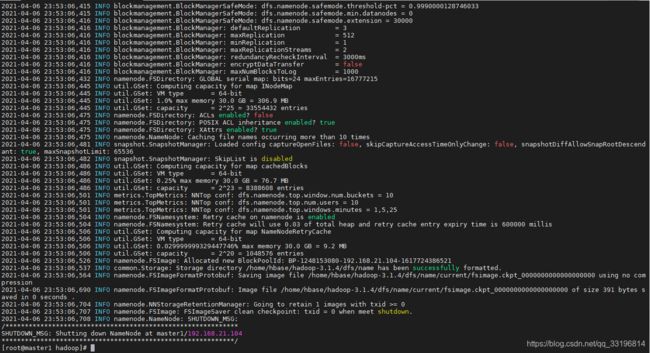

On master1, format HDFS. Do this only once, before the first start:

```shell
/home/hbase/hadoop-3.1.4/bin/hdfs namenode -format
```

The format output is shown in the figure.
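Formatting is a one-time step. Running it again generates a new clusterID for the NameNode. Existing DataNodes then refuse to join and log an "Incompatible clusterIDs" error. A defensive wrapper like the sketch below checks for existing metadata first. `safe_format_namenode` and `DRY_RUN` are hypothetical names. `NAME_DIR` mirrors dfs.namenode.name.dir from hdfs-site.xml, whose current/VERSION file exists once the directory has been formatted.

```shell
#!/bin/sh
# Hypothetical guard: refuse to format HDFS if the NameNode directory
# already holds metadata (re-formatting assigns a new clusterID).
# NAME_DIR mirrors dfs.namenode.name.dir; DRY_RUN=1 only prints the command.
NAME_DIR="${NAME_DIR:-/home/hbase/hadoop-3.1.4/dfs/name}"
HDFS_BIN=/home/hbase/hadoop-3.1.4/bin/hdfs

safe_format_namenode() {
  if [ -f "$NAME_DIR/current/VERSION" ]; then
    echo "refusing: $NAME_DIR is already formatted" >&2
    return 1
  fi
  if [ -n "$DRY_RUN" ]; then
    echo "$HDFS_BIN namenode -format"
  else
    "$HDFS_BIN" namenode -format
  fi
}
```

On master1, call `safe_format_namenode` instead of invoking `hdfs namenode -format` directly.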

Edit four scripts in /home/hbase/hadoop-3.1.4/sbin so that the daemons can be started as root.

In start-dfs.sh and stop-dfs.sh, add the following variables:

```shell
HDFS_DATANODE_USER=root
HDFS_DATANODE_SECURE_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```

In start-yarn.sh and stop-yarn.sh, add the following variables:

```shell
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```

On master1, start Hadoop:

```shell
/home/hbase/hadoop-3.1.4/sbin/start-all.sh
```
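After start-all.sh returns, running `jps` on each node shows which daemons actually came up. With the configuration above, the expected daemons are:

- master1: NameNode, SecondaryNameNode and ResourceManager.
- Each host in the workers file: DataNode and NodeManager.

The helper below is a hypothetical sketch. It prints any expected daemon that is missing from a captured `jps` listing.

```shell
#!/bin/sh
# Hypothetical helper: print each expected daemon name that does not
# appear in a captured `jps` listing ("<pid> <MainClass>" per line).
# $1 = jps output, remaining arguments = expected class names.

missing_daemons() {
  jps_out="$1"
  shift
  for d in "$@"; do
    # Match the class name column exactly, not as a substring
    printf '%s\n' "$jps_out" \
      | awk -v d="$d" '$2 == d { f = 1 } END { exit !f }' \
      || echo "$d"
  done
}
```

On master1, run `missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager`. On master2 and the slaves, run `missing_daemons "$(jps)" DataNode NodeManager`. Empty output means every listed daemon is running. Otherwise, check the logs under $HADOOP_HOME/logs.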

4.5 Deploy the HBase Cluster

4.5.1 Extract the Archive

Extract hbase-2.2.6-bin.tar.gz:

```shell
tar -zxvf hbase-2.2.6-bin.tar.gz -C /home/hbase
```

4.5.2 Edit the Configuration

Open hbase-2.2.6/conf/hbase-site.xml and change the configuration to:

```xml
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master1:9000/hbase</value>
    <description>The directory shared by region servers.</description>
  </property>
  <property>
    <name>hbase.hregion.max.filesize</name>
    <value>1073741824</value>
    <description>
      Maximum HStoreFile size. If any one of a column families' HStoreFiles has
      grown to exceed this value, the hosting HRegion is split in two.
      Set to 1 GB here; the HBase 2.x default is 10 GB (10737418240).
    </description>
  </property>
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.flush.size</name>
    <value>1073741824</value>
    <description>
      Memstore will be flushed to disk if size of the memstore
      exceeds this number of bytes. Value is checked by a thread that runs
      every hbase.server.thread.wakefrequency.
    </description>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
    <description>The mode the cluster will be in. Possible values are
      false: standalone and pseudo-distributed setups with managed Zookeeper
      true: fully-distributed with unmanaged Zookeeper Quorum (see hbase-env.sh)
    </description>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
    <description>Property from ZooKeeper's config zoo.cfg.
      The port at which the clients will connect.
    </description>
  </property>
  <property>
    <name>zookeeper.session.timeout</name>
    <value>120000</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.tickTime</name>
    <value>6000</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master1,master2,slave1,slave2,slave3</value>
    <description>Comma separated list of servers in the ZooKeeper Quorum.
      For example, "host1.mydomain.com,host2.mydomain.com,host3.mydomain.com".
      By default this is set to localhost for local and pseudo-distributed modes
      of operation. For a fully-distributed setup, this should be set to a full
      list of ZooKeeper quorum servers. If HBASE_MANAGES_ZK is set in hbase-env.sh
      this is the list of servers which we will start/stop ZooKeeper on.
    </description>
  </property>
</configuration>
```

These settings point HBase at the ZooKeeper ensemble it depends on. The ZooKeeper servers cap every session at maxSessionTimeout, which defaults to 20 × tickTime (40 seconds with tickTime=2000 from zoo.cfg). The 120000 ms zookeeper.session.timeout requested here therefore only takes full effect if maxSessionTimeout is also raised in zoo.cfg.

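Edit hbase-2.2.6/conf/hbase-env.sh and uncomment the first line below by removing its leading #. Since ZooKeeper runs as its own ensemble here, also set HBASE_MANAGES_ZK to false:

```shell
export HBASE_DISABLE_HADOOP_CLASSPATH_LOOKUP="true"
# Use the external ZooKeeper ensemble instead of letting HBase start its own
export HBASE_MANAGES_ZK=false
```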

4.5.3 Configure regionservers

Open hbase-2.2.6/conf/regionservers and list the region server hosts:

```
master1
master2
slave1
slave2
slave3
```

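4.5.4 Set JAVA_HOME for HBase

Open hbase-2.2.6/conf/hbase-env.sh and find the line export JAVA_HOME=/usr/java/jdk1.6.0/. Change it to the path of the installed JDK, uncommenting it if necessary:

```shell
export JAVA_HOME=/home/javajdk/jdk1.8.0_211
```

This completes the HBase cluster configuration.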

4.5.5 Configure the HBase Backup Master

Create /home/hbase/hbase-2.2.6/conf/backup-masters with the following content:

```
master2
```

4.5.6 Distribute HBase

```shell
scp -r /home/hbase/hbase-2.2.6 root@master2:/home/hbase
scp -r /home/hbase/hbase-2.2.6 root@slave1:/home/hbase
scp -r /home/hbase/hbase-2.2.6 root@slave2:/home/hbase
scp -r /home/hbase/hbase-2.2.6 root@slave3:/home/hbase
```

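4.5.7 Configure HBase Environment Variables

On all nodes (master1, master2, slave1, slave2, slave3), open /etc/profile and add:

```shell
export HBASE_HOME=/home/hbase/hbase-2.2.6
export PATH=$HBASE_HOME/bin:$PATH
```

These are the environment variables HBase needs at run time.

4.5.8 Apply the Environment Variables

Run:

```shell
source /etc/profile
```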

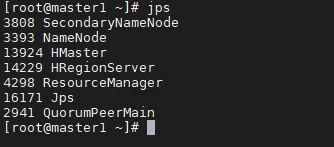

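4.5.9 Start HBase

On master1, run start-hbase.sh to start HBase. Because hbase/bin has been added to PATH, start-hbase.sh can be run from any directory. The startup output is shown in the figures below.

```shell
start-hbase.sh
```

Master node

Slave node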

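4.5.10 Verify That HBase Is Running

Run the following command to enter the HBase shell:

```shell
hbase shell
```

Inside the shell, the `status` command reports the active and backup masters and the number of live and dead region servers.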

5. Install OpenTSDB

5.1 Prepare the Packages

Dependency RPMs:

| Dependency | RPM file |
|---|---|
| cairo | cairo-1.15.12-3.el7.x86_64.rpm |
| fribidi | fribidi-1.0.2-1.el7.x86_64.rpm |
| gd | gd-2.0.35-26.el7.x86_64.rpm |
| gnuplot | gnuplot-4.6.2-3.el7.x86_64.rpm |
| gnuplot-common | gnuplot-common-4.6.2-3.el7.x86_64.rpm |
| graphite2 | graphite2-1.3.10-1.el7_3.x86_64.rpm |
| harfbuzz | harfbuzz-1.7.5-2.el7.x86_64.rpm |
| libglvnd | libglvnd-1.0.1-0.8.git5baa1e5.el7.x86_64.rpm |
| libglvnd-egl | libglvnd-egl-1.0.1-0.8.git5baa1e5.el7.x86_64.rpm |
| libglvnd-glx | libglvnd-glx-1.0.1-0.8.git5baa1e5.el7.x86_64.rpm |
| libthai | libthai-0.1.14-9.el7.x86_64.rpm |
| libwayland-client | libwayland-client-1.15.0-1.el7.x86_64.rpm |
| libwayland-server | libwayland-server-1.15.0-1.el7.x86_64.rpm |
| libXdamage | libXdamage-1.1.4-4.1.el7.x86_64.rpm |
| libXfixes | libXfixes-5.0.3-1.el7.x86_64.rpm |
| libXpm | libXpm-3.5.12-1.el7.x86_64.rpm |
| libxshmfence | libxshmfence-1.2-1.el7.x86_64.rpm |
| libXxf86vm | libXxf86vm-1.1.4-1.el7.x86_64.rpm |
| mesa-libEGL | mesa-libEGL-18.0.5-4.el7_6.x86_64.rpm |
| mesa-libgbm | mesa-libgbm-18.0.5-4.el7_6.x86_64.rpm |
| mesa-libGL | mesa-libGL-18.0.5-4.el7_6.x86_64.rpm |
| pango | pango-1.42.4-2.el7_6.x86_64.rpm |
| pixman | pixman-0.34.0-1.el7.x86_64.rpm |

OpenTSDB package:

| Software | RPM file |
|---|---|
| opentsdb | opentsdb-2.4.0.noarch.rpm |

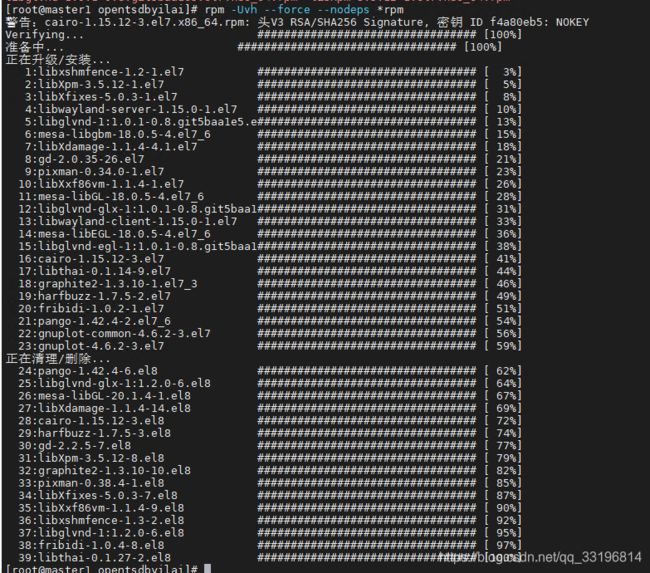

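5.1.1 Install Dependencies

Unzip opentsdb2.4.0.zip:

```shell
unzip opentsdb2.4.0.zip -d /home/packages/opentsdb2.4
```

Go to /home/packages/opentsdb2.4/opentsdbyilai, the dependency directory ("yilai" is Pinyin for "dependencies"), and install the dependencies:

```shell
cd /home/packages/opentsdb2.4/opentsdbyilai
rpm -Uvh --force --nodeps *rpm
```

The result looks like this: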

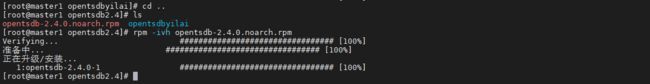

5.1.2 Install OpenTSDB

In /home/packages/opentsdb2.4, install OpenTSDB with:

```shell
cd /home/packages/opentsdb2.4
rpm -ivh opentsdb-2.4.0.noarch.rpm
```

Installation output:

5.1.3 Edit the Configuration File

Edit /usr/share/opentsdb/etc/opentsdb/opentsdb.conf (for example with vim) and add the following settings:

```
tsd.network.port = 4242
tsd.http.staticroot = /usr/share/opentsdb/static/
tsd.http.cachedir = /tmp/opentsdb
tsd.core.auto_create_metrics = true
tsd.storage.fix_duplicates=true
tsd.core.plugin_path = /usr/share/opentsdb/plugins
tsd.query.timeout = 270000
tsd.query.multi_get.batch_size=40000
tsd.query.multi_get.concurrent=40000
tsd.storage.enable_appends = true
tsd.http.request.enable_chunked = true
tsd.http.request.max_chunk = 104857600
# Path under which the znode for the -ROOT- region is located, default is "/hbase"
#tsd.storage.hbase.zk_basedir = /hbase
# A comma separated list of Zookeeper hosts to connect to, with or without
# port specifiers, default is "localhost"
tsd.storage.hbase.zk_quorum = master1,master2,slave1,slave2,slave3
```

5.1.4 Edit the Table Creation Script

In /usr/share/opentsdb/tools/create_table.sh, change this statement:

```
create '$TSDB_TABLE',
  {NAME => 't', VERSIONS => 1, COMPRESSION => '$COMPRESSION', BLOOMFILTER => '$BLOOMFILTER', DATA_BLOCK_ENCODING => '$DATA_BLOCK_ENCODING', TTL => '$TSDB_TTL'}
```

to the following, which removes the TTL attribute from the t column family:

```
create '$TSDB_TABLE',
  {NAME => 't', VERSIONS => 1, COMPRESSION => '$COMPRESSION', BLOOMFILTER => '$BLOOMFILTER', DATA_BLOCK_ENCODING => '$DATA_BLOCK_ENCODING'}
```

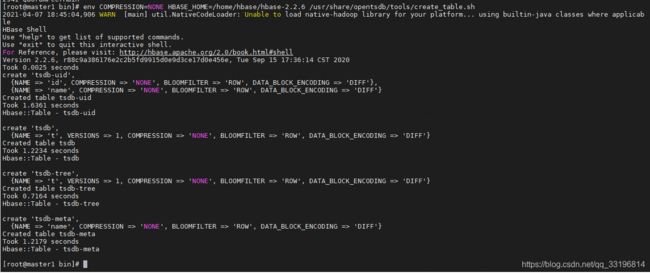

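5.1.5 Create the Tables

Once the changes above are in place, create the tables:

```shell
env COMPRESSION=NONE HBASE_HOME=/home/hbase/hbase-2.2.6 /usr/share/opentsdb/tools/create_table.sh
```

A successful run is shown below.

Note: table creation may fail with the following error:

```
ERROR: org.apache.hadoop.hbase.PleaseHoldException: Master is initializing
    at org.apache.hadoop.hbase.master.HMaster.checkInitialized(HMaster.java:2826)
    at org.apache.hadoop.hbase.master.HMaster.createTable(HMaster.java:2032)
    at org.apache.hadoop.hbase.master.MasterRpcServices.createTable(MasterRpcServices.java:659)
    at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
    at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:418)
    at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:133)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:338)
    at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:318)
For usage try 'help "create"'
```

Resolution

This exception means the HMaster has not finished initializing. If it persists, clear HBase's znode in ZooKeeper as follows.

Go to ZooKeeper's bin directory and start the CLI:

```shell
sh zkCli.sh
```

Then list the root znodes:

```
ls /
```

The output includes an hbase entry. Delete it with:

```
deleteall /hbase
```

Run ls / again to check that it has been deleted:

```
ls /
```

Once you have confirmed it is gone, leave the CLI with quit and restart HBase:

```shell
stop-hbase.sh
start-hbase.sh
```

If HBase will not restart cleanly, kill the HMaster and HRegionServer processes with kill -9 and start it again.

Then run the table creation command above again. The tables should now be created normally.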

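5.2 Start OpenTSDB

5.2.1 Set File Permissions

In /usr/share/opentsdb/bin/, make the tsdb script executable:

```shell
cd /usr/share/opentsdb/bin/
chmod a+x ./tsdb
```

5.2.2 Start

```shell
/usr/share/opentsdb/bin/tsdb tsd
```

5.2.3 Access URL

Open `http://<ip>:4242` in a browser, where `<ip>` is the address of the node running the TSD.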
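Besides the web UI, the TSD on port 4242 exposes an HTTP API. That API gives a quick end-to-end test that data can be written through to HBase. The sketch below builds one data point for the /api/put endpoint and posts it with curl. The metric name, tag and host are placeholders, and the helper names are hypothetical. OpenTSDB requires at least one tag per data point. The new metric name is accepted because opentsdb.conf sets tsd.core.auto_create_metrics = true.

```shell
#!/bin/sh
# Hypothetical helpers for a smoke test against the OpenTSDB HTTP API.

# Build one data point as JSON for /api/put.
# $1=metric  $2=Unix timestamp in seconds  $3=value  $4=tag key  $5=tag value
# (No JSON escaping is done, so keep names to letters, digits, . _ -)
tsdb_point_json() {
  printf '{"metric":"%s","timestamp":%s,"value":%s,"tags":{"%s":"%s"}}' \
    "$1" "$2" "$3" "$4" "$5"
}

# POST one data point. $1=host:port, remaining arguments as for tsdb_point_json.
# The ?details flag makes the TSD report how many points succeeded or failed.
tsdb_put() {
  target="$1"
  shift
  curl -s -X POST -H 'Content-Type: application/json' \
    -d "$(tsdb_point_json "$@")" "http://$target/api/put?details"
}
```

For example, run `tsdb_put 192.168.21.104:4242 sys.cpu.user "$(date +%s)" 42.5 host master1`. Then read the point back with `curl "http://192.168.21.104:4242/api/query?start=1h-ago&m=sum:sys.cpu.user"`.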

5.3 Configure a System Service

5.3.1 Add the systemd Unit

Create the file /usr/lib/systemd/system/opentsdb.service:

```ini
[Unit]
Description=OpenTSDB Service
After=network.target

[Service]
Type=forking
PrivateTmp=yes
ExecStart=/usr/share/opentsdb/etc/init.d/opentsdb start
ExecStop=/usr/share/opentsdb/etc/init.d/opentsdb stop
Restart=on-abort

[Install]
WantedBy=multi-user.target
```

After creating the unit file, run `systemctl daemon-reload` so that systemd picks it up. The service can then be managed with:

```shell
systemctl start opentsdb.service    # start
systemctl status opentsdb.service   # show status
systemctl enable opentsdb.service   # start at boot
systemctl stop opentsdb.service     # stop
```

5.4 Distribute to Other Nodes

To run OpenTSDB on several nodes, copy the files to each of them. First copy the service unit:

```shell
scp -r /usr/lib/systemd/system/opentsdb.service root@master2:/usr/lib/systemd/system/opentsdb.service
scp -r /usr/lib/systemd/system/opentsdb.service root@slave1:/usr/lib/systemd/system/opentsdb.service
scp -r /usr/lib/systemd/system/opentsdb.service root@slave2:/usr/lib/systemd/system/opentsdb.service
scp -r /usr/lib/systemd/system/opentsdb.service root@slave3:/usr/lib/systemd/system/opentsdb.service
```

Copy the OpenTSDB installation packages:

```shell
scp -r /home/packages/opentsdb2.4 root@master2:/home/opentsdb2.4
scp -r /home/packages/opentsdb2.4 root@slave1:/home/opentsdb2.4
scp -r /home/packages/opentsdb2.4 root@slave2:/home/opentsdb2.4
scp -r /home/packages/opentsdb2.4 root@slave3:/home/opentsdb2.4
```

On each of those servers, run:

```shell
cd /home/opentsdb2.4/opentsdbyilai/
rpm -Uvh --force --nodeps *rpm
cd /home/opentsdb2.4
rpm -ivh opentsdb-2.4.0.noarch.rpm
cd /usr/share/opentsdb/bin/
chmod a+x ./tsdb
```

Copy the configuration file from master1 to the other nodes:

```shell
scp -r /usr/share/opentsdb/etc/opentsdb/opentsdb.conf root@master2:/usr/share/opentsdb/etc/opentsdb/opentsdb.conf
scp -r /usr/share/opentsdb/etc/opentsdb/opentsdb.conf root@slave1:/usr/share/opentsdb/etc/opentsdb/opentsdb.conf
scp -r /usr/share/opentsdb/etc/opentsdb/opentsdb.conf root@slave2:/usr/share/opentsdb/etc/opentsdb/opentsdb.conf
scp -r /usr/share/opentsdb/etc/opentsdb/opentsdb.conf root@slave3:/usr/share/opentsdb/etc/opentsdb/opentsdb.conf
```

On each node, start the service and enable it at boot:

```shell
# Make systemd load the copied unit file
systemctl daemon-reload
systemctl start opentsdb.service
systemctl enable opentsdb.service
systemctl status opentsdb.service
```

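6. OpenTSDB Load Balancing

6.1 Install nginx

nginx is deployed with docker and docker-compose, so install docker first.

6.1.1 Install Docker

Extract docker.rar, which contains offline installers for docker and docker-compose. Extraction produces two directories, docker-compose and docker-ce-packages. Install docker first:

```shell
chmod a+x /home/packages/docker-ce-packages/install.sh
cd /home/packages/docker-ce-packages
sh install.sh
```

6.1.2 Install docker-compose

In the docker-compose directory, run:

```shell
chmod a+x /home/packages/docker-compose/install.sh
cd /home/packages/docker-compose
sh install.sh
```

6.2 Add the nginx Configuration

6.2.1 Create the Directory

```shell
mkdir -p /home/nginx
```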

6.2.2 Create the Configuration File

The nginx load-balancing configuration file is named default.conf. Create it in /home/nginx:

```nginx
upstream opentsdb {
    server 192.168.21.104:4242;
    server 192.168.21.105:4242;
    server 192.168.21.106:4242;
    server 192.168.21.107:4242;
    server 192.168.21.108:4242;
}

server {
    listen 80;
    server_name 127.0.0.1;

    location / {
        client_max_body_size 500m;
        proxy_connect_timeout 300s;
        proxy_send_timeout 300s;
        proxy_read_timeout 300s;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-NginX-Proxy true;
        proxy_pass http://opentsdb;
    }
}
```

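6.2.3 Create the Compose File

In /home/nginx, create the docker-compose file docker-compose.yml, which is the default file name that docker-compose up looks for:

```yaml
version: '3'
services:
  opentsdb_proxy:
    privileged: true
    image: nginx:latest
    restart: unless-stopped
    volumes:
      - $PWD/default.conf:/etc/nginx/conf.d/default.conf
    ports:
      - "8484:80"
    container_name: opentsdb_proxy
```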

6.2.4 启动nginx负载均衡器

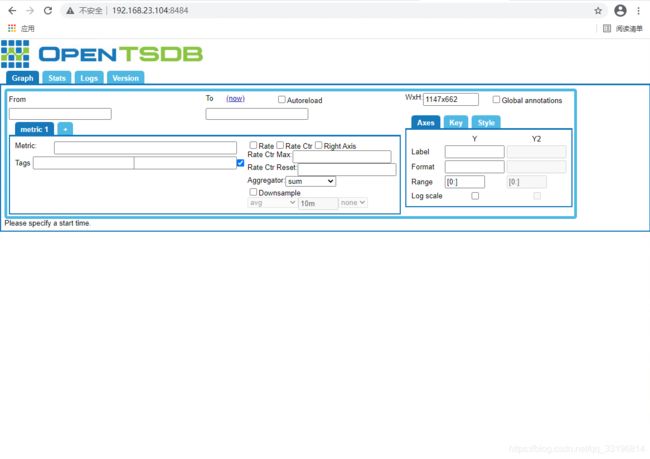

进入/home/nginx启动nginx负载均衡

docker-compose up -d

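6.3 Load Balancing Complete

With everything configured, OpenTSDB can be reached through the load balancer at

http://192.168.21.104:8484/

The result is shown below.

This completes the deployment of the whole cluster.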