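ES8 in Production: Log Cleaning and Filtering (the Vector Approach)

Introduction

What is Vector

The following description is taken from the official documentation: https://vector.dev/docs/about/what-is-vector/

Vector is a high-performance observability data pipeline that puts organizations in control of their observability data. Collect, transform, and route all your logs, metrics, and traces to any vendors you want today and any other vendors you may want tomorrow. Vector enables dramatic cost reduction, novel data enrichment, and data security where you need it, not where it is most convenient for your vendors. It is open source and up to 10x faster than every alternative.

In short, Logstash is written in Java and tends to be slow and resource-hungry when processing very large data volumes. Vector is written in Rust. It matches Logstash's data-processing capabilities while using very few resources. It also offers a simple configuration format, a powerful library of processing functions, automatic balancing of Kafka partition consumption, and adaptive request concurrency.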

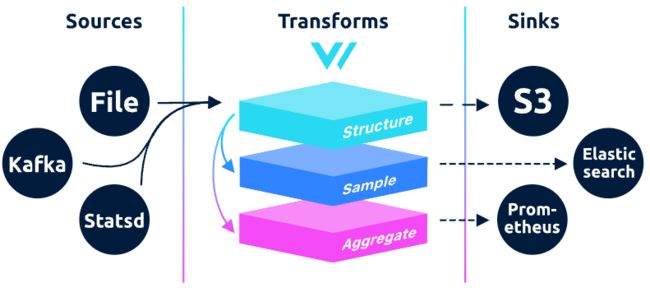

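Vector architecture diagram

Like a Logstash pipeline, Vector has three parts: data input (sources), data processing (transforms), and data output (sinks).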

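Advantages of Vector

- Ultra fast and reliable: Vector is built in Rust, is extremely fast and memory-efficient, and is designed to handle the most demanding workloads.
- End to end: Vector aims to be the only tool you need to get observability data from A to B. It can be deployed as a daemon, a sidecar, or an aggregator.
- Unified: Vector supports both logs and metrics, making it easy to collect and process all of your observability data.
- Vendor neutral: Vector does not favor any particular vendor platform and fosters a fair, open ecosystem with your best interests in mind. It is free of lock-in and future-proof.
- Programmable transforms: Vector's highly configurable transforms give you the full power of a programmable runtime, so you can handle complex use cases without limitation.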

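Benchmarks

Performance comparison

The table below compares the throughput of Vector with other log collectors. Vector beats Logstash on every test and performs well overall: FluentBit is faster only in the regex-parsing test, and Splunk UF is slightly faster only in TCP to TCP. Combined with its rich feature set, Vector fully covers our log-processing needs.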

| Test | Vector | Filebeat | FluentBit | FluentD | Logstash | Splunk UF | Splunk HF |
|---|---|---|---|---|---|---|---|
| TCP to Blackhole | 86 MiB/s | n/a | 64.4 MiB/s | 27.7 MiB/s | 40.6 MiB/s | n/a | n/a |
| File to TCP | 76.7 MiB/s | 7.8 MiB/s | 35 MiB/s | 26.1 MiB/s | 3.1 MiB/s | 40.1 MiB/s | 39 MiB/s |
| Regex Parsing | 13.2 MiB/s | n/a | 20.5 MiB/s | 2.6 MiB/s | 4.6 MiB/s | n/a | 7.8 MiB/s |
| TCP to HTTP | 26.7 MiB/s | n/a | 19.6 MiB/s | <1 MiB/s | 2.7 MiB/s | n/a | n/a |
| TCP to TCP | 69.9 MiB/s | 5 MiB/s | 67.1 MiB/s | 3.9 MiB/s | 10 MiB/s | 70.4 MiB/s | 7.6 MiB/s |

Reliability comparison

| Test | Vector | Filebeat | FluentBit | FluentD | Logstash | Splunk UF | Splunk HF |
|---|---|---|---|---|---|---|---|
| Disk Buffer Persistence | ✓ | ✓ | | | ⚠ | ✓ | ✓ |
| File Rotate (create) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| File Rotate (copytruncate) | ✓ | | | | | ✓ | ✓ |
| File Truncation | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Process (SIGHUP) | ✓ | | | | ⚠ | ✓ | ✓ |
| JSON (wrapped) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Feature comparison

| Feature | Vector | Beats | Fluentbit | Fluentd | Logstash | Splunk UF | Splunk HF | Telegraf |
|---|---|---|---|---|---|---|---|---|
| End-to-end | ✓ | ✓ | | | | | | |
| Agent | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Aggregator | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Unified | ✓ | ✓ | | | | | | |
| Logs | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Metrics | ✓ | ⚠ | ⚠ | ⚠ | ⚠ | ⚠ | ⚠ | ✓ |
| Open | ✓ | ✓ | ✓ | ✓ | | | | |
| Open-source | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | |
| Vendor-neutral | ✓ | ✓ | ✓ | ✓ | | | | |
| Reliability | ✓ | | | | | | | |
| Memory-safe | ✓ | ✓ | | | | | | |
| Delivery guarantees | ✓ | ✓ | ✓ | | | | | |
| Multi-core | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

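Quick start

Installation

Vector offers several installation methods, including native packages and Docker (downloads: https://vector.dev/download/). This example uses the RPM package.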

```shell
[root@tiaoban ~]# wget https://packages.timber.io/vector/0.34.0/vector-0.34.0-1.x86_64.rpm
[root@tiaoban ~]# rpm -ivh vector-0.34.0-1.x86_64.rpm
# enable the service at boot and start it now
[root@tiaoban ~]# systemctl enable --now vector
```

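Testing a configuration

As with Logstash, when debugging processing rules we usually feed in sample data, run it through the processing steps, and print the result to the console. The configuration below reads lines from standard input and writes each event to standard output as JSON: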

```shell
[root@tiaoban ~]# cd /etc/vector/
[root@tiaoban vector]# ls
examples vector.yaml vector.yaml.back
[root@tiaoban vector]# cat vector.yaml
sources:
  in:
    type: "stdin"
sinks:
  print:
    type: "console"
    inputs: ["in"]
    encoding:
      codec: "json"
[root@tiaoban vector]# vector -c vector.yaml
2023-11-12T13:40:06.995872Z INFO vector::app: Log level is enabled. level="vector=info,codec=info,vrl=info,file_source=info,tower_limit=info,rdkafka=info,buffers=info,lapin=info,kube=info"
2023-11-12T13:40:06.996328Z INFO vector::app: Loading configs. paths=["vector.yaml"]
2023-11-12T13:40:06.998137Z INFO vector::topology::running: Running healthchecks.
2023-11-12T13:40:06.998206Z INFO vector: Vector has started. debug="false" version="0.34.0" arch="x86_64" revision="c909b66 2023-11-07 15:07:26.748571656"
2023-11-12T13:40:06.998219Z INFO vector::app: API is disabled, enable by setting `api.enabled` to `true` and use commands like `vector top`.
2023-11-12T13:40:06.998590Z INFO vector::topology::builder: Healthcheck passed.
2023-11-12T13:40:06.998766Z INFO vector::sources::file_descriptors: Capturing stdin.
hello vector
{"host":"tiaoban","message":"hello vector","source_type":"stdin","timestamp":"2023-11-12T13:40:13.368669601Z"}
```

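When events start appearing on the console, Vector is collecting the data, converting it to JSON, and printing it, so the test succeeded. The following sections describe each part of the Vector configuration in detail.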
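This small Python sketch makes the event shape concrete. It mimics what the stdin source and the console sink's JSON codec produced in the run above: each line becomes an event with host, message, source_type and timestamp fields. It is an illustration only, not Vector's implementation. The function names are hypothetical, and the keys are sorted only because that matches the sample output.

```python
import json
import socket
from datetime import datetime, timezone
from typing import Optional


def stdin_line_to_event(line: str, host: Optional[str] = None) -> dict:
    """Wrap one input line in the fields seen in the stdin example output."""
    return {
        "host": host or socket.gethostname(),
        "message": line.rstrip("\n"),
        "source_type": "stdin",
        # ISO 8601 in UTC with a trailing "Z", like the sample timestamp
        "timestamp": datetime.now(timezone.utc).isoformat().replace("+00:00", "Z"),
    }


def console_json(event: dict) -> str:
    """Compact JSON with sorted keys, matching the key order of the sample output."""
    return json.dumps(event, ensure_ascii=False, sort_keys=True, separators=(",", ":"))


if __name__ == "__main__":
    print(console_json(stdin_line_to_event("hello vector\n", host="tiaoban")))
```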

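Multiple configuration files

When projects, environments and rules become numerous, split the configuration into several files as needed. Keep only global settings in the default configuration file: vector.yaml in the 0.34 package installed above (older releases used vector.toml).

Next, change how systemd launches Vector so that it loads every configuration file in a given directory instead of only the default file.

Change the ExecStart line (line 12) of the Vector systemd unit from `ExecStart=/usr/bin/vector` to `ExecStart=/usr/bin/vector "--config-dir" "/etc/vector"`: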

```ini
# /usr/lib/systemd/system/vector.service
[Unit]
Description=Vector
Documentation=https://vector.dev
After=network-online.target
Requires=network-online.target

[Service]
User=vector
Group=vector
ExecStartPre=/usr/bin/vector validate
ExecStart=/usr/bin/vector "--config-dir" "/etc/vector"
ExecReload=/usr/bin/vector validate
ExecReload=/bin/kill -HUP $MAINPID
Restart=always
AmbientCapabilities=CAP_NET_BIND_SERVICE
EnvironmentFile=-/etc/default/vector
# Since systemd 229, should be in [Unit] but in order to support systemd <229,
# it is also supported to have it here.
StartLimitInterval=10
StartLimitBurst=5

[Install]
WantedBy=multi-user.target
```

Set the configuration-directory environment variable by appending `VECTOR_CONFIG_DIR="/etc/vector/"` to the end of /etc/default/vector:

```shell
# /etc/default/vector
# This file can theoretically contain a bunch of environment variables
# for Vector. See https://vector.dev/docs/setup/configuration/#environment-variables
# for details.
VECTOR_CONFIG_DIR="/etc/vector/"
```

Reload the systemd configuration and restart Vector:

```shell
systemctl daemon-reload
systemctl restart vector
```

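Configuration file in detail

Configuration structure

As the architecture diagram shows, Vector consists of sources, transforms and sinks. Log data can flow into the pipeline from multiple sources, such as HTTP, Syslog, files or Kafka. Once the data is in the pipeline, it goes through a series of processing steps called transforms, for example adding or removing fields or reformatting the log. When the logs are in the desired shape, they are handed to a sink. The sink decides where the data finally goes: Elasticsearch, ClickHouse, AWS S3 and so on. The basic layout of a configuration file is: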

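```yaml
sources:                  # sources
  my_source_id:           # source name
    type: "***"           # source type
transforms:               # transforms
  my_transform_id:        # transform name
    type: remap           # transform type
    inputs:               # upstream components this transform reads from
      - my_source_id
sinks:                    # sinks
  my_sink_id:             # sink name
    type: "***"           # sink type
    inputs:               # upstream components this sink reads from
      - my_transform_id
```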
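Every transform and sink names its upstream components in `inputs`, and a typo there is one of the most common configuration mistakes (`vector validate` reports it). The Python sketch below shows the idea of that check on a configuration already loaded into a dict. It is a simplified illustration, not Vector's validator. It ignores wildcard inputs and named outputs such as `route_id.route_name`, and `check_inputs` is a hypothetical helper.

```python
from typing import List


def check_inputs(config: dict) -> List[str]:
    """Report transforms/sinks whose `inputs` are empty or name unknown components."""
    sources = config.get("sources", {})
    transforms = config.get("transforms", {})
    sinks = config.get("sinks", {})
    # Only sources and transforms can feed other components; sinks are endpoints.
    upstream_ids = set(sources) | set(transforms)
    problems: List[str] = []
    for section, components in (("transforms", transforms), ("sinks", sinks)):
        for cid, component in components.items():
            inputs = component.get("inputs") or []
            if not inputs:
                problems.append(f"{section}.{cid}: no inputs")
            for ref in inputs:
                if ref not in upstream_ids:
                    problems.append(f"{section}.{cid}: unknown input '{ref}'")
    return problems


if __name__ == "__main__":
    # The skeleton above after loading the YAML into a dict.
    skeleton = {
        "sources": {"my_source_id": {"type": "stdin"}},
        "transforms": {"my_transform_id": {"type": "remap", "inputs": ["my_source_id"]}},
        "sinks": {"my_sink_id": {"type": "console", "inputs": ["my_transform_id"]}},
    }
    print(check_inputs(skeleton) or "inputs look consistent")
```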

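Sources

Vector provides a wide range of sources, and the list keeps growing. Common ones include the console (stdin), demo data, files, HTTP and Kafka. See the official documentation for details: https://vector.dev/docs/reference/configuration/sources/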

- Console input

```yaml
sources:
  my_source_id:     # source name
    type: "stdin"
```

- Demo log data

```yaml
sources:
  my_source_id:               # source name
    type: "demo_logs"
    format: "apache_common"   # kind of demo data to generate
    count: 10                 # number of demo events
```

- File input

```yaml
sources:
  my_source_id:     # source name
    type: "file"
    include:        # paths to collect
      - /var/log/**/*.log
```

- HTTP input

```yaml
sources:
  my_source_id:              # source name
    type: "http_server"
    address: "0.0.0.0:80"    # listen address
```

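- Kafka input

```yaml
sources:
  my_source_id:                     # source name
    type: "kafka"
    bootstrap_servers: "10.14.22.123:9092,10.14.23.332:9092"   # Kafka brokers
    group_id: "consumer-group-name" # consumer group ID
    topics:                         # topics to consume; entries starting with ^ are regexes matching many topics
      - "^(prefix1|prefix2)-.+"
    decoding:                       # how to decode message payloads
      codec: "json"
    auto_offset_reset: "latest"     # where to start when the group has no committed offset
```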
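Before deploying, it helps to check which topic names the `topics` regex will pick up. Here is a quick Python check using the same pattern. It assumes that, for a simple pattern like this one, Python's `re` and the Kafka client's regex dialect agree; the dialects can differ on more advanced syntax. `matching_topics` is a hypothetical helper.

```python
import re
from typing import Iterable, List

# Same pattern as the `topics` entry above.
TOPIC_PATTERN = re.compile(r"^(prefix1|prefix2)-.+")


def matching_topics(topics: Iterable[str]) -> List[str]:
    """Return the topic names the subscription pattern would match."""
    return [t for t in topics if TOPIC_PATTERN.match(t)]


if __name__ == "__main__":
    print(matching_topics(["prefix1-nginx", "prefix2-app", "prefix3-db"]))
```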

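Transforms: VRL

While moving data, Vector may need to parse, filter or add to it. Like Logstash's filter plugins, Vector provides many transforms for processing logs. More transforms: https://vector.dev/docs/reference/configuration/transforms/

Vector recommends the remap transform. It processes events with VRL (Vector Remap Language) and offers a rich set of ready-to-use functions. Online playground: https://playground.vrl.dev/; VRL quick-start documentation: https://vector.dev/docs/reference/vrl/

Some common transform examples follow.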

- Adding, deleting and renaming fields

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  my_transform:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_json!(.message)   # parse the JSON payload
      .@timestamp = now()         # add a field
      del(.user)                  # delete a field
      .age = del(.value)          # rename a field
sinks:
  my_print:
    type: "console"
    inputs: ["my_transform"]      # read from the transform
    encoding:
      codec: "json"

# Result (first line typed on stdin, second line printed by Vector)
{"hello":"world","user":"张三","value":18}
{"@timestamp":"2023-11-19T03:02:07.060420199Z","age":18,"hello":"world"}
```
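When debugging a VRL program, it can help to trace the same steps in a familiar language. This Python sketch mirrors the four VRL lines above one-to-one. It illustrates the semantics only; it is not how Vector runs VRL, and `remap_fields` is a hypothetical name. Note that `del(.value)` returns the deleted value (null if the field was absent), which is what makes the rename idiom work.

```python
import json
from datetime import datetime, timezone


def remap_fields(message: str) -> dict:
    """Python mirror of the VRL program above (illustration only)."""
    event = json.loads(message)                                    # . = parse_json!(.message)
    event["@timestamp"] = datetime.now(timezone.utc).isoformat()   # .@timestamp = now()
    event.pop("user", None)                                        # del(.user)
    event["age"] = event.pop("value", None)                        # .age = del(.value)
    return event


if __name__ == "__main__":
    print(remap_fields('{"hello":"world","user":"张三","value":18}'))
```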

- Operating on field values

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  my_transform:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_json!(.message)                      # parse the JSON payload
      .msg = downcase(string!(.msg))                 # convert to lowercase
      .user = replace(string!(.user), "三", "四")     # replace part of a string
      .value = floor(float!(.value), precision: 2)   # keep 2 decimal places, rounding down
sinks:
  my_print:
    type: "console"
    inputs: ["my_transform"]      # read from the transform
    encoding:
      codec: "json"

# Result (first line typed on stdin, second line printed by Vector)
{"msg":"Hello, World!","user":"张三","value":3.1415926}
{"msg":"hello, world!","user":"张四","value":3.14}
```
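Here is a Python sketch of the same three operations, useful for checking expected results. It makes two points explicit, based on the VRL documentation's description of these functions. First, `string!` and `float!` assert the type rather than convert it: an integer such as 18 would make `float!` fail, whereas VRL's `to_float` converts. Second, `floor` with a precision rounds down, not to the nearest value. The sketch uses `Decimal` to avoid binary floating-point surprises. All names are hypothetical.

```python
import json
from decimal import ROUND_FLOOR, Decimal


def require_str(value):
    """Like VRL string!(...): assert the type, do not convert."""
    if not isinstance(value, str):
        raise TypeError(f"expected string, got {type(value).__name__}")
    return value


def require_float(value):
    """Like VRL float!(...): an int such as 18 is rejected, not converted."""
    if not isinstance(value, float):
        raise TypeError(f"expected float, got {type(value).__name__}")
    return value


def floor_to(value: float, precision: int) -> float:
    """Round down to `precision` decimal places."""
    step = Decimal(1).scaleb(-precision)          # e.g. 0.01 for precision 2
    return float(Decimal(str(value)).quantize(step, rounding=ROUND_FLOOR))


def remap_values(message: str) -> dict:
    """Python mirror of the three VRL value operations above."""
    event = json.loads(message)
    event["msg"] = require_str(event["msg"]).lower()
    event["user"] = require_str(event["user"]).replace("三", "四")
    event["value"] = floor_to(require_float(event["value"]), 2)
    return event
```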

- Regex parsing

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  my_transform:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_regex!(.message, r'^\[(?<logtime>[^\]]*)\] (?<name>[^ ]*) (?<title>[^ ]*) (?<id>\d*)$')
sinks:
  my_print:
    type: "console"
    inputs: ["my_transform"]      # read from the transform
    encoding:
      codec: "json"

# Result (first line typed on stdin, second line printed by Vector)
[2023-11-11 12:00:00 +0800] alice engineer 1
{"id":"1","logtime":"2023-11-11 12:00:00 +0800","name":"alice","title":"engineer"}
```
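Regex patterns are easier to debug outside the pipeline first. The Python version below uses the same pattern with one change, the named-group syntax. Python's `re` only accepts `(?P<name>...)`, whereas the Rust regex engine behind VRL also accepts the `(?<name>...)` form used above. `parse_line` is a hypothetical helper.

```python
import re

# Same pattern as the VRL example, with Python's (?P<name>...) group syntax.
LINE_RE = re.compile(
    r"^\[(?P<logtime>[^\]]*)\] (?P<name>[^ ]*) (?P<title>[^ ]*) (?P<id>\d*)$"
)


def parse_line(line: str) -> dict:
    """Return the named captures, or raise (as parse_regex! errors) on no match."""
    match = LINE_RE.match(line)
    if match is None:
        raise ValueError(f"could not parse: {line!r}")
    return match.groupdict()
```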

- Time formatting

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  my_transform:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_regex!(.message, r'^\[(?<logtime>[^\]]*)\] (?<name>[^ ]*) (?<title>[^ ]*) (?<id>\d*)$')
      .logtime = parse_timestamp!(.logtime, format: "%Y-%m-%d %H:%M:%S %:z")
sinks:
  my_print:
    type: "console"
    inputs: ["my_transform"]      # read from the transform
    encoding:
      codec: "json"

# Result (first line typed on stdin, second line printed by Vector; the timestamp is rendered in UTC)
[2023-11-11 12:00:00 +0800] alice engineer 1
{"id":"1","logtime":"2023-11-11T04:00:00Z","name":"alice","title":"engineer"}
```
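The conversion in the output above (12:00 at +08:00 becoming 04:00 UTC) can be reproduced in Python, which is a handy way to double-check a format string. Python's `%z` accepts both `+0800` and `+08:00`. The function name is hypothetical.

```python
from datetime import datetime, timezone


def to_utc_iso(logtime: str) -> str:
    """Parse '%Y-%m-%d %H:%M:%S %z' and render it in UTC with a trailing Z."""
    parsed = datetime.strptime(logtime, "%Y-%m-%d %H:%M:%S %z")  # offset-aware
    return parsed.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
```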

- GeoIP lookup

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  my_transform:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_json!(.message)   # parse the JSON payload
      .geoip = get_enrichment_table_record!("geoip_table",
        {
          "ip": .ip_address
        })
sinks:
  my_print:
    type: "console"
    inputs: ["my_transform"]      # read from the transform
    encoding:
      codec: "json"
enrichment_tables:
  geoip_table:                    # GeoIP database file to use
    path: "/root/GeoLite2-City.mmdb"
    type: geoip

# Result (first line typed on stdin, second line printed by Vector)
{"ip_address":"185.14.47.131"}
{"geoip":{"city_name":"Hong Kong","continent_code":"AS","country_code":"HK","country_name":"Hong Kong","latitude":22.2842,"longitude":114.1759,"metro_code":null,"postal_code":null,"region_code":"HCW","region_name":"Central and Western District","timezone":"Asia/Hong_Kong"},"ip_address":"185.14.47.131"}
```
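Conceptually, the geoip enrichment table maps IP networks to location records. `get_enrichment_table_record!` fails when nothing matches, and the `!` turns that failure into a runtime error. The Python sketch below illustrates such a lookup with the standard `ipaddress` module. The table is entirely hypothetical: it uses documentation address ranges and made-up records. A real GeoLite2-City.mmdb is a binary MaxMind database queried through a dedicated reader.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical lookup table for illustration: documentation-only address
# ranges (RFC 5737) and made-up records. Not real GeoIP data.
GEO_TABLE: Dict[ipaddress.IPv4Network, dict] = {
    ipaddress.ip_network("192.0.2.0/24"): {"city_name": "Example City A", "country_code": "XA"},
    ipaddress.ip_network("198.51.100.0/24"): {"city_name": "Example City B", "country_code": "XB"},
    ipaddress.ip_network("198.51.100.128/25"): {"city_name": "Example City C", "country_code": "XB"},
}


def lookup(ip: str) -> Optional[dict]:
    """Return the record of the most specific network containing `ip`, if any."""
    addr = ipaddress.ip_address(ip)
    matches = [net for net in GEO_TABLE if addr in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return dict(GEO_TABLE[best])


def enrich(event: dict) -> dict:
    """Attach a `geoip` record, failing on no match like the `!` call does."""
    record = lookup(event["ip_address"])
    if record is None:
        raise LookupError(f"no geoip record for {event['ip_address']}")
    event["geoip"] = record
    return event
```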

Transforms: Lua

If VRL cannot meet your log-processing needs, Vector can also run embedded Lua, but this is nearly 60% slower than VRL. See https://vector.dev/docs/reference/configuration/transforms/lua/ for details. Lua is not covered further here; prefer VRL whenever possible.

Transforms: filtering

Often we do not need all of the data collected from a source. As its name suggests, the filter transform solves this problem, for example by dropping debug-level log entries.

```yaml
# Configuration
sources:
  my_source:                  # source name
    type: "stdin"
transforms:
  transform_json:
    type: remap
    inputs:                   # upstream sources
      - my_source
    source: |
      . = parse_json!(.message)   # parse the JSON payload
  transform_filter:
    type: filter
    inputs:
      - transform_json
    condition: |
      .level != "debug"
sinks:
  my_print:
    type: "console"
    inputs: ["transform_filter"]  # read from the filter
    encoding:
      codec: "json"

# Result: the debug event produces no output; the warning event (typed on the second line) is printed on the third
{"level":"debug","msg":"hello"}
{"level":"warning","msg":"hello"}
{"level":"warning","msg":"hello"}
```
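The filter passes through every event for which the condition evaluates to true. This Python sketch applies the same logic and shows one detail worth knowing: an event with no `level` field is kept. In VRL a missing field reads as null, and `null != "debug"` is true. The function names are hypothetical.

```python
import json
from typing import Iterable, Iterator


def keep(event: dict) -> bool:
    """Mirror of the condition `.level != "debug"`."""
    # A missing field is None here, like null in VRL, and None != "debug" is True.
    return event.get("level") != "debug"


def run_filter(lines: Iterable[str]) -> Iterator[str]:
    """Parse each JSON line and yield only the events the condition keeps."""
    for line in lines:
        event = json.loads(line)
        if keep(event):
            yield json.dumps(event, separators=(",", ":"))
```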

Sinks

A sink is the destination of events. Vector offers many sink types, some of which overlap with sources, such as Kafka and AWS S3. For the full list, see https://vector.dev/docs/reference/configuration/sinks/

- Console output

```yaml
sinks:
  print:
    type: "console"
    inputs: ["in"]
    encoding:
      codec: "json"   # output as JSON; can also be set to "text"
```

- File output

```yaml
sinks:
  my_sink_id:
    type: file
    inputs:
      - my-source-or-transform-id
    path: "/tmp/vector-%Y-%m-%d.log"
    encoding:
      codec: "text"
```
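The `%Y-%m-%d` placeholders in `path` are strftime specifiers, so the sink writes one file per day. The Python sketch below shows how such a template expands for a given event timestamp. It assumes the specifiers are filled from the event's timestamp and rendered in UTC. Newer Vector releases have a global `timezone` option that may affect this, so check the documentation for your version. `render_path` is a hypothetical helper.

```python
from datetime import datetime, timezone


def render_path(template: str, event_time: datetime) -> str:
    """Expand strftime specifiers in a path template (assumes UTC rendering)."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    return event_time.astimezone(timezone.utc).strftime(template)
```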

- Kafka output

```yaml
sinks:
  my_sink_id:
    type: kafka
    inputs:
      - my-source-or-transform-id
    bootstrap_servers: "10.14.22.123:9092,10.14.23.332:9092"
    topic: topic-1234
    encoding:
      codec: "json"   # the kafka sink requires an encoding codec
```

- Elasticsearch output

```yaml
sinks:
  my_es:
    type: elasticsearch
    inputs: ["my_transform"]          # upstream transform
    api_version: "v8"                 # Elasticsearch version; optional
    mode: "data_stream"               # write as a data stream
    auth:                             # Elasticsearch credentials
      strategy: "basic"
      user: "elastic"
      password: "WbZN3xfa5M4uy+UcxJeH"
    data_stream:                      # data stream naming
      type: "logs"
      dataset: "vector"
      namespace: "default"
    endpoints: ["https://192.168.10.50:9200"]   # Elasticsearch URL(s)
    tls:                              # TLS settings
      verify_certificate: false       # skip certificate verification
      # ca_file: "XXXXX"              # path to the CA certificate
```
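In data_stream mode, the target follows Elastic's data stream naming scheme, type-dataset-namespace, so the configuration above writes to `logs-vector-default`. Below is a small Python helper that composes the name. The hyphen check reflects Elastic's documented rule that dataset and namespace must not contain `-`. `data_stream_name` is a hypothetical helper.

```python
def data_stream_name(ds_type: str, dataset: str, namespace: str) -> str:
    """Compose an Elastic data stream name: <type>-<dataset>-<namespace>."""
    for label, part in (("dataset", dataset), ("namespace", namespace)):
        if "-" in part:
            # A hyphen here would make the name ambiguous to split back apart.
            raise ValueError(f"{label} must not contain '-': {part!r}")
    return f"{ds_type}-{dataset}-{namespace}"
```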

The data stream written to Elasticsearch looks like this:

![Vector data stream in Elasticsearch](http://img.e-com-net.com/image/info8/a5827c6a3cc04b86af3db19c51dbc9b3.jpg)

Global configuration

- Data directory

The directory where Vector persists its state, for example disk buffers and file checkpoints.

```yaml
data_dir: "/var/lib/vector"
```

- API

Vector exposes a set of API endpoints for health checks and status queries. First, enable the Vector API by adding the following to the configuration (for example vector.yaml):

```yaml
api:
  enabled: true
  address: "0.0.0.0:8686"
```

Restart Vector and query its health status:

```shell
$ curl localhost:8686/health
{"ok":true}
```
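For scripted health checks such as cron jobs or deployment hooks, the same endpoint can be queried with Python's standard library. This is a minimal sketch that assumes the API listens on localhost:8686 as configured above. It treats any connection or parsing error as unhealthy. The function names are hypothetical.

```python
import json
import urllib.request


def parse_health(body: bytes) -> bool:
    """True only for a JSON object like {"ok":true}."""
    data = json.loads(body)
    return isinstance(data, dict) and data.get("ok") is True


def vector_is_healthy(base_url: str = "http://localhost:8686", timeout: float = 2.0) -> bool:
    """Query GET /health; connection errors (OSError) and bad JSON count as unhealthy."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
            return resp.status == 200 and parse_health(resp.read())
    except (OSError, ValueError):
        return False
```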

With the API enabled, the `vector top` command shows performance information for each component:

![vector top output](http://img.e-com-net.com/image/info8/c3990f46428145249e816bf77c4fe716.jpg)

Monitoring

Metrics

- prometheus_remote_write

Send Vector's internal metrics to Prometheus to build more detailed dashboards and alerts. Add the following to the configuration; Vector then pushes its built-in metrics to the specified Prometheus via remote write. If the target is Prometheus itself, it must be started with `--web.enable-remote-write-receiver`, and its receiver path is `/api/v1/write`.

```yaml
sources:
  vector_metrics:
    type: internal_metrics
sinks:
  prometheus:
    type: prometheus_remote_write
    endpoint: "https://<prometheus_ip_address>:8087/"
    inputs:
      - vector_metrics
```

- prometheus_exporter

Besides remote write, Vector can also expose its metrics through an exporter endpoint for Prometheus to scrape. Configuration:

```yaml
sources:
  metrics:
    type: internal_metrics
    namespace: vector
    scrape_interval_secs: 30
sinks:
  prometheus:
    type: prometheus_exporter
    inputs:
      - metrics
    address: "0.0.0.0:9598"
    default_namespace: service
```

Then add a Prometheus scrape job that targets `http://IP:9598/metrics`.

Logs

Vector's own runtime logs can be written to a local file or to Elasticsearch. This example writes them to a local file:

```yaml
sources:
  logs:
    type: "internal_logs"
sinks:
  files:
    type: file
    inputs:
      - logs
    path: "/tmp/vector-%Y-%m-%d.log"
    encoding:
      codec: text
```

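<h2>Notable features</h2>

<h3>Automatic balancing of Kafka consumption</h3>

<p>When we consumed Kafka with Logstash, we had to size three things by hand according to each topic's volume: the number of Kafka partitions, the number of Logstash replicas, and the number of threads per Logstash instance. These numbers could only be tuned one at a time, by watching performance graphs and data volume. For example, with six Logstash machines, five were dedicated to the high-volume topics and the remaining one handled the low-volume topics, and the load across Logstash nodes was frequently unbalanced.<br> With Vector, every machine runs the same configuration. Thanks to Kafka consumer groups, all instances that share the same group_id consume the subscribed topics together. Kafka assigns each partition to exactly one member of the group and rebalances partitions automatically when instances join or leave. As a result, consumption is spread across the Vector instances without manual tuning.</p>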
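<p>The balancing comes from the consumer-group protocol, not from per-instance tuning. Each partition is owned by exactly one member of a group, and ownership is redistributed whenever a member joins or leaves. Below is a simplified round-robin assignor that shows how six partitions of <code>pod_logs</code> are redistributed when a third Vector replica joins. It is a sketch, not the Kafka client's exact algorithm: the real strategy depends on the client's <code>partition.assignment.strategy</code> setting. The partition count and member names are hypothetical.</p>

```python
def round_robin_assign(
    partitions: list[tuple[str, int]], members: list[str]
) -> dict[str, list[tuple[str, int]]]:
    """Hand out (topic, partition) pairs to group members one at a time.

    Simplified round-robin for illustration only.
    """
    if not members:
        raise ValueError("a consumer group needs at least one member")
    ordered = sorted(members)
    assignment = {m: [] for m in ordered}
    for i, tp in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(tp)
    return assignment


if __name__ == "__main__":
    partitions = [("pod_logs", p) for p in range(6)]  # hypothetical: 6 partitions
    for group in (["vector-a", "vector-b"], ["vector-a", "vector-b", "vector-c"]):
        for member, owned in round_robin_assign(partitions, group).items():
            print(member, [p for _, p in owned])
    # vector-a [0, 2, 4] / vector-b [1, 3, 5]
    # after vector-c joins: vector-a [0, 3] / vector-b [1, 4] / vector-c [2, 5]
```

<p>One practical consequence: a group can have at most as many active consumers as the topic has partitions. Scaling Vector beyond the partition count leaves the extra replicas idle.</p>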

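<h3>Adaptive request concurrency</h3>

<p>Adaptive request concurrency has been enabled by default since version 0.11.0. It is a smart and powerful feature; see the official introduction at https://vector.dev/blog/adaptive-request-concurrency/#rate-limiting-problem<br> In earlier versions, writing reliably to a downstream Elasticsearch or ClickHouse required a manually configured rate limit. Choosing that limit, however, is a dilemma:</p>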

<ul>

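<li>Set the limit too high and the downstream service is overwhelmed, which hurts system reliability.</li>

<li>Set the limit too low and resources are wasted.</li>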

</ul>

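<p>To solve this, Vector introduced adaptive concurrency. It watches two signals: the round-trip time (RTT) of requests and the HTTP response codes (success vs. failure). From these it continuously adjusts how many requests are in flight, converging on the best level the downstream service can sustain.<br> <a href="http://img.e-com-net.com/image/info8/1385baef10dc49c0afa7d46dea1db6fb.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/1385baef10dc49c0afa7d46dea1db6fb.jpg" alt="ES8 in production: log cleansing and filtering (Vector approach), figure 4" width="650" height="232" style="border:1px solid black;"></a><br> It is enabled by default when writing to Elasticsearch or ClickHouse, so no further configuration is needed.</p>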
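<p>Adaptive concurrency is built on an AIMD (additive-increase, multiplicative-decrease) controller. The concurrency limit grows by one while responses stay fast and successful. It shrinks by a factor when RTT rises noticeably above its moving average or the service pushes back. The toy limiter below illustrates that feedback loop; it is a simplified sketch, not Vector's implementation. <code>decrease_ratio</code> and <code>ewma_alpha</code> borrow the names of Vector's <code>request.adaptive_concurrency</code> options. The overload test (<code>rtt_tolerance</code>, HTTP 429 or 5xx) is a hypothetical simplification.</p>

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AimdLimiter:
    """Toy AIMD concurrency limiter (simplified sketch, not Vector's code)."""
    limit: int = 1                # current max number of in-flight requests
    max_limit: int = 200
    decrease_ratio: float = 0.9   # multiplicative decrease factor
    ewma_alpha: float = 0.4       # weight of the newest RTT in the moving average
    rtt_tolerance: float = 1.5    # hypothetical: "slow" means RTT > 1.5x the average
    avg_rtt: Optional[float] = None

    def on_response(self, rtt: float, status: int) -> int:
        """Feed one finished request back into the controller; return the new limit."""
        if self.avg_rtt is None:
            self.avg_rtt = rtt
        overloaded = status == 429 or status >= 500 or rtt > self.avg_rtt * self.rtt_tolerance
        if overloaded:
            self.limit = max(1, int(self.limit * self.decrease_ratio))  # back off quickly
        else:
            self.limit = min(self.max_limit, self.limit + 1)  # probe upward slowly
        # Update the moving average after deciding, so a spike is judged against history.
        self.avg_rtt = self.ewma_alpha * rtt + (1 - self.ewma_alpha) * self.avg_rtt
        return self.limit


if __name__ == "__main__":
    limiter = AimdLimiter()
    for _ in range(9):
        limiter.on_response(rtt=0.05, status=200)      # fast, successful bulk requests
    print(limiter.limit)                               # 10
    print(limiter.on_response(rtt=0.05, status=429))   # downstream pushes back -> 9
    print(limiter.on_response(rtt=0.50, status=200))   # RTT spike -> 8
```

<p>The asymmetry is the point of AIMD. Slow additive growth probes for spare capacity, and fast multiplicative backoff relieves an overloaded cluster before errors pile up.</p>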

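<h2>Parsing logs with Vector in practice</h2>

<h3>Debugging the parsing configuration</h3>

<p>Suppose the application's raw log lines look like the following. Next, we parse them with Vector.</p>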

<pre><code>2023-07-23 09:35:18.987 | INFO | __main__:debug_log:49 - {'access_status': 200, 'request_method': 'GET', 'request_uri': '/account/', 'request_length': 67, 'remote_address': '185.14.47.131', 'server_name': 'cu-36.cn', 'time_start': '2023-07-23T09:35:18.879+08:00', 'time_finish': '2023-07-23T09:35:19.638+08:00', 'http_user_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'}

</code></pre>

<ol>

<li>Edit the Vector configuration: add a sources entry that reads from standard input, and a sinks entry that prints to the console. The configuration is as follows:</li>

</ol>

<pre><code class="prism language-yaml"><span class="token key atrule">sources</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_source</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"stdin"</span>
<span class="token key atrule">sinks</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_print</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"console"</span>
    <span class="token key atrule">inputs</span><span class="token punctuation">:</span> <span class="token punctuation">[</span><span class="token string">"my_source"</span><span class="token punctuation">]</span>
    <span class="token key atrule">encoding</span><span class="token punctuation">:</span>
      <span class="token key atrule">codec</span><span class="token punctuation">:</span> <span class="token string">"json"</span>
</code></pre>

<p>The console output shows that the log line entered on stdin has been placed in the message field:</p>

<pre><code class="prism language-bash"><span class="token number">2023</span>-07-23 09:35:18.987 <span class="token operator">|</span> INFO <span class="token operator">|</span> __main__:debug_log:49 - <span class="token punctuation">{</span><span class="token string">'access_status'</span><span class="token builtin class-name">:</span> <span class="token number">200</span>, <span class="token string">'request_method'</span><span class="token builtin class-name">:</span> <span class="token string">'GET'</span>, <span class="token string">'request_uri'</span><span class="token builtin class-name">:</span> <span class="token string">'/account/'</span>, <span class="token string">'request_length'</span><span class="token builtin class-name">:</span> <span class="token number">67</span>, <span class="token string">'remote_address'</span><span class="token builtin class-name">:</span> <span class="token string">'185.14.47.131'</span>, <span class="token string">'server_name'</span><span class="token builtin class-name">:</span> <span class="token string">'cu-36.cn'</span>, <span class="token string">'time_start'</span><span class="token builtin class-name">:</span> <span class="token string">'2023-07-23T09:35:18.879+08:00'</span>, <span class="token string">'time_finish'</span><span class="token builtin class-name">:</span> <span class="token string">'2023-07-23T09:35:19.638+08:00'</span>, <span class="token string">'http_user_agent'</span><span class="token builtin class-name">:</span> <span class="token string">'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'</span><span class="token punctuation">}</span>

<span class="token punctuation">{</span><span class="token string">"host"</span><span class="token builtin class-name">:</span><span class="token string">"huanbao"</span>,<span class="token string">"message"</span><span class="token builtin class-name">:</span><span class="token string">"2023-07-23 09:35:18.987 | INFO | __main__:debug_log:49 - {'access_status': 200, 'request_method': 'GET', 'request_uri': '/account/', 'request_length': 67, 'remote_address': '185.14.47.131', 'server_name': 'cu-36.cn', 'time_start': '2023-07-23T09:35:18.879+08:00', 'time_finish': '2023-07-23T09:35:19.638+08:00', 'http_user_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'}"</span>,<span class="token string">"source_type"</span><span class="token builtin class-name">:</span><span class="token string">"stdin"</span>,<span class="token string">"timestamp"</span><span class="token builtin class-name">:</span><span class="token string">"2023-11-19T08:07:07.270947582Z"</span><span class="token punctuation">}</span>

</code></pre>

<ol start="2">

<li>Next, debug the VRL parsing logic. The online playground at https://playground.vrl.dev/ is recommended for this.<a href="http://img.e-com-net.com/image/info8/a5e49e332d79490394cb208c49f2c427.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/a5e49e332d79490394cb208c49f2c427.jpg" alt="ES8 in production: log cleansing and filtering (Vector approach), figure 5" width="650" height="367" style="border:1px solid black;"></a></li>

</ol>

<p>Once the VRL statements work as expected, add them to the Vector configuration.</p>

<pre><code class="prism language-yaml"><span class="token key atrule">sources</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_source</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"stdin"</span>
<span class="token key atrule">transforms</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_transform</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> remap
    <span class="token key atrule">inputs</span><span class="token punctuation">:</span> <span class="token comment"># read from the stdin source</span>
      <span class="token punctuation">-</span> my_source
    <span class="token key atrule">source</span><span class="token punctuation">:</span> <span class="token punctuation">|</span><span class="token scalar string">
      . = parse_regex!(.message, r'^(?&lt;logtime&gt;[^|]+) \| (?&lt;level&gt;[A-Z]*) *\| __main__:(?&lt;class&gt;\D*:\d*) - (?&lt;content&gt;.*)$') # extract logtime, level, class and content with a regex
      .content = replace(.content, "'", "\"") # turn the single quotes in content into double quotes
      .content = parse_json!(.content) # parse content as JSON
      .access_status = .content.access_status # lift the sub-fields of content to the top level
      .http_user_agent = .content.http_user_agent
      .remote_address = .content.remote_address
      .request_length = .content.request_length
      .request_method = .content.request_method
      .request_uri = .content.request_uri
      .server_name = .content.server_name
      .time_start = .content.time_start
      .time_finish = .content.time_finish
      del(.content) # drop the original content field
      .logtime = parse_timestamp!(.logtime, format: "%Y-%m-%d %H:%M:%S.%3f") # convert the time fields to timestamps
      .time_start = parse_timestamp!(.time_start, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
      .time_finish = parse_timestamp!(.time_finish, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
      .level = downcase(.level) # lowercase the level value</span>
<span class="token key atrule">sinks</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_print</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"console"</span>
    <span class="token key atrule">inputs</span><span class="token punctuation">:</span> <span class="token punctuation">[</span><span class="token string">"my_transform"</span><span class="token punctuation">]</span> <span class="token comment"># read from the remap transform</span>
    <span class="token key atrule">encoding</span><span class="token punctuation">:</span>
      <span class="token key atrule">codec</span><span class="token punctuation">:</span> <span class="token string">"json"</span>
</code></pre>

<p>With the VRL parsing rules in place, the console prints the following. The raw line has been parsed successfully.</p>

<pre><code>2023-07-23 09:35:18.987 | INFO | __main__:debug_log:49 - {'access_status': 200, 'request_method': 'GET', 'request_uri': '/account/', 'request_length': 67, 'remote_address': '185.14.47.131', 'server_name': 'cu-36.cn', 'time_start': '2023-07-23T09:35:18.879+08:00', 'time_finish': '2023-07-23T09:35:19.638+08:00', 'http_user_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'}

{"access_status":200,"class":"debug_log:49","http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36","level":"info","logtime":"2023-07-23T01:35:18.987Z","remote_address":"185.14.47.131","request_length":67,"request_method":"GET","request_uri":"/account/","server_name":"cu-36.cn","time_finish":"2023-07-23T01:35:19.638Z","time_start":"2023-07-23T01:35:18.879Z"}

</code></pre>
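<p>For readers more familiar with Python, the VRL program above maps almost line for line onto the sketch below. This makes it handy for checking the regex and the time formats offline. The sketch assumes the application writes <code>logtime</code> in UTC+8, as the console output above suggests (09:35 local becomes 01:35Z). The names <code>parse_line</code>, <code>APP_TZ</code> and <code>SAMPLE_LINE</code> are illustrative.</p>

```python
import json
import re
from datetime import datetime, timedelta, timezone

# Same pattern as the VRL program; Python spells named groups (?P<name>...).
LOG_RE = re.compile(
    r"^(?P<logtime>[^|]+) \| (?P<level>[A-Z]*) *\| __main__:(?P<class>\D*:\d*) - (?P<content>.*)$"
)
APP_TZ = timezone(timedelta(hours=8))  # assumption: logtime is written in UTC+8
FIELDS = ("access_status", "http_user_agent", "remote_address", "request_length",
          "request_method", "request_uri", "server_name", "time_start", "time_finish")


def parse_line(line: str) -> dict:
    m = LOG_RE.match(line)
    if m is None:
        raise ValueError("line does not match the expected layout")
    # Same trick as the VRL replace(): single quotes -> double quotes, then JSON.
    content = json.loads(m.group("content").replace("'", '"'))
    event = {name: content[name] for name in FIELDS}  # lift sub-fields to the top level
    event["class"] = m.group("class")
    event["level"] = m.group("level").lower()
    event["logtime"] = datetime.strptime(
        m.group("logtime"), "%Y-%m-%d %H:%M:%S.%f").replace(tzinfo=APP_TZ)
    event["time_start"] = datetime.fromisoformat(event["time_start"])
    event["time_finish"] = datetime.fromisoformat(event["time_finish"])
    return event


SAMPLE_LINE = (
    "2023-07-23 09:35:18.987 | INFO | __main__:debug_log:49 - {'access_status': 200, "
    "'request_method': 'GET', 'request_uri': '/account/', 'request_length': 67, "
    "'remote_address': '185.14.47.131', 'server_name': 'cu-36.cn', "
    "'time_start': '2023-07-23T09:35:18.879+08:00', 'time_finish': '2023-07-23T09:35:19.638+08:00', "
    "'http_user_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'}"
)

if __name__ == "__main__":
    event = parse_line(SAMPLE_LINE)
    print(event["logtime"].astimezone(timezone.utc).isoformat())
    # 2023-07-23T01:35:18.987000+00:00
```

<p>Both versions convert the Python-dict-style payload into JSON by swapping quotes. This breaks as soon as a value itself contains an apostrophe, for example in a URI or user agent. In Python, <code>ast.literal_eval</code> parses such a repr robustly. On the Vector side, the cleaner fix is to have the application log real JSON.</p>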

<ol start="3">

<li>Now that the remote_address field has been extracted, look up the IP's geographic location in a GeoIP database.</li>

</ol>

<pre><code class="prism language-yaml"><span class="token key atrule">sources</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_source</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"stdin"</span>
<span class="token key atrule">transforms</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_transform</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> remap
    <span class="token key atrule">inputs</span><span class="token punctuation">:</span> <span class="token comment"># read from the stdin source</span>
      <span class="token punctuation">-</span> my_source
    <span class="token key atrule">source</span><span class="token punctuation">:</span> <span class="token punctuation">|</span><span class="token scalar string">
      . = parse_regex!(.message, r'^(?&lt;logtime&gt;[^|]+) \| (?&lt;level&gt;[A-Z]*) *\| __main__:(?&lt;class&gt;\D*:\d*) - (?&lt;content&gt;.*)$') # extract logtime, level, class and content with a regex
      .content = replace(.content, "'", "\"") # turn the single quotes in content into double quotes
      .content = parse_json!(.content) # parse content as JSON
      .access_status = .content.access_status # lift the sub-fields of content to the top level
      .http_user_agent = .content.http_user_agent
      .remote_address = .content.remote_address
      .request_length = .content.request_length
      .request_method = .content.request_method
      .request_uri = .content.request_uri
      .server_name = .content.server_name
      .time_start = .content.time_start
      .time_finish = .content.time_finish
      del(.content) # drop the original content field
      .logtime = parse_timestamp!(.logtime, format: "%Y-%m-%d %H:%M:%S.%3f") # convert the time fields to timestamps
      .time_start = parse_timestamp!(.time_start, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
      .time_finish = parse_timestamp!(.time_finish, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
      .level = downcase(.level) # lowercase the level value
      .geoip = get_enrichment_table_record!("geoip_table", # look up the IP's geographic location
        {
          "ip": .remote_address
        })</span>
<span class="token key atrule">sinks</span><span class="token punctuation">:</span>
  <span class="token key atrule">my_print</span><span class="token punctuation">:</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> <span class="token string">"console"</span>
    <span class="token key atrule">inputs</span><span class="token punctuation">:</span> <span class="token punctuation">[</span><span class="token string">"my_transform"</span><span class="token punctuation">]</span> <span class="token comment"># read from the remap transform</span>
    <span class="token key atrule">encoding</span><span class="token punctuation">:</span>
      <span class="token key atrule">codec</span><span class="token punctuation">:</span> <span class="token string">"json"</span>
<span class="token key atrule">enrichment_tables</span><span class="token punctuation">:</span>
  <span class="token key atrule">geoip_table</span><span class="token punctuation">:</span> <span class="token comment"># path to the GeoIP database file</span>
    <span class="token key atrule">path</span><span class="token punctuation">:</span> <span class="token string">"/root/GeoLite2-City.mmdb"</span>
    <span class="token key atrule">type</span><span class="token punctuation">:</span> geoip
</code></pre>

<p>The console output shows that location information has been retrieved from the IP address in the remote_address field.</p>

<pre><code>2023-07-23 09:35:18.987 | INFO | __main__:debug_log:49 - {'access_status': 200, 'request_method': 'GET', 'request_uri': '/account/', 'request_length': 67, 'remote_address': '185.14.47.131', 'server_name': 'cu-36.cn', 'time_start': '2023-07-23T09:35:18.879+08:00', 'time_finish': '2023-07-23T09:35:19.638+08:00', 'http_user_agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36'}

{"access_status":200,"class":"debug_log:49","geoip":{"city_name":"Hong Kong","continent_code":"AS","country_code":"HK","country_name":"Hong Kong","latitude":22.2842,"longitude":114.1759,"metro_code":null,"postal_code":null,"region_code":"HCW","region_name":"Central and Western District","timezone":"Asia/Hong_Kong"},"http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.2999.0 Safari/537.36","level":"info","logtime":"2023-07-23T01:35:18.987Z","remote_address":"185.14.47.131","request_length":67,"request_method":"GET","request_uri":"/account/","server_name":"cu-36.cn","time_finish":"2023-07-23T01:35:19.638Z","time_start":"2023-07-23T01:35:18.879Z"}

</code></pre>

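<h3>Building the Vector image</h3>

<p>The official Vector image does not include a GeoIP database. If you need to resolve IP addresses to locations, build a Vector image that contains the GeoIP file in advance and push it to the Harbor registry.</p>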

<pre><code class="prism language-bash"><span class="token punctuation">[</span>root@tiaoban evk<span class="token punctuation">]</span><span class="token comment"># ls</span>

Dockerfile filebeat GeoLite2-City.mmdb kafka log-demo.yaml strimzi-kafka-operator vector

<span class="token punctuation">[</span>root@tiaoban evk<span class="token punctuation">]</span><span class="token comment"># cat Dockerfile </span>

FROM timberio/vector:0.34.1-debian

COPY GeoLite2-City.mmdb /etc/vector/GeoLite2-City.mmdb

<span class="token punctuation">[</span>root@tiaoban evk<span class="token punctuation">]</span><span class="token comment"># docker build -t harbor.local.com/elk/vector:v0.34.1 .</span>

<span class="token punctuation">[</span>root@tiaoban evk<span class="token punctuation">]</span><span class="token comment"># docker push harbor.local.com/elk/vector:v0.34.1</span>

</code></pre>

<h3>Kubernetes manifests</h3>

<ul>

<li>vector-config.yaml</li>

</ul>

<p>This is Vector's global configuration, shared by all pipelines. It mainly configures the API and the monitoring metrics.</p>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> v1
<span class="token key atrule">kind</span><span class="token punctuation">:</span> ConfigMap
<span class="token key atrule">metadata</span><span class="token punctuation">:</span>
  <span class="token key atrule">name</span><span class="token punctuation">:</span> vector<span class="token punctuation">-</span>config
  <span class="token key atrule">namespace</span><span class="token punctuation">:</span> elk
<span class="token key atrule">data</span><span class="token punctuation">:</span>
  <span class="token key atrule">vector.yaml</span><span class="token punctuation">:</span> <span class="token punctuation">|</span><span class="token scalar string">
    data_dir: "/var/lib/vector"
    api:
      enabled: true
      address: "0.0.0.0:8686"
    sources:
      metrics:
        type: internal_metrics
        namespace: vector
        scrape_interval_secs: 30
    sinks:
      prometheus:
        type: prometheus_exporter
        inputs:
          - metrics
        address: 0.0.0.0:9598
        default_namespace: service</span>
</code></pre>

<ul>

<li>pod-config.yaml</li>

</ul>

<p>This configuration reads data from Kafka, removes fields that are not needed, and writes the result to Elasticsearch.</p>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> v1
<span class="token key atrule">kind</span><span class="token punctuation">:</span> ConfigMap
<span class="token key atrule">metadata</span><span class="token punctuation">:</span>
  <span class="token key atrule">name</span><span class="token punctuation">:</span> pod<span class="token punctuation">-</span>config
  <span class="token key atrule">namespace</span><span class="token punctuation">:</span> elk
<span class="token key atrule">data</span><span class="token punctuation">:</span>
  <span class="token key atrule">pod.yaml</span><span class="token punctuation">:</span> <span class="token punctuation">|</span><span class="token scalar string">
    sources:
      pod_kafka:
        type: "kafka"
        bootstrap_servers: "my-cluster-kafka-brokers.kafka.svc:9092"
        group_id: "pod"
        topics:
          - "pod_logs"
        decoding:
          codec: "json"
        auto_offset_reset: "latest"
    transforms:
      pod_transform:
        type: remap
        inputs: # read from the Kafka source
          - pod_kafka
        source: |
          del(.agent)
          del(.event)
          del(.ecs)
          del(.host)
          del(.input)
          del(.kubernetes.labels)
          del(.log)
          del(.orchestrator)
          del(.stream)
    sinks:
      pod_es:
        type: elasticsearch
        inputs: ["pod_transform"] # read from the remap transform
        api_version: "v8" # Elasticsearch version, optional
        mode: "data_stream" # write to a data stream
        auth: # Elasticsearch credentials
          strategy: "basic"
          user: "elastic"
          password: "2zg5q6AU7xW5jY649yuEpZ47"
        data_stream: # data stream naming
          type: "logs"
          dataset: "pod"
          namespace: "elk"
        endpoints: ["https://elasticsearch-es-http.elk.svc:9200"] # Elasticsearch endpoint
        tls: # TLS settings
          verify_certificate: false # skip certificate verification</span>
</code></pre>
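<p>In <code>data_stream</code> mode, the sink builds the target name from the three <code>data_stream</code> fields as <code>&lt;type&gt;-&lt;dataset&gt;-&lt;namespace&gt;</code>. So this pipeline writes to <code>logs-pod-elk</code>, and the myapp pipeline below writes to <code>logs-myapp-elk</code>. The helper below just computes that name. Its validation assumes Elastic's naming-scheme rules (lowercase, no <code>-</code> inside a part). It is illustrative, not a check that Vector itself runs.</p>

```python
def data_stream_name(ds_type: str, dataset: str, namespace: str) -> str:
    """Compose <type>-<dataset>-<namespace>, the target name used in data_stream mode."""
    # Illustrative checks based on Elastic's naming scheme (assumed here):
    # non-empty, lowercase, and no "-" inside a part, so the name splits back cleanly.
    for field, value in (("type", ds_type), ("dataset", dataset), ("namespace", namespace)):
        if not value or value != value.lower() or "-" in value:
            raise ValueError(f"invalid data_stream.{field}: {value!r}")
    return f"{ds_type}-{dataset}-{namespace}"


if __name__ == "__main__":
    print(data_stream_name("logs", "pod", "elk"))    # logs-pod-elk
    print(data_stream_name("logs", "myapp", "elk"))  # logs-myapp-elk
```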

<ul>

<li>myapp-config.yaml</li>

</ul>

<p>This configuration reads pod logs from Kafka, uses a filter to keep only the log-demo logs, parses them further, and writes them to Elasticsearch.</p>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> v1
<span class="token key atrule">kind</span><span class="token punctuation">:</span> ConfigMap
<span class="token key atrule">metadata</span><span class="token punctuation">:</span>
  <span class="token key atrule">name</span><span class="token punctuation">:</span> myapp<span class="token punctuation">-</span>config
  <span class="token key atrule">namespace</span><span class="token punctuation">:</span> elk
<span class="token key atrule">data</span><span class="token punctuation">:</span>
  <span class="token key atrule">myapp.yaml</span><span class="token punctuation">:</span> <span class="token punctuation">|</span><span class="token scalar string">
    sources:
      myapp_kafka:
        type: "kafka"
        bootstrap_servers: "my-cluster-kafka-brokers.kafka.svc:9092"
        group_id: "myapp"
        topics:
          - "pod_logs"
        decoding:
          codec: "json"
        auto_offset_reset: "latest"
    transforms:
      myapp_filter:
        type: filter
        inputs: # read from the Kafka source
          - myapp_kafka
        condition: |
          .kubernetes.deployment.name == "log-demo"
      myapp_transform:
        type: remap
        inputs: # read from the filter
          - myapp_filter
        source: |
          . = parse_regex!(.message, r'^(?&lt;logtime&gt;[^|]+) \| (?&lt;level&gt;[A-Z]*) *\| __main__:(?&lt;class&gt;\D*:\d*) - (?&lt;content&gt;.*)$') # extract logtime, level, class and content with a regex
          .content = replace(.content, "'", "\"") # turn the single quotes in content into double quotes
          .content = parse_json!(.content) # parse content as JSON
          .access_status = .content.access_status # lift the sub-fields of content to the top level
          .http_user_agent = .content.http_user_agent
          .remote_address = .content.remote_address
          .request_length = .content.request_length
          .request_method = .content.request_method
          .request_uri = .content.request_uri
          .server_name = .content.server_name
          .time_start = .content.time_start
          .time_finish = .content.time_finish
          del(.content) # drop the original content field
          .logtime = parse_timestamp!(.logtime, format: "%Y-%m-%d %H:%M:%S.%3f") # convert the time fields to timestamps
          .time_start = parse_timestamp!(.time_start, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
          .time_finish = parse_timestamp!(.time_finish, format: "%Y-%m-%dT%H:%M:%S.%3f%:z")
          .level = downcase(.level) # lowercase the level value
          # IP geolocation; fall back to an empty object when the IP is not in the
          # database (e.g. private addresses) instead of aborting the whole remap
          .geoip = get_enrichment_table_record("geoip_table", {"ip": .remote_address}) ?? {}
    sinks:
      myapp_es:
        type: elasticsearch
        inputs: ["myapp_transform"] # read from the remap transform
        api_version: "v8" # Elasticsearch version, optional
        mode: "data_stream" # write to a data stream
        auth: # Elasticsearch credentials
          strategy: "basic"
          user: "elastic"
          password: "2zg5q6AU7xW5jY649yuEpZ47"
        data_stream: # data stream naming
          type: "logs"
          dataset: "myapp"
          namespace: "elk"
        endpoints: ["https://elasticsearch-es-http.elk.svc:9200"] # Elasticsearch endpoint
        tls: # TLS settings
          verify_certificate: false # skip certificate verification
    enrichment_tables:
      geoip_table: # GeoIP database file baked into the image
        path: "/etc/vector/GeoLite2-City.mmdb"
        type: geoip</span>
</code></pre>
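<p><code>pod.yaml</code> and <code>myapp.yaml</code> use different <code>group_id</code>s (<code>pod</code> and <code>myapp</code>). Each consumer group therefore receives its own full copy of <code>pod_logs</code>. The <code>filter</code> transform then keeps only the events whose <code>.kubernetes.deployment.name</code> equals <code>log-demo</code>. In VRL, a missing path evaluates to <code>null</code>. Events without that field, for example from pods not owned by a Deployment, simply fail the condition and are dropped rather than raising an error. The Python sketch below mirrors that behaviour; the sample events are hypothetical.</p>

```python
from typing import Any, Iterable


def get_path(event: dict, path: str) -> Any:
    """Follow a dotted path like 'kubernetes.deployment.name'; None if any hop is missing."""
    current: Any = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None  # like VRL, a missing path yields null instead of an error
        current = current[key]
    return current


def myapp_filter(events: Iterable[dict]) -> list:
    """Keep only events that come from the log-demo Deployment."""
    return [e for e in events if get_path(e, "kubernetes.deployment.name") == "log-demo"]


# Hypothetical events as they might arrive from the pod_logs topic.
SAMPLE_EVENTS = [
    {"message": "a", "kubernetes": {"deployment": {"name": "log-demo"}}},
    {"message": "b", "kubernetes": {"deployment": {"name": "nginx"}}},
    {"message": "c", "kubernetes": {"daemonset": {"name": "filebeat"}}},
    {"message": "d"},
]

if __name__ == "__main__":
    print([e["message"] for e in myapp_filter(SAMPLE_EVENTS)])  # ['a']
```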

<ul>

<li>deployment.yaml</li>

</ul>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> apps/v1
<span class="token key atrule">kind</span><span class="token punctuation">:</span> Deployment
<span class="token key atrule">metadata</span><span class="token punctuation">:</span>
  <span class="token key atrule">name</span><span class="token punctuation">:</span> vector
  <span class="token key atrule">namespace</span><span class="token punctuation">:</span> elk
<span class="token key atrule">spec</span><span class="token punctuation">:</span>
  <span class="token key atrule">replicas</span><span class="token punctuation">:</span> <span class="token number">2</span>
  <span class="token key atrule">selector</span><span class="token punctuation">:</span>
    <span class="token key atrule">matchLabels</span><span class="token punctuation">:</span>
      <span class="token key atrule">app</span><span class="token punctuation">:</span> vector
  <span class="token key atrule">template</span><span class="token punctuation">:</span>
    <span class="token key atrule">metadata</span><span class="token punctuation">:</span>
      <span class="token key atrule">labels</span><span class="token punctuation">:</span>
        <span class="token key atrule">app</span><span class="token punctuation">:</span> vector
    <span class="token key atrule">spec</span><span class="token punctuation">:</span>
      <span class="token key atrule">securityContext</span><span class="token punctuation">:</span>
        <span class="token key atrule">runAsUser</span><span class="token punctuation">:</span> <span class="token number">0</span>
      <span class="token key atrule">containers</span><span class="token punctuation">:</span>
        <span class="token punctuation">-</span> <span class="token key atrule">image</span><span class="token punctuation">:</span> harbor.local.com/elk/vector<span class="token punctuation">:</span>v0.34.1
          <span class="token key atrule">name</span><span class="token punctuation">:</span> vector
          <span class="token key atrule">resources</span><span class="token punctuation">:</span>
            <span class="token key atrule">limits</span><span class="token punctuation">:</span>
              <span class="token key atrule">cpu</span><span class="token punctuation">:</span> <span class="token string">"1"</span>
              <span class="token key atrule">memory</span><span class="token punctuation">:</span> 1Gi
          <span class="token key atrule">args</span><span class="token punctuation">:</span>
            <span class="token punctuation">-</span> <span class="token punctuation">-</span>c
            <span class="token punctuation">-</span> /etc/vector/<span class="token important">*.yaml</span>
          <span class="token key atrule">ports</span><span class="token punctuation">:</span>
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> exporter
              <span class="token key atrule">containerPort</span><span class="token punctuation">:</span> <span class="token number">9598</span>
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> api
              <span class="token key atrule">containerPort</span><span class="token punctuation">:</span> <span class="token number">8686</span>
          <span class="token key atrule">volumeMounts</span><span class="token punctuation">:</span>
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> timezone
              <span class="token key atrule">mountPath</span><span class="token punctuation">:</span> /etc/localtime
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> data
              <span class="token key atrule">mountPath</span><span class="token punctuation">:</span> /var/lib/vector
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> vector<span class="token punctuation">-</span>config
              <span class="token key atrule">mountPath</span><span class="token punctuation">:</span> /etc/vector/vector.yaml
              <span class="token key atrule">subPath</span><span class="token punctuation">:</span> vector.yaml
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> pod<span class="token punctuation">-</span>config
              <span class="token key atrule">mountPath</span><span class="token punctuation">:</span> /etc/vector/pod.yaml
              <span class="token key atrule">subPath</span><span class="token punctuation">:</span> pod.yaml
            <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> myapp<span class="token punctuation">-</span>config
              <span class="token key atrule">mountPath</span><span class="token punctuation">:</span> /etc/vector/myapp.yaml
              <span class="token key atrule">subPath</span><span class="token punctuation">:</span> myapp.yaml
          <span class="token key atrule">readinessProbe</span><span class="token punctuation">:</span>
            <span class="token key atrule">httpGet</span><span class="token punctuation">:</span>
              <span class="token key atrule">path</span><span class="token punctuation">:</span> /health
              <span class="token key atrule">port</span><span class="token punctuation">:</span> <span class="token number">8686</span>
          <span class="token key atrule">livenessProbe</span><span class="token punctuation">:</span>
            <span class="token key atrule">httpGet</span><span class="token punctuation">:</span>
              <span class="token key atrule">path</span><span class="token punctuation">:</span> /health
              <span class="token key atrule">port</span><span class="token punctuation">:</span> <span class="token number">8686</span>
      <span class="token key atrule">volumes</span><span class="token punctuation">:</span>
        <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> timezone
          <span class="token key atrule">hostPath</span><span class="token punctuation">:</span>
            <span class="token key atrule">path</span><span class="token punctuation">:</span> /usr/share/zoneinfo/Asia/Shanghai
        <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> data
          <span class="token key atrule">hostPath</span><span class="token punctuation">:</span>
            <span class="token key atrule">path</span><span class="token punctuation">:</span> /data/vector
            <span class="token key atrule">type</span><span class="token punctuation">:</span> DirectoryOrCreate
        <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> vector<span class="token punctuation">-</span>config
          <span class="token key atrule">configMap</span><span class="token punctuation">:</span>
            <span class="token key atrule">name</span><span class="token punctuation">:</span> vector<span class="token punctuation">-</span>config
        <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> pod<span class="token punctuation">-</span>config
          <span class="token key atrule">configMap</span><span class="token punctuation">:</span>
            <span class="token key atrule">name</span><span class="token punctuation">:</span> pod<span class="token punctuation">-</span>config
        <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> myapp<span class="token punctuation">-</span>config
          <span class="token key atrule">configMap</span><span class="token punctuation">:</span>
            <span class="token key atrule">name</span><span class="token punctuation">:</span> myapp<span class="token punctuation">-</span>config
</code></pre>

<ul>

<li>service.yaml</li>

</ul>

<pre><code class="prism language-yaml"><span class="token key atrule">apiVersion</span><span class="token punctuation">:</span> v1
<span class="token key atrule">kind</span><span class="token punctuation">:</span> Service
<span class="token key atrule">metadata</span><span class="token punctuation">:</span>
  <span class="token key atrule">name</span><span class="token punctuation">:</span> vector<span class="token punctuation">-</span>exporter
  <span class="token key atrule">namespace</span><span class="token punctuation">:</span> elk
<span class="token key atrule">spec</span><span class="token punctuation">:</span>
  <span class="token key atrule">selector</span><span class="token punctuation">:</span>
    <span class="token key atrule">app</span><span class="token punctuation">:</span> vector
  <span class="token key atrule">ports</span><span class="token punctuation">:</span>
  <span class="token punctuation">-</span> <span class="token key atrule">name</span><span class="token punctuation">:</span> exporter
    <span class="token key atrule">port</span><span class="token punctuation">:</span> <span class="token number">9598</span>
    <span class="token key atrule">targetPort</span><span class="token punctuation">:</span> <span class="token number">9598</span>
</code></pre>

<h3>Results</h3>

<p>The data stream view in Kibana shows that indices for the myapp and pod logs have been created successfully.<br> <a href="http://img.e-com-net.com/image/info8/c8555e17b5ff4252b2281209d7ca4fe2.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/c8555e17b5ff4252b2281209d7ca4fe2.jpg" alt="ES8 production practice: log cleansing and filtering (Vector approach), figure 6" width="650" height="203" style="border:1px solid black;"></a><br> Documents in the pod index:<br> <a href="http://img.e-com-net.com/image/info8/d1822172e1c14184a2d46b02e568c643.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/d1822172e1c14184a2d46b02e568c643.jpg" alt="ES8 production practice: log cleansing and filtering (Vector approach), figure 7" width="650" height="468" style="border:1px solid black;"></a><br> Documents in the myapp index:<br> <a href="http://img.e-com-net.com/image/info8/004b335402e8434597fb179551e02dd0.jpg" target="_blank"><img src="http://img.e-com-net.com/image/info8/004b335402e8434597fb179551e02dd0.jpg" alt="ES8 production practice: log cleansing and filtering (Vector approach), figure 8" width="650" height="468" style="border:1px solid black;"></a></p>

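<h2>Notes</h2>

<h3>Starting with multiple configuration files</h3>

<p>Vector can be started with several configuration files at once by passing a wildcard path such as <code>-c /etc/vector/*.yaml</code>; Vector then loads every YAML file under that path that matches the pattern. Keeping each pipeline's processing rules in its own file gives the configuration a clearer structure and makes later maintenance easier.</p>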
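<p>As a minimal sketch, assuming the official Vector image whose entrypoint is the <code>vector</code> binary, the wildcard could be passed through the container's <code>args</code>. Kubernetes does not run <code>args</code> through a shell, so the pattern reaches Vector unexpanded and Vector resolves it itself. Only the relevant fields are shown; the container name is illustrative.</p>

<pre><code class="prism language-yaml"># Illustrative excerpt of the Vector container spec; other fields omitted
containers:
  - name: vector
    args:
      - -c                    # short form of --config
      - /etc/vector/*.yaml    # matches the vector.yaml, pod.yaml and myapp.yaml files mounted from ConfigMaps
</code></pre>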

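<h3>Component names must be globally unique</h3>

<p>The names you assign to sources, transforms, and sinks must be unique across the entire configuration. This matters most when the configuration is split into several files, because Vector merges all loaded files into a single topology, so a name reused in two files causes a conflict.</p>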
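<p>To illustrate, here is a hypothetical pair of pipeline files in which every component ID carries a per-pipeline prefix. The two files are shown together and the <code>---</code> line only marks where the second file begins. The Kafka address, topics, consumer group IDs and Elasticsearch endpoint are placeholders, not values from this deployment. If both files instead declared a source named, say, <code>kafka</code>, Vector should reject the merged configuration with a duplicate-ID error.</p>

<pre><code class="prism language-yaml"># /etc/vector/pod.yaml (hypothetical excerpt)
sources:
  pod_kafka:                          # prefixed with the pipeline name to stay unique
    type: kafka
    bootstrap_servers: kafka:9092     # placeholder address
    group_id: vector-pod              # placeholder consumer group
    topics: ["pod"]                   # placeholder topic
sinks:
  pod_es:
    type: elasticsearch
    inputs: ["pod_kafka"]
    endpoints: ["https://elasticsearch:9200"]   # placeholder endpoint
---
# /etc/vector/myapp.yaml (hypothetical excerpt)
sources:
  myapp_kafka:                        # must not collide with any ID in pod.yaml
    type: kafka
    bootstrap_servers: kafka:9092
    group_id: vector-myapp
    topics: ["myapp"]
sinks:
  myapp_es:
    type: elasticsearch
    inputs: ["myapp_kafka"]
    endpoints: ["https://elasticsearch:9200"]
</code></pre>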

<h2>Complete resource manifests</h2>

<p>All YAML files used in this walkthrough have been uploaded to Git repositories:</p>

<h3>GitHub</h3>

<p>https://github.com/cuiliang0302/blog-demo</p>

<h3>Gitee</h3>

<p>https://gitee.com/cuiliang0302/blog_demo</p>

<h2>References</h2>

<p>VRL function reference: https://vector.dev/docs/reference/vrl/functions/<br> VRL examples: https://vector.dev/docs/reference/vrl/examples/<br> VRL time format specifiers (chrono <code>strftime</code>): https://docs.rs/chrono/latest/chrono/format/strftime/index.html#specifiers</p>

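<h2>More</h2>

<h3>WeChat official account</h3>

<p>Articles are published simultaneously on the WeChat official account 《崔亮的博客》 (Cui Liang's Blog). Follow it to get the latest posts first.</p>

<h3>Blog</h3>

<p>Cui Liang's Blog (崔亮的博客) focuses on DevOps and operations automation and shares quality IT operations articles. For more original articles on operations and development, visit https://www.cuiliangblog.cn</p>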

</div>

</div>

</div>

</div>

</div>


</div>


</div>

</div>

</div>

<div class="container">


<div id="paradigm-article-related">

<div class="recommend-post mb30">

<ul class="widget-links">


<li><a href="/article/1939124918318854144.htm"