Big Data Lab: MapReduce Programming Practice

Table of Contents

- Preface
- Environment
- Creating a Map-Reduce Project in Eclipse
- Experiment Code
  - Notes
- Running Demo
  - Notes
- Summary

Preface

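Final exams are just around the corner, so besides the labs, don't forget to keep up with your coursework too! I'm hoping some experts will put together final review outlines for Software Testing and Oracle!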

Environment

VMware + Ubuntu 18.04 Desktop + Hadoop 3.2.1 + Eclipse 2021

Start Hadoop before you begin the lab, otherwise the programs later on will run into problems!

start-all.sh
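Besides running jps (after start-all.sh it should list daemons such as NameNode, DataNode and ResourceManager), you can check from Java whether the NameNode address actually accepts connections. The sketch below is a hypothetical helper, not part of the lab code; the host master and port 9000 are assumptions taken from the hdfs://master:9000 address used in the code further down, and should match your own core-site.xml.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper (not part of the lab code): checks whether anything accepts
// TCP connections on host:port, e.g. the NameNode RPC address master:9000.
final class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs milliseconds. */
    static boolean isListening(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, unknown host or timeout: treat the service as not running.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed host and port, taken from hdfs://master:9000; change them to match core-site.xml.
        if (isListening("master", 9000, 2000)) {
            System.out.println("master:9000 is reachable");
        } else {
            System.out.println("master:9000 is not reachable; has start-all.sh been run?");
        }
    }
}
```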

Creating a Map-Reduce Project in Eclipse

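Just follow the steps shown in the figures below.

Create the following files in an input folder of the project (the programs read input/f*.txt and input/n*.txt) and fill in their contents: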

f1.txt

20150101 x
20150102 y
20150103 x
20150104 y

f2.txt

20150105 z
20150106 x
20150101 y
20150102 y

n1.txt

33
37
12
40

n2.txt

4
16
39
5

n3.txt

1
45
25
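Typing the five files by hand is tedious and easy to get slightly wrong. A minimal sketch that writes them instead, assuming (as the relative paths input/f*.txt and input/n*.txt in the code suggest) that the files live in an input folder under the directory the programs run from, which for an Eclipse launch is the project root by default. CreateInputs is a hypothetical name, not part of the lab.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: writes the sample input files listed above into <baseDir>/input.
final class CreateInputs {

    // File name -> lines, exactly as listed in this post.
    static final Map<String, List<String>> FILES = new LinkedHashMap<>();

    static {
        FILES.put("f1.txt", Arrays.asList("20150101 x", "20150102 y", "20150103 x", "20150104 y"));
        FILES.put("f2.txt", Arrays.asList("20150105 z", "20150106 x", "20150101 y", "20150102 y"));
        FILES.put("n1.txt", Arrays.asList("33", "37", "12", "40"));
        FILES.put("n2.txt", Arrays.asList("4", "16", "39", "5"));
        FILES.put("n3.txt", Arrays.asList("1", "45", "25"));
    }

    static void writeAll(Path baseDir) throws IOException {
        Path inputDir = baseDir.resolve("input");
        Files.createDirectories(inputDir);
        for (Map.Entry<String, List<String>> e : FILES.entrySet()) {
            // One value per line, each followed by the platform line separator, no blank lines.
            Files.write(inputDir.resolve(e.getKey()), e.getValue(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumes the working directory is the Eclipse project root (the default launch setting).
        writeAll(Paths.get("."));
    }
}
```

Files.write overwrites existing files, so running it twice simply restores the sample data.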

Experiment Code

Notes

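conf.set("master", "hdfs://master:9000");

In this line, replace the host name master in hdfs://master:9000 with the host you configured in fs.defaultFS (or its deprecated older name, fs.default.name) in core-site.xml when setting up Hadoop, and replace the port 9000 the same way. Note that the first argument, "master", is just a property name that Hadoop itself does not read; the property that selects the default file system is fs.defaultFS. As written, the job therefore uses whatever default file system the configuration on the classpath specifies, typically the local one, which is why the output shows up in the Eclipse project after a Refresh.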
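If you are unsure which host and port to use, they can be read straight out of core-site.xml. The following sketch is a hypothetical helper built only on the JDK's DOM parser (no Hadoop API): it returns the value of fs.defaultFS and falls back to the deprecated fs.default.name.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: extracts the default file system URI from the text of core-site.xml.
final class CoreSiteReader {

    /** Returns fs.defaultFS, else fs.default.name, else null if neither property is present. */
    static String defaultFs(String coreSiteXml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(coreSiteXml.getBytes(StandardCharsets.UTF_8)));
        String legacy = null;
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            String name = childText(p, "name");
            if ("fs.defaultFS".equals(name)) {
                return childText(p, "value"); // current property name wins
            }
            if ("fs.default.name".equals(name)) {
                legacy = childText(p, "value"); // deprecated name, used only as a fallback
            }
        }
        return legacy;
    }

    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // args[0]: path to core-site.xml, e.g. $HADOOP_HOME/etc/hadoop/core-site.xml
        String xml = new String(Files.readAllBytes(Paths.get(args[0])), StandardCharsets.UTF_8);
        System.out.println(defaultFs(xml));
    }
}
```

Run it with the path of your core-site.xml (in a standard Hadoop 3 install it lives under $HADOOP_HOME/etc/hadoop/); the printed URI is what belongs in place of hdfs://master:9000.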

- FileMerge.java

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Created by bee on 17/3/25.
 */
public class FileMerge {

    // Map: emit each input line as the key with an empty value, so identical lines share one key
    public static class Map extends Mapper<Object, Text, Text, Text> {
        private static final Text EMPTY = new Text("");

        @Override
        public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            context.write(value, EMPTY);
        }
    }

    // Reduce: each distinct line arrives once as a key; writing it once drops the duplicates
    public static class Reduce extends Reducer<Text, Text, Text, Text> {
        private static final Text EMPTY = new Text("");

        @Override
        public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            context.write(key, EMPTY);
        }
    }

    public static void main(String[] args) throws Exception {
        // Delete the old output directory first: Hadoop refuses to write into an existing one
        FileUtil.deleteDir("output");
        Configuration conf = new Configuration();
        conf.set("master", "hdfs://master:9000");
        String[] otherArgs = new String[]{"input/f*.txt", "output"}; // input and output paths set directly
        if (otherArgs.length != 2) {
            System.err.println("Usage: FileMerge <in> <out>");
            System.exit(2);
        }
        // Pass conf so the settings above actually reach the job
        Job job = Job.getInstance(conf, "Merge and duplicate removal");
        job.setJarByClass(FileMerge.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
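To make the deduplication logic concrete, here is a plain-Java simulation of the job (no Hadoop classes; MergeDedupSim is a hypothetical name). The map step uses each whole line as the key, a TreeMap stands in for the shuffle that groups equal keys and sorts them, and the reduce step writes each key once. For ASCII lines like these, String ordering matches the byte order Hadoop uses to sort Text keys.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeMap;

// Plain-Java simulation of FileMerge: map -> shuffle (group + sort) -> reduce.
final class MergeDedupSim {

    static List<String> run(List<List<String>> files) {
        // "Shuffle": group values by key; TreeMap keeps the keys sorted like the framework does.
        TreeMap<String, List<String>> grouped = new TreeMap<>();
        for (List<String> file : files) {
            for (String line : file) {
                // map(): key = whole line, value = empty string
                grouped.computeIfAbsent(line, k -> new ArrayList<>()).add("");
            }
        }
        // reduce(): emit each key once, no matter how many values it collected.
        return new ArrayList<>(grouped.keySet());
    }

    public static void main(String[] args) {
        List<String> f1 = Arrays.asList("20150101 x", "20150102 y", "20150103 x", "20150104 y");
        List<String> f2 = Arrays.asList("20150105 z", "20150106 x", "20150101 y", "20150102 y");
        run(Arrays.asList(f1, f2)).forEach(System.out::println);
    }
}
```

On the sample f1.txt and f2.txt this yields seven lines, 20150101 x, 20150101 y, 20150102 y, 20150103 x, 20150104 y, 20150105 z, 20150106 x: the line 20150102 y appears in both files but is written only once.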

- FileUtil.java

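import java.io.File;

/**
 * Created by bee on 3/25/17.
 */
public class FileUtil {

    // Recursively delete a file or directory on the local file system
    public static boolean deleteDir(String path) {
        File dir = new File(path);
        if (!dir.exists()) {
            System.out.println("File (or folder) does not exist!");
            return false;
        }
        File[] children = dir.listFiles(); // null when path is a plain file
        if (children != null) {
            for (File f : children) {
                if (f.isDirectory()) {
                    // Recurse with the full path; f.getName() would resolve against the working directory
                    deleteDir(f.getPath());
                } else {
                    f.delete();
                }
            }
        }
        return dir.delete();
    }
}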
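On Java 8 or later, the same recursive delete can be written with java.nio, which walks the tree for you. This is a hedged alternative sketch (NioDelete is a hypothetical name), not what the lab code uses: Files.walk lists the directory and everything under it, and sorting the paths in reverse order puts every child before its parent, so each directory is already empty when it is deleted.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Alternative recursive delete based on java.nio (Java 8+).
final class NioDelete {

    /** Deletes root and everything under it; returns false if root did not exist. */
    static boolean deleteRecursively(Path root) throws IOException {
        if (!Files.exists(root)) {
            return false;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(root)) {
            // Reverse order: a descendant's path always sorts after its ancestor's, so it comes first here.
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
        return true;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(deleteRecursively(Paths.get("output")) ? "deleted" : "nothing to delete");
    }
}
```

Files.walk also visits hidden files such as the .crc checksum files Hadoop writes next to its output on the local file system.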

- MergeSort.java

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Partitioner;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Input: several files, each line of which holds one integer.
 * Output: one new file in which each line holds two integers: the first is the rank
 * of the second, and the second is the original integer being sorted.
 */
public class MergeSort {

    // map reads the input value, converts it to IntWritable and emits it as the output key
    public static class Map extends Mapper<Object, Text, IntWritable, IntWritable> {
        private static final IntWritable data = new IntWritable();
        private static final IntWritable ONE = new IntWritable(1);

        @Override
        public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString().trim();
            if (line.isEmpty()) {
                return; // skip blank lines instead of failing in parseInt
            }
            data.set(Integer.parseInt(line));
            context.write(data, ONE);
        }
    }

    // reduce copies the input key to the output value and writes it once per element of the
    // value list (so duplicates are kept); line_num holds the rank of the next key.
    // line_num is per reduce task, so ranks are global only with one reduce task (the default here).
    public static class Reduce extends Reducer<IntWritable, IntWritable, IntWritable, IntWritable> {
        private final IntWritable line_num = new IntWritable(1);

        @Override
        public void reduce(IntWritable key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            for (IntWritable ignored : values) {
                context.write(line_num, key);
                line_num.set(line_num.get() + 1);
            }
        }
    }

    // Custom partitioner: splits [0, maxNumber] into num_Partition equal ranges and returns the ID of
    // the range the key falls into, so every key in partition i is smaller than every key in i + 1.
    // It only takes effect when the job has more than one reduce task.
    public static class Partition extends Partitioner<IntWritable, IntWritable> {
        @Override
        public int getPartition(IntWritable key, IntWritable value, int num_Partition) {
            int maxNumber = 65223; // assumed upper bound of the input values (not Integer.MAX_VALUE)
            int bound = maxNumber / num_Partition + 1;
            int keyNumber = key.get();
            for (int i = 1; i <= num_Partition; i++) {
                if (keyNumber < bound * i && keyNumber >= bound * (i - 1)) {
                    return i - 1;
                }
            }
            // Out-of-range keys: negatives go to the first partition, values above maxNumber to the last
            // (returning -1 would make the map task fail with an illegal partition error)
            return keyNumber < 0 ? 0 : num_Partition - 1;
        }
    }

    public static void main(String[] args) throws Exception {
        FileUtil.deleteDir("output_n");
        Configuration conf = new Configuration();
        conf.set("master", "hdfs://master:9000");
        String[] otherArgs = new String[]{"input/n*.txt", "output_n"}; /* input arguments set directly */
        if (otherArgs.length != 2) {
            System.err.println("Usage: MergeSort <in> <out>");
            System.exit(2);
        }
        Job job = Job.getInstance(conf, "Merge and Sort");
        job.setJarByClass(MergeSort.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        job.setPartitionerClass(Partition.class);
        job.setOutputKeyClass(IntWritable.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
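The following plain-Java simulation (no Hadoop; MergeSortSim is a hypothetical name) sketches why range partitioning plus a running counter yields ranks: each partition holds a contiguous range of values, each partition is sorted the way a reducer receives its keys, and reading the partitions in ID order gives one globally sorted list. It reuses the assumed upper bound 65223 from the Partition class; clamping out-of-range keys into the first or last partition is a choice made for this sketch, and numPartitions stands in for the number of reduce tasks.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Plain-Java simulation of the MergeSort job (no Hadoop classes involved).
final class MergeSortSim {

    // Assumed upper bound of the input values, the same constant the Partition class uses.
    static final int MAX_NUMBER = 65223;

    /** Range partitioning: partition i holds keys in [bound * i, bound * (i + 1)). */
    static int partition(int key, int numPartitions) {
        int bound = MAX_NUMBER / numPartitions + 1;
        int p = key / bound;
        // Clamp keys outside [0, MAX_NUMBER] into the first or last partition (a sketch choice).
        return Math.max(0, Math.min(p, numPartitions - 1));
    }

    /** Returns "rank TAB value" lines, reading partitions in ID order (part-r-00000, part-r-00001, ...). */
    static List<String> run(List<Integer> values, int numPartitions) {
        List<List<Integer>> parts = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            parts.add(new ArrayList<>());
        }
        for (int v : values) { // map + partition
            parts.get(partition(v, numPartitions)).add(v);
        }
        List<String> out = new ArrayList<>();
        int rank = 1; // one counter across all partitions: matches the real job only with one reduce task
        for (List<Integer> part : parts) {
            Collections.sort(part); // the shuffle delivers each reducer's keys in sorted order
            for (int v : part) {
                out.add(rank + "\t" + v);
                rank++;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Values from n1.txt, n2.txt and n3.txt.
        List<Integer> values = Arrays.asList(33, 37, 12, 40, 4, 16, 39, 5, 1, 45, 25);
        run(values, 2).forEach(System.out::println);
    }
}
```

With the sample values, run(values, 1) and run(values, 2) give the same result, ranks 1 to 11 for 1, 4, 5, 12, 16, 25, 33, 37, 39, 40, 45: with two partitions the boundary is 32612, so every sample value lands in partition 0.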

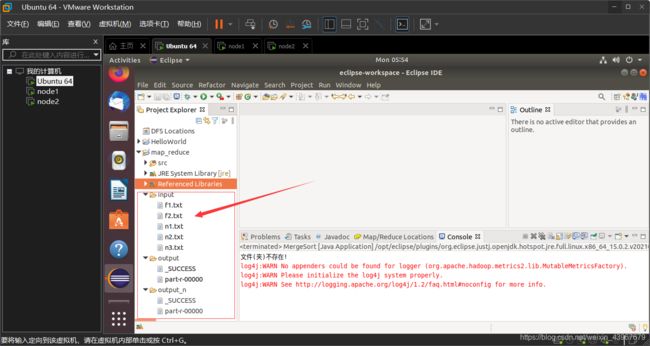

Running Demo

Notes

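A. The first time you click the Run button in Eclipse, choose Java Application.

B. After a run, right-click the project and choose Refresh; only then will the files in output show up.

- Merge and deduplicate: merge f1.txt and f2.txt and write the result to output
- Merge and sort: merge and sort n1.txt, n2.txt and n3.txt and write the result to output_n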
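If you would rather not refresh the project, the result files can also be printed directly. A minimal sketch (PrintOutput is a hypothetical name), assuming the jobs write to local output and output_n directories relative to the project root, and that the reduce output files carry the usual part-r-NNNNN names of the org.apache.hadoop.mapreduce API; the glob skips the hidden .crc checksum files and the _SUCCESS marker.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: collects the lines of all part-r-* files in a job output directory.
final class PrintOutput {

    static List<String> readParts(Path outputDir) throws IOException {
        List<Path> parts = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(outputDir, "part-r-*")) {
            for (Path p : stream) {
                parts.add(p);
            }
        }
        Collections.sort(parts); // part-r-00000, part-r-00001, ... i.e. partition order
        List<String> lines = new ArrayList<>();
        for (Path p : parts) {
            lines.addAll(Files.readAllLines(p, StandardCharsets.UTF_8));
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Assumed output directories of the two jobs, relative to the project root.
        for (String dir : new String[]{"output", "output_n"}) {
            System.out.println("== " + dir);
            readParts(Paths.get(dir)).forEach(System.out::println);
        }
    }
}
```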

Summary

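A key problem-solving skill is finding information efficiently: when you run into an unfamiliar problem, if you can quickly pick out its keywords and find a solution through a search engine, you will spend far less time on it! If this post helped, please give it a like, a favorite and a follow! Thanks!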