A join problem in Spark SQL

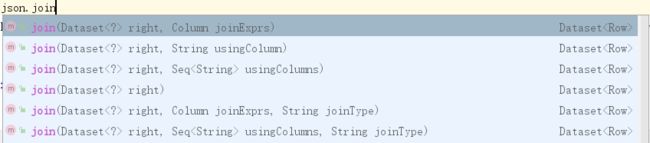

To begin, the screenshot above lists all overloads of the Dataset join operator in spark-sql_2.11, version 2.3.0.

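The data-mining jobs in my company's product are long, are split into many stages, and operate on datasets that are related to one another, so joins are unavoidable. When I first wrote the code, I used the Seq-based join: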

Dataset<Row> select = json1.select("id", "callID");

Seq<String> callID = JavaConverters.asScalaIteratorConverter(Arrays.asList("callID").iterator()).asScala().toSeq();

Dataset<Row> left = json.join(select, callID, "left");

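This approach converts the join column callID into a Scala Seq and then performs the join. When the job pipeline is very long, the job fails partway through, after Spark has optimized the plan, with the following exception: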

19/07/04 10:56:02 ERROR StreamingMain: com.calabar.phm.driver.spark.exception.OperatorRunningException: org.apache.spark.sql.AnalysisException: Resolved attribute(s) id#585L missing from f12#918,resultId#922,f6#920,cc3#914,f5#919,ee5#916,dd4#915,callID#913,f8#921,bb2#912,aa1#911,f1#917,id#929L,callID#927 in operator !Project [callID#913, aa1#911, bb2#912, cc3#914, dd4#915, ee5#916, f1#917, f12#918, f5#919, f6#920, f8#921, resultId#922, id#585L]. Attribute(s) with the same name appear in the operation: id. Please check if the right attribute(s) are used.;;

(Note: the exception pasted here comes from a later run, not the same run as the id#921L log discussed below. The exception is identical; only the numeric suffix of the id column differs.)

Physical plan:

Exchange hashpartitioning(aa1#627, cc3#630, bb2#628, ee5#632, dd4#631, id#585L, 200)

Logical plan:

Aggregate [count(1) AS count#944L]

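Looking at the physical and logical plans, id#921L is never selected, so it is reported as missing once the join is applied. The message also points out that more than one attribute named id appears in the operation. My guess, therefore, is that Spark dropped the id column while optimizing the plan, and that this is what raises the exception.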
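The same check can be done mechanically on the exception text. The following is a minimal sketch in plain Java, assuming the message has the "Resolved attribute(s) ... missing from ... in operator" shape shown in the log above. `MissingAttributeReport` and its methods are hypothetical helper names, not part of Spark. It lists, for every missing attribute, the available attributes that share its name but carry a different expression ID.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of Spark: inspects the text of a
// "Resolved attribute(s) ... missing from ..." AnalysisException message.
class MissingAttributeReport {
    private static final Pattern MESSAGE = Pattern.compile(
            "Resolved attribute\\(s\\) (.+?) missing from (.+?) in operator");

    // Strips the "#<exprId>" suffix, e.g. "id#585L" -> "id".
    static String baseName(String attribute) {
        int hash = attribute.indexOf('#');
        return hash < 0 ? attribute : attribute.substring(0, hash);
    }

    // For each missing attribute, lists the available attributes that have
    // the same name but a different expression ID.
    static List<String> sameNameCandidates(String message) {
        List<String> result = new ArrayList<>();
        Matcher m = MESSAGE.matcher(message);
        if (!m.find()) {
            return result; // not a message shape this sketch understands
        }
        List<String> missing = Arrays.asList(m.group(1).split(","));
        List<String> available = Arrays.asList(m.group(2).split(","));
        for (String miss : missing) {
            for (String avail : available) {
                String a = miss.trim();
                String b = avail.trim();
                if (baseName(a).equals(baseName(b)) && !a.equals(b)) {
                    result.add(a + " -> " + b);
                }
            }
        }
        return result;
    }
}
```

Applied to the message in the log above, this reports `id#585L -> id#929L`: a column named id is available, but under a different expression ID than the one the Project operator asks for.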

I then tried replacing the join above with other overloads:

Dataset<Row> select = json1.select("id", "callID").withColumnRenamed("callID", "callID1");

Dataset<Row> left = json.join(select, json.col("callID").equalTo(select.col("callID1")), "left");

// or

Dataset<Row> select = json1.select("id", "callID");

json.createOrReplaceTempView("tmp1");

select.createOrReplaceTempView("tmp2");

Dataset<Row> left = session.sql("select tmp1.*,tmp2.id from tmp1 left join tmp2 on tmp1.callID = tmp2.callID");

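Both of these join styles let the Spark job finish without throwing. Looking at the decompiled source of the corresponding join overloads on Dataset, I found: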

public Dataset join(Dataset right, Seq usingColumns, String joinType) {

Join joined = (Join)this.sparkSession().sessionState().executePlan(new Join(this.org$apache$spark$sql$Dataset$$planWithBarrier(), right.org$apache$spark$sql$Dataset$$planWithBarrier(), org.apache.spark.sql.catalyst.plans.JoinType..MODULE$.apply(joinType), scala.None..MODULE$)).analyzed();

return this.org$apache$spark$sql$Dataset$$withPlan(new Join(joined.left(), joined.right(), new UsingJoin(org.apache.spark.sql.catalyst.plans.JoinType..MODULE$.apply(joinType), usingColumns), scala.None..MODULE$));

}

public Dataset join(Dataset right, Column joinExprs, String joinType) {

Join plan = (Join)this.org$apache$spark$sql$Dataset$$withPlan(new Join(this.org$apache$spark$sql$Dataset$$planWithBarrier(), right.org$apache$spark$sql$Dataset$$planWithBarrier(), org.apache.spark.sql.catalyst.plans.JoinType..MODULE$.apply(joinType), new Some(joinExprs.expr()))).queryExecution().analyzed();

if (this.sparkSession().sessionState().conf().dataFrameSelfJoinAutoResolveAmbiguity()) {

LogicalPlan lanalyzed = this.org$apache$spark$sql$Dataset$$withPlan(this.org$apache$spark$sql$Dataset$$planWithBarrier()).queryExecution().analyzed();

LogicalPlan ranalyzed = this.org$apache$spark$sql$Dataset$$withPlan(right.org$apache$spark$sql$Dataset$$planWithBarrier()).queryExecution().analyzed();

if (lanalyzed.outputSet().intersect(ranalyzed.outputSet()).isEmpty()) {

return this.org$apache$spark$sql$Dataset$$withPlan(plan);

} else {

final class NamelessClass_18 extends AbstractFunction1 implements Serializable {

public static final long serialVersionUID = 0L;

public final Join plan$1;

public final Expression apply(Expression x$14) {

final class NamelessClass_1 extends AbstractPartialFunction implements Serializable {

public static final long serialVersionUID = 0L;

public final B1 applyOrElse(A1 x1, Function1 var2) {

Object var9;

if (x1 instanceof EqualTo) {

EqualTo var4 = (EqualTo)x1;

Expression a = var4.left();

Expression b = var4.right();

if (a instanceof AttributeReference) {

AttributeReference var7 = (AttributeReference)a;

if (b instanceof AttributeReference) {

AttributeReference var8 = (AttributeReference)b;

if (var7.sameRef(var8)) {

var9 = new EqualTo((Expression)this.$outer.org$apache$spark$sql$Dataset$$anonfun$$$outer().org$apache$spark$sql$Dataset$$withPlan(this.$outer.plan$1.left()).resolve(var7.name()), (Expression)this.$outer.org$apache$spark$sql$Dataset$$anonfun$$$outer().org$apache$spark$sql$Dataset$$withPlan(this.$outer.plan$1.right()).resolve(var8.name()));

return var9;

}

}

}

}

var9 = var2.apply(x1);

return var9;

}

public final boolean isDefinedAt(Expression x1) {

boolean var8;

if (x1 instanceof EqualTo) {

EqualTo var3 = (EqualTo)x1;

Expression a = var3.left();

Expression b = var3.right();

if (a instanceof AttributeReference) {

AttributeReference var6 = (AttributeReference)a;

if (b instanceof AttributeReference) {

AttributeReference var7 = (AttributeReference)b;

if (var6.sameRef(var7)) {

var8 = true;

return var8;

}

}

}

}

var8 = false;

return var8;

}

public NamelessClass_1(Dataset.$anonfun$19 $outer) {

if ($outer == null) {

throw null;

} else {

this.$outer = $outer;

super();

}

}

}

return (Expression)x$14.transform(new NamelessClass_1(this));

}

public NamelessClass_18(Dataset $outer, Join plan$1) {

if ($outer == null) {

throw null;

} else {

this.$outer = $outer;

this.plan$1 = plan$1;

super();

}

}

}

Option cond = plan.condition().map(new NamelessClass_18(this, plan));

LogicalPlan x$66 = plan.copy$default$1();

LogicalPlan x$67 = plan.copy$default$2();

JoinType x$68 = plan.copy$default$3();

return this.org$apache$spark$sql$Dataset$$withPlan(plan.copy(x$66, x$67, x$68, cond));

}

} else {

return this.org$apache$spark$sql$Dataset$$withPlan(plan);

}

}

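The two overloads are implemented differently. Judging only from the code above, the Seq overload analyzes a join with no condition and then wraps the analyzed left and right plans in a UsingJoin over usingColumns. The Column overload analyzes the join with the explicit condition and, when dataFrameSelfJoinAutoResolveAmbiguity() (the spark.sql.selfJoinAutoResolveAmbiguity setting) is enabled and the two sides share output attributes, rewrites every EqualTo whose two sides are the same attribute reference by resolving the column name separately against the left and right plans. I have not dug any deeper into why this difference leads to the exception, and would be grateful if someone more experienced could take a look.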
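As a toy illustration of the EqualTo branch in the decompiled Column overload above, here is a minimal sketch in plain Java. The classes `SelfJoinSketch` and `Attr` are hypothetical stand-ins that imitate a few Catalyst ideas; they are not Spark classes. The sketch also assumes that the analyzed join exposes the right-hand attributes under fresh expression IDs, which is an assumption on my part rather than something the code above shows.

```java
import java.util.List;

// Toy model only: these classes imitate a few Catalyst ideas and are NOT Spark classes.
class SelfJoinSketch {
    // An attribute reference: a column name plus an expression ID (the "#585" part).
    static final class Attr {
        final String name;
        final long exprId;

        Attr(String name, long exprId) {
            this.name = name;
            this.exprId = exprId;
        }

        // Two references are "the same" when their expression IDs match.
        boolean sameRef(Attr other) {
            return exprId == other.exprId;
        }

        @Override
        public String toString() {
            return name + "#" + exprId;
        }
    }

    // Looks a column up by name in one side's output, like resolve(name) in the decompiled code.
    static Attr resolve(List<Attr> output, String name) {
        for (Attr a : output) {
            if (a.name.equals(name)) {
                return a;
            }
        }
        throw new IllegalArgumentException("cannot resolve " + name);
    }

    // Mirrors the EqualTo rewrite: when both sides are the same reference, resolve each
    // name again, once against the left output and once against the right output.
    static String rewriteEqualTo(Attr a, Attr b, List<Attr> left, List<Attr> right) {
        if (a.sameRef(b)) {
            return resolve(left, a.name) + " = " + resolve(right, b.name);
        }
        return a + " = " + b; // condition left untouched
    }
}
```

With a left output of `callID#1, id#2` and a right output of `callID#3, id#4`, the degenerate condition `callID#1 = callID#1` is rewritten to `callID#1 = callID#3`, so it compares the two sides of the join instead of a column with itself. The Seq overload shown above has no comparable step.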

As far as API usage goes, try to avoid the Seq-based join, since it may trigger the exception above.