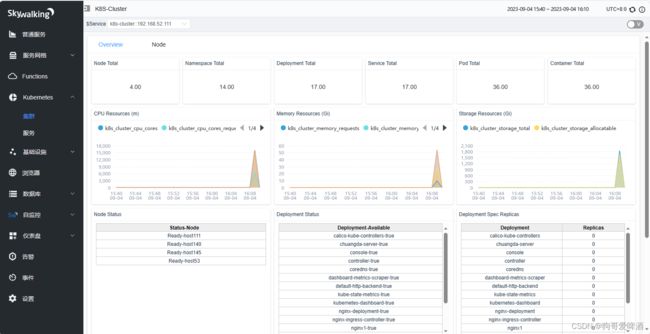

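Monitoring K8s cluster resources with SkyWalking v9.1

Monitoring K8s cluster metrics is a new feature in SkyWalking v9. When I set it up I could not find a single article online that covered it, and it took me a long time to get working, so I am recording the steps here.

1. kube-state-metrics and cAdvisor collect metrics data from K8s.

1) Install kube-state-metrics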

https://github.com/kubernetes/kube-state-metrics/tree/main/examples

Link: https://pan.baidu.com/s/121dPbeONwBfkCG4zClbf1Q

Extraction code: j9br

Apply the YAML

[root@host111 opentelemetry]# cd /home/k8s/examples/standard

[root@host111 standard]# kubectl apply -f .

Verify kube-state-metrics

Run the command

curl localhost:8080/metrics
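To check the curl output programmatically rather than by eye, a minimal sketch along these lines could be used. It assumes the endpoint returns the Prometheus text exposition format; the function name `metric_names` and the sample text (including the pod name) are made up for illustration and are not taken from a real cluster.

```python
def metric_names(exposition_text):
    """Return the set of metric names found in Prometheus text-format output."""
    names = set()
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and "# HELP" / "# TYPE" comments
        # A sample line looks like `name{label="v"} value` or `name value`.
        name = line.split("{", 1)[0].split(" ", 1)[0]
        names.add(name)
    return names


# Hypothetical sample of what /metrics might return (illustrative only).
SAMPLE = """\
# HELP kube_pod_info Information about pod.
# TYPE kube_pod_info gauge
kube_pod_info{namespace="skywalking",pod="otel-agent-abcde"} 1
kube_node_status_capacity{node="host111",resource="cpu"} 4
"""

names = metric_names(SAMPLE)
# kube-state-metrics series are prefixed with "kube_".
has_kube_metrics = any(n.startswith("kube_") for n in names)
print(sorted(names), has_kube_metrics)
```

In practice the sample string would be replaced by the saved output of the curl command above.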

2) cAdvisor

By default, cAdvisor is already integrated into the kubelet.

Verify

Start an unauthenticated access entry to the apiserver:

[root@host111 standard]# kubectl proxy --port=8081

Starting to serve on 127.0.0.1:8081

Open another terminal, then access the kubectl proxy port, specifying a node (mine is named host111):

curl http://localhost:8081/api/v1/nodes/host111/proxy/metrics/cadvisor

2. K8s OpenTelemetry Collector

Components

Receiver

Processor

Exporter

Extension

Service

Receiver

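A Receiver is how the collector takes data in, i.e. the form of the data sources the collector accepts. Receivers can support multiple data sources and both pull and push modes.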

Processor

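A Processor is a plugin that runs between the Receiver and the Exporter to process the data. Multiple Processors can be configured; they run one after another in the order given in the pipeline configuration.

Exporter

An Exporter is how the collector sends data out, i.e. the form of the destinations the collector writes to. Exporters can support multiple destinations and both pull and push modes.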

Extension

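An Extension extends the collector. Note that Extensions do not process otel data; they provide capabilities outside the telemetry data path, such as health checks, service discovery, and compression algorithms.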

Service

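Everything above configures the individual data sources or the plugins themselves. Whether they actually take effect, and in what order they are used, is configured in Service. It mainly contains the following items: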

- extensions

- pipelines

- telemetry
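Because a component only takes effect once Service references it, a small consistency check can be useful. The sketch below is an illustration, not part of the collector: `CONFIG` is a hand-written dict that mirrors the general shape of a collector config, and `check_wiring` is a hypothetical helper name.

```python
# Hand-written stand-in for a parsed collector config (component bodies omitted).
CONFIG = {
    "receivers": {"prometheus": {}},
    "processors": {"batch": {}, "memory_limiter": {}},
    "exporters": {"otlp": {}},
    "extensions": {"zpages": {}, "memory_ballast": {}},
    "service": {
        "extensions": ["zpages", "memory_ballast"],
        "pipelines": {
            "metrics": {
                "receivers": ["prometheus"],
                "processors": ["batch"],  # processors run in this listed order
                "exporters": ["otlp"],
            }
        },
    },
}


def check_wiring(config):
    """Return (undefined, unused): names Service references but nothing defines,
    and names that are defined but never referenced by Service."""
    undefined, used = [], set()
    service = config.get("service", {})
    for name in service.get("extensions", []):
        used.add(("extensions", name))
        if name not in config.get("extensions", {}):
            undefined.append(("extensions", name))
    for pipeline in service.get("pipelines", {}).values():
        for kind in ("receivers", "processors", "exporters"):
            for name in pipeline.get(kind, []):
                used.add((kind, name))
                if name not in config.get(kind, {}):
                    undefined.append((kind, name))
    unused = sorted(
        (kind, name)
        for kind in ("receivers", "processors", "exporters", "extensions")
        for name in config.get(kind, {})
        if (kind, name) not in used
    )
    return undefined, unused


undefined, unused = check_wiring(CONFIG)
print("undefined:", undefined)
print("unused:", unused)
```

With this sample, `memory_limiter` is reported as unused: it is defined under processors but not listed in the metrics pipeline, so it would not be active.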

otel-config.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-agent-conf
  namespace: skywalking
  labels:
    app: opentelemetry
    component: otel-agent-conf
data:
  otel-agent-config: |
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: 'kubernetes-cadvisor'
              scheme: https
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: node
              relabel_configs:
                - action: labelmap
                  regex: __meta_kubernetes_node_label_(.+)
                - source_labels: [ ]
                  target_label: cluster
                  replacement: 192.168.52.111 ## cluster name shown in the SkyWalking dashboard; change this
                - target_label: __address__
                  replacement: kubernetes.default.svc:443
                - source_labels: [ __meta_kubernetes_node_name ]
                  regex: (.+)
                  target_label: __metrics_path__
                  replacement: /api/v1/nodes/$${1}/proxy/metrics/cadvisor
                - source_labels: [ instance ]
                  separator: ;
                  regex: (.+)
                  target_label: node
                  replacement: $$1
                  action: replace
            - job_name: 'kube-state-metrics'
              metrics_path: /metrics
              kubernetes_sd_configs:
                - role: endpoints
              relabel_configs:
                - source_labels: [ __meta_kubernetes_service_label_app_kubernetes_io_name ]
                  regex: kube-state-metrics
                  replacement: $$1
                  action: keep
                - action: labelmap
                  regex: __meta_kubernetes_service_label_(.+)
                - source_labels: [ ]
                  target_label: cluster
                  replacement: 192.168.52.111 ## cluster name shown in the SkyWalking dashboard; change this (defaults to skywalking-showcase)
    exporters:
      otlp:
        endpoint: "otel-collector.skywalking.svc.cluster.local:4317" ## DNS name of otel-collector; change this
        tls:
          insecure: true
    processors:
      batch:
      memory_limiter:
        # 80% of maximum memory up to 2G
        limit_mib: 400
        # 25% of limit up to 2G
        spike_limit_mib: 100
        check_interval: 5s
    extensions:
      zpages: {}
      memory_ballast:
        # Memory Ballast size should be max 1/3 to 1/2 of memory.
        size_mib: 165
    service:
      extensions: [zpages, memory_ballast]
      pipelines:
        metrics:
          receivers: [prometheus]
          processors: [batch]
          exporters: [otlp]

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: otel-agent
  namespace: skywalking
  labels:
    app: opentelemetry
    component: otel-agent
spec:
  selector:
    matchLabels:
      app: opentelemetry
      component: otel-agent
  template:
    metadata:
      labels:
        app: opentelemetry
        component: otel-agent
    spec:
      containers:
        - command:
            - "/otelcol"
            - "--config=/conf/otel-agent-config.yaml"
          image: otel/opentelemetry-collector:0.83.0
          name: otel-agent
          resources:
            limits:
              cpu: 500m
              memory: 500Mi
            requests:
              cpu: 100m
              memory: 100Mi
          ports:
            - containerPort: 55679 # ZPages endpoint.
            - containerPort: 4317 # Default OpenTelemetry receiver port.
            - containerPort: 8888 # Metrics.
          env:
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
          volumeMounts:
            - name: otel-agent-config-vol
              mountPath: /conf
      volumes:
        - configMap:
            name: otel-agent-conf
            items:
              - key: otel-agent-config
                path: otel-agent-config.yaml
          name: otel-agent-config-vol

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: otel-collector-conf
  namespace: skywalking
  labels:
    app: opentelemetry
    component: otel-collector-conf
data:
  otel-collector-config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318
    processors:
      batch:
      memory_limiter:
        # 80% of maximum memory up to 2G
        limit_mib: 1500
        # 25% of limit up to 2G
        spike_limit_mib: 512
        check_interval: 5s
    extensions:
      zpages: {}
      memory_ballast:
        # Memory Ballast size should be max 1/3 to 1/2 of memory.
        size_mib: 683
    exporters:
      opencensus:
        endpoint: "http://192.168.59.66:11800" # SkyWalking OAP backend address (oapip:11800); change this
        tls:
          insecure: true
    service:
      extensions: [zpages, memory_ballast]
      pipelines:
        metrics:
          receivers: [otlp]
          processors: [batch]
          exporters: [opencensus]

---
apiVersion: v1
kind: Service
metadata:
  name: otel-collector
  namespace: skywalking
  labels:
    app: opentelemetry
    component: otel-collector
spec:
  ports:
    - name: otlp-grpc # Default endpoint for OpenTelemetry gRPC receiver.
      port: 4317
      protocol: TCP
      targetPort: 4317
    - name: otlp-http # Default endpoint for OpenTelemetry HTTP receiver.
      port: 4318
      protocol: TCP
      targetPort: 4318
    - name: metrics # Default endpoint for querying metrics.
      port: 8888
  selector:
    component: otel-collector

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: otel-collector
  namespace: skywalking
  labels:
    app: opentelemetry
    component: otel-collector
spec:
  selector:
    matchLabels:
      app: opentelemetry
      component: otel-collector
  minReadySeconds: 5
  progressDeadlineSeconds: 120
  replicas: 1 #TODO - adjust this to your own requirements
  template:
    metadata:
      labels:
        app: opentelemetry
        component: otel-collector
    spec:
      containers:
        - command:
            - "/otelcol"
            - "--config=/conf/otel-collector-config.yaml"
          image: otel/opentelemetry-collector:0.83.0
          name: otel-collector
          resources:
            limits:
              cpu: 1
              memory: 2Gi
            requests:
              cpu: 200m
              memory: 400Mi
          ports:
            - containerPort: 55679 # Default endpoint for ZPages.
            - containerPort: 4317 # Default endpoint for OpenTelemetry receiver.
            - containerPort: 14250 # Default endpoint for Jaeger gRPC receiver.
            - containerPort: 14268 # Default endpoint for Jaeger HTTP receiver.
            - containerPort: 9411 # Default endpoint for Zipkin receiver.
            - containerPort: 8888 # Default endpoint for querying metrics.
          env:
            - name: MY_POD_IP
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: status.podIP
          volumeMounts:
            - name: otel-collector-config-vol
              mountPath: /conf
            # - name: otel-collector-secrets
            #   mountPath: /secrets
      volumes:
        - configMap:
            name: otel-collector-conf
            items:
              - key: otel-collector-config
                path: otel-collector-config.yaml
          name: otel-collector-config-vol
        # - secret:
        #     name: otel-collector-secrets
        #     items:
        #       - key: cert.pem
        #         path: cert.pem
        #       - key: key.pem
        #         path: key.pem

Three places need to be changed

1. replacement: 192.168.52.111 ## cluster name shown in the SkyWalking dashboard; change this

2. endpoint: "otel-collector.skywalking.svc.cluster.local:4317" ## DNS name of otel-collector; change this

3. endpoint: "http://192.168.59.66:11800" # SkyWalking OAP backend address (oapip:11800); change this

Apply the YAML
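The cAdvisor job in otel-config.yaml builds its scrape path with the replacement `/api/v1/nodes/$${1}/proxy/metrics/cadvisor`. The doubled `$$` is how the collector config escapes a literal `$`, so that Prometheus receives `${1}` as a capture-group reference. The sketch below imitates that rewrite to show the path a node ends up with. It is a simplified model of a `replace` relabel rule, not Prometheus code, and the helper names are invented for illustration.

```python
import re


def unescape_collector(value):
    """Model the collector's config loading, where `$$` stands for a literal `$`."""
    return value.replace("$$", "$")


def relabel_replace(source_value, regex, replacement):
    """Imitate a Prometheus `replace` relabel action: the regex must match the
    whole source value, and $N / ${N} in the replacement are capture groups."""
    match = re.fullmatch(regex, source_value)
    if match is None:
        return None  # rule does not apply; the target label stays unchanged
    # Translate $1 / ${1} references into Python's \g<1> template syntax.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


raw = "/api/v1/nodes/$${1}/proxy/metrics/cadvisor"  # as written in the ConfigMap
path = relabel_replace("host111", "(.+)", unescape_collector(raw))
print(path)
```

For the node host111 this yields the same path that was queried by hand through kubectl proxy in step 1, which is a quick way to confirm the rule does what is intended.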

[root@host111 standard]# cd /home/k8s/opentelemetry

[root@host111 opentelemetry]# kubectl apply -f otel-config.yaml

If you run into permission problems, run

kubectl apply -f clusterRoleBinding.yaml

clusterRoleBinding.yaml

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: skywalking # name of the ClusterRoleBinding
subjects:
  - kind: ServiceAccount
    name: default # name of the ServiceAccount
    namespace: skywalking # namespace of the ServiceAccount
roleRef:
  kind: ClusterRole
  name: cluster-admin # the highest-privilege role in the K8s cluster
  apiGroup: rbac.authorization.k8s.io

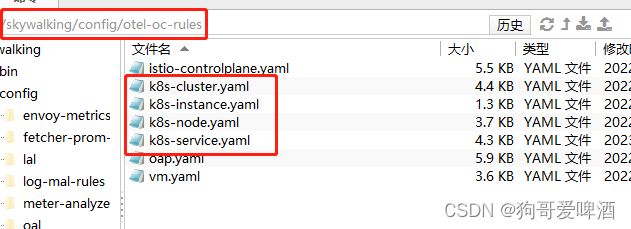

3. Configure the SkyWalking OpenTelemetry receiver

Go to the SkyWalking installation directory

Edit the configuration file application.yml in the config directory

receiver-otel:
  selector: ${SW_OTEL_RECEIVER:default}
  default:
    enabledHandlers: ${SW_OTEL_RECEIVER_ENABLED_HANDLERS:"oc"}
    enabledOcRules: ${SW_OTEL_RECEIVER_ENABLED_OC_RULES:"istio-controlplane,k8s-cluster,k8s-node,k8s-service,oap,vm"}
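Each value in this block has the form `${ENV_NAME:default}`: the environment variable wins if it is set, otherwise the text after the colon is used. The sketch below models that lookup so the effective rule list can be reasoned about. It is a simplified illustration, not SkyWalking's actual loader; `resolve` is a hypothetical helper, and stripping the surrounding double quotes is an assumption made for readability.

```python
import re

# Matches ${NAME:default}; NAME stops at the first colon.
PLACEHOLDER = re.compile(r"^\$\{([A-Za-z_][A-Za-z0-9_]*):(.*)\}$", re.S)


def resolve(value, env):
    """Return env[NAME] if present, else the default written in the placeholder."""
    match = PLACEHOLDER.match(value)
    if match is None:
        return value  # plain value, nothing to substitute
    name, default = match.group(1), match.group(2)
    return env.get(name, default).strip('"')  # quote stripping is an assumption


RULES = '${SW_OTEL_RECEIVER_ENABLED_OC_RULES:"istio-controlplane,k8s-cluster,k8s-node,k8s-service,oap,vm"}'

default_rules = resolve(RULES, {}).split(",")
override_rules = resolve(
    RULES, {"SW_OTEL_RECEIVER_ENABLED_OC_RULES": "k8s-cluster,k8s-node"}
).split(",")
print(default_rules)
print(override_rules)
```

In real use the second argument would be `os.environ`. Under this model the K8s dashboards depend on `k8s-cluster`, `k8s-node`, and `k8s-service` being present in the resolved list.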

Restart the OAP service

[root@node66 skywalking]# ps -ef | grep oap

[root@node66 skywalking]# kill -9 31684

[root@node66 skywalking]# ./bin/oapService.sh

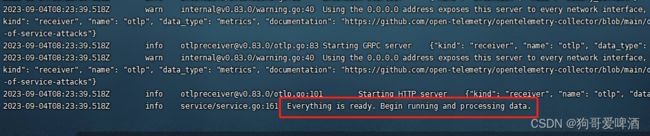

[root@node66 skywalking]# tail -400f ./logs/skywalking-oap-server.log