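Deploying EFK (Elasticsearch + Kibana + Fluentd) on Kubernetes

Test environment versions

Kubernetes: v1.14.1

Elasticsearch image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2

Kibana image: docker.elastic.co/kibana/kibana:7.6.2

Fluentd image: quay.io/fluentd_elasticsearch/fluentd:v3.0.1

Introduction

1. Elasticsearch

Elasticsearch is a real-time, distributed, and scalable search engine that supports full-text and structured search. It is commonly used to index and search large volumes of log data, and it can also search many other kinds of documents.

2. Kibana

Elasticsearch is usually deployed together with Kibana, a powerful data visualization dashboard for Elasticsearch. Kibana lets you browse the log data stored in Elasticsearch through a web interface.

3. Fluentd

Fluentd is a popular open-source data collector. We will install Fluentd on the Kubernetes cluster nodes, where it reads the container log files, filters and transforms the log data, and then ships it to the Elasticsearch cluster, which indexes and stores it.

If you already understand the basics of EFK and only want to test it, you can install the resource manifests of the official Kubernetes addon directly: https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/fluentd-elasticsearch/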

Creating the Elasticsearch cluster

Before creating the Elasticsearch cluster, we first create a namespace that will hold all logging-related resource objects.

Create a file named namespace-logging.yaml:

vim namespace-logging.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: logging

Then create the manifest with kubectl, which creates a namespace named logging:

[root@master ~]# kubectl create -f namespace-logging.yaml

namespace/logging created

[root@master ~]# kubectl get ns

NAME STATUS AGE

default Active 5d6h

kube-node-lease Active 5d6h

kube-public Active 5d6h

kube-system Active 5d6h

logging Active 5d6h

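Next we can deploy the EFK components, starting with a 3-node Elasticsearch cluster.

A key setting for clusters that use Zen discovery is discovery.zen.minimum_master_nodes=N/2+1, where N is the number of master-eligible nodes in the Elasticsearch cluster. With our 3 nodes, the value should therefore be 2. This way, if one node is temporarily disconnected from the cluster, the other two can elect a new master, and the cluster keeps running while the last node tries to rejoin. Keep this parameter in mind when scaling the Elasticsearch cluster. Note that Elasticsearch 7.x, the version used here, manages the voting quorum itself and ignores this setting; the first election is bootstrapped through cluster.initial_master_nodes instead.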
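The quorum rule above is plain integer arithmetic. As a minimal sketch, the hypothetical helper quorum below computes N/2+1 in shell for a given number of master-eligible nodes:

```shell
#!/bin/sh
# Quorum of master-eligible nodes: floor(N / 2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

quorum 3   # prints 2
quorum 5   # prints 3
```

The division is integer division, so an even node count such as 4 still needs 3 votes.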

First create a headless service named elasticsearch. Create a file elasticsearch-svc.yaml with the following content:

vim elasticsearch-svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

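This defines a Service named elasticsearch with the selector app=elasticsearch. Once the Elasticsearch StatefulSet is associated with this service, the service returns DNS A records for the Elasticsearch Pods labeled app=elasticsearch. Setting clusterIP: None makes it a headless service. Finally, ports 9200 and 9300 are defined for the REST API and for inter-node communication, respectively.

Create the service with kubectl: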

[root@master es]# kubectl create -f elasticsearch-svc.yaml

service/elasticsearch created

[root@master es]# kubectl get svc -n logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None 9200/TCP,9300/TCP 55m

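We now have a headless service and a stable domain, .elasticsearch.logging.svc.cluster.local, for the Pods. Next we use a StatefulSet to create the actual Elasticsearch Pods.

A Kubernetes StatefulSet gives each Pod a stable identity and persistent storage. Elasticsearch needs stable storage so that its data survives when a Pod is rescheduled or restarted, which is why we manage the Pods with a StatefulSet.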
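To make the "stable identity" concrete: each StatefulSet Pod behind a headless service gets a DNS name of the form pod.service.namespace.svc.cluster.local. The sketch below prints those names, assuming the StatefulSet is called es with 3 replicas (as in the manifest used in this article) and the default cluster domain cluster.local; pod_fqdns is a hypothetical helper.

```shell
#!/bin/sh
# Print the stable DNS name of each Pod of a StatefulSet behind a headless service.
# Arguments: StatefulSet name, service name, namespace, replica count.
pod_fqdns() {
  sts=$1; svc=$2; ns=$3; replicas=$4
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "${sts}-${i}.${svc}.${ns}.svc.cluster.local"
    i=$((i + 1))
  done
}

pod_fqdns es elasticsearch logging 3
```

These names stay the same when a Pod is rescheduled, which is what lets the Elasticsearch nodes find each other through the elasticsearch service.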

Create the StatefulSet manifest:

vim elasticsearch-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
  namespace: logging
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      nodeSelector:
        es: log
      initContainers:
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
          ports:
            - name: rest
              containerPort: 9200
            - name: inter
              containerPort: 9300
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 1000m
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: cluster.initial_master_nodes
              value: "es-0,es-1,es-2"
            - name: discovery.zen.minimum_master_nodes
              value: "2"
            - name: discovery.seed_hosts
              value: "elasticsearch"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
            - name: network.host
              value: "0.0.0.0"
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: es-data-db
        resources:
          requests:
            storage: 50Gi

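1. replicas: 3 sets the number of replicas.

2. matchLabels is set to app=elasticsearch, so the Pod template's .spec.template.metadata.labels must also contain the app=elasticsearch label.

3. cluster.name: the name of the Elasticsearch cluster, here k8s-logs.

4. node.name: the node name, taken from metadata.name. It resolves to es-[0,1,2], depending on the ordinal assigned to the Pod.

5. discovery.zen.minimum_master_nodes: set to (N/2) + 1, where N is the number of master-eligible nodes in the cluster. We have 3, so the value is 2.

6. ES_JAVA_OPTS: set to -Xms512m -Xmx512m, which tells the JVM to use a minimum and maximum heap of 512 MB.

Note that I added a nodeSelector here, so the label es=log must be added to every node that should run Elasticsearch; otherwise the Pods cannot be scheduled. Run the following command:

[root@master es]# kubectl label nodes <node-name> es=log

Because we use volumeClaimTemplates to define the persistence template, Kubernetes uses it to create a PersistentVolumeClaim, and through it a PersistentVolume, for each Pod. The access mode is ReadWriteOnce, which means the volume can only be mounted read-write by a single node. Most importantly, the template references a StorageClass named es-data-db, which we create below with NFS as the backend. Finally, each PersistentVolume is 50Gi in size; adjust this value to your actual needs.

Creating the StorageClass for Kubernetes persistent storage

We use NFS as the backend storage. Install NFS on the master node and export the /data/k8s/ directory; a separate machine can also serve as the storage host.

Install NFS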

[root@master ~]# systemctl stop firewalld.service

[root@master ~]# yum -y install nfs-utils rpcbind

[root@master ~]# mkdir -p /data/k8s

[root@master ~]# chmod 755 /data/k8s

[root@master ~]# vim /etc/exports

/data/k8s 192.168.241.0/24(rw,sync,no_root_squash)

[root@master ~]# systemctl start rpcbind.service

[root@master ~]# systemctl start nfs.service

Create the provisioner, using the nfs-client automatic provisioner:

vim nfs-client.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.241.129
            - name: NFS_PATH
              value: /data/k8s
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.241.129
            path: /data/k8s

Create the ServiceAccount and bind the required permissions to it:

vim nfs-client-sa.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

Create the StorageClass:

vim elasticsearch-storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: es-data-db
provisioner: fuseim.pri/ifs

Deploy

[root@master ~]# kubectl create -f nfs-client.yaml

[root@master ~]# kubectl create -f nfs-client-sa.yaml

[root@master ~]# kubectl create -f elasticsearch-storageclass.yaml

[root@master ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-74f78549f6-h2xd8 1/1 Running 0 97m

Now deploy the Elasticsearch StatefulSet with kubectl:

[root@master es]# kubectl create -f elasticsearch-statefulset.yaml

statefulset.apps/es created

[root@master es]# kubectl get sts -n logging

NAME READY AGE

es 3/3 80m

[root@master es]# kubectl get po -n logging

NAME READY STATUS RESTARTS AGE

es-0 1/1 Running 0 80m

es-1 1/1 Running 0 85m

es-2 1/1 Running 0 90m

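Once the Pods are deployed, we can check that the Elasticsearch cluster is working by calling its REST API. Use the following command to forward local port 9200 to the same port on one of the Elasticsearch nodes (for example es-0):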

[root@master es]# kubectl port-forward es-0 9200:9200 --namespace=logging

Forwarding from 127.0.0.1:9200 -> 9200

Forwarding from [::1]:9200 -> 9200

Then, in another terminal window, send the following requests:

[root@master ~]# curl http://localhost:9200/

[root@master ~]# curl 'http://localhost:9200/_cluster/state?pretty'
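For a one-word summary, the _cluster/health API reports an overall status of green, yellow, or red. A small sketch, assuming the port-forward above is still running; health_status is a hypothetical helper that pulls the status field out of the JSON response with sed:

```shell
#!/bin/sh
# Read a _cluster/health JSON document on stdin and print its "status" value.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Example with a canned response; against the cluster you would run:
#   curl -s http://localhost:9200/_cluster/health | health_status
echo '{"cluster_name":"k8s-logs","status":"green","number_of_nodes":3}' | health_status
```

The canned JSON here is illustrative only, not output captured from the cluster in this article.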

The first request (to /) returns output like the following:

{
  "name" : "es-0",
  "cluster_name" : "k8s-logs",
  "cluster_uuid" : "9AFOqPu0S2-8rqwTec3drw",
  "version" : {
    "number" : "7.6.2",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",
    "build_date" : "2020-03-26T06:34:37.794943Z",
    "build_snapshot" : false,
    "lucene_version" : "8.4.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

Creating the Kibana service

The Elasticsearch cluster is up, so next we deploy Kibana. Create a file named kibana.yaml with the following content:

vim kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  ports:
    - port: 5601
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      nodeSelector:
        es: log
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.6.2
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 1000m
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch:9200
          ports:
            - containerPort: 5601

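This defines two resource objects, a Service and a Deployment. To make testing easier, the Service is of type NodePort. The Kibana Pod configuration is simple; the only thing to note is the ELASTICSEARCH_HOSTS environment variable, which sets the endpoint and port of the Elasticsearch cluster. Kubernetes DNS is all we need here: the endpoint is the service name elasticsearch, and because it is a headless service, the name resolves to the list of IP addresses of the 3 Elasticsearch Pods.

Create it with kubectl: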

[root@master kibana]# kubectl create -f kibana.yaml

service/kibana created

deployment.apps/kibana created

After creation, check the status of the Kibana Pod:

[root@master kibana]# kubectl get pods --namespace=logging

NAME READY STATUS RESTARTS AGE

es-0 1/1 Running 0 85m

es-1 1/1 Running 0 84m

es-2 1/1 Running 0 83m

kibana-5c565c47dd-xj4bd 1/1 Running 0 80m

[root@master kibana]# kubectl get svc -n logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None 9200/TCP,9300/TCP 41h

kibana NodePort 10.99.98.23 5601:30417/TCP 41h

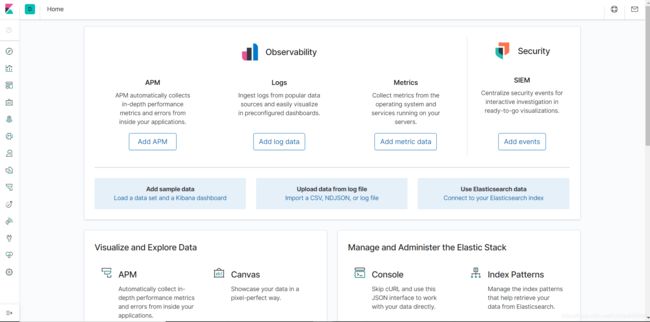

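If the Pod is in the Running state, the application has been deployed successfully, and Kibana can be reached through the NodePort. Open http://<any-node-IP>:30417 in a browser; if you see the welcome page shown in the screenshot, Kibana has been deployed to the Kubernetes cluster.

Deploying Fluentd

Fluentd is an efficient log aggregator written in Ruby, and it scales well. For most companies, Fluentd is efficient enough and uses relatively few resources. Another tool, Fluent-bit, is more lightweight and uses even fewer resources, but its plugin ecosystem is smaller than Fluentd's. Overall, Fluentd is more mature and more widely used, so we also use Fluentd as the log collector here.

How it works

Fluentd reads log data from a given set of data sources, processes it (converts it into a structured format), and forwards it to other services such as Elasticsearch or object storage.

1. Fluentd first reads data from multiple log sources.

2. It structures and tags the data.

3. It then sends the data to one or more target services according to the matching tags.

Configuration

Log source configuration

To collect the logs of all containers on the Kubernetes nodes, for example, we need the following log source configuration: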

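<source>
  @id fluentd-containers.log
  @type tail # Built-in Fluentd input; it keeps reading new log lines from the source files.
  path /var/log/containers/*.log # Docker container logs mounted from the host
  pos_file /var/log/es-containers.log.pos
  tag raw.kubernetes.* # Tag assigned to these logs
  read_from_head true
  # Parse lines in several formats into structured records
  <parse>
    @type multi_format # Uses the multi-format-parser plugin
    <pattern>
      format json # JSON parser
      time_key time # Field that holds the event time
      time_format %Y-%m-%dT%H:%M:%S.%NZ # Time format
    </pattern>
    <pattern>
      format /^(?
    </pattern>
  </parse>
</source>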

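Some of the parameters above are explained below:

1. id: a unique identifier for this log source, which can be used to further filter and route the structured log data.

2. type: a built-in Fluentd directive. tail tells Fluentd to keep reading new data from the position where it last stopped, like the tail command. Another input type is http, which receives events through HTTP requests.

3. path: a parameter specific to the tail type. It tells Fluentd to collect all logs under /var/log/containers, the directory on a Kubernetes node where the stdout logs of running Docker containers can be found.

4. pos_file: a checkpoint. If the Fluentd process restarts, it uses the position stored in this file to resume log collection.

5. tag: a custom string used to match the log source against outputs or filters. Fluentd routes log data by matching source tags against output tags.

Routing configuration

The above configures the log source. Next, let's see how to send the log data to Elasticsearch: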

<match **>
  @id elasticsearch
  @type elasticsearch
  @log_level info
  include_tag_key true
  type_name fluentd
  host "#{ENV['OUTPUT_HOST']}"
  port "#{ENV['OUTPUT_PORT']}"
  logstash_format true
  <buffer>
    @type file
    path /var/log/fluentd-buffers/kubernetes.system.buffer
    flush_mode interval
    retry_type exponential_backoff
    flush_thread_count 2
    flush_interval 5s
    retry_forever
    retry_max_interval 30
    chunk_limit_size "#{ENV['OUTPUT_BUFFER_CHUNK_LIMIT']}"
    queue_limit_length "#{ENV['OUTPUT_BUFFER_QUEUE_LIMIT']}"
    overflow_action block
  </buffer>
</match>

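1. match: identifies an output target, followed by a pattern that is matched against event tags. We want to capture all logs and send them to Elasticsearch, so it is set to **.

2. id: a unique identifier for the target.

3. type: the identifier of the output plugin. We want to write to Elasticsearch, so it is set to elasticsearch, which is provided by the fluent-plugin-elasticsearch output plugin.

4. log_level: sets the level of this plugin's own log output. With info, the plugin logs messages at that level and above (INFO, WARNING, ERROR).

5. host/port: the address of Elasticsearch. Credentials can be configured here too, but our Elasticsearch needs no authentication, so host and port are enough.

6. logstash_format: Elasticsearch builds an inverted index over the log data for searching. With logstash_format set to true, Fluentd forwards the structured log data in Logstash format.

7. Buffer: Fluentd can buffer data while the target is unavailable, for example during a network failure or when Elasticsearch is down. The buffer configuration also helps reduce disk I/O.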
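With logstash_format enabled, the Elasticsearch output plugin writes to one index per day, named from a prefix and the event date. The sketch below reproduces that naming for events logged today, assuming the plugin's default date format (%Y.%m.%d, in UTC). index_name is a hypothetical helper, and k8s is the prefix set through logstash_prefix in the ConfigMap later in this article.

```shell
#!/bin/sh
# Build the daily index name used with logstash_format: <prefix>-YYYY.MM.DD (UTC).
index_name() {
  printf '%s-%s\n' "$1" "$(date -u +%Y.%m.%d)"
}

index_name k8s
```

This is why a Kibana index pattern such as k8s-* matches every daily index written with that prefix.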

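Filtering

A Kubernetes cluster runs many applications and holds a lot of historical data, so we may want to collect the logs of only some applications. For example, to collect only the logs of Pods that carry the label logging=true, we use a filter, as shown below: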

# Remove unneeded attributes
<filter kubernetes.**>
  @type record_transformer
  remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
</filter>
# Keep only the logs of Pods labeled logging=true
<filter kubernetes.**>
  @id filter_log
  @type grep
  <regexp>
    key $.kubernetes.labels.logging
    pattern ^true$
  </regexp>
</filter>

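Installation

To collect the logs of the Kubernetes cluster, we deploy Fluentd with a DaemonSet controller. This way it can collect logs from the Kubernetes nodes, and one Fluentd container is always running on every node in the cluster. You could also install it in one step with Helm, but to understand more of the implementation details we install it manually here.

We provide the Fluentd configuration through a ConfigMap object. Create a file fluentd-configmap.yaml with the following content: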

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: logging
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail # Built-in Fluentd input; it keeps reading new log lines from the source files.
      path /var/log/containers/*.log # Docker container logs mounted from the host
      pos_file /var/log/es-containers.log.pos
      tag raw.kubernetes.* # Tag assigned to these logs
      read_from_head true
      # Parse lines in several formats into structured records
      <parse>
        @type multi_format # Uses the multi-format-parser plugin
        <pattern>
          format json # JSON parser
          time_key time # Field that holds the event time
          time_format %Y-%m-%dT%H:%M:%S.%NZ # Time format
        </pattern>
        <pattern>
          format /^(?
        </pattern>
      </parse>
    </source>
    # Detect exceptions in the log output and forward each one as a single log entry
    # https://github.com/GoogleCloudPlatform/fluent-plugin-detect-exceptions
    # Match logs tagged raw.kubernetes.**
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions # Uses the detect-exceptions plugin to handle stack traces
      remove_tag_prefix raw # Remove the raw prefix
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
    # Concatenate logs
    <filter **>
      @id filter_concat
      @type concat # Fluentd filter plugin that joins multi-line logs split across several events
      key message
      multiline_end_regexp /\n$/ # A line ending in "\n" closes the record
      separator ""
    </filter>
    # Add Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Fix JSON fields in ES
    # Plugin: https://github.com/repeatedly/fluent-plugin-multi-format-parser
    <filter kubernetes.**>
      @id filter_parser
      @type parser # Used with the multi-format-parser plugin
      key_name log # Name of the field in the record to parse
      reserve_data true # Keep the original key-value pairs in the parsed result
      remove_key_name_field true # Remove the key_name field after it is parsed successfully
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>
    # Remove some redundant attributes
    <filter kubernetes.**>
      @type record_transformer
      remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
    </filter>
    # Keep only the logs of Pods labeled logging=true
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>
  ###### Listener configuration, typically used for log aggregation ######
  forward.input.conf: |-
    # Listen for messages sent over TCP
    <source>
      @id forward
      @type forward
    </source>
  output.conf: |-
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true
      host elasticsearch
      port 9200
      logstash_format true
      logstash_prefix k8s # Set the index prefix to k8s
      request_timeout 30s
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>

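The fluentd-config ConfigMap created above is mounted into the Fluentd container through volumes in the DaemonSet manifest (fluentd-daemonset.yaml). To control which nodes have their logs collected, the DaemonSet also sets a nodeSelector:

nodeSelector:
  beta.kubernetes.io/fluentd-ds-ready: "true"

In other words, to collect logs from a node, the node must carry the label above, so we label only the nodes whose logs we want.

To collect logs on additional nodes, label them with the following command:

kubectl label nodes <node-name> beta.kubernetes.io/fluentd-ds-ready=true

Also, because our cluster was set up with kubeadm, the master node is tainted by default. To collect logs from the master node as well, add a toleration:

tolerations:
- operator: Exists

Another thing to watch is the Docker root directory, which you can check with docker info:

[root@node2 ~]# docker info

Docker Root Dir: /var/lib/docker

The DaemonSet must mount the containers directory under this root, because the files under /var/log/containers are symlinks into it. With the default root shown above, that directory is /var/lib/docker/containers. If you have moved the Docker root directory, for example to /data/docker, mount /data/docker/containers instead. This step is important: without the right mount, Fluentd cannot read the log files.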
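The article applies fluentd-daemonset.yaml but does not list it. The block below is a minimal sketch of what such a file could contain, written out with a shell heredoc. The object kinds and the name fluentd-es follow the kubectl output shown in this article, and the image, nodeSelector, and toleration come from the text; the RBAC rules, the k8s-app label, the volume names, and the /etc/fluent/config.d mount path are assumptions modeled on the upstream fluentd-elasticsearch addon, so check them against your image before use.

```shell
#!/bin/sh
# Write a hypothetical fluentd-daemonset.yaml into the current directory.
cat > fluentd-daemonset.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: logging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd-es
rules:
- apiGroups: [""]
  resources: ["namespaces", "pods"]   # assumed: read access for the metadata filter
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentd-es
subjects:
- kind: ServiceAccount
  name: fluentd-es
  namespace: logging
roleRef:
  kind: ClusterRole
  name: fluentd-es
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es
  namespace: logging
  labels:
    k8s-app: fluentd-es
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
    spec:
      serviceAccountName: fluentd-es
      nodeSelector:
        beta.kubernetes.io/fluentd-ds-ready: "true"
      tolerations:
      - operator: Exists
      containers:
      - name: fluentd-es
        image: quay.io/fluentd_elasticsearch/fluentd:v3.0.1
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: containers
          mountPath: /var/lib/docker/containers   # adjust to your Docker root dir
          readOnly: true
        - name: config-volume
          mountPath: /etc/fluent/config.d          # assumed config directory of the image
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: containers
        hostPath:
          path: /var/lib/docker/containers        # adjust to your Docker root dir
      - name: config-volume
        configMap:
          name: fluentd-config
EOF
```

If your Docker root directory is not the default, change both /var/lib/docker/containers paths before creating the resources.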

Create the ConfigMap object and the DaemonSet:

[root@master fluentd]# kubectl create -f fluentd-configmap.yaml

configmap "fluentd-config" created

[root@master fluentd]# kubectl create -f fluentd-daemonset.yaml

serviceaccount "fluentd-es" created

clusterrole.rbac.authorization.k8s.io "fluentd-es" created

clusterrolebinding.rbac.authorization.k8s.io "fluentd-es" created

daemonset.apps "fluentd-es" created

After creation, list the Pods to check that the deployment succeeded:

[root@master fluentd]# kubectl get po -n logging

NAME READY STATUS RESTARTS AGE

es-0 1/1 Running 1 46h

es-1 1/1 Running 1 46h

es-2 1/1 Running 1 46h

fluentd-es-9b9c6 1/1 Running 0 6s

fluentd-es-jlr69 1/1 Running 0 6s

fluentd-es-psccl 1/1 Running 0 6s

kibana-749ccd7d79-bsfgd 1/1 Running 0 34m

Once Fluentd is running, it can send logs to ES. However, we filter for Pods labeled logging=true, so no data is collected yet.

Example 1: deploy a simple test application. Create a file counter.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: counter
  labels:
    logging: "true"
spec:
  containers:
    - name: count
      image: busybox
      args: [/bin/sh, -c,
             'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

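This Pod simply prints log messages to stdout, so Fluentd should pick them up and the data should then appear in Kibana. Create the Pod with kubectl (kubectl create -f counter.yaml) and check that it is running: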

[root@master pod]# kubectl get po

NAME READY STATUS RESTARTS AGE

counter 1/1 Running 0 5h20m

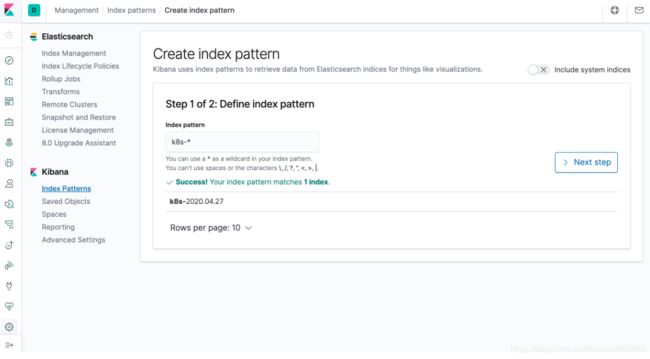

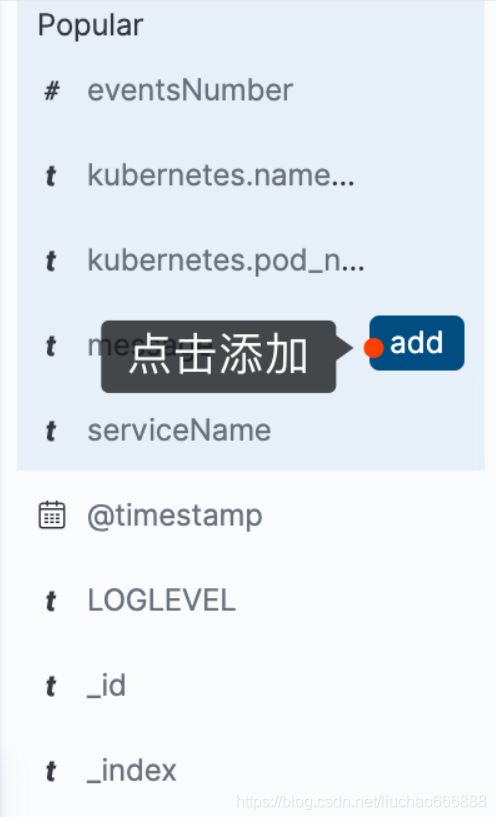

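Once the Pod is created and running, go back to the Kibana dashboard, click the Management icon at the bottom of the left-hand menu, then click Index Patterns under Kibana to start importing index data.

Here we configure the Elasticsearch indices we need. The Fluentd configuration above uses the Logstash format with the prefix k8s, so entering k8s-* in the text box is enough to match the Kubernetes log data collected in the Elasticsearch cluster. Then click Next step to reach the following page: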

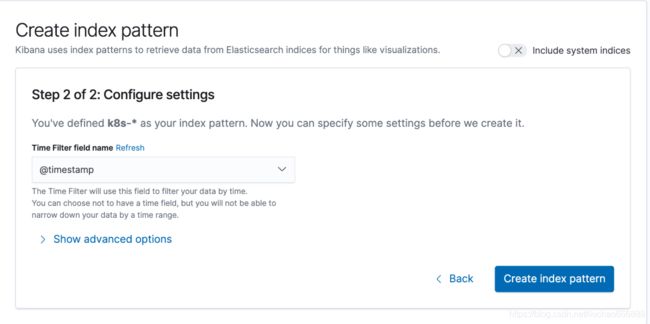

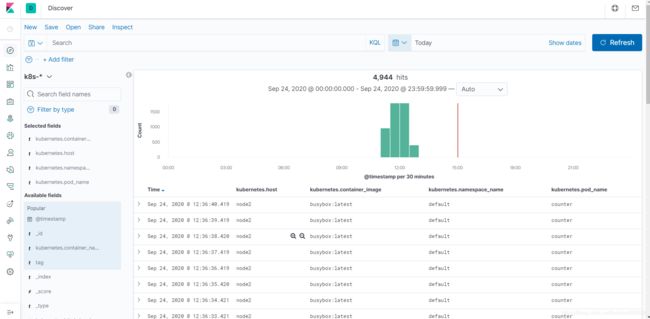

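On this page, choose the field used to filter log data by time: select @timestamp in the drop-down list and click Create index pattern. When that is done, click Discover in the left-hand navigation menu to see a histogram and the most recently collected log data.

Example 2: deploy a simple nginx test application. Create a file nginx-deployment.yaml with the following content: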

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  namespace: default
  labels:
    nginx: "true"
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
      release: stabel
  template:
    metadata:
      labels:
        app: nginx
        nginx: "true"
        release: stabel
    spec:
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - name: http
              containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
    nginx: "true"
    release: stabel
  namespace: default
spec:
  type: NodePort
  selector:
    app: nginx
    nginx: "true"
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 30002

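Note the nginx: "true" label; the ConfigMap has to be updated to match it.

In the existing ConfigMap, extend the grep filter so that it accepts either label:

<filter kubernetes.**>
  @id filter_log
  @type grep
  <or>
    <regexp>
      key $.kubernetes.labels.logging
      pattern ^true$
    </regexp>
    <regexp>
      key $.kubernetes.labels.nginx # newly added: match the nginx=true label
      pattern ^true$
    </regexp>
  </or>
</filter>

and update output.conf: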

output.conf: |-
  <match **>
    @id elasticsearch
    @type elasticsearch
    @log_level info
    include_tag_key true
    host elasticsearch
    port 9200
    logstash_format true
    logstash_prefix nginx # changed from k8s: logs are now written to nginx-* indices
    request_timeout 30s
    <buffer>
      @type file
      path /var/log/fluentd-buffers/kubernetes.system.buffer
      flush_mode interval
      retry_type exponential_backoff
      flush_thread_count 2
      flush_interval 5s
      retry_forever
      retry_max_interval 30
      chunk_limit_size 2M
      queue_limit_length 8
      overflow_action block
    </buffer>
  </match>

Re-apply the ConfigMap and the Fluentd DaemonSet:

[root@master fluentd]# kubectl apply -f fluentd-configmap.yaml

[root@master fluentd]# kubectl apply -f fluentd-daemonset.yaml

再按照counter的步骤到kibana中添加索引查询数据,显示数据如下

日志分析

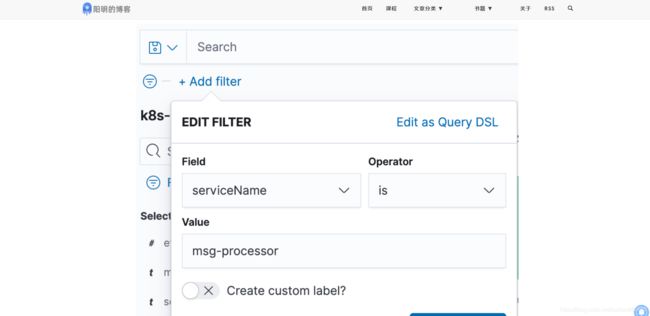

上面我们已经可以将应用日志收集起来了,下面我们来使用一个应用演示如何分析采集的日志。示例应用会输出如下所示的 JSON 格式的日志信息

{"LOGLEVEL":"WARNING","serviceName":"msg-processor","serviceEnvironment":"staging","message":"WARNING client connection terminated unexpectedly."}

{"LOGLEVEL":"INFO","serviceName":"msg-processor","serviceEnvironment":"staging","message":"","eventsNumber":5}

{"LOGLEVEL":"INFO","serviceName":"msg-receiver-api","serviceEnvironment":"staging","volume":14,"message":"API received messages"}

{"LOGLEVEL":"ERROR","serviceName":"msg-receiver-api","serviceEnvironment":"staging","message":"ERROR Unable to upload files for processing"}

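Because JSON logs are easy to parse, once the logs arrive in ES in structured form we can search by specific field values instead of by free text. Plain-text logs can be structured too, but then every application has its own format and needs its own parsing rules, which is tedious. We therefore recommend emitting all logs as JSON.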
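As a rough illustration of searching by field value rather than free text, the sketch below counts log lines per LOGLEVEL in shell. count_by_level is a hypothetical helper, and the sample lines are shortened versions of the ones shown above.

```shell
#!/bin/sh
# Count JSON log lines per LOGLEVEL value; reads log lines on stdin.
count_by_level() {
  grep -o '"LOGLEVEL":"[A-Z]*"' | cut -d'"' -f4 | sort | uniq -c | awk '{print $2, $1}'
}

# Canned sample; against the cluster you could pipe in `kubectl logs <pod>` instead.
printf '%s\n' \
  '{"LOGLEVEL":"WARNING","serviceName":"msg-processor","message":"WARNING client connection terminated unexpectedly."}' \
  '{"LOGLEVEL":"INFO","serviceName":"msg-processor","message":"","eventsNumber":5}' \
  '{"LOGLEVEL":"ERROR","serviceName":"msg-receiver-api","message":"ERROR Unable to upload files for processing"}' \
  | count_by_level
```

In Kibana the same question is answered by filtering or aggregating on the LOGLEVEL field once the JSON has been parsed into fields.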

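Our sample application periodically prints log messages of different types and levels (INFO/WARNING/ERROR). Each JSON line is one log message, which fluentd collects and sends to Elasticsearch. This relies on fluentd's JSON parser plugin: by default, fluentd sends each line of a log file as a field named log and automatically adds other fields, such as tag to identify the container and stream to indicate stdout or stderr.

Because the fluentd configuration contains the following filter: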

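<filter kubernetes.**>
  @id filter_parser
  @type parser # Used with the multi-format-parser plugin
  key_name log # Name of the field in the record to parse
  reserve_data true # Keep the original key-value pairs in the parsed result
  remove_key_name_field true # Remove the key_name field after it is parsed successfully
  <parse>
    @type multi_format
    <pattern>
      format json
    </pattern>
    <pattern>
      format none
    </pattern>
  </parse>
</filter>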

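This filter applies the json and none parsers to structure the JSON data, turning the attributes of each JSON log line into individual fields. Once parsing takes effect, remember to refresh the index fields in Kibana, otherwise the new fields are not recognized: go to Management -> Index Patterns and click the refresh field list button.

Now deploy the sample application to the Kubernetes cluster:

vim dummylogs.yaml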

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dummylogs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: dummylogs
  template:
    metadata:
      labels:
        app: dummylogs
        logging: "true" # this label is required for the logs to be collected
    spec:
      containers:
        - name: dummy
          image: cnych/dummylogs:latest
          args:
            - msg-processor

Deploy the application above:

kubectl apply -f dummylogs.yaml

kubectl get pods -l logging=true

We can then filter the log data in Kibana according to our own needs.
