Python Crawler: Capturing Overseas Apps (Android + iOS) with Fiddler, and Crawling Deeply Nested Pages with Scrapy

Contents

- Tools
- Packet capture
  - iOS capture approach
  - Android capture approach
    - Method 1: Xposed + JustTrustMe
    - Method 2: Decompilation
- Scrapy
  - items class
  - spider class
  - pipeline class
  - settings class

Tools

Python 3.9 or later, Scrapy, Fiddler, a phone, and a VPN ("ladder") on the PC

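Packet capture

iOS capture approach

There are already plenty of tutorials online on capturing iOS traffic with Fiddler, so I won't repeat them here. I'll only point out a few things. If you get these right, the capture should work.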

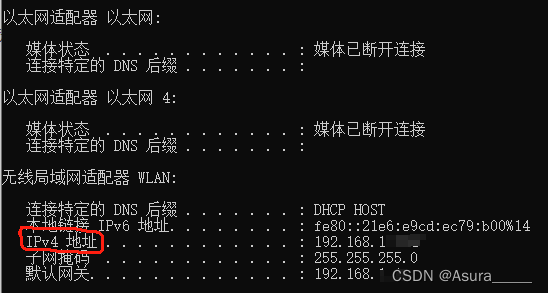

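1. Make sure the IP and port of the manual proxy on iOS match the ones Fiddler is listening on.

2. When downloading the certificate on iOS, the phone must be connected through Fiddler's port. After installing the certificate, you must also trust it (on iOS: Settings > General > About > Certificate Trust Settings).

3. Once everything is set up, restart both Fiddler and the phone's network connection to be safe. The order matters. Start Fiddler first and only then connect the phone to the proxy port, so that the PC is already listening. Then turn on the VPN on the PC. The phone itself does not need a VPN, because Fiddler automatically picks up the system proxy.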

At this point, if the phone can reach the overseas app, the capture is working.
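Step 3 depends on Fiddler already listening before the phone connects. A plain TCP connect to the proxy port is a quick way to confirm this from Python. This is a minimal sketch. `is_listening` is a hypothetical helper, and the host and port you pass in are assumptions. Port 8888 is Fiddler Classic's default listening port.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection resolves the address and completes the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable or timed out
        return False
```

Run it from another machine on the same Wi-Fi against the PC's LAN address, for example `is_listening("192.168.1.100", 8888)` (placeholder IP). A result of False usually means one of the following:

- Fiddler is not running.
- The port differs.
- The PC's firewall is blocking the port.
- Fiddler's "Allow remote computers to connect" option is off.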

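Android capture approach

Since Android 7.0, certificate checks are stricter. Android distinguishes system certificates from user certificates, and the certificate installed through Fiddler is a user certificate. From Android 7.0 on, apps that target API level 24 or higher no longer trust user certificates by default. As a result, many mainstream apps cannot be captured this way, and only a few niche ones can. Versions before 7.0 do not have this restriction. So here I simply use the NoxPlayer (夜神) emulator running Android 5.0, which comes with root access. If you have LDPlayer (雷电), I recommend it even more.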
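The rule above can be written down as a small check. Per Android's network security configuration documentation, apps targeting API 23 or lower still trust user-added CAs. Apps targeting API 24+ trust only the system CAs on Android 7.0+ devices, unless their config opts in. On older devices, user CAs are trusted regardless. This sketch assumes the `targetSdkVersion: '33'` line format that apktool writes into apktool.yml. Both function names are hypothetical.

```python
import re
from typing import Optional


def target_sdk_from_apktool_yml(text: str) -> Optional[int]:
    """Pull targetSdkVersion out of the apktool.yml written by `apktool d`."""
    match = re.search(r"targetSdkVersion:\s*'?(\d+)'?", text)
    return int(match.group(1)) if match else None


def trusts_user_cas_by_default(device_api: int, target_sdk: int) -> bool:
    """True if a Fiddler (user) CA is trusted without any config changes.

    Pre-7.0 devices (API < 24) trust user CAs for every app; on newer
    devices only apps targeting API 23 or lower still do.
    """
    return device_api < 24 or target_sdk < 24
```

This is why an Android 5.0 emulator (API 21) works even for apps with a high targetSdkVersion.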

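Method 1: Xposed + JustTrustMe

The Xposed framework is a framework service that can change how apps behave (by modifying the system) without modifying the APK. Many powerful modules can be built on it, and they can run at the same time as long as their functions don't conflict.

JustTrustMe: an Xposed module that disables SSL certificate checks.

Together, these two bypass SSL pinning. There are plenty of tutorials online, so I won't go into detail.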
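Why does pinning still break the capture even after the Fiddler certificate is trusted? A toy model helps. The app compares a digest of the certificate it receives against a value baked into the app. Fiddler's generated certificate can never match, so the app refuses the connection, and JustTrustMe's job is to make checks like this pass.

This is a simplified sketch. Real apps usually pin the SHA-256 of the certificate's public key (SPKI), for example OkHttp's `sha256/...` pins, rather than hashing the whole DER certificate as done here. `pin_of` and `pin_check` are hypothetical names.

```python
import base64
import hashlib
from typing import Set


def pin_of(der_cert: bytes) -> str:
    """Base64 SHA-256 digest of a DER-encoded certificate (simplified pin)."""
    return base64.b64encode(hashlib.sha256(der_cert).digest()).decode("ascii")


def pin_check(der_cert: bytes, pinned: Set[str]) -> bool:
    """Accept the connection only if the presented certificate is pinned."""
    return pin_of(der_cert) in pinned
```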

For Xposed I recommend the one that comes with 多开鸭 (DuoKaiYa). For JustTrustMe, the official GitHub release is fine.

多开鸭

JustTrustMe

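Method 2: Decompilation

1. apktool

apktool is a reverse-engineering tool. It decodes an APK into its resources and smali code, so you can modify them and then rebuild the APK. I learned it from this blogger's tutorial:

apktool

After decoding, the output folder contains roughly AndroidManifest.xml, apktool.yml, and the res/ and smali/ folders.

2. In the decoded res folder, create a network_security_config.xml file inside the xml folder (create res/xml if it doesn't exist):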

<network-security-config>
    <base-config cleartextTrafficPermitted="true">
        <trust-anchors>
            <certificates src="system" overridePins="true" />
            <certificates src="user" overridePins="true" />
        </trust-anchors>
    </base-config>
</network-security-config>

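Note: src="system" trusts the system CA certificates, and src="user" trusts CA certificates imported by the user. Some tutorials suggest changing every system to user. Both variants are worth trying.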

3. Modify the project's AndroidManifest.xml so that the application uses this config:

<manifest ... >
    <application android:networkSecurityConfig="@xml/network_security_config"
                 ... >
        ...
    </application>
</manifest>

4. Rebuild the APK, sign it, and install it.
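Steps 2 and 3 can be scripted. The sketch below assumes `src_dir` is a folder produced by `apktool d` and uses only the standard library. `patch_decoded_apk` is a hypothetical helper, not part of apktool.

```python
import os
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"

NETWORK_SECURITY_CONFIG = """<?xml version="1.0" encoding="utf-8"?>
<network-security-config>
    <base-config cleartextTrafficPermitted="true">
        <trust-anchors>
            <certificates src="system" overridePins="true" />
            <certificates src="user" overridePins="true" />
        </trust-anchors>
    </base-config>
</network-security-config>
"""


def patch_decoded_apk(src_dir: str) -> None:
    """Apply steps 2 and 3 to a directory produced by `apktool d`."""
    # Step 2: write res/xml/network_security_config.xml, creating res/xml if needed
    xml_dir = os.path.join(src_dir, "res", "xml")
    os.makedirs(xml_dir, exist_ok=True)
    config_path = os.path.join(xml_dir, "network_security_config.xml")
    with open(config_path, "w", encoding="utf-8") as f:
        f.write(NETWORK_SECURITY_CONFIG)

    # Step 3: point <application> at the new config in AndroidManifest.xml
    ET.register_namespace("android", ANDROID_NS)  # keep the android: prefix when writing
    manifest_path = os.path.join(src_dir, "AndroidManifest.xml")
    tree = ET.parse(manifest_path)
    application = tree.getroot().find("application")
    if application is None:
        raise ValueError("no <application> element in AndroidManifest.xml")
    application.set("{%s}networkSecurityConfig" % ANDROID_NS, "@xml/network_security_config")
    tree.write(manifest_path, encoding="utf-8", xml_declaration=True)
```

For step 4, you could run something like `apktool b <src_dir> -o patched.apk`. Then sign the result with `apksigner sign --ks <your keystore> patched.apk`. The re-signed APK has a different signature, so uninstall the original app first. Also note that ElementTree drops XML comments from the manifest when it rewrites the file.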

———————————————————————————————————————————————————

If neither Android method works, I'd just switch to iOS. It's the brute-force but simple option.

Scrapy

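Once the traffic is captured, things go smoothly and we're back in familiar crawling territory. This is a multi-level deep crawl: the main page has two levels of sub-pages nested beneath it, and every level needs pagination as well. Before this I had only ever paginated within a single page at most, so this one was a lot harder...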

items class

Whenever you start a new Scrapy project, I suggest writing the Item class first, since it pins down every field you need.

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class Item(scrapy.Item):
    # Level 1 (store list): store code, name, logo, department, distance, opening hours
    storeCode = scrapy.Field()
    storeName = scrapy.Field()
    storeLogo = scrapy.Field()
    departmentName = scrapy.Field()
    roadDistance = scrapy.Field()
    operationalMsg = scrapy.Field()
    superCategoryCode = scrapy.Field()  # category code (filled in on level 2)

    # Level 2 (store page): minimum order, category title, icon image, estimated delivery time
    minOrder = scrapy.Field()
    title = scrapy.Field()
    iconImage = scrapy.Field()
    estimatedDeliveryTime = scrapy.Field()
    catagoryNum = scrapy.Field()  # number of categories

    # Level 3 (category page): product name, photo, weight/size, price
    goodsName = scrapy.Field()
    goodsPhoto = scrapy.Field()
    goodsGM = scrapy.Field()
    goodsPrice = scrapy.Field()
    goods_url = scrapy.Field()  # URL of the category's product list
    goodsNum = scrapy.Field()  # total number of products in the category
    goodsPage = scrapy.Field()  # total number of product pages
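Since Scrapy 2.2, items can also be plain dataclass objects, which Scrapy handles through the itemadapter library. Below is a sketch of the same fields as a dataclass; `StoreGoodsItem` is a hypothetical name. With `dataclasses.replace`, each level can work on its own copy, so one category's values never overwrite another's.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class StoreGoodsItem:
    # Level 1: store list
    storeCode: Any = None
    storeName: Any = None
    storeLogo: Any = None
    departmentName: Any = None
    roadDistance: Any = None
    operationalMsg: Any = None
    superCategoryCode: Any = None
    # Level 2: store page
    minOrder: Any = None
    title: Any = None
    iconImage: Any = None
    estimatedDeliveryTime: Any = None
    catagoryNum: Any = None
    # Level 3: category page
    goodsName: Any = None
    goodsPhoto: Any = None
    goodsGM: Any = None
    goodsPrice: Any = None
    goods_url: Any = None
    goodsNum: Any = None
    goodsPage: Any = None
```

For example, `replace(store, title="Fruits")` returns a new object and leaves `store` unchanged. The pipelines below index items as `item['goodsName']`, which dataclasses do not support. With a dataclass they would need `ItemAdapter(item)['goodsName']`.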

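spider class

Because this is a deep crawl, there are three parse methods, and each one passes its data to the next through meta. Pagination is a loop that yields new Requests back to the same callback.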
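The page arithmetic in such a loop is easy to get off by one. The response for page 1 already reports nbPages, so only pages 2 through nbPages remain to be requested. Here is a stdlib-only sketch of that step, using the product-list URL from the spider below. `remaining_page_urls` is a hypothetical helper.

```python
from typing import List

GOODS_URL = ("https://mp-shop-api-catalog.fd.noon.com/v1/store/{}/{}"
             "?page={}&limit=30&category_code=all")


def remaining_page_urls(store_code: str, category_code: str, nb_pages: int) -> List[str]:
    """URLs for pages 2..nb_pages; page 1 is the request that reported nb_pages."""
    return [GOODS_URL.format(store_code, category_code, page)
            for page in range(2, nb_pages + 1)]
```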

import scrapy  # crawling framework

from ..items import Item  # items.py sits one package above spiders/; adjust to your project layout


class spiderSpider(scrapy.Spider):  # the spider, subclassing scrapy.Spider
    name = "spider"  # name used with `scrapy crawl spider`
    allowed_domains = ["mp-shop-api-catalog.fd.noon.com"]  # domains the spider may request
    # start URL: page 1 of the store list
    start_urls = ["https://mp-shop-api-catalog.fd.noon.com/v2/content/search?type=store&page=1&limit=20"]

    def parse(self, response):  # level 1: the store list
        content = response.json()  # decode the JSON body (Scrapy 2.2+)
        print("----------------Start------------------")
        page = content["search"]["page"]  # current page number
        # loop over the stores actually returned rather than assuming 20
        for i, store in enumerate(content["results"][-1]["results"]):
            print("Store #{}".format(i + 1))
            stores = Item(storeCode=store["storeCode"],
                          storeName=store["nameEn"],  # store name
                          storeLogo="https://f.nooncdn.com//" + store["image"],  # logo URL
                          departmentName=store["departmentNameEn"],  # department
                          roadDistance=store["serviceabilityInfo"]["roadDistance"],  # road distance
                          operationalMsg=store["operationality"]["operationalMsg"])  # opening status
            store_url = "https://mp-shop-api-catalog.fd.noon.com/v2/content/search?type=super_category&page={}&f[store_code]={}".format(page, store["storeCode"])
            # request the store page; second_parse picks the item up from meta
            yield scrapy.Request(url=store_url, callback=self.second_parse, meta={'item': stores})

        # next page of the store list (only page 2 here; widen the range for more).
        # The same request yielded again from page 2 is dropped by Scrapy's duplicate filter.
        for next_page in range(2, 3):
            nextpage_url = "https://mp-shop-api-catalog.fd.noon.com/v2/content/search?type=store&page={}&limit=20".format(next_page)
            yield scrapy.Request(url=nextpage_url, callback=self.parse)
        # No time.sleep() here: it blocks Scrapy's whole event loop.
        # Throttle with DOWNLOAD_DELAY in settings.py instead.

    def second_parse(self, response):  # level 2: one store's categories
        content = response.json()
        base = response.meta['item']  # item handed down from parse
        base['minOrder'] = content["results"][0]["minOrder"]  # minimum order
        base['estimatedDeliveryTime'] = content["results"][0]["estimatedDeliveryTime"]  # estimated delivery time
        base['catagoryNum'] = content["nbHits"]  # number of categories
        storeCode = content["results"][0]["storeCode"]  # store code
        for i, category in enumerate(content["results"][-1]["results"]):
            # one copy per category: otherwise all requests share a single item
            # and every category ends up with the values of the last one
            second = base.copy()
            second['title'] = category["title"]  # category title
            second['superCategoryCode'] = category["superCategoryCode"]  # category code
            second['iconImage'] = "https://f.nooncdn.com//" + category["iconImage"]  # category icon URL
            second['goods_url'] = "https://mp-shop-api-catalog.fd.noon.com/v1/store/{}/{}?page=1&limit=30&category_code=all".format(storeCode, second['superCategoryCode'])  # first page of products
            print("Category #{}".format(i + 1))
            yield scrapy.Request(url=second['goods_url'], callback=self.third_parse, meta={'item': second})

    def third_parse(self, response):  # level 3: one page of a category's products
        content = response.json()
        base = response.meta['item']  # item handed down from second_parse
        products = content["data"]["products"]
        if not products:  # empty page: nothing to extract
            return
        storeCode = content["data"]["storeDetails"]["storeCode"]  # store code
        base['superCategoryCode'] = products[0]["superCategoryCode"]  # category code
        base['goodsPage'] = content["data"]["nbPages"]  # total number of pages
        base['goodsNum'] = content["data"]["nbHits"]  # total number of products (all pages)
        # loop over this page's products; nbHits counts every page, so range(nbHits) would overrun
        for i, product in enumerate(products):
            third = base.copy()  # a fresh item per product
            third['goodsName'] = product["nameEn"]  # product name
            third['goodsPhoto'] = "https://f.nooncdn.com//" + product["images"][0]  # photo URL
            third['goodsGM'] = product["size"]  # size / weight
            third['goodsPrice'] = product["price"]  # price
            print("Product #{}".format(i + 1))
            yield third
        # remaining pages 2..nbPages; meta must be passed again or response.meta['item'] fails there.
        # Requests re-yielded from later pages are dropped by the duplicate filter.
        for page in range(2, base['goodsPage'] + 1):
            nextpage_url = "https://mp-shop-api-catalog.fd.noon.com/v1/store/{}/{}?page={}&limit=30&category_code=all".format(storeCode, base['superCategoryCode'], page)
            yield scrapy.Request(url=nextpage_url, callback=self.third_parse, meta={'item': base.copy()})
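Pulling the JSON walking out of a callback makes it testable without Scrapy or a network connection. Below is a sketch of the third level's product loop as a plain function. `extract_products` is hypothetical. Any sample response you feed it has to be made up, shaped only after the keys third_parse reads.

```python
from typing import Any, Dict, Iterator

IMAGE_PREFIX = "https://f.nooncdn.com//"


def extract_products(content: Dict[str, Any]) -> Iterator[Dict[str, Any]]:
    """Yield one flat dict per product on this page of a level-3 response."""
    # iterate over the products actually present; nbHits counts all pages
    for product in content["data"]["products"]:
        yield {
            "goodsName": product["nameEn"],
            "goodsPhoto": IMAGE_PREFIX + product["images"][0],
            "goodsGM": product["size"],
            "goodsPrice": product["price"],
        }
```

Inside a callback, each yielded dict could be merged into a copy of the incoming item, for example with `item.update(row)`.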

pipeline class

There are two pipelines: one handles the data and the other handles the images.

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import csv
import os
import re
import urllib.request


class Pipeline:
    def open_spider(self, spider):
        self.fp = open('store.csv', 'w', encoding='utf-8', newline="")
        self.writer = csv.writer(self.fp)
        self.writer.writerow(['storeName', 'title', 'storeLogo', 'storeCode', 'roadDistance', 'operationalMsg', 'minOrder', 'estimatedDeliveryTime', 'departmentName',
                              'catagoryNum', 'superCategoryCode', 'iconImage', 'goodsName', 'goodsPrice', 'goodsPage', 'goodsNum', 'goods_url', 'goodsPhoto', 'goodsGM'])

    # item is the object yielded by the spider
    def process_item(self, item, spider):
        print("Writing data for " + str(item['goodsName']))
        re_list = [item['storeName'], item['title'], item['storeLogo'], item['storeCode'],
                   item['roadDistance'], item['operationalMsg'], item['minOrder'],
                   item['estimatedDeliveryTime'], item['departmentName'], item['catagoryNum'],
                   item['superCategoryCode'], item['iconImage'], item['goodsName'],
                   item['goodsPrice'], item['goodsPage'], item['goodsNum'],
                   item['goods_url'], item['goodsPhoto'], item['goodsGM']]
        self.writer.writerow(re_list)
        return item

    def close_spider(self, spider):
        # csv.writer has no close(); closing the underlying file is enough
        self.fp.close()


class PhotoDownLoadPipeline:
    def open_spider(self, spider):
        os.makedirs('./goods', exist_ok=True)  # urlretrieve fails if ./goods does not exist

    def process_item(self, item, spider):
        print("Downloading image for " + str(item['goodsName']))
        url = item['goodsPhoto']
        filename = str(item['storeName']) + " " + str(item['superCategoryCode']) + " " + str(item['goodsName']) + str(item['goodsPrice']) + 'UAE.jpg'
        # replace characters that are not allowed in file names (e.g. "/" in product names)
        filename = re.sub(r'[\\/:*?"<>|]', '_', filename)
        # note: urlretrieve is blocking, so the crawl pauses while each image downloads
        urllib.request.urlretrieve(url=url, filename=os.path.join('./goods', filename))
        return item
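An alternative to building re_list by hand is `csv.DictWriter`. It matches values to columns by name, so the header and the rows can't drift apart, and missing fields become empty cells instead of raising KeyError. Below is a sketch with a hypothetical `write_rows` helper. In a Scrapy pipeline you would create the writer and call `writeheader()` once in open_spider, then call `writerow()` in process_item.

```python
import csv
import io

FIELDS = ['storeName', 'title', 'storeLogo', 'storeCode', 'roadDistance', 'operationalMsg',
          'minOrder', 'estimatedDeliveryTime', 'departmentName', 'catagoryNum',
          'superCategoryCode', 'iconImage', 'goodsName', 'goodsPrice', 'goodsPage',
          'goodsNum', 'goods_url', 'goodsPhoto', 'goodsGM']


def write_rows(fp, items) -> None:
    """Write a header plus one row per item; unknown keys are ignored, missing ones left empty."""
    writer = csv.DictWriter(fp, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for item in items:
        writer.writerow(dict(item))  # scrapy.Item is a mapping, so dict() works
```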

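settings class

I recommend filling in the request headers in settings as completely as you can. At first I left out the two coordinate headers 'x-lng' and 'x-lat' (longitude and latitude), and the data came back incomplete. Because I was running through a VPN this time, I didn't dare use an IP proxy pool in case the two conflicted. I didn't use a random UA either and only mimicked the cookies. For the pipelines, a lower number means higher priority, so that pipeline runs earlier. I didn't spot a random download delay option in this version, so I wrote the delay into the requests myself. In fact, Scrapy's RANDOMIZE_DOWNLOAD_DELAY setting (enabled by default) already waits a random 0.5 to 1.5 times DOWNLOAD_DELAY between requests, so setting DOWNLOAD_DELAY is enough.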
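To avoid missing headers like x-lng and x-lat, one option is to copy a captured request from Fiddler's Raw inspector and convert it mechanically. This is a minimal sketch. `headers_from_fiddler_raw` and the skip list are my own assumptions. Host, Content-Length and Connection describe one specific request, and Accept-Encoding is left to Scrapy's compression middleware.

```python
from typing import Dict

SKIP = {"host", "content-length", "connection", "accept-encoding"}


def headers_from_fiddler_raw(raw: str) -> Dict[str, str]:
    """Turn a request copied from Fiddler's Raw view into a headers dict."""
    headers = {}
    for line in raw.splitlines()[1:]:  # first line is "GET <url> HTTP/1.1"
        if not line.strip():  # a blank line ends the header block
            break
        name, sep, value = line.partition(":")  # split at the first colon only
        if sep and name.strip().lower() not in SKIP:
            headers[name.strip()] = value.strip()
    return headers
```

Print the result and paste it into DEFAULT_REQUEST_HEADERS.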

# Scrapy settings for project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = ""

SPIDER_MODULES = [".spiders"]

NEWSPIDER_MODULE = ".spiders"

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = " / 2 CFNetwork / 1335.0.3 Darwin / 21.6.0"

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

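DEFAULT_REQUEST_HEADERS = {
    # headers copied from the request captured in Fiddler, including 'x-lng' and 'x-lat' (values omitted here)
}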

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# ".middlewares.SpiderMiddleware": 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# ".middlewares.DownloaderMiddleware": 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# "scrapy.extensions.telnet.TelnetConsole": None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

".pipelines.Pipeline": 301,

".pipelines.PhotoDownLoadPipeline": 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = "httpcache"

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

# Set settings whose default value is deprecated to a future-proof value

REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

FEED_EXPORT_ENCODING = "utf-8"
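Scrapy's settings documentation describes how RANDOMIZE_DOWNLOAD_DELAY behaves. Uncommenting DOWNLOAD_DELAY can therefore replace a hand-written sleep between requests. The two settings lines below are what would go in settings.py, and the value 3 is only an example. `sample_wait` is a hypothetical function that models the documented jitter; it is not Scrapy's actual code.

```python
import random

# settings.py values (example numbers; tune them for the target site)
DOWNLOAD_DELAY = 3                # base wait in seconds between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True   # Scrapy's default


def sample_wait(delay: float, randomize: bool, rng: random.Random) -> float:
    """Model of the documented behaviour: uniform between 0.5x and 1.5x DOWNLOAD_DELAY."""
    return rng.uniform(0.5 * delay, 1.5 * delay) if randomize else delay
```

Because Scrapy applies the delay itself, no `time.sleep()` is needed inside the callbacks. Calling sleep there would stall every concurrent request, not just the current one.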