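Operations Manual: Building a Spring Boot Project with Tekton

Operations Manual: Building Front-End and Back-End Projects with Tekton (Local Harbor Registry Edition)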

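Overview

The front end is a Vue project. The back end is a Spring Boot project built on Dubbo, ZooKeeper, and PostgreSQL.

Image registry: a local Harbor registry, version V2.0.

Kubernetes cluster: version v1.18.17.

Tekton: version v0.24.1.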

Tekton介绍

Tekton 是一个 Kubernetes 原生的构建 CI/CD Pipeline 的解决方案,能够以 Kubernetes 扩展的方式安装和运行。它提供了一组 Kubernetes 自定义资源(custom resource),借助这些自定义资源,我们可以为 Pipeline 创建和重用构建块。

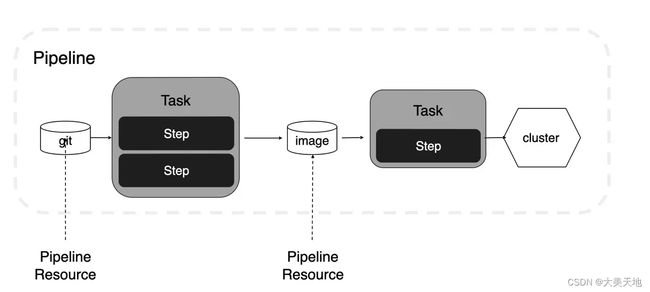

一个简单的pipeline流程

实体

Tekton 定义了如下的基本 Kubernetes 自定义资源定义(Kubernetes Custom Resource Definition,CRD)来构建 Pipeline:

PipelineResource:能够定义可引用的资源,比如源码仓库或容器镜像。

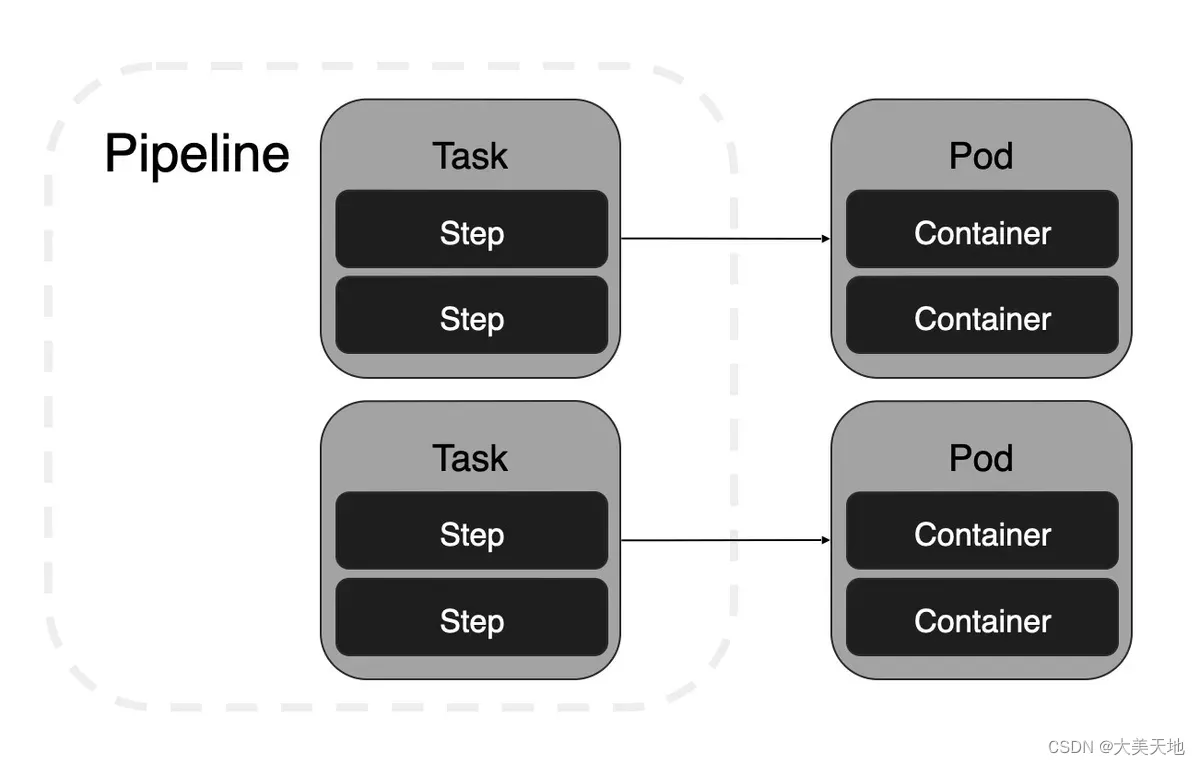

Task:定义了一个按顺序执行的 step 列表。每个 step 会在容器中执行命令。每个 task 都是一个 Kubernetes Pod,Pod 中包含了与 step 同等数量的容器。

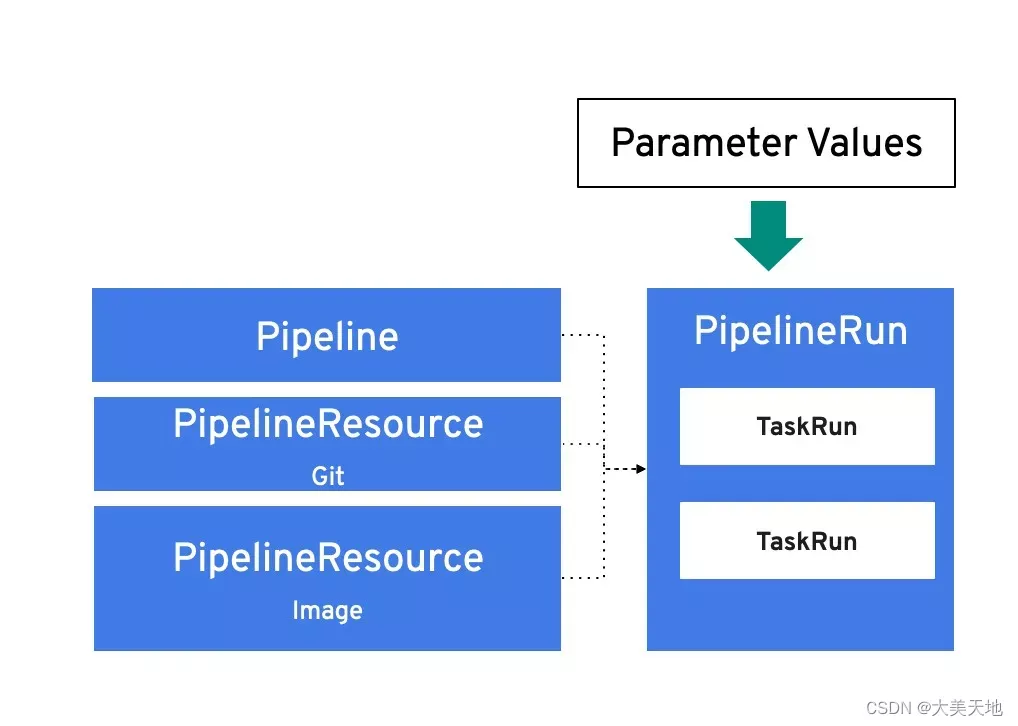

TaskRun:会实例化一个要执行的Task,并且会带有具体的输入、输出和参数。

Pipeline:会定义一个 task 的列表,这些 task 会按照特定的顺序来执行。

PipelineRun:会实例化一个要执行的Pipeline,并且会带有具体的输入、输出和参数。它会自动为每个Task创建TaskRun实例。

Task可以通过创建TaskRun对象单独运行,也可以作为Pipeline的一部分运行。

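Preparation

Installing Tekton

Download location of the installation manifests:

https://gitee.com/coolops/tekton-install/tree/master

Create the installation directory and change into it:

mkdir -p /usr/local/install-k8s/plugin/tekton

cd /usr/local/install-k8s/plugin/tekton

This manual installs v0.24.1. Download install.yaml using the raw file URL (the blob URL returns an HTML page, not the manifest):

wget https://gitee.com/coolops/tekton-install/raw/master/v0.24.1/install.yaml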

kubectl apply -f install.yaml
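When a manifest is fetched from a repository page URL instead of a raw file URL, wget saves an HTML page and kubectl apply fails to parse it. The following is a minimal sketch of a sanity check for a downloaded file. The function name looks_like_manifest is hypothetical, and the check (no HTML markup near the top, at least one apiVersion: line) is a heuristic assumption, not a full YAML validation.

```shell
#!/bin/sh
# Hypothetical helper: returns 0 when the file looks like a Kubernetes
# manifest, non-zero when it looks like an HTML page or has no apiVersion.
looks_like_manifest() {
    file="$1"
    # An HTML download typically starts with a doctype or an <html> tag.
    if head -n 5 "$file" | grep -qi -e '<!doctype html' -e '<html'; then
        return 1
    fi
    # Kubernetes objects declare apiVersion at the start of a line.
    grep -q '^apiVersion:' "$file"
}

# Usage (assumes install.yaml is in the current directory):
# looks_like_manifest install.yaml && kubectl apply -f install.yaml
```

Running the check before kubectl apply turns a confusing parse error into an early, obvious failure.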

Install the Tekton Dashboard:

mkdir dashboard

cd dashboard

wget https://gitee.com/coolops/tekton-install/raw/master/dashboard/0.24.1.yaml

kubectl apply -f 0.24.1.yaml

Install Tekton Triggers:

mkdir trigger

cd trigger

wget https://gitee.com/coolops/tekton-install/raw/master/trigger/v0.15.0/install.yaml

kubectl apply -f install.yaml

After the installation completes, the access address (Tekton Dashboard) is: http://192.168.20.68:31011
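Before opening the address, it may help to confirm that the Tekton pods are running. This is a small sketch that assumes the manifests install into the upstream default namespace tekton-pipelines, which this manual does not state. The KUBECTL variable is a hypothetical override that makes the helper easy to dry-run.

```shell
#!/bin/sh
# Lists the Tekton control-plane pods.
# Assumption: the install manifests use the upstream default
# namespace "tekton-pipelines".
tekton_pods() {
    "${KUBECTL:-kubectl}" get pods -n tekton-pipelines
}

# Usage: tekton_pods
# Dry run that only prints the arguments: KUBECTL=echo tekton_pods
```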

To install Harbor, see the Harbor installation guide:

https://github.com/goharbor/harbor/blob/v2.0.2/docs/README.md

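Pipeline Flow

This manual implements a complete CI/CD flow for a Spring Boot project, consisting of the following steps:

① Pull the code from the git repository;

② Build with Maven, packaging the source into a jar;

③ Use kaniko to build an image from the Dockerfile and push it to the image registry;

④ Use yq to edit the deployment YAML file;

⑤ Use kubectl to deploy shared resources: the image registry secret (it is shared by several chart packages, and adding it to each chart package causes an error);

⑥ Deploy the application (the step named kubeconfig, which runs kubectl apply).

Materials and tools used:

① git: the source repository address and account credentials;

② maven: the build tool that packages the Java project;

③ registry: the remote image registry that stores the built images;

④ GoogleContainerTools/kaniko: builds images inside a container and pushes them to the image registry;

⑤ Lachie83/k8s-kubectl: accesses the k8s cluster from inside a container;

⑥ quay.io/rhdevelopers/tutorial-tools:0.0.3: applies the deployment resource files;

⑦ a Kubernetes environment;

⑧ nodejs and nginx, used to build and deploy the front-end project.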

Starting the Build

Directory Layout

git-resource.yaml

git-secret.yaml

git-ui-resource.yaml

image-consumer-resource.yaml

image-provider-resource.yaml

image-ui-resource.yaml

image-secret.yaml

kubectl-deploy-consumer.yaml

kubectl-deploy-provider.yaml

kubectl-deploy-nodejs-task.yaml

maven-kaniko-consumer-task.yaml

maven-kaniko-provider-task.yaml

kaniko-ui-task.yaml

maven-unittest-task.yaml

pipelinerun.yaml

pipeline.yaml

serviceaccount.yaml

Detailed Configuration Steps

① Create the directory /usr/local/k8s-install/plugin/tekton/swurtmp:

mkdir -p /usr/local/k8s-install/plugin/tekton/swurtmp

② Prepare the resources: define five PipelineResource objects

The first resource is the git source for the back-end code. The duanmin:****** portion of the URL is the git account's username and password.

First, define the source-code configuration as a PipelineResource in git-resource.yaml; type specifies git.

apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: swurtmp-git-resource
  namespace: swurtmp-harbor
spec:
  type: git
  params:
    - name: url
      value: http://duanmin:******@git.siwill.com/SW-BDP/swurtmp.git
    - name: revision
      value: master

The second resource is the git source for the front-end code, stored in git-ui-resource.yaml:

apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: git-ui-resource
  namespace: swurtmp-harbor
spec:
  type: git
  params:
    - name: url
      value: http://duanmin:******@git.siwill.com/SW-BDP/metromanage.git
    - name: revision
      value: master

If the git repository is not public, the account credentials must be defined. They are stored in git-secret.yaml. The tekton.dev/git-0 annotation specifies the host whose git repositories these credentials apply to.

apiVersion: v1
kind: Secret
metadata:
  name: swurtmp-git-secret
  namespace: swurtmp-harbor
  annotations:
    tekton.dev/git-0: http://git.siwill.com
type: kubernetes.io/basic-auth
stringData:
  username: duanmin
  password: "******"

Create the third and fourth resources. Because this is a Dubbo project, the provider and consumer services are separate, so create two resource files, image-provider-resource.yaml and image-consumer-resource.yaml, that configure the image registry addresses; type specifies image.

#image-provider-resource.yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: provider-image-resource
  namespace: swurtmp-harbor
spec:
  type: image
  params:
    - name: url
      value: 192.168.20.69/swurtmp/provider

#image-consumer-resource.yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: consumer-image-resource
  namespace: swurtmp-harbor
spec:
  type: image
  params:
    - name: url
      value: 192.168.20.69/swurtmp/consumer

Create the fifth resource, which configures the front-end image:

apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: image-ui-resource
  namespace: swurtmp-harbor
spec:
  type: image
  params:
    - name: url
      value: 192.168.20.69/swurtmp/ui

Create the resource file image-secret.yaml, which holds the username and password for the image registry. The annotations field specifies which image registry the credentials apply to.

apiVersion: v1
kind: Secret
metadata:
  name: image-secret
  namespace: swurtmp-harbor
  annotations:
    tekton.dev/docker-0: 192.168.20.69
type: kubernetes.io/basic-auth
stringData:
  username: admin
  password: Harbor12345

③ Define three kinds of Task

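The unit-test Task, maven-unittest-task.yaml.

resources.inputs: declares the resources this Task needs

image: the Maven image that runs this Task's step; Maven is preinstalled in it

volumeMounts: mounts the host's /root/.m2 directory so that dependencies are not downloaded again on every pipeline run

command & args: run mvn test inside the container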

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: maven-test-task
  namespace: swurtmp-harbor
spec:
  resources:
    inputs:
      - name: swurtmp-git-repo
        type: git
  steps:
    - name: maven-test
      image: maven:3.5.0-jdk-8-alpine
      workingDir: /workspace/swurtmp-git-repo
      command:
        - mvn
      args:
        - test
      volumeMounts:
        - name: m2
          mountPath: /root/.m2
  volumes:
    - name: m2
      hostPath:
        path: /root/.m2

Create the second Task, which runs the Maven build and then builds and pushes the image: maven-kaniko-consumer-task.yaml and maven-kaniko-provider-task.yaml.

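Each of these Tasks defines two steps:

Build the source into a jar with Maven by running mvn clean package

Build an image from the Dockerfile and push it to the image registry

The image is built with Kaniko, Google's open-source build tool. Building with docker runs into the docker-in-docker problem: a docker build needs the docker daemon, so the host's docker.sock file has to be mounted into the container, which is insecure. Kaniko is a lightweight image builder that, unlike docker, does not depend on a daemon process.

Command executed: /kaniko/executor

Parameters:

dockerfile: the Dockerfile inside the git repository checked out from the input resource

context: the git repository checked out from the input resource

destination: the image repository address taken from the output resource

Two resources are used:

an input named swurtmp-git-repo, of type git, which identifies the source code to use

an output named image-provider-repo (image-consumer-repo in the consumer Task), of type image, which identifies where the image is pushed after the build

The file also defines an environment variable named DOCKER_CONFIG, which Kaniko uses to locate the Docker credentials.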
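The destination value is assembled from the output resource's url and the imageTag parameter, joined by a colon. The sketch below mirrors that substitution in plain shell so that the resulting reference can be checked before a run. image_ref is a hypothetical helper and is not part of Tekton or kaniko.

```shell
#!/bin/sh
# Hypothetical helper mirroring
#   --destination=$(resources.outputs.<name>.url):$(params.imageTag)
image_ref() {
    repo="$1"   # the url param of the image PipelineResource
    tag="$2"    # the imageTag pipeline parameter
    printf '%s:%s\n' "$repo" "$tag"
}

image_ref 192.168.20.69/swurtmp/provider latest
# prints 192.168.20.69/swurtmp/provider:latest
```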

#maven-kaniko-provider-task.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: maven-kaniko-provider-task
  namespace: swurtmp-harbor
spec:
  params:
    - name: imageTag
      type: string
  resources:
    inputs:
      - name: swurtmp-git-repo
        type: git
    outputs:
      - name: image-provider-repo
        type: image
  steps:
    - name: maven-build
      image: maven:3.5.0-jdk-8-alpine
      workingDir: /workspace/swurtmp-git-repo
      command:
        - mvn
      args:
        - clean
        - package
      volumeMounts:
        - name: m2
          mountPath: /root/.m2
    - name: kaniko-build
      image: cnych/kaniko-executor:v0.22.0
      workingDir: /workspace/kaniko
      env:
        - name: DOCKER_CONFIG
          value: /tekton/home/.docker
      command:
        - /kaniko/executor
        - --dockerfile=$(resources.inputs.swurtmp-git-repo.path)/swurtmp-provider/Dockerfile
        - --context=$(resources.inputs.swurtmp-git-repo.path)
        - --destination=$(resources.outputs.image-provider-repo.url):$(params.imageTag)
        - --insecure
        - --skip-tls-verify
        - --skip-tls-verify-pull
        - --insecure-pull
  volumes:
    - name: m2
      hostPath:
        path: /root/.m2

#maven-kaniko-consumer-task.yaml

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: maven-kaniko-consumer-task
  namespace: swurtmp-harbor
spec:
  params:
    - name: imageTag
      type: string
  resources:
    inputs:
      - name: swurtmp-git-repo
        type: git
    outputs:
      - name: image-consumer-repo
        type: image
  steps:
    - name: maven-build
      image: maven:3.5.0-jdk-8-alpine
      workingDir: /workspace/swurtmp-git-repo
      command:
        - mvn
      args:
        - clean
        - package
      volumeMounts:
        - name: m2
          mountPath: /root/.m2
    - name: kaniko-build
      image: cnych/kaniko-executor:v0.22.0
      workingDir: /workspace/kaniko
      env:
        - name: DOCKER_CONFIG
          value: /tekton/home/.docker
      command:
        - /kaniko/executor
        - --dockerfile=$(resources.inputs.swurtmp-git-repo.path)/swurtmp-consumer/Dockerfile
        - --context=$(resources.inputs.swurtmp-git-repo.path)
        - --destination=$(resources.outputs.image-consumer-repo.url):$(params.imageTag)
        - --insecure
        - --skip-tls-verify
        - --skip-tls-verify-pull
        - --insecure-pull
  volumes:
    - name: m2
      hostPath:
        path: /root/.m2

In addition, define the front-end image build Task, kaniko-ui-task.yaml. The front-end code is built with nodejs, and the image is again built and pushed to the image registry with kaniko.

apiVersion: tekton.dev/v1alpha1
kind: Task
metadata:
  name: kaniko-ui-nodejs-task
  namespace: swurtmp-harbor
spec:
  inputs:
    resources:
      - name: git-ui-repo
        type: git
  outputs:
    resources:
      - name: image-ui-repo
        type: image
  steps:
    - name: create-image-and-push
      image: cnych/kaniko-executor:v0.22.0
      workingDir: /workspace/kaniko
      env:
        - name: DOCKER_CONFIG
          value: /tekton/home/.docker
      command:
        - /kaniko/executor
      args:
        - --dockerfile=$(resources.inputs.git-ui-repo.path)/DockerfileHarbor
        - --context=$(resources.inputs.git-ui-repo.path)
        - --destination=$(outputs.resources.image-ui-repo.url):v1.0.1
        - --insecure=false
        - --skip-tls-verify
      volumeMounts:
        - mountPath: /kaniko/.docker
          name: kaniko-secret
  volumes:
    - name: kaniko-secret
      secret:
        secretName: regcred
        items:
          - key: .dockerconfigjson
            path: config.json

The front-end application is served by a bundled nginx. The Dockerfile is as follows:

FROM node:lts-alpine as build-stage
WORKDIR /app
COPY package*.json ./
RUN npm install -g cnpm --registry=https://registry.npm.taobao.org
RUN cnpm install
COPY . .
RUN npm run build

FROM nginx:stable-alpine
COPY nginx/nginx.conf /etc/nginx/nginx.conf
COPY nginx/default.conf /etc/nginx/conf.d/default.conf
COPY nginx/tekton.conf /etc/nginx/conf.d/tekton.conf
COPY --from=build-stage /app/dist /usr/share/nginx/tekton
# Port that tekton.conf listens on
EXPOSE 8666
CMD ["nginx", "-g", "daemon off;"]

In the front-end project, create an nginx directory for the customized configuration and add three files to it: default.conf, nginx.conf, and tekton.conf, with the following contents:

#default.conf
server {
    listen 8000;
    listen [::]:8000;
    server_name localhost;

    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/html;
    }
}

#nginx.conf; the server address in the upstream block is the back-end access address.

user nginx;
worker_processes 1;

error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    upstream metroapi {
        server 192.168.20.68:31646;
    }

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    access_log /var/log/nginx/access.log main;

    sendfile on;
    #tcp_nopush on;

    keepalive_timeout 65;

    #gzip on;

    include /etc/nginx/conf.d/*.conf;
}

#tekton.conf; the listen port (8666) is the nginx port agreed on for the kubectl deployment of the front-end project, and the externally exposed port maps to it.

server {
    listen 8666;
    server_name localhost;

    #charset koi8-r;
    #access_log /var/log/nginx/host.access.log main;

    location / {
        root /usr/share/nginx/tekton;
        index index.html index.htm;
    }

    location /metroapi {
        client_max_body_size 1024m;
        proxy_redirect off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_pass http://metroapi/;
        index index.html index.htm;
    }

    #error_page 404 /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
        root /usr/share/nginx/tekton;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass http://127.0.0.1;
    #}
}

Create the third Task, in kubectl-deploy-consumer.yaml and kubectl-deploy-provider.yaml, which applies the deployment files. It edits the deployment file with yq and then applies it with kubectl. The contents are as follows:

#kubectl-deploy-provider.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: kubectl-deploy-provider
  namespace: swurtmp-harbor
spec:
  params:
    - name: deploymentFile
      type: string
      description: "gcp-kubectl-task.yaml"
  resources:
    inputs:
      - name: swurtmp-git-repo
        type: git
      - name: image-provider-repo
        type: image
  steps:
    - name: update-deployment-file
      image: quay.io/lordofthejars/image-updater:1.0.0
      script: |
        #!/usr/bin/env ash
        yq eval -i '.spec.template.spec.containers[0].image = env(DESTINATION_IMAGE)' $DEPLOYMENT_FILE
      env:
        - name: DESTINATION_IMAGE
          value: "$(inputs.resources.image-provider-repo.url)"
        - name: DEPLOYMENT_FILE
          value: "/workspace/swurtmp-git-repo/swurtmp-provider/$(inputs.params.deploymentFile)"
    - name: kubeconfig
      image: quay.io/rhdevelopers/tutorial-tools:0.0.3
      command: ["kubectl"]
      args:
        - apply
        - -f
        - /workspace/swurtmp-git-repo/swurtmp-provider/$(inputs.params.deploymentFile)
    - name: deploy-application
      image: lachlanevenson/k8s-kubectl
      command: ["kubectl"]
      args:
        - "rollout"
        - "restart"
        - "deployment/deploy-provider"
        - "-n"
        - "swurtmp-harbor"

#kubectl-deploy-consumer.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: kubectl-deploy-consumer
  namespace: swurtmp-harbor
spec:
  params:
    - name: deploymentFile
      type: string
      description: "gcp-kubectl-task.yaml"
  resources:
    inputs:
      - name: swurtmp-git-repo
        type: git
      - name: image-consumer-repo
        type: image
  steps:
    - name: update-deployment-file
      image: quay.io/lordofthejars/image-updater:1.0.0
      script: |
        #!/usr/bin/env ash
        yq eval -i '.spec.template.spec.containers[0].image = env(DESTINATION_IMAGE)' $DEPLOYMENT_FILE
      env:
        - name: DESTINATION_IMAGE
          value: "$(inputs.resources.image-consumer-repo.url)"
        - name: DEPLOYMENT_FILE
          value: "/workspace/swurtmp-git-repo/swurtmp-consumer/$(inputs.params.deploymentFile)"
    - name: kubeconfig
      image: quay.io/rhdevelopers/tutorial-tools:0.0.3
      command: ["kubectl"]
      args:
        - apply
        - -f
        - /workspace/swurtmp-git-repo/swurtmp-consumer/$(inputs.params.deploymentFile)
    - name: deploy-application
      image: lachlanevenson/k8s-kubectl
      command: ["kubectl"]
      args:
        - "rollout"
        - "restart"
        - "deployment/deploy-consumer"
        - "-n"
        - "swurtmp-harbor"

Front-end deployment Task (kubectl-deploy-nodejs-task.yaml). The deployment file it references, deploy-harbor.yaml, must be created in the front-end project.

apiVersion: tekton.dev/v1alpha1
kind: Task
metadata:
  name: deploy-nodejs-task
  namespace: swurtmp-harbor
spec:
  inputs:
    resources:
      - name: git-ui-repo
        type: git
    params:
      - name: DEPLOY_ENVIRONMENT
        description: The environment where you deploy the app
        default: 'swurtmp-harbor'
        type: string
  steps:
    - name: deploy-resources
      image: lachlanevenson/k8s-kubectl
      command: ["kubectl"]
      args:
        - "apply"
        - "-f"
        - "$(inputs.resources.git-ui-repo.path)/deploy-harbor.yaml"
        - "-n"
        - "$(inputs.params.DEPLOY_ENVIRONMENT)"
    - name: deploy-application
      image: lachlanevenson/k8s-kubectl
      command: ["kubectl"]
      args:
        - "rollout"
        - "restart"
        - "deployment/nodejs-app"
        - "-n"
        - "$(inputs.params.DEPLOY_ENVIRONMENT)"

In the front-end project, create the deploy-harbor.yaml resource file used for the front-end deployment. 32426 is the NodePort through which Kubernetes exposes the front-end project.

apiVersion: v1
kind: Service
metadata:
  name: nodejs-app
  labels:
    app: nodejs-app
  namespace: swurtmp-harbor
spec:
  type: NodePort
  ports:
    - port: 8666
      name: nodejs-app
      nodePort: 32426
  selector:
    app: nodejs-app
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nodejs-app
  labels:
    app: nodejs-app
  namespace: swurtmp-harbor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nodejs-app
  template:
    metadata:
      labels:
        app: nodejs-app
    spec:
      terminationGracePeriodSeconds: 30
      containers:
        - name: nodejs-app
          # Image pushed by kaniko-ui-nodejs-task to the local Harbor registry
          image: 192.168.20.69/swurtmp/ui:v1.0.1
          imagePullPolicy: "Always"
          ports:
            - containerPort: 8666

④ Define the ServiceAccount

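The ServiceAccount is the identity under which the pipeline accesses Kubernetes resources, and it references the git and image secrets. The file is serviceaccount.yaml, with the following contents:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: swurtmp-harbor-build-bot
  namespace: swurtmp-harbor
secrets:
  - name: swurtmp-git-secret
  - name: image-secret
  - name: regcred
  - name: image-ui-secret

Create regcred. The file /root/.docker/config.json holds, for each registry you have logged in to, the Base64 encoding of username:password. Its contents look like this: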

{
    "auths": {
        "192.168.20.69": {
            "auth": "YWRtaW46SGFyYm9yMTIzNDU="
        },
        "https://index.docker.io/v1/": {
            "auth": "ZG10ZDc4OkRtdGQ4NzA2MTM="
        },
        "hub.sunwin.com": {
            "auth": "YWRtaW46SGFyYm9yMTIzNDU="
        },
        "registry.cn-hangzhou.aliyuncs.com": {
            "auth": "ZGF6bG92ZTEzMTpEbXRkODcwNjEz"
        }
    },
    "HttpHeaders": {
        "User-Agent": "Docker-Client/19.03.13 (linux)"
    }
}

This file is generated automatically by docker when you log in, for example with the following command:

docker login 192.168.20.69

Enter username: admin

password: Harbor12345

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

Seeing Login Succeeded means the login worked. Docker then automatically writes

"192.168.20.69": {
    "auth": "YWRtaW46SGFyYm9yMTIzNDU="
},

to /root/.docker/config.json.
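The auth value is the Base64 encoding of username:password, so it can be reproduced by hand. This is a minimal sketch using the Harbor credentials shown above. registry_auth is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: Base64-encodes "username:password" the way
# docker stores it in the auth field of config.json.
registry_auth() {
    # printf avoids the trailing newline that echo would add.
    printf '%s:%s' "$1" "$2" | base64
}

registry_auth admin Harbor12345
# prints YWRtaW46SGFyYm9yMTIzNDU=
```

Because the value is only encoded, not encrypted, anyone who can read config.json or the regcred secret can recover the password.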

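Use kubectl create secret generic to create the regcred secret, which kaniko uses as its login credential for the local Harbor registry.

kubectl create secret generic regcred --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson -n swurtmp-harbor

Add the role binding. The namespace in --serviceaccount must be the one the ServiceAccount lives in, swurtmp-harbor:

kubectl create clusterrolebinding swurtmp-harbor-build-bot --clusterrole cluster-admin --serviceaccount=swurtmp-harbor:swurtmp-harbor-build-bot

⑤ Create the pipeline resource file, pipeline.yaml, with the following contents: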

apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: swurtmp-pipeline
  namespace: swurtmp-harbor
spec:
  params:
    - name: imageTag
      default: v0.0.2
    - name: kubectl_script
      type: string
  resources:
    - name: swurtmp-git-repo
      type: git
    - name: git-ui-repo
      type: git
    - name: image-provider-repo
      type: image
    - name: image-consumer-repo
      type: image
    - name: image-ui-repo
      type: image
  tasks:
    - name: maven-test-task
      taskRef:
        name: maven-test-task
      resources:
        inputs:
          - name: swurtmp-git-repo
            resource: swurtmp-git-repo
    - name: maven-kaniko-provider-task
      taskRef:
        name: maven-kaniko-provider-task
      params:
        - name: imageTag
          value: $(params.imageTag)
      resources:
        inputs:
          - name: swurtmp-git-repo
            resource: swurtmp-git-repo
        outputs:
          - name: image-provider-repo
            resource: image-provider-repo
    - name: maven-kaniko-consumer-task
      taskRef:
        name: maven-kaniko-consumer-task
      params:
        - name: imageTag
          value: $(params.imageTag)
      resources:
        inputs:
          - name: swurtmp-git-repo
            resource: swurtmp-git-repo
        outputs:
          - name: image-consumer-repo
            resource: image-consumer-repo
    - name: kaniko-ui-nodejs-task
      taskRef:
        name: kaniko-ui-nodejs-task
      resources:
        inputs:
          - name: git-ui-repo
            resource: git-ui-repo
        outputs:
          - name: image-ui-repo
            resource: image-ui-repo
    - name: kubectl-deploy-provider
      taskRef:
        name: kubectl-deploy-provider
      params:
        - name: deploymentFile
          value: provider-deployment-local.yaml
      resources:
        inputs:
          - name: swurtmp-git-repo
            resource: swurtmp-git-repo
          - name: image-provider-repo
            resource: image-provider-repo
            from:
              - maven-kaniko-provider-task
    - name: kubectl-deploy-consumer
      taskRef:
        name: kubectl-deploy-consumer
      params:
        - name: deploymentFile
          value: consumer-deployment-local.yaml
      resources:
        inputs:
          - name: swurtmp-git-repo
            resource: swurtmp-git-repo
          - name: image-consumer-repo
            resource: image-consumer-repo
            from:
              - maven-kaniko-consumer-task
      runAfter:
        - kubectl-deploy-provider
    - name: kubectl-deploy-nodejs
      taskRef:
        name: deploy-nodejs-task
      resources:
        inputs:
          - name: git-ui-repo
            resource: git-ui-repo
      runAfter:
        - kaniko-ui-nodejs-task

⑥ Create the PipelineRun resource file, swurtmp-pipelinerun.yaml, with the following contents.

apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  namespace: swurtmp-harbor
  generateName: swurtmp-pipeline-run-
spec:
  serviceAccountName: swurtmp-harbor-build-bot
  pipelineRef:
    name: swurtmp-pipeline
  params:
    - name: imageTag
      value: latest
    - name: kubectl_script
      value: "kubectl get pod"
  resources:
    - name: swurtmp-git-repo
      resourceRef:
        name: swurtmp-git-resource
    - name: git-ui-repo
      resourceRef:
        name: git-ui-resource
    - name: image-provider-repo
      resourceRef:
        name: provider-image-resource
    - name: image-consumer-repo
      resourceRef:
        name: consumer-image-resource
    - name: image-ui-repo
      resourceRef:
        name: image-ui-resource

⑦ In the project, create the Dockerfile and the provider-deployment.yaml and consumer-deployment.yaml files, with the contents shown below. The deployment file names must match the deploymentFile values passed in pipeline.yaml (provider-deployment-local.yaml and consumer-deployment-local.yaml).

DockerFile

#swurtmp-provider项目根目录下新建Dockerfile内容如下:

FROM openjdk:8-jdk-alpine

RUN cd workspace/swurtmp-git-repo && ls

ADD swurtmp-provider/target/swurtmp-provider-1.0-SNAPSHOT.jar swurtmp-provider-1.0.1-SNAPSHOT.jar

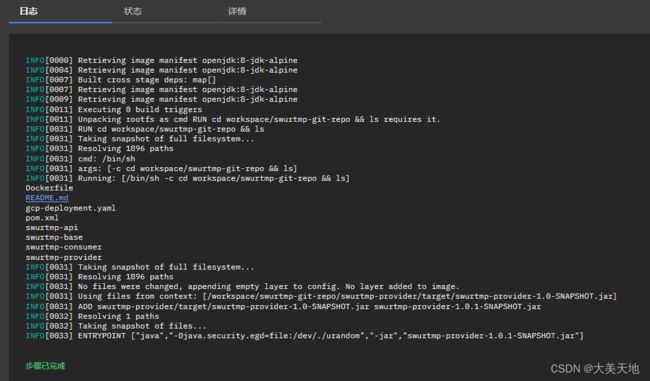

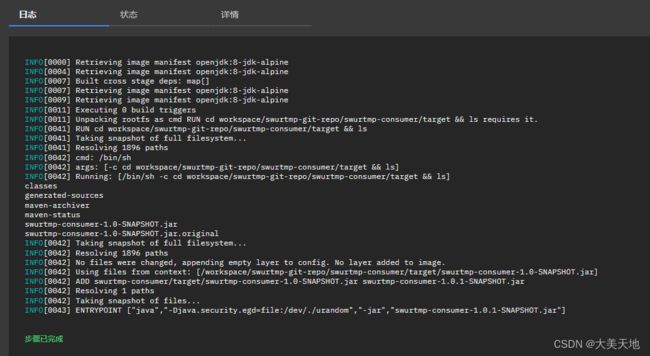

ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","swurtmp-provider-1.0.1-SNAPSHOT.jar"]相对应Tekton页面输出日志如下:

#swurtmp-consumer项目根目录下新建Dockerfile内容如下:

FROM openjdk:8-jdk-alpine

RUN cd workspace/swurtmp-git-repo/swurtmp-consumer/target && ls

ADD swurtmp-consumer/target/swurtmp-consumer-1.0-SNAPSHOT.jar swurtmp-consumer-1.0.1-SNAPSHOT.jar

ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","swurtmp-consumer-1.0.1-SNAPSHOT.jar"]相对应Tekton页面输出日志如下:

consumer-deployment.yaml; the image tag is the version, latest by default.

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: deploy-consumer
  name: deploy-consumer
  namespace: swurtmp-harbor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: deploy-consumer
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: deploy-consumer
    spec:
      containers:
        - image: 192.168.20.69/swurtmp/consumer:latest
          name: deploy-consumer
          resources: {}
          imagePullPolicy: "Always"
          ports:
            - containerPort: 8088

provider-deployment.yaml; the image tag is the version, latest by default.

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: deploy-provider
  name: deploy-provider
  namespace: swurtmp-harbor
spec:
  replicas: 1
  selector:
    matchLabels:
      app: deploy-provider
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: deploy-provider
    spec:
      containers:
        - image: 192.168.20.69/swurtmp/provider:latest
          name: deploy-provider
          resources: {}

Front-end build log

Run the following commands in order:

kubectl apply -f git-resource.yaml

kubectl apply -f git-secret.yaml

kubectl apply -f git-ui-resource.yaml

kubectl apply -f image-provider-resource.yaml

kubectl apply -f image-consumer-resource.yaml

kubectl apply -f image-ui-resource.yaml

kubectl apply -f image-secret.yaml

kubectl apply -f maven-unittest-task.yaml

kubectl apply -f maven-kaniko-consumer-task.yaml

kubectl apply -f maven-kaniko-provider-task.yaml

kubectl apply -f kaniko-ui-task.yaml

kubectl apply -f kubectl-deploy-consumer.yaml

kubectl apply -f kubectl-deploy-provider.yaml

kubectl apply -f kubectl-deploy-nodejs-task.yaml

kubectl apply -f pipeline.yaml

kubectl apply -f serviceaccount.yaml
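The same sequence can be scripted. This sketch assumes that all the files sit in the current directory and that the swurtmp-harbor namespace already exists (this manual does not show its creation). apply_all and the KUBECTL override are hypothetical names.

```shell
#!/bin/sh
# Applies each manifest in order and stops at the first failure.
apply_all() {
    for f in "$@"; do
        "${KUBECTL:-kubectl}" apply -f "$f" || return 1
    done
}

# Usage, following the order listed above:
# apply_all git-resource.yaml git-secret.yaml git-ui-resource.yaml \
#     image-provider-resource.yaml image-consumer-resource.yaml \
#     image-ui-resource.yaml image-secret.yaml maven-unittest-task.yaml \
#     maven-kaniko-consumer-task.yaml maven-kaniko-provider-task.yaml \
#     kaniko-ui-task.yaml kubectl-deploy-consumer.yaml \
#     kubectl-deploy-provider.yaml kubectl-deploy-nodejs-task.yaml \
#     pipeline.yaml serviceaccount.yaml
```

Stopping at the first failure keeps a broken resource from being masked by later successful applies.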

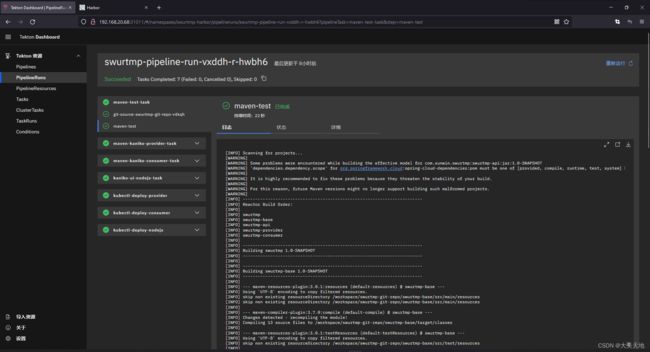

kubectl create -f swurtmp-pipelinerun.yaml最终页面显示结果如图所示:

通过kubectl命令查看,可以看到pod已经部署成功。

kubectl get pod -n swurtmp-harbor -o wide
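Pod status alone does not show whether the pipeline itself finished. The sketch below lists each PipelineRun with the reason of its first status condition (for example Running, Succeeded, or Failed), assuming the Tekton CRDs are installed. pipelinerun_status and the KUBECTL override are hypothetical names.

```shell
#!/bin/sh
# Prints "<pipelinerun name><TAB><reason>" for every PipelineRun
# in the swurtmp-harbor namespace.
pipelinerun_status() {
    "${KUBECTL:-kubectl}" get pipelinerun -n swurtmp-harbor \
        -o 'jsonpath={range .items[*]}{.metadata.name}{"\t"}{.status.conditions[0].reason}{"\n"}{end}'
}

# Usage: pipelinerun_status
```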

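This completes the Tekton build of the project.

Common errors:

dial tcp 208.91.197.27:443: connect: connection refused

Cause: when kaniko accesses the local Harbor registry, it cannot reach the Harbor host name configured in harbor.yml. Fix: change the host name in harbor.yml to the machine's IP address, and change every image push destination to the form ip/xxx/yyy:v1.0.1.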
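To audit push destinations for host names that the build pod may not resolve, the registry host can be split off the image reference. This is a minimal sketch. registry_host and is_ip_host are hypothetical helpers, and the IPv4 test is a simple pattern check, not full validation.

```shell
#!/bin/sh
# Prints the registry host (everything before the first "/").
registry_host() {
    printf '%s\n' "${1%%/*}"
}

# Returns 0 when the host part is a dotted IPv4 address,
# optionally followed by :port.
is_ip_host() {
    registry_host "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}(:[0-9]+)?$'
}

is_ip_host 192.168.20.69/swurtmp/ui:v1.0.1 && echo "destination uses an IP"
```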

References:

Harbor installation: https://github.com/goharbor/harbor/blob/v2.0.2/docs/README.md

kaniko user guide: https://github.com/GoogleContainerTools/kaniko

Tekton documentation: https://tekton.dev/docs/