第十五章 scrapy框架使用

文章目录

- 1. 数据提取

- 2. 数据过滤

- 3. 使用items格式化数据

- 4. 数据存储

-

- 1. 数据存储在csv文件中

- 2. 数据存储到mysql中

- 3. MongoDB的存储

- 4. 文件的存储

1. 数据提取

CSS获取数据

xptah和CSS混合提取数据

web.css(".class_name::text").extract()

2. 数据过滤

# 根据元素属性判断

if web.xpath("./@class") == "class_name":

#根据元素的值判断

value = web.xpath("./div/text()"):

if not value:

3. 使用items格式化数据

格式化数据,便于后期问题定位

定义数据格式

import scrapy

class DirectItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

house_name= scrapy.Field() # 等于字典的key

huxing = scrapy.Field()

mianji = scrapy.Field()

chaoxiang = scrapy.Field()

louceng = scrapy.Field()

zhaungxiu = scrapy.Field()

pass

# 数据的使用

# 导包

from direct.items import DirectItem

class GameSpider(scrapy.Spider):

name = "game"

allowed_domains = ["bj.5i5j.com"]

start_urls = ["https://bj.5i5j.com/zufang/"]

def parse(self, response):

div_lst = house_name = response.xpath("//ul[@class='pList rentList']/li/div[2]")

for i in div_lst:

house_name = i.xpath("./h3/a/text()").extract()

house_msg = i.xpath("./div[1]/p[1]/text()").extract()[0].strip().split(" · ")

val = DirectItem()

val["house_name"]=house_name,

val["huxing"]= house_msg[0],

val["mianji"]=house_msg[1],

val["chaoxiang"]= house_msg[2],

val["louceng"]= house_msg[3],

val["zhaungxiu"]=house_msg[4]

yield val

4. 数据存储

数据存储方案

- 数据存储在csv文件中

- 数据存储在mysql中

- 数据存储在MongoDB中

- 文件的存储

1. 数据存储在csv文件中

# 创建存储类

class CsvSave:

#打开文件

def open_spider(self, spider):

self.f = open("./message.csv",mode="a",encoding="utf-8")

def close_spider(self, spider):

if self.f:

self.f.close()

def process_item(self,item,spider):

self.f.write(f"{item}\n")

return item

# settings中打开管道

ITEM_PIPELINES = {

"direct.pipelines.DirectPipeline": 300,

"direct.pipelines.CsvSave":301

}

2. 数据存储到mysql中

数据存储到mysql

连接数据库

class MysqlSave:

def open_spider(self,spider):

self.con = pymysql.connect(

host='localhost',

port=3306,

user="root",

password="root",

database= "test_day1"

)

def close_spider(self, spider):

if self.con:

self.con.close()

def process_item(self,item,spider):

try:

cursor = self.con.cursor()

sql = "INSERT INTO house_msg(house_name,house_huxing,house_mianji,house_chaoxiang,house_louceng,house_zhuangxiu) VALUES (%s,%s,%s,%s,%s,%s);"

val = (item['house_name'],item['huxing'],item['mianji'],item['chaoxiang'],item['louceng'],item['zhuangxiu'])

cursor.execute(sql,val)

self.con.commit()

except Exception as e:

print(e)

self.con.rollback()

finally:

if cursor:

cursor.close()

return item

数据库定义到settings中

MySql = {

'host':'localhost',

'port':3306,

'user':'root',

'password':'root',

'database':'test_day1'

}

class MysqlSave:

def open_spider(self,spider):

self.con = pymysql.connect(

host=MySql['host'],

port=MySql['port'],

user=MySql['user'],

password=MySql['password'],

database= MySql['database']

)

3. MongoDB的存储

class MongoDBSave:

def open_spider(self,spider):

self.client = pymongo.MongoClient(host="localhost",port=27017)

db = self.client['house']

self.collection = db['house_msg']

def close_spider(self,spider):

self.client.close()

def process_item(self, item, spider):

val = {

'house_name':item['house_name'],

'huxing':item['huxing'],

'mianji':item['mianji'],

'chaoxiang':item['chaoxiang'],

'louceng':item['louceng'],

'zhuangxiu':item['zhuangxiu']

}

self.collection.insert_one(val)

return item

4. 文件的存储

文件会涉及到多个href的请求,可以在spider里,添加多次请求

'''

1.将获取的url与resp中请求的url合并

resp.urljoin(href)

2.callback回调,可以将返回的request返回个callback后面的函数(参数)

'''

class PictureSpider(scrapy.Spider):

name = "picture"

allowed_domains = ["75ll.com"]

start_urls = ["https://www.75ll.com/meinv/"]

# 获取文件的src,并返回给pipline

def parse(self, resp,**kwargs):

src_lst = resp.xpath("//div[@class='channel_list']/ul/div/div/div/div/a/img/@src").extract()

for i in src_lst:

src_dic = TupianItem()

src_dic['src'] = i

yield src_dic

# 翻页,返回请求,回调parser函数

next_href =resp.xpath("//a[@class='next_a']/@href").extract_first()

if next_href:

yield scrapy.Request(

url=next_href,

method='get',

callback=self.parse

)

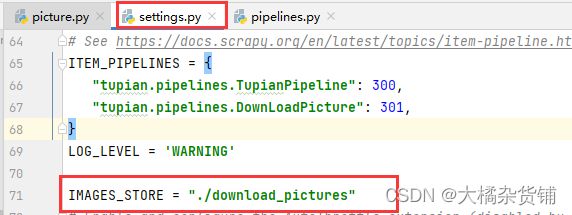

下载图片的pipline

使用图片下载的pipline时,需要单独配置用来保存图片的文件夹

class DownLoadPicture(ImagesPipeline):

# 负责下载,直接返回request请求

def get_media_requests(self, item, info):

# 返回请求

yield scrapy.Request(item['src'])

# 准备文件路径

def file_path(self, request, response=None, info=None, *, item=None):

name = request.url.split('/')[-1]

# 返回文件路径

return f"img/{name}"

# 返回保存的文件的信息

def item_completed(self, results, item, info):

print(results)

判断xpath中是否包含xx文字

# 判断标签文本中是否包含下一页

href = resp.xpath("//div[@class='page']/a[contains(text(),'下一页')]")