Implementing the BM3D Denoising Algorithm

Contents

- Introduction to BM3D

- Parameter selection

- MATLAB implementation

- Python implementation

- C++ implementation

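When I first encountered BM3D I was rather confused. I had been immersed in convolution-style denoising, especially after learning the NLM algorithm, and assumed that BM3D was also a convolution-kernel filter. I later realized it is better described as "block-wise filtering": the image is divided into many image blocks, each block is denoised, and the results are merged back into the full image, with pixels covered by several blocks receiving a weighted average of their estimates. Note that the blocks overlap; this is one of the clever aspects of BM3D. I hope this paragraph helps anyone who is as confused as I was.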
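To make the "block-wise filtering" idea concrete, here is a minimal NumPy sketch of processing overlapping blocks and merging them back. It is a simplification, not BM3D itself: `blockwise_filter` and `block_fn` are hypothetical names, each block is filtered on its own (real BM3D filters a whole group of matched blocks and writes every member back to its position), and the Kaiser window with beta = 2.0 mirrors the `Beta_Kaiser` parameter of the Python code later in this article.

```python
import numpy as np


def blockwise_filter(img, block_size, step, block_fn, beta=2.0):
    """Filter overlapping blocks independently and blend them back.

    block_fn(block) must return (filtered_block, weight). Every estimate is
    accumulated with weight * Kaiser window, and each output pixel is the
    weighted average of all block estimates that cover it.
    """
    h, w = img.shape
    # Block origins every `step` pixels; the last origin is pinned to the
    # border so that every pixel is covered by at least one block.
    rows = list(range(0, h - block_size + 1, step))
    cols = list(range(0, w - block_size + 1, step))
    if rows[-1] != h - block_size:
        rows.append(h - block_size)
    if cols[-1] != w - block_size:
        cols.append(w - block_size)

    kaiser_1d = np.kaiser(block_size, beta)
    window = np.outer(kaiser_1d, kaiser_1d)  # 2-D Kaiser window, all entries > 0
    numerator = np.zeros((h, w))
    denominator = np.zeros((h, w))
    for r in rows:
        for c in cols:
            block = img[r:r + block_size, c:c + block_size].astype(np.float64)
            filtered, weight = block_fn(block)
            numerator[r:r + block_size, c:c + block_size] += weight * window * filtered
            denominator[r:r + block_size, c:c + block_size] += weight * window
    return numerator / denominator


# Usage: a per-block mean filter on a random 8-bit image
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64)).astype(np.float64)
smoothed = blockwise_filter(image, 8, 3, lambda b: (np.full_like(b, b.mean()), 1.0))
```

Because every pixel is covered by several overlapping blocks, its final value is a weighted average of several estimates, which helps suppress blocking artifacts at block boundaries.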

Introduction to BM3D

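BM3D (Block-Matching and 3D filtering) is an image denoising algorithm based on traditional (non-learning) methods, proposed in 2007 by Kostadin Dabov, Alessandro Foi, Vladimir Katkovnik and Karen Egiazarian at Tampere University of Technology, Finland. It delivered state-of-the-art denoising performance when it was published and is still often cited as one of the best non-AI denoisers. BM3D builds on the observation that natural images contain many similar, repeated structures. It collects these structures by block matching, stacks them into groups, and applies an orthogonal transform to each group to obtain a sparse representation. Exploiting both this sparsity and the structural similarity, it filters the groups in the transform domain. As a result, BM3D preserves image structure and detail well and achieves a high signal-to-noise ratio (PSNR).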
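The grouping ("block matching") step can be sketched as follows. This is an illustration under assumptions: `match_blocks` is a hypothetical helper, and its defaults (8×8 blocks, a 39×39 search window, search step 3, distance threshold 2500, at most 16 blocks per group) are copied from the step-1 parameters of the Python code later in this article. Unlike that code, which skips any candidate at distance 0, this sketch skips only the reference position itself, so exact duplicates are still grouped.

```python
import numpy as np


def match_blocks(img, ref_rc, block_size=8, window=39, search_step=3,
                 threshold=2500.0, max_matched=16):
    """Group the blocks most similar to the reference block at ref_rc.

    Distance is the mean squared pixel difference. For an orthonormal 2-D
    DCT (such as cv2.dct) this equals the distance on DCT coefficients
    (Parseval). Returns the positions (reference first) and the stacked
    group of shape (n, block_size, block_size).
    """
    h, w = img.shape
    r0, c0 = ref_rc
    ref = img[r0:r0 + block_size, c0:c0 + block_size].astype(np.float64)
    win_h, win_w = min(window, h), min(window, w)
    # Search window centred on the reference block, shifted back inside the image
    top = min(max(r0 + block_size // 2 - win_h // 2, 0), h - win_h)
    left = min(max(c0 + block_size // 2 - win_w // 2, 0), w - win_w)

    candidates = []
    for r in range(top, top + win_h - block_size + 1, search_step):
        for c in range(left, left + win_w - block_size + 1, search_step):
            if (r, c) == (r0, c0):
                continue  # the reference block is always added first
            blk = img[r:r + block_size, c:c + block_size].astype(np.float64)
            dist = np.sum((blk - ref) ** 2) / block_size ** 2
            if dist < threshold:
                candidates.append((dist, r, c))
    candidates.sort()  # most similar first
    positions = [(r0, c0)] + [(r, c) for _, r, c in candidates[:max_matched - 1]]
    group = np.stack([img[r:r + block_size, c:c + block_size] for r, c in positions])
    return positions, group.astype(np.float64)


# Usage: plant an exact copy of the reference block and check that it is grouped
rng = np.random.default_rng(0)
noisy = rng.uniform(0, 255, size=(48, 48))
noisy[32:40, 32:40] = noisy[20:28, 20:28]
positions, group = match_blocks(noisy, (20, 20))
```

The resulting 3-D group is what the collaborative filtering step transforms and shrinks.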

The BM3D paper is "Image Denoising by Sparse 3-D Transform-Domain Collaborative Filtering". Various extensions have since been developed, such as BM4D. The project page is https://webpages.tuni.fi/foi/GCF-BM3D/.

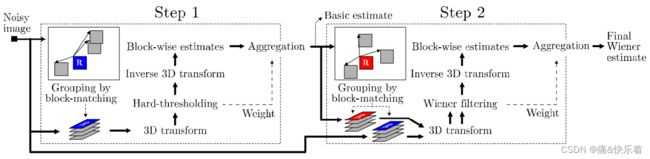

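The algorithm works in two steps: step 1 produces a basic (initial) estimate, and step 2 produces the final estimate. Step 1 shrinks the 3-D transform coefficients of each group by hard thresholding; step 2 regroups the blocks using the basic estimate and applies empirical Wiener filtering. In many cases the result of step 1 already meets the denoising requirements, so whether to run step 2 depends on your needs. Step 1 can also be replaced by another denoising algorithm, since step 2 only needs the noisy image plus a denoised image as input. The references at the end of this article explain the details well, so I will not repeat them here.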
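The two steps differ mainly in how the 3-D transform coefficients of a group are shrunk. The sketch below assumes an orthonormal DCT along all three axes (as the Python code later in this article uses; the paper's defaults use other transforms, such as a 1-D Haar transform along the group dimension), and all function names are hypothetical. Step 1 hard-thresholds at 2.7·sigma (the article's `Threshold_Hard3D`) and weights the group by the inverse of the number of retained coefficients; the paper additionally divides by sigma², which cancels out when sigma is the same for every group. Step 2 applies the empirical Wiener factor |basic|² / (|basic|² + sigma²), computed from the basic estimate, to the noisy group.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ x is the DCT of a length-n vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)
    return c


def transform3d(group):
    """Separable 3-D DCT of a group of shape (n_blocks, b, b)."""
    n, b, _ = group.shape
    cn, cb = dct_matrix(n), dct_matrix(b)
    return np.einsum('ai,bj,ck,ijk->abc', cn, cb, cb, group)


def inverse3d(coeffs):
    """Inverse of transform3d (the DCT matrices are orthonormal, so use C.T)."""
    n, b, _ = coeffs.shape
    cn, cb = dct_matrix(n), dct_matrix(b)
    return np.einsum('ia,jb,kc,ijk->abc', cn, cb, cb, coeffs)


def step1_hard_threshold(group, sigma, lam=2.7):
    """Step 1: zero coefficients below lam*sigma; weight = 1 / (number kept)."""
    coeffs = transform3d(group)
    coeffs[np.abs(coeffs) < lam * sigma] = 0.0
    kept = max(int(np.count_nonzero(coeffs)), 1)
    return inverse3d(coeffs), 1.0 / kept


def step2_wiener(noisy_group, basic_group, sigma):
    """Step 2: empirical Wiener shrinkage, coefficient by coefficient.

    The shrinkage factors come from the basic estimate and are applied to
    the noisy group; weight = 1 / (sigma^2 * ||W||^2) as in the paper.
    """
    basic_coeffs = transform3d(basic_group)
    shrink = basic_coeffs ** 2 / (basic_coeffs ** 2 + sigma ** 2)
    filtered = inverse3d(shrink * transform3d(noisy_group))
    weight = 1.0 / (sigma ** 2 * max(float(np.sum(shrink ** 2)), 1e-12))
    return filtered, weight


# Usage: a perfectly flat group keeps only its DC coefficient in step 1
flat = np.full((16, 8, 8), 50.0)
denoised, group_weight = step1_hard_threshold(flat, sigma=25.0)
```

A group of truly similar blocks concentrates its energy in a few coefficients, so hard thresholding removes mostly noise, and a group with few retained coefficients gets a larger aggregation weight.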

Parameter selection

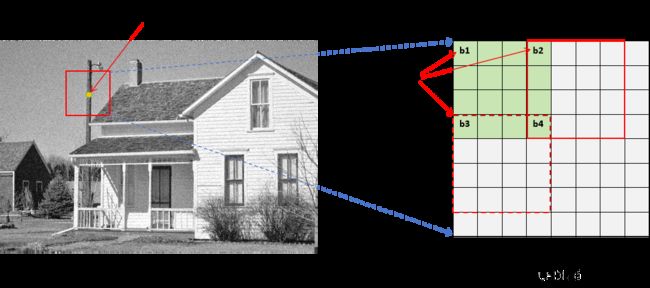

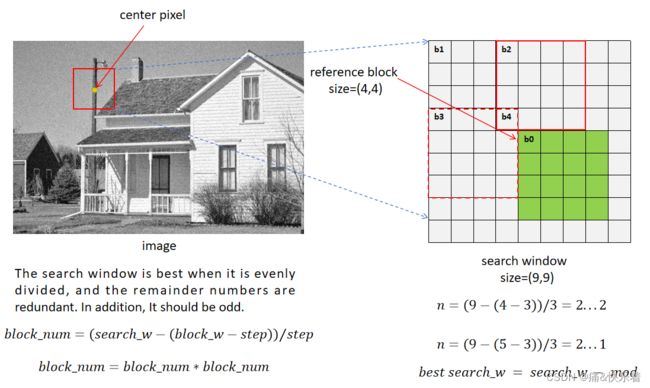

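To study BM3D further, I implemented step 1 in MATLAB and used it to examine how several parameters affect the denoising result. To avoid wasting computation, the search window, block size and step sizes should be chosen sensibly: the number of reference blocks grows roughly with the inverse square of the block step, and the number of candidate blocks per reference block grows roughly with the square of (search window size / search step).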

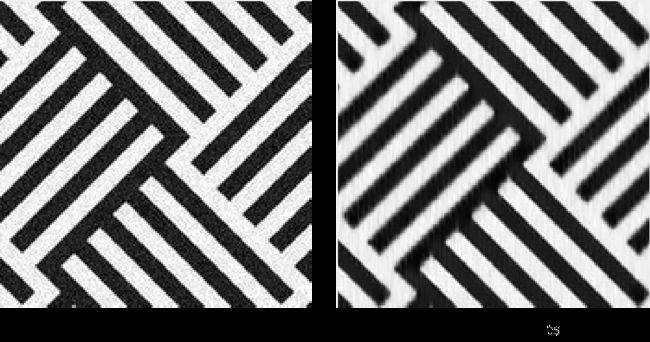

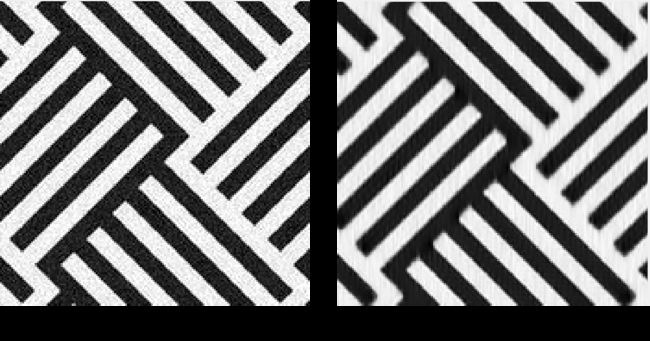
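How strongly these parameters drive the runtime can be estimated by counting. The sketch below (hypothetical helpers `grid_positions` and `matching_cost`, with defaults taken from the step-1 parameters of the Python code in this article) counts reference blocks, candidate blocks per search window, and the pixel differences an exhaustive search evaluates. It ignores the transforms and assumes the last block is pinned to the image border.

```python
def grid_positions(length, block, step):
    """Block origins along one axis: 0, step, 2*step, ... plus the last valid origin."""
    positions = list(range(0, length - block + 1, step))
    if positions[-1] != length - block:
        positions.append(length - block)
    return positions


def matching_cost(height, width, block=8, step=3, window=39, search_step=3):
    """Rough cost of exhaustive block matching in step 1.

    Returns (number of reference blocks, candidates per search window,
    pixel differences evaluated in total).
    """
    n_ref = len(grid_positions(height, block, step)) * len(grid_positions(width, block, step))
    per_axis = len(range(0, window - block + 1, search_step))
    n_cand = per_axis ** 2
    return n_ref, n_cand, n_ref * n_cand * block * block


for params in [dict(step=3, search_step=3), dict(step=1, search_step=1)]:
    print(params, matching_cost(256, 256, **params))
```

For a 256×256 image, going from step 3 to step 1 for both the reference blocks and the search multiplies the matching work by about 74. Note that the Python code in this article uses int((39 - 8) / 3) = 10 candidate positions per axis rather than the 11 counted here, because it drops the last position.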

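I also noticed that the placement of the reference block matters: placing it at the top-left corner of the search window gives a different result from placing it at the center. As the figures show, with the reference block at the top-left corner, 45° stripes are poorly denoised and their edges are over-smoothed, mainly because fewer similar blocks are found.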
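One plausible explanation, sketched here rather than taken from the original experiment: a structure that runs from bottom-left to top-right at 45° is unchanged by a shift (dr, dc) with dr = -dc, so its similar blocks lie up-right or down-left of the reference block. A window whose top-left corner is the reference block only looks down and to the right, so it never visits those positions. The helper names below are hypothetical; block size 8, window 39 and search step 3 are taken from the Python code in this article, and only exact matches on a synthetic diagonal edge are counted.

```python
import numpy as np


def search_window_origin(ref_r, ref_c, block, window, height, width, centered=True):
    """Top-left corner of the search window for a reference block.

    centered=True : the reference block sits in the middle of the window.
    centered=False: the reference block is the window's top-left corner,
                    so only blocks to the right and below are searched.
    """
    if centered:
        r0 = ref_r + block // 2 - window // 2
        c0 = ref_c + block // 2 - window // 2
    else:
        r0, c0 = ref_r, ref_c
    # Shift the window back inside the image if it crosses a border
    r0 = min(max(r0, 0), height - window)
    c0 = min(max(c0, 0), width - window)
    return r0, c0


def count_exact_matches(img, ref_r, ref_c, block=8, window=39, search_step=3, centered=True):
    """Number of searched blocks identical to the reference block (itself included)."""
    h, w = img.shape
    r0, c0 = search_window_origin(ref_r, ref_c, block, window, h, w, centered)
    ref = img[ref_r:ref_r + block, ref_c:ref_c + block]
    count = 0
    for r in range(r0, r0 + window - block + 1, search_step):
        for c in range(c0, c0 + window - block + 1, search_step):
            if np.array_equal(img[r:r + block, c:c + block], ref):
                count += 1
    return count


# A sharp edge running from bottom-left to top-right (constant along r + c)
rr, cc = np.mgrid[0:128, 0:128]
edge = np.where(rr + cc >= 128, 255.0, 0.0)
print(count_exact_matches(edge, 60, 60, centered=True))   # 11
print(count_exact_matches(edge, 60, 60, centered=False))  # 1
```

With the centered window, the reference block finds 10 exact copies of itself along the edge; with the anchored window it finds none. For an edge in the other 45° direction (constant along r - c) both placements would find matches, so the effect depends on the orientation of the structure.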

Finally, the denoising result of step 1 is shown below:

MATLAB implementation

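The official MATLAB code can be downloaded from the project page mentioned above. I also implemented step 1 of BM3D myself to deepen my understanding. Part of that code is shown below (the helper functions Grouping, Step1_3DFilter and Step1_Aggregation are not included):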

function [dst_crop] = BM3D(src_img, enable_step2, sigma)
% BM3D denoising of a grayscale image; only step 1 is implemented.
% Grouping, Step1_3DFilter and Step1_Aggregation are helper functions
% that are not listed in this article.

enable_step1 = 1;
search_window_size = 12;   % search window radius, so each window is 25x25 pixels
search_step = 3;           % stride between search-window centres
block_step = 3;            % block stride used by Grouping
block_size = 8;            % block size (8x8)

% Pad symmetrically so that windows near the border stay inside the image
src_img = padarray(src_img, [search_window_size search_window_size], 'symmetric');
[row, col] = size(src_img);
dst_img = zeros(row, col);     % accumulated block estimates
weight_img = zeros(row, col);  % accumulated aggregation weights

%% Step 1: basic estimate
if enable_step1
    for i = search_window_size+1:search_step:row-search_window_size+1
        for j = search_window_size+1:search_step:col-search_window_size+1
            r1 = i - search_window_size;
            r2 = i + search_window_size;
            c1 = j - search_window_size;
            c2 = j + search_window_size;
            % Skip windows that overrun the border by less than one step
            if r2 > row && r2 - row < search_step
                continue;
            end
            if c2 > col && c2 - col < search_step
                continue;
            end
            % Shift windows that overrun the border by more than one step back inside
            if r2 > row && r2 - row > search_step
                t = r2 - row;
                r1 = r1 - t;
                r2 = r2 - t;
            end
            if c2 > col && c2 - col > search_step
                t = c2 - col;
                c1 = c1 - t;
                c2 = c2 - t;
            end
            temp = src_img(r1:r2, c1:c2);

            % Grouping by block matching
            [similar_block_num, similar_block, similar_position] = Grouping(temp, block_size, block_step, i-search_window_size, j-search_window_size);

            % Debug: draw the matched blocks of one hand-picked window
            if i == 82 && j == 135
                figure
                imshow(src_img, [])
                hold on
                for k = 1:16
                    if similar_position(k,1) > 0
                        rectangle('Position', [similar_position(k,2), similar_position(k,1), block_size, block_size], 'LineWidth', 2, 'EdgeColor', 'g');
                        pause(1);
                    end
                end
                hold off
            end

            % Collaborative filtering (3-D transform + hard thresholding)
            filtered_similar_block = Step1_3DFilter(similar_block, sigma);
            % Aggregation: weighted block estimates for this window
            [basic_img, weight] = Step1_Aggregation(search_window_size*2+1, filtered_similar_block, similar_block_num, similar_position, i-search_window_size, j-search_window_size);
            dst_img(r1:r2, c1:c2) = dst_img(r1:r2, c1:c2) + basic_img;
            weight_img(r1:r2, c1:c2) = weight_img(r1:r2, c1:c2) + weight;
        end
    end
    dst_img = dst_img ./ weight_img;
end

%% Step 2: final estimate (not implemented, outline only)
if enable_step2
    % For each reference block of the basic estimate dst_img:
    %   1. Grouping by block matching on the basic estimate
    %   2. Collaborative filtering -> Wiener filter
    %   3. Aggregation
    warning('BM3D:step2', 'Step 2 is not implemented; returning the basic estimate.');
end

% Remove the padding
dst_crop = dst_img(search_window_size+1:row-search_window_size, search_window_size+1:col-search_window_size);
end

Python implementation

For a Python implementation of BM3D, see https://github.com/liuhuang31/BM3D-Denoise/blob/master/BM3D.py. Just in case, the code is reproduced below, lightly adapted:

# -*- coding: utf-8 -*-
"""
* Simple BM3D implementation, main program
* Created on 2016.9.13
* Author: Huang Liu
"""
import cv2
import numpy

import PSNR

cv2.setUseOptimized(True)

# Parameters initialization
sigma = 25  # assumed standard deviation of the Gaussian noise
Threshold_Hard3D = 2.7*sigma  # Threshold for Hard Thresholding
First_Match_threshold = 2500  # block-distance threshold used for matching in step 1
Step1_max_matched_cnt = 16  # maximum number of blocks in a group
Step1_Blk_Size = 8  # block size, i.e. 8*8
Step1_Blk_Step = 3  # Rather than sliding by one pixel to every next reference block, use a step of Nstep pixels in both horizontal and vertical directions.
Step1_Search_Step = 3  # step between candidate blocks during the search
Step1_Search_Window = 39  # Search for candidate matching blocks in a local neighborhood of restricted size NS*NS centered on the current block

Second_Match_threshold = 400  # block-distance threshold used for matching in step 2
Step2_max_matched_cnt = 32
Step2_Blk_Size = 8
Step2_Blk_Step = 3
Step2_Search_Step = 3
Step2_Search_Window = 39

Beta_Kaiser = 2.0

def init(img, _blk_size, _Beta_Kaiser):
    """Initialization: return arrays for the filtered image and the weights, and build a Kaiser window."""
    m_shape = img.shape
    m_img = numpy.zeros(m_shape, dtype=float)
    m_wight = numpy.zeros(m_shape, dtype=float)
    K = numpy.kaiser(_blk_size, _Beta_Kaiser)
    m_Kaiser = numpy.outer(K, K)  # 2-D Kaiser window
    return m_img, m_wight, m_Kaiser

def Locate_blk(i, j, blk_step, block_Size, width, height):
    '''Keep the current block inside the image.'''
    if i*blk_step+block_Size < width:
        point_x = i*blk_step
    else:
        point_x = width - block_Size
    if j*blk_step+block_Size < height:
        point_y = j*blk_step
    else:
        point_y = height - block_Size
    m_blockPoint = numpy.array((point_x, point_y), dtype=int)  # top-left corner of the current reference block
    return m_blockPoint

def Define_SearchWindow(_noisyImg, _BlockPoint, _WindowSize, Blk_Size):
    """Return (x, y), the top-left corner of the search window."""
    point_x = _BlockPoint[0]  # current coordinates
    point_y = _BlockPoint[1]
    # Corners of the search window, centred on the current block
    LX = point_x+Blk_Size/2-_WindowSize/2  # top-left x
    LY = point_y+Blk_Size/2-_WindowSize/2  # top-left y
    RX = LX+_WindowSize  # bottom-right x
    RY = LY+_WindowSize  # bottom-right y
    # Shift the window back inside the image if it crosses a border
    # (x indexes rows, shape[0]; y indexes columns, shape[1])
    if LX < 0:
        LX = 0
    elif RX > _noisyImg.shape[0]:
        LX = _noisyImg.shape[0]-_WindowSize
    if LY < 0:
        LY = 0
    elif RY > _noisyImg.shape[1]:
        LY = _noisyImg.shape[1]-_WindowSize
    return numpy.array((LX, LY), dtype=int)

def Step1_fast_match(_noisyImg, _BlockPoint):
    """Fast block matching.

    * Return the blocks in the neighbourhood that are most similar to the
      current block; the returned array includes the block itself
    * _noisyImg: noisy image
    * _BlockPoint: coordinates of the current block
    """
    (present_x, present_y) = _BlockPoint  # current coordinates
    Blk_Size = Step1_Blk_Size
    Search_Step = Step1_Search_Step
    Threshold = First_Match_threshold
    max_matched = Step1_max_matched_cnt
    Window_size = Step1_Search_Window

    blk_positions = numpy.zeros((max_matched, 2), dtype=int)  # positions of the similar blocks
    Final_similar_blocks = numpy.zeros((max_matched, Blk_Size, Blk_Size), dtype=float)

    img = _noisyImg[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
    dct_img = cv2.dct(img.astype(numpy.float64))  # 2-D DCT of the reference block
    Final_similar_blocks[0, :, :] = dct_img
    blk_positions[0, :] = _BlockPoint

    Window_location = Define_SearchWindow(_noisyImg, _BlockPoint, Window_size, Blk_Size)
    blk_num = int((Window_size-Blk_Size)/Search_Step)  # candidate blocks per axis
    (present_x, present_y) = Window_location

    similar_blocks = numpy.zeros((blk_num**2, Blk_Size, Blk_Size), dtype=float)
    m_Blkpositions = numpy.zeros((blk_num**2, 2), dtype=int)
    Distances = numpy.zeros(blk_num**2, dtype=float)  # distance of each candidate to the reference block

    # Exhaustive search over the search window; keep the most similar blocks
    matched_cnt = 0
    for i in range(blk_num):
        for j in range(blk_num):
            tem_img = _noisyImg[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
            dct_Tem_img = cv2.dct(tem_img.astype(numpy.float64))
            m_Distance = numpy.linalg.norm((dct_img-dct_Tem_img))**2 / (Blk_Size**2)
            # Distance 0 is skipped, so the reference block (already stored) is not
            # added twice; exact duplicates elsewhere are skipped too
            if m_Distance < Threshold and m_Distance > 0:  # a matching block was found
                similar_blocks[matched_cnt, :, :] = dct_Tem_img
                m_Blkpositions[matched_cnt, :] = (present_x, present_y)
                Distances[matched_cnt] = m_Distance
                matched_cnt += 1
            present_y += Search_Step
        present_x += Search_Step
        present_y = Window_location[1]
    Distances = Distances[:matched_cnt]
    Sort = Distances.argsort()

    # Number of blocks in the group (reference block included)
    if matched_cnt < max_matched:
        Count = matched_cnt + 1
    else:
        Count = max_matched

    if Count > 0:
        for i in range(1, Count):
            Final_similar_blocks[i, :, :] = similar_blocks[Sort[i-1], :, :]
            blk_positions[i, :] = m_Blkpositions[Sort[i-1], :]
    return Final_similar_blocks, blk_positions, Count

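def Step1_3DFiltering(_similar_blocks):
    '''
    * 3-D transform and hard-threshold filtering
    * _similar_blocks: a group of similar blocks, already in the (2-D DCT) frequency domain
    * Each 1-D vector along the third (group) dimension is DCT-transformed,
      hard-thresholded in the frequency domain, and inverse-transformed
    '''
    statis_nonzero = 0  # number of non-zero coefficients
    m_Shape = _similar_blocks.shape
    # This double loop is slow
    for i in range(m_Shape[1]):
        for j in range(m_Shape[2]):
            tem_Vct_Trans = cv2.dct(_similar_blocks[:, i, j])
            tem_Vct_Trans[numpy.abs(tem_Vct_Trans[:]) < Threshold_Hard3D] = 0.
            statis_nonzero += tem_Vct_Trans.nonzero()[0].size
            # cv2.idct returns a 2-D (column) array; flatten it back to 1-D
            _similar_blocks[:, i, j] = cv2.idct(tem_Vct_Trans).ravel()
    return _similar_blocks, statis_nonzero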

def Aggregation_hardthreshold(_similar_blocks, blk_positions, m_basic_img, m_wight_img, _nonzero_num, Count, Kaiser):
    '''
    * Weighted accumulation of the filtered stack into the basic estimate
    * _similar_blocks: a group of similar blocks, in the frequency domain
    * Each block is multiplied by the Kaiser window before accumulation
    '''
    _shape = _similar_blocks.shape
    if _nonzero_num < 1:
        _nonzero_num = 1
    block_wight = (1./_nonzero_num) * Kaiser  # group weight = 1 / (number of non-zero coefficients)
    for i in range(Count):
        point = blk_positions[i, :]
        tem_img = (1./_nonzero_num)*cv2.idct(_similar_blocks[i, :, :]) * Kaiser
        m_basic_img[point[0]:point[0]+_shape[1], point[1]:point[1]+_shape[2]] += tem_img
        m_wight_img[point[0]:point[0]+_shape[1], point[1]:point[1]+_shape[2]] += block_wight

def BM3D_1st_step(_noisyImg):
    """Step 1: basic denoising."""
    # Parameters
    (width, height) = _noisyImg.shape  # number of rows and columns
    block_Size = Step1_Blk_Size  # block size
    blk_step = Step1_Blk_Step  # stride between reference blocks
    Width_num = (width - block_Size)/blk_step
    Height_num = (height - block_Size)/blk_step
    # Accumulators and Kaiser window
    Basic_img, m_Wight, m_Kaiser = init(_noisyImg, Step1_Blk_Size, Beta_Kaiser)
    # Process block by block; the +2 makes sure the image borders are covered
    for i in range(int(Width_num+2)):
        for j in range(int(Height_num+2)):
            # m_blockPoint: top-left corner of the current reference block, kept inside the image
            m_blockPoint = Locate_blk(i, j, blk_step, block_Size, width, height)
            Similar_Blks, Positions, Count = Step1_fast_match(_noisyImg, m_blockPoint)
            Similar_Blks, statis_nonzero = Step1_3DFiltering(Similar_Blks)
            Aggregation_hardthreshold(Similar_Blks, Positions, Basic_img, m_Wight, statis_nonzero, Count, m_Kaiser)
    Basic_img[:, :] /= m_Wight[:, :]
    # astype returns a new array, so the converted result must be assigned;
    # clip to [0, 255] before converting to 8 bits
    basic = numpy.clip(numpy.round(Basic_img), 0, 255).astype(numpy.uint8)
    return basic

def Step2_fast_match(_Basic_img, _noisyImg, _BlockPoint):
    '''
    * Fast block matching: find the blocks in the neighbourhood most similar to the
      current block, returning groups from both the basic estimate and the noisy image
    * _Basic_img: image after basic (step 1) denoising
    * _noisyImg: noisy image
    * _BlockPoint: coordinates of the current block
    '''
    (present_x, present_y) = _BlockPoint  # current coordinates
    Blk_Size = Step2_Blk_Size
    Threshold = Second_Match_threshold
    Search_Step = Step2_Search_Step
    max_matched = Step2_max_matched_cnt
    Window_size = Step2_Search_Window

    blk_positions = numpy.zeros((max_matched, 2), dtype=int)  # positions of the similar blocks
    Final_similar_blocks = numpy.zeros((max_matched, Blk_Size, Blk_Size), dtype=float)
    Final_noisy_blocks = numpy.zeros((max_matched, Blk_Size, Blk_Size), dtype=float)

    img = _Basic_img[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
    dct_img = cv2.dct(img.astype(numpy.float32))  # 2-D DCT of the reference block (basic estimate)
    Final_similar_blocks[0, :, :] = dct_img
    n_img = _noisyImg[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
    dct_n_img = cv2.dct(n_img.astype(numpy.float32))  # 2-D DCT of the reference block (noisy image)
    Final_noisy_blocks[0, :, :] = dct_n_img
    blk_positions[0, :] = _BlockPoint

    Window_location = Define_SearchWindow(_noisyImg, _BlockPoint, Window_size, Blk_Size)
    blk_num = int((Window_size-Blk_Size)/Search_Step)  # candidate blocks per axis
    (present_x, present_y) = Window_location

    similar_blocks = numpy.zeros((blk_num**2, Blk_Size, Blk_Size), dtype=float)
    m_Blkpositions = numpy.zeros((blk_num**2, 2), dtype=int)
    Distances = numpy.zeros(blk_num**2, dtype=float)  # distance of each candidate to the reference block

    # Exhaustive search over the search window; matching is done on the basic estimate
    matched_cnt = 0
    for i in range(blk_num):
        for j in range(blk_num):
            tem_img = _Basic_img[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
            dct_Tem_img = cv2.dct(tem_img.astype(numpy.float32))
            m_Distance = numpy.linalg.norm((dct_img-dct_Tem_img))**2 / (Blk_Size**2)
            # Distance 0 is skipped, so the reference block (already stored) is not added twice
            if m_Distance < Threshold and m_Distance > 0:
                similar_blocks[matched_cnt, :, :] = dct_Tem_img
                m_Blkpositions[matched_cnt, :] = (present_x, present_y)
                Distances[matched_cnt] = m_Distance
                matched_cnt += 1
            present_y += Search_Step
        present_x += Search_Step
        present_y = Window_location[1]
    Distances = Distances[:matched_cnt]
    Sort = Distances.argsort()

    # Number of blocks in the group (reference block included)
    if matched_cnt < max_matched:
        Count = matched_cnt + 1
    else:
        Count = max_matched

    if Count > 0:
        for i in range(1, Count):
            Final_similar_blocks[i, :, :] = similar_blocks[Sort[i-1], :, :]
            blk_positions[i, :] = m_Blkpositions[Sort[i-1], :]
            # Take the noisy block at the same position
            (present_x, present_y) = m_Blkpositions[Sort[i-1], :]
            n_img = _noisyImg[present_x: present_x+Blk_Size, present_y: present_y+Blk_Size]
            Final_noisy_blocks[i, :, :] = cv2.dct(n_img.astype(numpy.float64))
    return Final_similar_blocks, Final_noisy_blocks, blk_positions, Count

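def Step2_3DFiltering(_Similar_Bscs, _Similar_Imgs):
    '''
    * Collaborative filtering with a 3-D Wiener filter
    * _Similar_Bscs: group from the basic estimate, in the frequency domain
    * _Similar_Imgs: matching group from the noisy image, in the frequency domain
    * Each 1-D vector along the third dimension is DCT-transformed, Wiener-filtered
      in the frequency domain, and inverse-transformed
    * The returned Wiener_wight is used later in the aggregation
    '''
    m_Shape = _Similar_Bscs.shape
    Wiener_wight = numpy.zeros((m_Shape[1], m_Shape[2]), dtype=float)
    for i in range(m_Shape[1]):
        for j in range(m_Shape[2]):
            tem_vector = _Similar_Bscs[:, i, j]
            tem_Vct_Trans = cv2.dct(tem_vector).ravel()
            # One shrinkage factor per vector, computed from its total energy
            # (the paper applies the Wiener factor coefficient by coefficient)
            Norm_2 = numpy.float64(numpy.dot(tem_Vct_Trans, tem_Vct_Trans))
            m_weight = Norm_2/(Norm_2 + sigma**2)
            if m_weight != 0:
                Wiener_wight[i, j] = 1./(m_weight**2 * sigma**2)
            # else:
            #     Wiener_wight[i, j] = 10000
            tem_vector = _Similar_Imgs[:, i, j]
            tem_Vct_Trans = m_weight * cv2.dct(tem_vector)
            # cv2.idct returns a 2-D (column) array; flatten it back to 1-D
            _Similar_Bscs[:, i, j] = cv2.idct(tem_Vct_Trans).ravel()
    return _Similar_Bscs, Wiener_wight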

def Aggregation_Wiener(_Similar_Blks, _Wiener_wight, blk_positions, m_basic_img, m_wight_img, Count, Kaiser):
    '''
    * Weighted accumulation of the Wiener-filtered stack into the final estimate
    * _Similar_Blks: a group of similar blocks, in the frequency domain
    * The Kaiser window is commented out below, so it is not applied in this step
    '''
    _shape = _Similar_Blks.shape
    block_wight = _Wiener_wight  # * Kaiser
    for i in range(Count):
        point = blk_positions[i, :]
        tem_img = _Wiener_wight * cv2.idct(_Similar_Blks[i, :, :])  # * Kaiser
        m_basic_img[point[0]:point[0]+_shape[1], point[1]:point[1]+_shape[2]] += tem_img
        m_wight_img[point[0]:point[0]+_shape[1], point[1]:point[1]+_shape[2]] += block_wight

def BM3D_2nd_step(_basicImg, _noisyImg):
    '''Step 2. Final estimate: improved grouping based on the basic estimate, plus collaborative Wiener filtering'''
    # Parameters
    (width, height) = _noisyImg.shape
    block_Size = Step2_Blk_Size
    blk_step = Step2_Blk_Step
    Width_num = (width - block_Size)/blk_step
    Height_num = (height - block_Size)/blk_step
    # Accumulators and Kaiser window
    m_img, m_Wight, m_Kaiser = init(_noisyImg, block_Size, Beta_Kaiser)
    for i in range(int(Width_num+2)):
        for j in range(int(Height_num+2)):
            m_blockPoint = Locate_blk(i, j, blk_step, block_Size, width, height)
            Similar_Blks, Similar_Imgs, Positions, Count = Step2_fast_match(_basicImg, _noisyImg, m_blockPoint)
            Similar_Blks, Wiener_wight = Step2_3DFiltering(Similar_Blks, Similar_Imgs)
            Aggregation_Wiener(Similar_Blks, Wiener_wight, Positions, m_img, m_Wight, Count, m_Kaiser)
    m_img[:, :] /= m_Wight[:, :]
    # astype returns a new array, so the converted result must be assigned
    Final = numpy.clip(numpy.round(m_img), 0, 255).astype(numpy.uint8)
    return Final

if __name__ == '__main__':
    # Many OpenCV functions have optimized versions (SSE2, AVX, etc.) alongside
    # unoptimized code; cv2.setUseOptimized() enables the optimized versions.
    cv2.setUseOptimized(True)
    img_name = "C:/Users/admin/Desktop/BM3D-Denoise/BM3D_test_images/lw3.png"  # input image, treated as the noisy image
    img = cv2.imread(img_name, cv2.IMREAD_GRAYSCALE)  # cv2.IMREAD_GRAYSCALE: read the image in grayscale mode
    if img is None:
        raise FileNotFoundError(img_name)

    # Measure the running time
    e1 = cv2.getTickCount()  # number of clock ticks since a reference point
    Basic_img = BM3D_1st_step(img)
    e2 = cv2.getTickCount()
    time = (e2 - e1) / cv2.getTickFrequency()  # elapsed time in seconds
    print("The Processing time of the First step is %f s" % time)
    cv2.imwrite("Basic3.jpg", Basic_img)
    # PSNR against the noisy input: it shows how much the image changed,
    # not how close the result is to a clean image
    psnr = PSNR.PSNR(img, Basic_img)
    print("The PSNR between the input and the basic estimate is %f" % psnr)

    # Basic_img = cv2.imread("Basic3.jpg", cv2.IMREAD_GRAYSCALE)
    Final_img = BM3D_2nd_step(Basic_img, img)
    e3 = cv2.getTickCount()
    time = (e3 - e2) / cv2.getTickFrequency()
    print("The Processing time of the Second step is %f s" % time)
    cv2.imwrite("Final3.jpg", Final_img)
    psnr = PSNR.PSNR(img, Final_img)
    print("The PSNR between the input and the final estimate is %f" % psnr)

    time = (e3 - e1) / cv2.getTickFrequency()
    print("The total Processing time is %f s" % time)

PSNR.py

import cv2
import math
import numpy


def PSNR(img1, img2):
    # Convert to plain int64 arrays before subtracting: uint8 subtraction would
    # wrap around, and ** on a numpy.matrix would be a matrix product
    D = numpy.asarray(img1, dtype=numpy.int64) - numpy.asarray(img2, dtype=numpy.int64)
    D = D**2
    MSE = D.sum()/img1.size  # mean squared error
    if MSE == 0:
        return float('inf')  # identical images
    psnr = 10*math.log10(float(255.**2)/MSE)
    return psnr


if __name__ == "__main__":
    img1 = cv2.imread("original 2D4F.bmp", cv2.IMREAD_GRAYSCALE)
    img2 = cv2.imread("Basic2.jpg", cv2.IMREAD_GRAYSCALE)
    psnr = PSNR(img1, img2)
    print("The PSNR between the original and the basic estimate is %f" % psnr)

    img1 = cv2.imread("original 2D4F.bmp", cv2.IMREAD_GRAYSCALE)
    img2 = cv2.imread("Final2.jpg", cv2.IMREAD_GRAYSCALE)
    psnr = PSNR(img1, img2)
    print("The PSNR between the original and the final estimate is %f" % psnr)
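Note that the main script of BM3D.py calls PSNR.PSNR(img, ...) with the noisy input as the reference, so that value shows how much the image changed rather than how well it was restored. To measure restoration quality you need a clean image: add synthetic noise with the same sigma = 25 assumed by the script, denoise it, and compare the result with the clean original. A minimal sketch of the noise and measurement parts follows; `add_gaussian_noise` and `psnr` are hypothetical helpers, and this PSNR works in floating point.

```python
import numpy as np


def add_gaussian_noise(clean, sigma, seed=0):
    """Add zero-mean Gaussian noise with standard deviation sigma, then round and clip to 8 bits."""
    rng = np.random.default_rng(seed)
    noisy = clean.astype(np.float64) + rng.normal(0.0, sigma, size=clean.shape)
    return np.clip(np.round(noisy), 0, 255).astype(np.uint8)


def psnr(reference, test, peak=255.0):
    """PSNR in dB; both images are converted to float first, so uint8 inputs cannot wrap around."""
    diff = np.asarray(reference, dtype=np.float64) - np.asarray(test, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float('inf')
    return 10.0 * np.log10(peak ** 2 / mse)


clean = np.full((256, 256), 128, dtype=np.uint8)  # flat mid-gray test image
noisy = add_gaussian_noise(clean, 25.0)
print("PSNR of the noisy image: %.2f dB" % psnr(clean, noisy))
```

For a flat mid-gray image, the noisy version should have a PSNR close to 20·log10(255/25) ≈ 20.2 dB, which is a useful sanity check before comparing denoised outputs.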

Alternatively, you can use the bm3d Python package:

pip install bm3d

C++ implementation

For C++, see the implementation by the author of reference [3]; it works well and the algorithm design is quite clever.

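[1] BM3D explained in plain language

[2] The BM3D image denoising algorithm: principles and code explained

[3] Traditional image denoising algorithms: BM3D principles explained in detail

[4] Detailed analysis of the BM3D algorithm, explained step by step (with C++ implementation code)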