ELK分析k8s应用日志

ELK分析k8s应用日志

-

-

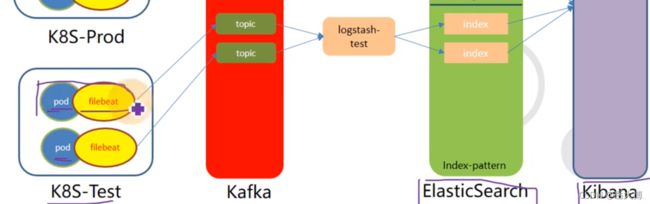

- 日志收集过程

- 架构

- 安装elasticsearch-6.8.6

- 安装kafka

- kafka-manager 安装

- 安装filebeat

-

- 在spinnaker中配置日志收集容器filebeat

- 部署logstash

- kibana部署

-

- kibana使用

-

日志收集过程

收集,能够采集多种来源的日志数据(流式日志收集齐)

传输,能够稳定的吧日志传输到中央系统;ElasticSearch可以通过9200http方式传输,也可以通过

架构

安装elasticsearch-6.8.6

服务部署在21上

src]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.8.6.tar.gz

src]# tar -xf elasticsearch-6.8.6.tar.gz -C /opt/release/

src]# ln -s /opt/release/elasticsearch-6.8.6 /opt/apps/elasticsearch

~]# grep -Ev "^$|^#" /opt/apps/elasticsearch/config/elasticsearch.yml # 调整以下配置

cluster.name: elasticsearch.host.com

path.data: /data/elasticsearch/data

path.logs: /data/elasticsearch/logs

bootstrap.memory_lock: true

network.host: 172.16.0.21

http.port: 9200

~]# vim /opt/apps/elasticsearch/config/jvm.options # 默认1g

......

# 生产中一般不超过32G

-Xms16g

-Xmx16g

~]# useradd -M es

~]# mkdir -p /data/elasticsearch/{logs,data}

~]# chown -R es.es /data/elasticsearch /opt/release/elasticsearch-6.8.6

## 修改es用户的内核配置

~]# vim /etc/security/limits.conf

......

es hard nofile 65536

es soft fsize unlimited

es hard memlock unlimited

es soft memlock unlimited

~]# echo "vm.max_map_count=262144" >> /etc/sysctl.conf ; sysctl -p

# 管理es命令

~]# su es -c "/opt/apps/elasticsearch/bin/elasticsearch -d" # 启动

~]# su es -c "/opt/apps/elasticsearch/bin/elasticsearch -d -p /data/elasticsearch/logs/pid" # 指定pid记录的文件启动es

~]# netstat -lntp | grep 9.00

tcp6 0 0 10.4.7.12:9200 :::* LISTEN 69352/java

tcp6 0 0 10.4.7.12:9300 :::* LISTEN 69352/java

~]# su es -c "ps aux|grep -v grep|grep java|grep elasticsearch|awk '{print \$2}'|xargs kill" # kill Pid

~]# pkill -F /data/elasticsearch/logs/pid

# 添加k8s日志索引模板

~]# curl -H "Content-Type:application/json" -XPUT http://172.16.0.21:9200/_template/k8s -d '{

"template" : "k8s*",

"index_patterns": ["k8s*"],

"settings": {

"number_of_shards": 5,

"number_of_replicas": 0

}

}'

安装kafka

最好不要超过2.2版本,超过之后kafka_manager就不再支持

src]# wget https://archive.apache.org/dist/kafka/2.2.0/kafka_2.12-2.2.0.tgz

src]# tar -xf kafka_2.12-2.2.0.tgz -C /opt/release/

src]# ln -s /opt/release/kafka_2.12-2.2.0 /opt/apps/kafka

~]# vim /opt/apps/kafka/config/server.properties

~]# vim /opt/apps/kafka/config/server.properties

......

log.dirs=/data/kafka/logs

# 超过10000条日志强制刷盘,超过1000ms刷盘

log.flush.interval.messages=10000

log.flush.interval.ms=1000

# 填写需要连接的 zookeeper 集群地址,当前连接本地的 zk 集群。

zookeeper.connect=localhost:2181

# 新增以下两项

delete.topic.enable=true

listeners=PLAINTEXT://172.16.0.21:9092

# 启动kafka之前需要先启动zookeeper

/opt/apps/kafka/bin/zookeeper-server-start.sh -daemon /opt/apps/kafka/config/zookeeper.properties

~]# mkdir -p /data/kafka/logs

~]# /opt/apps/kafka/bin/kafka-server-start.sh -daemon /opt/apps/kafka/config/server.properties

~]# netstat -lntp|grep 121952

tcp6 0 0 10.4.7.11:9092 :::* LISTEN 121952/java

tcp6 0 0 :::41211 :::* LISTEN 121952/java

# 查询kafka的topic是否创建成功

/opt/apps/kafka/bin/kafka-topics.sh --list --zookeeper localhost:2181

kafka-manager 安装

配置dockerfile

kafka-manager 改名为 CMAK,压缩包名称和内部目录名发生了变化,后续安装

# 这里存在几个问题:

# 1. kafka-manager 改名为 CMAK,压缩包名称和内部目录名发生了变化

# 2. sbt 编译需要下载很多依赖,因为不可描述的原因,速度非常慢,个人非VPN网络大概率失败

# 3. 因本人不具备VPN条件,编译失败。又因为第一条,这个dockerfile大概率需要修改

# 4. 生产环境中一定要自己重新做一份!

FROM hseeberger/scala-sbt

ENV ZK_HOSTS=localhost:2181 \

KM_VERSION=2.0.0.2

RUN mkdir -p /tmp && \

cd /tmp && \

wget https://github.com/yahoo/kafka-manager/archive/${KM_VERSION}.tar.gz && \

tar xf ${KM_VERSION}.tar.gz && \

cd /tmp/kafka-manager-${KM_VERSION} && \

sbt clean dist && \

unzip -d / ./target/universal/kafka-manager-${KM_VERSION}.zip && \

rm -fr /tmp/${KM_VERSION} /tmp/kafka-manager-${KM_VERSION}

WORKDIR /kafka-manager-${KM_VERSION}

EXPOSE 9000

ENTRYPOINT ["./bin/kafka-manager","-Dconfig.file=conf/application.conf"]

apiVersion: apps/v1

kind: Deployment

metadata:

name: kafka-manager

namespace: infra

labels:

name: kafka-manager

spec:

replicas: 1

selector:

matchLabels:

app: kafka-manager

template:

metadata:

labels:

app: kafka-manager

spec:

containers:

- name: kafka-manager

image: 172.16.1.10:8900/public/kafka-manager:v2.0.0.2

ports:

- containerPort: 9000

protocol: TCP

env:

- name: ZK_HOSTS

value: zk1.host.com:2181

- name: APPLICATION_SECRET

value: letmein

apiVersion: v1

kind: Service

metadata:

name: kafka-manager

namespace: infra

spec:

ports:

- protocol: TCP

port: 9000

targetPort: 9000

selector:

app: kafka-manager

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: kafka-manager

namespace: infra

spec:

rules:

- host: kafka-manager.host.com

http:

paths:

- path: /

backend:

serviceName: kafka-manager

servicePort: 9000

安装filebeat

日志收集服务使用filebeat,因为传统的ruby收集的日志的方式十分消耗资源;其与业务服务采用边车模式运行;通过挂载卷的方式共享业务容器中的日志

ps:

- 在k8s的yaml文件中定义containers时,同时定义的容器就是以边车模式运行的

- docker的边车模式下两个容器共享了网络名称空间,USER(用户名称空间)和UTS(时间),隔离了IPC(进程空间)和文件系统

注意点:要与elasticsearch版本一致

制作镜像

# windows环境中下载好的压缩包直接ADD到镜像中 https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.4.0-linux-x86_64.tar.gz

FROM debian:jessie

ADD filebeat-7.4.0-linux-x86_64.tar.gz /opt/

RUN set -x && cp /opt/filebeat-*/filebeat /bin && rm -fr /opt/filebeat*

COPY entrypoint.sh /

ENTRYPOINT ["/entrypoint.sh"]

filebeat]# cat docker-entrypoint.sh

#!/bin/bash

ENV=${ENV:-"dev"} # 运行环境

PROJ_NAME=${PROJ_NAME:-"no-define"} # project 名称,关系到topic

MULTILINE=${MULTILINE:-"^\d{2}"} # 多行匹配,根据日志格式来定

KAFKA_ADDR=${KAFKA_ADDR:-'"172.16.0.21:9092"'}

cat > /etc/filebeat.yaml << EOF

filebeat.inputs:

- type: log

fields_under_root: true

fields:

topic: logm-${PROJ_NAME}

paths:

- /logm/*.log

- /logm/*/*.log

- /logm/*/*/*.log

- /logm/*/*/*/*.log

- /logm/*/*/*/*/*.log

scan_frequency: 120s

max_bytes: 10485760

multiline.pattern: '$MULTILINE'

multiline.negate: true

multiline.match: after

multiline.max_lines: 100

- type: log

fields_under_root: true

fields:

topic: logu-${PROJ_NAME}

paths:

- /logu/*.log

- /logu/*/*.log

- /logu/*/*/*.log

- /logu/*/*/*/*.log

- /logu/*/*/*/*/*.log

- /logu/*/*/*/*/*/*.log

output.kafka:

hosts: [${KAFKA_ADDR}]

topic: k8s-fb-$ENV-%{[topic]}

version: 2.0.0

required_acks: 0

max_message_bytes: 10485760

EOF

set -xe

if [[ "$1" == "" ]]; then

exec filebeat -c /etc/filebeat.yaml

else

exec "$@"

fi

docker build -t 172.16.1.10:8900/filebeat:v7.4.0 .创建docker镜像

docker push 172.16.1.10:8900/filebeat:v7.4.0推送镜像到仓库

在spinnaker中配置日志收集容器filebeat

- 配置emptydir类型的存储卷;将/logm下的文件挂载到该配置卷下;将业务容器的日志同样挂载到该存储卷下;

- 配置环境变量,在

filebeat.yaml配置文件中的所有环境变量都可以配置到容器环境变量中,有项目名称$PROJ_NAME,环境名称$ENV,多行匹配正则规则$MULTILINE;

部署logstash

版本要求:与elasticsearch一致

启动方式:开发和生产环境各使用docker run启动一份

部署步骤:

# 下载镜像

~]# docker image pull logstash:6.8.3

~]# docker image tag logstash:6.8.3 172.16.1.10:8900/logstash:6.8.3

~]# docker image push 172.16.1.10:8900/logstash:6.8.3

cat /etc/logstash/logstash-dev.conf

input {

kafka {

bootstrap_servers => "172.16.0.21:9092"

client_id => "172.16.0.21"

consumer_threads => 4

group_id => "k8s_dev"

topics_pattern => "k8s-fb-dev-.*"

}

}

filter {

json {

source => "message"

}

}

output {

elasticsearch {

hosts => ["172.16.0.21:9200"]

index => "k8s-dev-%{+YYYY.MM}"

}

}

# 运行logstash dev环境的日志收集

docker run -d --name logstash-dev -v /etc/logstash:/etc/logstash 172.16.1.10:8900/logstash:v6.8.3 -f /etc/logstash/logstash-dev.conf

kibana部署

~]# docker pull kibana:6.8.3

~]# docker image tag kibana:6.8.3 172.16.1.10:8900/kibana:6.8.3

~]# docker image push 172.16.1.10:8900/kibana:6.8.3

pd控制器资源配置清单 dp.yml文件

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana

namespace: infra

labels:

name: kibana

spec:

replicas: 1

selector:

matchLabels:

name: kibana

template:

metadata:

labels:

app: kibana

name: kibana

spec:

containers:

- name: kibana

image: 172.16.1.10:8900/kibana:6.8.3

ports:

- containerPort: 5601

protocol: TCP

env:

- name: ELASTICSEARCH_URL

value: http://172.16.0.21:9200

service资源配置清单 svc.yml

apiVersion: v1

kind: Service

metadata:

name: kibana

namespace: infra

spec:

ports:

- protocol: TCP

port: 80

targetPort: 5601

selector:

app: kibana

ingress资源配置清单 ingress.yml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: kibana

namespace: infra

spec:

rules:

- host: kibana.host.com

http:

paths:

- path: /

backend:

serviceName: kibana

servicePort: 80

应用资源配置清单

添加kibana.host.com域名到bind9服务

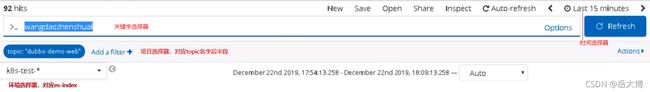

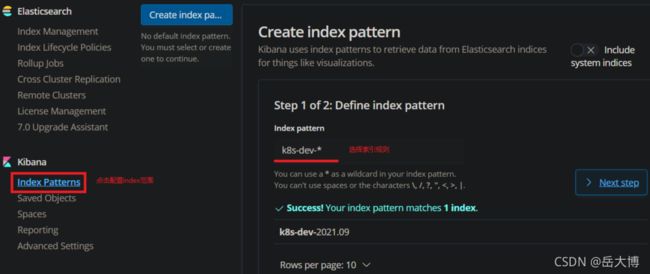

kibana使用

-

初始化kibana,点击Explore on my own按钮;协助优化kibana,选择NO;Monitoring选项中,点击Turn On,开启监控,可以查询es的状态及索引;

-

在Management中,配置index,使用logstash中建立index的规则名称去匹配,之后选择kibana中默认的@timestamp时间戳规则,创建index patterns

-

看日志,选择Discover,选择器的使用

-

配置常用字段

Time, message, log.file.path, hostname -

落盘的日志直接filebeat收集;没有落盘的日志,统一输出重定向

command >> /opt/logs/*.log 2>&1