Scrapy_Study01

Scrapy

scrapy 爬虫框架的爬取流程

scrapy框架各个组件的简介

对于以上四步而言,也就是各个组件,它们之间没有直接的联系,全部都由scrapy引擎来连接传递数据。引擎由scrapy框架已经实现,而需要手动实现一般是spider爬虫和pipeline管道,对于复杂的爬虫项目可以手写downloader和spider 的中间件来满足更复杂的业务需求。

scrapy框架的简单使用

在安装好scrapy第三方库后,通过terminal控制台来直接输入命令

- 创建一个scrapy项目

scrapy startproject myspider

- 生成一个爬虫

scrapy genspider itcast itcast.cn

- 提取数据

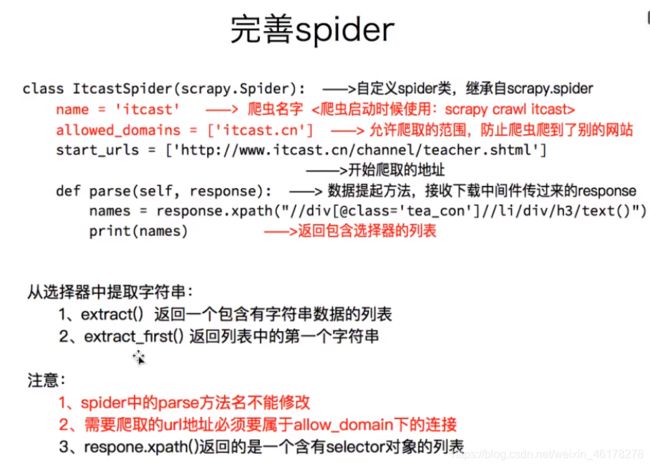

完善spider,使用xpath等

- 保存数据

在pipeline中进行操作

- 启动爬虫

scrapy crawl itcast

scrapy框架使用的简单流程

- 创建scrapy项目,会自动生成一系列的py文件和配置文件

- 创建一个自定义名称,确定爬取域名(可选)的爬虫

- 书写代码完善自定义的爬虫,以实现所需效果

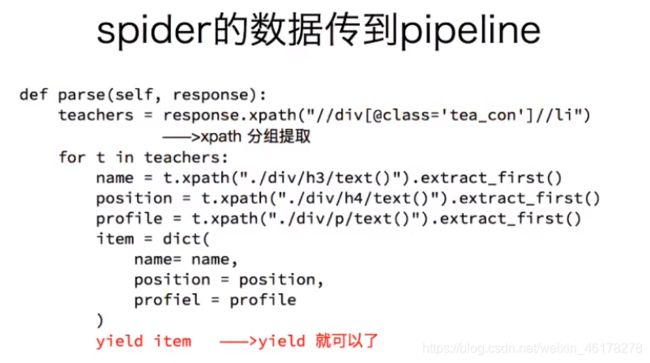

- 使用yield 将解析出的数据传递到pipeline

- 使用pipeline将数据存储(在pipeline中操作数据需要在settings.py中将配置开启,默认是关闭)

- 使用pipeline的几点注意事项

使用logging模块

在scrapy 中

settings中设置LOG_LEVEL = “WARNING”

settings中设置LOG_FILE = “./a.log” # 设置日志文件保存位置及文件名, 同时终端中不会显示日志内容

import logging, 实例化logger的方式在任何文件中使用logger输出内容

在普通项目中

import logging

logging.basicConfig(…) # 设置日志输出的样式, 格式

实例化一个’logger = logging.getLogger(name)’

在任何py文件中调用logger即可

scrapy中实现翻页请求

案例 爬取腾讯招聘

因为现在网站主流趋势是前后分离,直接去get网站只能得到一堆不含数据的html标签,而网页展示出的数据都是由js请求后端接口获取数据然后将数据拼接在html中,所以不能直接访问网站地址,而是通过chrome开发者工具获知网站请求的后端接口地址,然后去请求该地址

通过比对网站请求后端接口的querystring,确定下要请求的url

在腾讯招聘网中,翻页查看招聘信息也是通过请求后端接口实现的,因此翻页爬取实际上就是对后端接口的请求但需要传递不同的querystring

spider 代码

import scrapy

import random

import json

class TencenthrSpider(scrapy.Spider):

name = 'tencenthr'

allowed_domains = ['tencent.com']

start_urls = ['https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1614839354704&parentCategoryId=40001&pageIndex=1&pageSize=10&language=zh-cn&area=cn']

# start_urls = "https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=1614839354704&parentCategoryId=40001&pageIndex=1&pageSize=10&language=zh-cn&area=cn"

def parse(self, response):

# 由于是请求后端接口,所以返回的是json数据,因此获取response对象的text内容,

# 然后转换成dict数据类型便于操作

gr_list = response.text

gr_dict = json.loads(gr_list)

# 因为实现翻页功能就是querystring中的pageIndex的变化,所以获取每次的index,然后下一次的index加一即可

start_url = str(response.request.url)

start_index = int(start_url.find("Index") + 6)

mid_index = int(start_url.find("&", start_index))

num_ = start_url[start_index:mid_index]

# 一般返回的json数据会有共有多少条数据,这里取出

temp = gr_dict["Data"]["Count"]

# 定义一个字典

item = {}

for i in range(10):

# 填充所需数据,通过访问dict 的方式取出数据

item["Id"] = gr_dict["Data"]["Posts"][i]["PostId"]

item["Name"] = gr_dict["Data"]["Posts"][i]["RecruitPostName"]

item["Content"] = gr_dict["Data"]["Posts"][i]["Responsibility"]

item["Url"] = "https://careers.tencent.com/jobdesc.html?postid=" + gr_dict["Data"]["Posts"][i]["PostId"]

# 将item数据交给引擎

yield item

# 下一个url

# 这里确定下一次请求的url,同时url中的timestamp就是一个13位的随机数字

rand_num1 = random.randint(100000, 999999)

rand_num2 = random.randint(1000000, 9999999)

rand_num = str(rand_num1) + str(rand_num2)

# 这里确定pageindex 的数值

nums = int(start_url[start_index:mid_index]) + 1

if nums > int(temp)/10:

pass

else:

nums = str(nums)

next_url = 'https://careers.tencent.com/tencentcareer/api/post/Query?timestamp=' + rand_num + '&parentCategoryId=40001&pageIndex=' + nums +'&pageSize=10&language=zh-cn&area=cn'

# 将 下一次请求的url封装成request对象传递给引擎

yield scrapy.Request(next_url, callback=self.parse)

pipeline 代码

import csv

class TencentPipeline:

def process_item(self, item, spider):

# 将获取到的各个数据 保存到csv文件

with open('./tencent_hr.csv', 'a+', encoding='utf-8') as file:

fieldnames = ['Id', 'Name', 'Content', 'Url']

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writeheader()

print(item)

writer.writerow(item)

return item

补充scrapy.Request

scrapy的item使用

案例 爬取阳光网的问政信息

爬取阳光政务网的信息,通过chrome开发者工具知道网页的数据都是正常填充在html中,所以爬取阳关网就只是正常的解析html标签数据。

但注意的是,因为还需要爬取问政信息详情页的图片等信息,因此在书写spider代码时需要注意parse方法的书写

spider 代码

import scrapy

from yangguang.items import YangguangItem

class YangguanggovSpider(scrapy.Spider):

name = 'yangguanggov'

allowed_domains = ['sun0769.com']

start_urls = ['http://wz.sun0769.com/political/index/politicsNewest?page=1']

def parse(self, response):

start_url = response.url

# 按页分组进行爬取并解析数据

li_list = response.xpath("/html/body/div[2]/div[3]/ul[2]")

for li in li_list:

# 在item中定义的工具类。来承载所需的数据

item = YangguangItem()

item["Id"] = str(li.xpath("./li/span[1]/text()").extract_first())

item["State"] = str(li.xpath("./li/span[2]/text()").extract_first()).replace(" ", "").replace("\n", "")

item["Content"] = str(li.xpath("./li/span[3]/a/text()").extract_first())

item["Time"] = li.xpath("./li/span[5]/text()").extract_first()

item["Link"] = "http://wz.sun0769.com" + str(li.xpath("./li/span[3]/a[1]/@href").extract_first())

# 访问每一条问政信息的详情页,并使用parse_detail方法进行处理

# 借助scrapy的meta 参数将item传递到parse_detail方法中

yield scrapy.Request(

item["Link"],

callback=self.parse_detail,

meta={"item": item}

)

# 请求下一页

start_url_page = int(str(start_url)[str(start_url).find("=")+1:]) + 1

next_url = "http://wz.sun0769.com/political/index/politicsNewest?page=" + str(start_url_page)

yield scrapy.Request(

next_url,

callback=self.parse

)

# 解析详情页的数据

def parse_detail(self, response):

item = response.meta["item"]

item["Content_img"] = response.xpath("/html/body/div[3]/div[2]/div[2]/div[3]/img/@src")

yield item

items 代码

import scrapy

# 在item类中定义所需的字段

class YangguangItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

Id = scrapy.Field()

Link = scrapy.Field()

State = scrapy.Field()

Content = scrapy.Field()

Time = scrapy.Field()

Content_img = scrapy.Field()

pipeline 代码

class YangguangPipeline:

# 简单的打印出所需数据

def process_item(self, item, spider):

print(item)

return item

scrapy的debug信息认识

通过查看scrapy框架打印的debug信息,可以查看scrapy启动顺序,在出现错误时,可以辅助解决成为。

scrapy深入之scrapy shell

通过scrapy shell可以在未启动spider的情况下尝试以及调试代码,在一些不能确定操作的情况下可以先通过shell来验证尝试。

scrapy深入之settings和管道

settings

对scrapy项目的settings文件的介绍:

# Scrapy settings for yangguang project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

# 项目名

BOT_NAME = 'yangguang'

# 爬虫模块所在位置

SPIDER_MODULES = ['yangguang.spiders']

# 新建爬虫所在位置

NEWSPIDER_MODULE = 'yangguang.spiders'

# 输出日志等级

LOG_LEVEL = 'WARNING'

# 设置每次发送请求时携带的headers的user-argent

# Crawl responsibly by identifying yourself (and your website) on the user-agent

# USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.72 Safari/537.36 Edg/89.0.774.45'

# 设置是否遵守 robot协议

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# 设置最大同时请求发出量

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

# 设置每次请求间歇时间

#DOWNLOAD_DELAY = 3

# 一般用处较少

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# cookie是否开启,默认可以开启

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# 控制台组件是否开启

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# 设置默认请求头,user-argent不能同时放置在此处

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# 设置爬虫中间件是否开启

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'yangguang.middlewares.YangguangSpiderMiddleware': 543,

#}

# 设置下载中间件是否开启

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'yangguang.middlewares.YangguangDownloaderMiddleware': 543,

#}

#

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# 设置管道是否开启

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'yangguang.pipelines.YangguangPipeline': 300,

}

# 自动限速相关设置

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# HTTP缓存相关设置

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

管道 pipeline

在管道中不仅只有项目创建时的process_item方法,管道中还有open_spider,close_spider方法等,这两个方法就是分别在爬虫开启时和爬虫结束时执行一次。

举例代码:

class YangguangPipeline:

def process_item(self, item, spider):

print(item)

# 如果不return的话,另一个权重较低的pipeline就不会获取到该item

return item

def open_spider(self, spider):

# 这在爬虫开启时执行一次

spider.test = "hello"

# 为spider添加了一个属性值,之后在pipeline中的process_item或spider中都可以使用该属性值

def close_spider(self, spider):

# 这在爬虫关闭时执行一次

spider.test = ""

mongodb的补充

借助pymongo第三方包来操作

scrapy中的crawlspider爬虫

生成crawlspider的命令:

scrapy genspider -t crawl 爬虫名 要爬取的域名

crawlspider的使用

-

创建爬虫scrapy genspider -t crawl 爬虫名 allow_domain

-

指定start_url, 对应的响应会经过rules提取url地址

-

完善rules, 添加Rule

Rule(LinkExtractor(allow=r’ /web/site0/tab5240/info\d+.htm’), callback=‘parse_ item’),

- 注意点:

url地址不完整, crawlspider会自动补充完整之后在请求

parse函数还不能定义, 他有特殊的功能需要实现

callback: 连接提取器提取出来的url地址对应的响应交给他处理

follow: 连接提取器提取出来的url地址对应的响应是否继续被rules来过滤

LinkExtractors链接提取器:

使用LinkExtractors可以不用程序员自己提取想要的url,然后发送请求。这些工作都可以交给LinkExtractors,他会在所有爬的页面中找到满足规则的url,实现自动的爬取。以下对LinkExtractors类做一个简单的介绍:

class scrapy.linkextractors.LinkExtractor(

allow = (),

deny = (),

allow_domains = (),

deny_domains = (),

deny_extensions = None,

restrict_xpaths = (),

tags = ('a','area'),

attrs = ('href'),

canonicalize = True,

unique = True,

process_value = None

)

主要参数讲解:

- allow:允许的url。所有满足这个正则表达式的url都会被提取。

- deny:禁止的url。所有满足这个正则表达式的url都不会被提取。

- allow_domains:允许的域名。只有在这个里面指定的域名的url才会被提取。

- deny_domains:禁止的域名。所有在这个里面指定的域名的url都不会被提取。

- restrict_xpaths:严格的xpath。和allow共同过滤链接。

Rule规则类:

定义爬虫的规则类。以下对这个类做一个简单的介绍:

class scrapy.spiders.Rule(

link_extractor,

callback = None,

cb_kwargs = None,

follow = None,

process_links = None,

process_request = None

)

主要参数讲解:

- link_extractor:一个

LinkExtractor对象,用于定义爬取规则。 - callback:满足这个规则的url,应该要执行哪个回调函数。因为

CrawlSpider使用了parse作为回调函数,因此不要覆盖parse作为回调函数自己的回调函数。 - follow:指定根据该规则从response中提取的链接是否需要跟进。

- process_links:从link_extractor中获取到链接后会传递给这个函数,用来过滤不需要爬取的链接。

案例 爬取笑话大全网站

分析xiaohua.zolcom.cn 可以得知, 网页的数据是直接嵌在HTML中, 请求网站域名, 服务器直接返回的html标签包含了网页内可见的全部信息. 所以直接对服务器响应的html标签进行解析.

同时翻页爬取数据时,也发现下页的url 已被嵌在html中, 因此借助crawlspider可以非常方便的提取出下一页url.

spider 代码:

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

import re

class XhzolSpider(CrawlSpider):

name = 'xhzol'

allowed_domains = ['xiaohua.zol.com.cn']

start_urls = ['http://xiaohua.zol.com.cn/lengxiaohua/1.html']

rules = (

# 这里定义从相应中提取符合该正则的url地址,并且可以自动补全, callpack指明哪一个处理函数来处理响应, follow表示从响应中提取出的符合正则的url 是否要继续进行请求

Rule(LinkExtractor(allow=r'/lengxiaohua/\d+\.html'), callback='parse_item', follow=True),

)

def parse_item(self, response):

item = {}

# item["title"] = response.xpath("/html/body/div[6]/div[1]/ul/li[1]/span/a/text()").extract_first()

# print(re.findall("(.*?)", response.body.decode("gb18030"), re.S))

# 这里按正则搜索笑话的标题

for i in re.findall(r'(.*?)', response.body.decode("gb18030"), re.S):

item["titles"] = i

yield item

return item

pipeline 代码:

class XiaohuaPipeline:

def process_item(self, item, spider):

print(item)

return item

简单的打印来查看运行结果

案例 爬取中国银监会网站的处罚信息

分析网页信息得知,网页的具体数据信息都是网页通过发送Ajax请求,请求后端接口获取到json数据,然后通过js动态的将数据嵌在html中,渲染出来。所以不能直接去请求网站域名,而是去请求后端的api接口。并且通过比对翻页时请求的后端api接口的变化,确定翻页时下页的url。

spider 代码:

import scrapy

import re

import json

class CbircSpider(scrapy.Spider):

name = 'cbirc'

allowed_domains = ['cbirc.gov.cn']

start_urls = ['https://www.cbirc.gov.cn/']

def parse(self, response):

start_url = "http://www.cbirc.gov.cn/cbircweb/DocInfo/SelectDocByItemIdAndChild?itemId=4113&pageSize=18&pageIndex=1"

yield scrapy.Request(

start_url,

callback=self.parse1

)

def parse1(self, response):

# 数据处理

json_data = response.body.decode()

json_data = json.loads(json_data)

for i in json_data["data"]["rows"]:

item = {}

item["doc_name"] = i["docSubtitle"]

item["doc_id"] = i["docId"]

item["doc_time"] = i["builddate"]

item["doc_detail"] = "http://www.cbirc.gov.cn/cn/view/pages/ItemDetail.html?docId=" + str(i["docId"]) + "&itemId=4113&generaltype=" + str(i["generaltype"])

yield item

# 翻页, 确定下一页的url

str_url = response.request.url

page = re.findall(r'.*?pageIndex=(\d+)', str_url, re.S)[0]

mid_url = str(str_url).strip(str(page))

page = int(page) + 1

# 请求的url变化就是 page 的增加

if page <= 24:

next_url = mid_url + str(page)

yield scrapy.Request(

next_url,

callback=self.parse1

)

pipeline 代码:

import csv

class CircplusPipeline:

def process_item(self, item, spider):

with open('./circ_gb.csv', 'a+', encoding='gb2312') as file:

fieldnames = ['doc_id', 'doc_name', 'doc_time', 'doc_detail']

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writerow(item)

return item

def open_spider(self, spider):

with open('./circ_gb.csv', 'a+', encoding='gb2312') as file:

fieldnames = ['doc_id', 'doc_name', 'doc_time', 'doc_detail']

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writeheader()

将数据保存在csv文件中

下载中间件

学习download middleware的使用,下载中间件用于初步处理将调度器发送给下载器的request url 或 初步处理下载器请求后获取的response

同时还有process_exception 方法用于处理当中间件程序抛出异常时进行的异常处理。

下载中间件的简单使用

自定义中间件的类,在类中定义process的三个方法,方法中书写实现代码。注意要在settings中开启,将类进行注册。

代码尝试:

import random

# useful for handling different item types with a single interface

from itemadapter import is_item, ItemAdapter

class RandomUserArgentMiddleware:

# 处理请求

def process_request(self, request, spider):

ua = random.choice(spider.settings.get("USER_ARENT_LIST"))

request.headers["User-Agent"] = ua[0]

class SelectRequestUserAgent:

# 处理响应

def process_response(self, request, response, spider):

print(request.headers["User=Agent"])

# 需要返回一个response(通过引擎将response交给spider)或request(通过引擎将request交给调度器)或none

return response

class HandleMiddlewareEcxeption:

# 处理异常

def process_exception(self, request, exception, spider):

print(exception)

settings 代码:

DOWNLOADER_MIDDLEWARES = {

'suningbook.middlewares.RandomUserArgentMiddleware': 543,

'suningbook.middlewares.SelectRequestUserAgent': 544,

'suningbook.middlewares.HandleMiddlewareEcxeption': 544,

}

scrapy 模拟登录

scrapy 携带cookie登录

在scrapy中, start_url不会经过allowed_domains的过滤, 是一定会被请求, 查看scrapy 的源码, 请求start_url就是由start_requests方法操作的, 因此通过自己重写start_requests方法可以为请求start_url 携带上cookie信息等, 实现模拟登录等功能.

通过重写start_requests 方法,为我们的请求携带上cookie信息,来实现模拟登录功能。

补充知识点:

scrapy中 cookie信息是默认开启的,所以默认请求下是直接使用cookie的。可以通过开启COOKIE_DEBUG = True 可以查看到详细的cookie在函数中的传递。

案例 携带cookie模拟登录人人网

通过重写start_requests方法,为请求携带上cookie信息,去访问需要登录后才能访问的页面,获取信息。模拟实现模拟登录的功能。

import scrapy

import re

class LoginSpider(scrapy.Spider):

name = 'login'

allowed_domains = ['renren.com']

start_urls = ['http://renren.com/975252058/profile']

# 重写方法

def start_requests(self):

# 添加上cookie信息,这之后的请求中都会携带上该cookie信息

cookies = "anonymid=klx1odv08szk4j; depovince=GW; _r01_=1; taihe_bi_sdk_uid=17f803e81753a44fe40be7ad8032071b; taihe_bi_sdk_session=089db9062fdfdbd57b2da32e92cad1c2; ick_login=666a6c12-9cd1-433b-9ad7-97f4a595768d; _de=49A204BB9E35C5367A7153C3102580586DEBB8C2103DE356; t=c433fa35a370d4d8e662f1fb4ea7c8838; societyguester=c433fa35a370d4d8e662f1fb4ea7c8838; id=975252058; xnsid=fadc519c; jebecookies=db5f9239-9800-4e50-9fc5-eaac2c445206|||||; JSESSIONID=abcb9nQkVmO0MekR6ifGx; ver=7.0; loginfrom=null; wp_fold=0"

cookie = {i.split("=")[0]:i.split("=")[1] for i in cookies.split("; ")}

yield scrapy.Request(

self.start_urls[0],

callback=self.parse,

cookies=cookie

)

# 打印用户名,验证是否模拟登录成功

def parse(self, response):

print(re.findall("该用户尚未开", response.body.decode(), re.S))

scrapy模拟登录之发送post请求

借助scrapy提供的FromRequest对象发送Post请求,并且可以设置fromdata,headers,cookies等参数。

案例 scrapy模拟登录github

模拟登录GitHub,访问github.com/login, 获取from参数, 再去请求/session 验证账号密码,最后登录成功

spider 代码:

import scrapy

import re

import random

class GithubSpider(scrapy.Spider):

name = 'github'

allowed_domains = ['github.com']

start_urls = ['https://github.com/login']

def parse(self, response):

# 先从login 页面的响应中获取出authenticity_token和commit,在请求登录是必需

authenticity_token = response.xpath("//*[@id='login']/div[4]/form/input[1]/@value").extract_first()

rand_num1 = random.randint(100000, 999999)

rand_num2 = random.randint(1000000, 9999999)

rand_num = str(rand_num1) + str(rand_num2)

commit = response.xpath("//*[@id='login']/div[4]/form/div/input[12]/@value").extract_first()

form_data = dict(

commit=commit,

authenticity_token=authenticity_token,

login="[email protected]",

password="tcc062556",

timestamp=rand_num,

# rusted_device="",

)

# form_data["webauthn-support"] = ""

# form_data["webauthn-iuvpaa-support"] = ""

# form_data["return_to"] = ""

# form_data["allow_signup"] = ""

# form_data["client_id"] = ""

# form_data["integration"] = ""

# form_data["required_field_b292"] = ""

headers = {

"referer": "https://github.com/login",

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

'accept-language': 'zh-CN,zh;q=0.9',

'accept-encoding': 'gzip, deflate, br',

'origin': 'https://github.com'

}

# 借助fromrequest 发送post请求,进行登录

yield scrapy.FormRequest.from_response(

response,

formdata=form_data,

headers=headers,

callback=self.login_data

)

def login_data(self, response):

# 打印用户名验证是否登录成功

print(re.findall("xiangshiersheng", response.body.decode()))

# 保存成本地html 文件

with open('./github.html', 'a+', encoding='utf-8') as f:

f.write(response.body.decode())

总结:

模拟登录三种方式:

1. 携带cookie登录

使用scrapy.Request(url, callback=, cookies={})

将cookies填入,在请求url时会携带cookie去请求。

2. 使用FormRequest

scrapy.FromRequest(url, formdata={}, callback=)

formdata 就是请求体, 在formdata中填入要提交的表单数据

3. 借助from_response

scrapy.FromRequest.from_response(response, formdata={}, callback=)

from_response 会自动从响应中搜索到表单提交的地址(如果存在表单及提交地址)

知识的简单总结

crawlspider 如何使用

- 创建爬虫 scrapy genspider -t crawl spidername allow_domain

- 完善爬虫

- start_url

- 完善rules

- 元组

- Rule(LinkExtractor, callback, follow)

- LinkExtractor 连接提取器, 提取url

- callback url的响应会交给该callback处理

- follow = True url的响应会继续被Rule提取地址

- 完善 callback, 处理数据

下载中间件如何使用

- 定义类

- process_request 处理请求, 不需要return

- process_response 处理响应, 需要return request response

- settings中开启

scrapy如何模拟登录

- 携带cookie登录

- 准备cookie字典

- scrapy.Request(url, callba, cookies=cookies_dict)

- scrapy.FromRequest(post_url, formdata={}, callback)

- scrapy.FromRequest.from_response(response, formdata={}, callback)

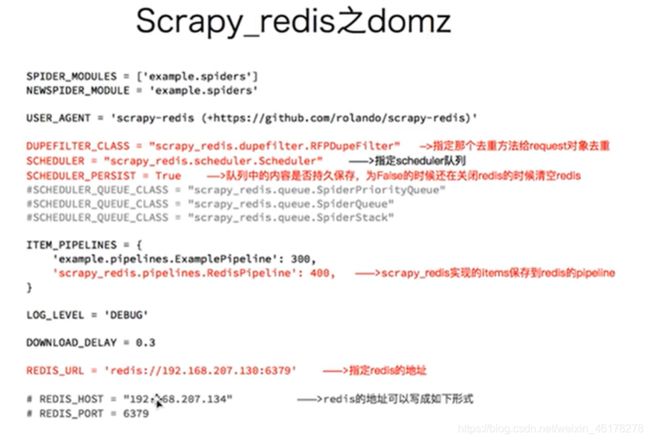

scrapy_redis 的学习

Scrapy 是一个通用的爬虫框架,但是不支持分布式,Scrapy-redis是为了更方便地实现Scrapy分布式爬取,而提供了一些以redis为基础的组件(仅有组件)。

scrapy_redis 的爬取流程

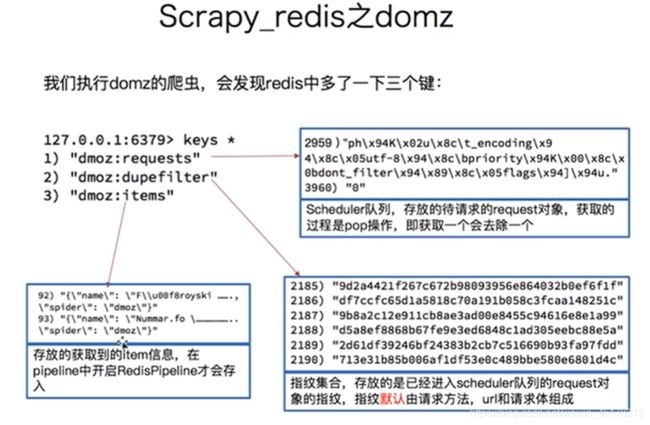

相比scrapy的工作流程,scrapy-redis就只是多了redis的一部分,并且调度器的request是从redis中读取出的,而且spider爬取过程中获取到的url也会经过调度器判重和调度再由spider爬取。最会spider返回的item会被存储到redis中。

Scrapy-redis提供了下面四种组件(基于redis)

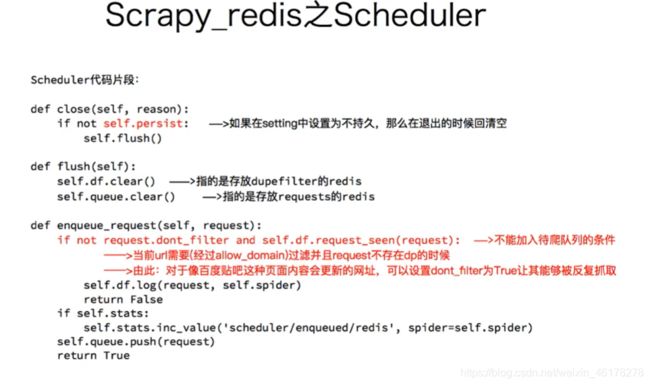

Scheduler:

Scrapy改造了python本来的collection.deque(双向队列)形成了自己的Scrapy queue(https://github.com/scrapy/queuelib/blob/master/queuelib/queue.py)),但是Scrapy多个spider不能共享待爬取队列Scrapy queue, 即Scrapy本身不支持爬虫分布式,scrapy-redis 的解决是把这个Scrapy queue换成redis数据库(也是指redis队列),从同一个redis-server存放要爬取的request,便能让多个spider去同一个数据库里读取。

Scrapy中跟“待爬队列”直接相关的就是调度器Scheduler,它负责对新的request进行入列操作(加入Scrapy queue),取出下一个要爬取的request(从Scrapy queue中取出)等操作。它把待爬队列按照优先级建立了一个字典结构,比如:

{

优先级0 : 队列0

优先级1 : 队列1

优先级2 : 队列2

}

然后根据request中的优先级,来决定该入哪个队列,出列时则按优先级较小的优先出列。为了管理这个比较高级的队列字典,Scheduler需要提供一系列的方法。但是原来的Scheduler已经无法使用,所以使用Scrapy-redis的scheduler组件。

Duplication Filter:

Scrapy中用集合实现这个request去重功能,Scrapy中把已经发送的request指纹放入到一个集合中,把下一个request的指纹拿到集合中比对,如果该指纹存在于集合中,说明这个request发送过了,如果没有则继续操作。这个核心的判重功能是这样实现的:

def request_seen(self, request):

# self.figerprints就是一个指纹集合

fp = self.request_fingerprint(request)

# 这就是判重的核心操作

if fp in self.fingerprints:

return True

self.fingerprints.add(fp)

if self.file:

self.file.write(fp + os.linesep)

在scrapy-redis中去重是由Duplication Filter组件来实现的,它通过redis的set 不重复的特性,巧妙的实现了Duplication Filter去重。scrapy-redis调度器从引擎接受request,将request的指纹存⼊redis的set检查是否重复,并将不重复的request push写⼊redis的 request queue。

引擎请求request(Spider发出的)时,调度器从redis的request queue队列⾥里根据优先级pop 出⼀个request 返回给引擎,引擎将此request发给spider处理。

Item Pipeline:

引擎将(Spider返回的)爬取到的Item给Item Pipeline,scrapy-redis 的Item Pipeline将爬取到的 Item 存⼊redis的 items queue。

修改过Item Pipeline可以很方便的根据 key 从 items queue 提取item,从⽽实现 items processes集群。

Base Spider:

不再使用scrapy原有的Spider类,重写的RedisSpider继承了Spider和RedisMixin这两个类,RedisMixin是用来从redis读取url的类。

当我们生成一个Spider继承RedisSpider时,调用setup_redis函数,这个函数会去连接redis数据库,然后会设置signals(信号):

- 一个是当spider空闲时候的signal,会调用spider_idle函数,这个函数调用schedule_next_request函数,保证spider是一直活着的状态,并且抛出DontCloseSpider异常。

- 一个是当抓到一个item时的signal,会调用item_scraped函数,这个函数会调用schedule_next_request函数,获取下一个request。

当下载scrapy-redis后会自带一个demo程序,如下

domz spider 代码:

同普通的crawlspider项目相比,主要差距在parse处理响应上.

尝试在settings中关闭redispipeline,观察redis中三个键的变化情况

scrapy-redis的源码解析

scrapy-redis重写的 scrapy本身的request去重功能,DUperFilter。

相比scrapy的pipeline, scrapy-redis只是将item 存储在redis中

重点补充:

request对象什么时候入队

-

dont_filter = True, 构造请求的时候, 把dont_filter置为True, 该url会被反复抓取(url地址对应的内容会更新的情况)

-

一个全新的url地址被抓到的时候, 构造request请求

-

url地址在start_urls中的时候, 会入队, 不管之前是否请求过构造start_urls 地址的时请求时候,dont_filter = True

scrapy-redis 入队源码

def enqueue_request(self, request):

if not request.dont_filter and self.df.request_seen(request):

# dont_filter = False self.df.request_seen = True 此时不会入队,因为request指纹已经存在

# dont_filter = False self.df.request_seen = False 此时会入队,因为此时是全新的url

self.df.log(request, self.spider)

return False

if self.stats:

self.stats.inc_value('scheduler/enqueued/redis', spider=self.spider)

self.queue.push(request) # 入队

return True

scrapy-redis去重方法

**

- 使用sha1加密request得到指纹

- 把指纹存在redis的集合中

- 下一次新来一个request, 同样的方式生成指纹, 判断指纹是否存在redis的集合中

生成指纹

fp = hashlib.sha1()

fp.update(to_bytes(request.method))

fp.update(to_byte(canonicalize_url(request.url)))

fp.update(request.body or b'')

return fp.hexdigest()

判断数据是否存在redis的集合中, 不存在插入

added = self.server.sadd(self.key, fp)

return added != 0

练习 爬取百度贴吧

spider 代码:

处理正确响应后获取到的信息,多使用正则,因为贴吧就算是获取到正确响应 页面内的html元素都是被注释起来,在渲染网页时由js处理,因此xpath等手段无法使用。

import scrapy

from copy import deepcopy

from tieba.pipelines import HandleChromeDriver

import re

class TiebaspiderSpider(scrapy.Spider):

name = 'tiebaspider'

allowed_domains = ['tieba.baidu.com']

start_urls = ['https://tieba.baidu.com/index.html']

def start_requests(self):

item = {}

# cookie1 = "BAIDUID_BFESS = 9250B568D2AF5E8D7C24501FD8947F10:FG=1; BDRCVFR[feWj1Vr5u3D]=I67x6TjHwwYf0; ZD_ENTRY=baidu; BA_HECTOR=0l2ha48g00ah842l6k1g46ooh0r; H_PS_PSSID=33518_33358_33272_31660_33595_33393_26350; delPer=0; PSINO=5; NO_UNAME=1; BIDUPSID=233AE38C1766688048F6AA80C4F0D56C; PSTM=1614821745; BAIDUID=233AE38C176668807122431B232D9927:FG=1; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598"

# cookie2 = {i.split("=")[0]: i.split("=")[1] for i in cookie1.split("; ")}

cookies = self.parse_cookie()

print(cookies)

# print(cookies)

headers = {

'Cache-Control': 'no-cache',

'Host': 'tieba.baidu.com',

'Pragma': 'no-cache',

'sec-ch-ua': '"Google Chrome";v = "89", "Chromium";v = "89", ";Not A Brand"; v = "99"',

'sec-ch-ua-mobile': '?0',

'Sec-Fetch-Dest': 'document',

'Sec-Fetch-Mode': 'navigate',

'Sec-Fetch-Site': 'none',

'Sec-Fetch-User': '?1',

'Upgrade-Insecure-Requests': 1

}

yield scrapy.Request(

'https://tieba.baidu.com/index.html',

cookies=cookies,

callback=self.parse,

headers=headers,

meta={"item": item}

)

print("ok")

def parse(self, response):

# 处理首页页面

if str(response.url).find("captcha") != -1:

HandleChromeDriver.handle_tuxing_captcha(url=str(response.url))

print(response.url)

print(response.status)

item = response.meta["item"]

grouping_list = response.xpath("//*[@id='f-d-w']/div/div/div")

for i in grouping_list:

group_link = "https://tieba.baidu.com" + i.xpath("./a/@href").extract_first()

group_name = i.xpath("./a/@title").extract_first()

item["group_link"] = group_link

item["group_name"] = group_name

if group_name is not None:

yield scrapy.Request(

group_link,

callback=self.parse_detail,

meta={"item": deepcopy(item)}

)

print("parse")

def parse_detail(self, response):

# 处理分组页面

detail_data = response.body.decode()

if str(response.url).find("captcha") != -1:

detail_data = HandleChromeDriver.handle_tuxing_captcha(url=str(response.url))

print(response.url)

print(response.status)

detail_list_link = re.findall(

'.*?',

detail_data, re.S)

print(detail_list_link)

detail_list_name = re.findall(

'.*?(.*?)

', detail_data, re.S)

item = response.meta["item"]

for i in range(len(detail_list_link)):

detail_link = "https://tieba.baidu.com" + detail_list_link[i]

detail_name = detail_list_name[i]

item["detail_link"] = detail_link

item["detail_name"] = detail_name

yield scrapy.Request(

detail_link,

callback=self.parse_data,

meta={"item": deepcopy(item)}

)

start_parse_url = response.url[:str(response.url).find("pn=") + 3]

start_parse_body = response.body.decode()

last_parse_page = re.findall('下一页>.*?.*?', start_parse_body, re.S)[0]

page_parse_num = re.findall('(\d+)', start_parse_body, re.S)[0]

page_parse_num = int(page_parse_num) + 1

end_parse_url = start_parse_url + str(page_parse_num)

if page_parse_num <= int(last_parse_page):

yield scrapy.Request(

end_parse_url,

callback=self.parse_detail,

meta={"item": deepcopy(item)}

)

print("parse_detail")

def parse_data(self, response):

body_data = response.body.decode()

if str(response.url).find("captcha") != -1:

body_data = HandleChromeDriver.handle_tuxing_captcha(url=str(response.url))

print(response.url)

print(response.status)

# print(response.body.decode())

data_name = re.findall('(.*?)',

body_data, re.S)

# print(data_name)

data_link = re.findall(

'.*?.*?',

body_data, re.S)

# print(data_link)

# data_list = response.xpath('//*[@id="thread_list"]/li//div[@class="threadlist_title pull_left j_th_tit "]/a')

# print(data_list.extract_first())

item = response.meta["item"]

for i in range(len(data_link)):

item["data_link"] = "https://tieba.baidu.com" + data_link[i]

item["data_name"] = data_name[i]

yield item

temp_url_find = str(response.url).find("pn=")

if temp_url_find == -1:

start_detail_url = response.url + "&ie=utf-8&pn="

else:

start_detail_url = str(response.url)[:temp_url_find + 3]

start_detail_body = response.body.decode()

last_detail_page = re.findall('下一页>.*?尾页', start_detail_body, re.S)[0]

page_detail_num = re.findall('(.*?)', start_detail_body, re.S)[0]

page_detail_num = int(page_detail_num) * 50

end_detail_url = start_detail_url + str(page_detail_num)

print(end_detail_url)

if page_detail_num <= int(last_detail_page):

yield scrapy.Request(

end_detail_url,

callback=self.parse_data,

meta={"item": deepcopy(item)}

)

print("parse_data")

def parse_data1(self, response):

pass

def parse_cookie(self):

lis = []

lst_end = {}

lis_link = ["BAIDUID", "PSTM", "BIDUPSID", "__yjs_duid", "BDORZ", "BDUSS", "BAIDUID_BFESS", "H_PS_PSSID",

"bdshare_firstime", "BDUSS_BFESS", "NO_UNAME", "tb_as_data", "STOKEN", "st_data",

"Hm_lvt_287705c8d9e2073d13275b18dbd746dc", "Hm_lvt_98b9d8c2fd6608d564bf2ac2ae642948", "st_key_id",

"ab_sr", "st_sign"]

with open("./cookie.txt", "r+", encoding="utf-8") as f:

s = f.read()

t = s.strip("[").strip("]").replace("'", "")

while True:

num = t.find("}, ")

if num != -1:

lis.append({i.split(": ")[0]: i.split(": ")[1] for i in t[:num].strip("{").split(", ")})

t = t.replace(t[:num + 3], "")

else:

break

cookie1 = "BAIDUID_BFESS = 9250B568D2AF5E8D7C24501FD8947F10:FG=1; BDRCVFR[feWj1Vr5u3D] = I67x6TjHwwYf0; ZD_ENTRY = baidu; BA_HECTOR = 0l2ha48g00ah842l6k1g46ooh0r; H_PS_PSSID = 33518_33358_33272_31660_33595_33393_26350; delPe r= 0; PSINO = 5; NO_UNAME = 1; BIDUPSID = 233AE38C1766688048F6AA80C4F0D56C; PSTM = 1614821745; BAIDUID = 233AE38C176668807122431B232D9927:FG=1; BDORZ = B490B5EBF6F3CD402E515D22BCDA1598"

cookie2 = {i.split(" = ")[0]: i.split(" = ")[-1] for i in cookie1.split("; ")}

for i in lis_link:

for j in lis:

if j["name"] == i:

lst_end[i] = j["value"]

for z in cookie2:

if i == z:

lst_end[i] = cookie2[i]

return lst_end

pipeline 代码:

这里主要是数据的存储,存在csv文件内。以及一个工具类, 带有两个静态方法,一个用于处理自动登录贴吧以获取到完整且正确的cookie信息,以便之后的请求携带,能得到正确的响应信息,一个用于处理爬虫在爬取时遇到贴吧的检测图形验证码(该验证码,人都不是很容易通过。。。)

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

from selenium import webdriver

import time

import csv

class TiebaPipeline:

def process_item(self, item, spider):

with open('./tieba.csv', 'a+', encoding='utf-8') as file:

fieldnames = ['group_link', 'group_name', 'detail_link', 'detail_name', 'data_link', 'data_name']

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writerow(item)

return item

def open_spider(self, spider):

with open('./tieba.csv', 'w+', encoding='utf-8') as file:

fieldnames = ['group_link', 'group_name', 'detail_link', 'detail_name', 'data_link', 'data_name']

writer = csv.DictWriter(file, fieldnames=fieldnames)

writer.writeheader()

HandleChromeDriver.handle_cookie(url="http://tieba.baidu.com/f/user/passport?jumpUrl=http://tieba.baidu.com")

class HandleChromeDriver:

@staticmethod

def handle_cookie(url):

driver = webdriver.Chrome("E:\python_study\spider\data\chromedriver_win32\chromedriver.exe")

driver.implicitly_wait(2)

driver.get(url)

driver.implicitly_wait(2)

login_pwd = driver.find_element_by_xpath('//*[@id="TANGRAM__PSP_4__footerULoginBtn"]')

login_pwd.click()

username = driver.find_element_by_xpath('//*[@id="TANGRAM__PSP_4__userName"]')

pwd = driver.find_element_by_xpath('//*[@id="TANGRAM__PSP_4__password"]')

login_btn = driver.find_element_by_xpath('//*[@id="TANGRAM__PSP_4__submit"]')

time.sleep(1)

username.send_keys("18657589370")

time.sleep(1)

pwd.send_keys("tcc062556")

time.sleep(1)

login_btn.click()

time.sleep(15)

tb_cookie = str(driver.get_cookies())

with open("./cookie.txt", "w+", encoding="utf-8") as f:

f.write(tb_cookie)

# print(tb_cookie)

driver.close()

@staticmethod

def handle_tuxing_captcha(url):

drivers = webdriver.Chrome("E:\python_study\spider\data\chromedriver_win32\chromedriver.exe")

drivers.implicitly_wait(2)

drivers.get(url)

drivers.implicitly_wait(2)

time.sleep(10)

drivers.close()

# print(tb_cookie)

return drivers.page_source

settings 代码:

这里主要设置一些请求头得到信息以及在每次请求时间歇两秒

BOT_NAME = 'tieba'

SPIDER_MODULES = ['tieba.spiders']

NEWSPIDER_MODULE = 'tieba.spiders'

LOG_LEVEL = 'WARNING'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.72 Safari/537.36 Edg/89.0.774.45'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

DOWNLOAD_DELAY = 2

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

DEFAULT_REQUEST_HEADERS = {

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',

'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8',

'Connection': 'keep-alive',

}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'tieba.pipelines.TiebaPipeline': 300,

}