alertmanager——webhook与API

alertmanager简介与部署

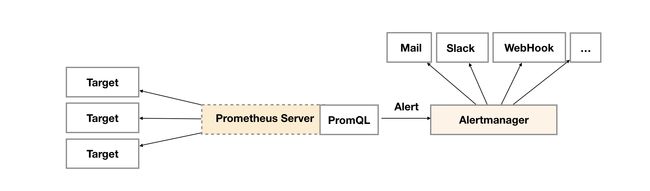

alertmanager简介

prometheus整个监控系统中,prometheus只负责将数据采集和生成告警信息,而告警信息的处理是由Alertmanager负责处理。

在Prometheus中定义好告警规则后,Prometheus会周期性的对告警规则进行计算,如果满足告警触发条件就会向Alertmanager发送告警信息。

Alertmanager负责接收并处理来自Prometheus Server的告警信息。对这些告警信息进行进一步的处理,比如当接收到大量重复告警时能够消除重复的告警信息,同时对告警信息进行分组并且路由到正确的通知方,Prometheus内置了对邮件、Slack、webhook等多种通知方式的支持,同时AlertManager还提供了静默和告警抑制机制来对告警通知行为进行优化。

关于alertmanager详细介绍可参考以下文章

- 《Alertmanager——基础入门》:https://www.cuiliangblog.cn/detail/article/34

- 《Alertmanager——配置详解》:https://www.cuiliangblog.cn/detail/article/35

alertmanager部署

为方便后续演示与程序调用alertmanager API,此处以docker方式部署为例演示,并将告警路由时间参数调至最小。

# 创建目录与默认配置文件

➜ mkdir alertmanager

➜ cd alertmanager

➜ cat alertmanager.yml

route:

group_by: ['alertname']

group_wait: 1s

group_interval: 1s

repeat_interval: 1h

receiver: 'webhook'

receivers:

- name: 'webhook'

webhook_configs:

- url: 'http://192.168.8.20:5001'

# 运行docker容器

➜ docker run -d -p 9093:9093 --name alertmanager -v $PWD/alertmanager.yml:/etc/alertmanager/alertmanager.yml -v $PWD/data:/alertmanager prom/alertmanager:latest

也可以在k8s中部署Alertmanager,详细内容参考以下文章

《thanos高可用prometheus集群部署》:https://www.cuiliangblog.cn/detail/article/30

Alertmanager webhook

使用场景

- 自定义告警媒介:虽然Alertmanager内置了对邮件、Slack、PagerDuty、OpsGenie、VictorOps、telegram等工具的通知集成,但是对于国内环境,大家使用最多的还是企业微信、钉钉、飞书、公有云短信电话等方式,所以要想实现自定义告警媒介通知,就需要使用webhook功能,开发自定义程序实现。

- 告警历史记录:Alertmanager UI界面只显示当前激活的告警,对于已恢复的历史告警记录,就无法从Alertmanager中查询到。可以通过webhook每次收到告警通知时做记录,写入数据库或ES中,最后通过grafana制作 dashboard可以从多个标签纬度分析历史告警记录,突显出运维工作的高频故障与薄弱环节,为后续运维工作优化提供参考。

- 告警通知升级:Alertmanager目前只提供了repeat_inteval参数对于未恢复的告警超过指定时间重复通知,如果想实现告警事件默认短信通知,超过2小时未处理改为电话通知,就可以通过webhook来实现。

webhook配置

回到alertmanager.yaml配置,默认的配置中使用的就是webhook

route:

group_by: ['alertname']

group_wait: 1s

group_interval: 1s

repeat_interval: 1h

receiver: 'web.hook' # 接收组名,与下面的receivers组名对应

receivers:

- name: 'web.hook'

webhook_configs:

- url: 'http://127.0.0.1:5001/' # webhook地址

webhook程序

此webhook的功能就是接收Alertmanager推送的告警事件,解析获取内容,格式化数据后调用告警媒介发送通知。

from flask import Flask, request

from log import logger

from datetime import datetime, timedelta

app = Flask(__name__)

def send_content(content, team):

"""

对接其他告警媒介发送内容

:param content:发送内容

:param team:通知组

:return:

"""

logger.info("开始发送告警,发送内容%s发送组%s" % (content, team))

@app.route('/', methods=["POST"])

def index():

"""

对接alert manager,解析告警内容,推送至自定义告警媒介

:return: success

"""

req = request.json

logger.info("接收到告警事件通知,内容为:%s" % req)

for alert in req['alerts']:

status = ''

if alert['status'] == 'firing':

status = "告警触发"

elif alert['status'] == 'resolved':

status = "告警恢复"

else:

pass

job = alert['labels']['job']

team = alert['labels']['team']

severity = alert['labels']['severity']

description = alert['annotations']['description']

name = alert['labels']['alertname']

time_obj = datetime.strptime(alert['startsAt'][:19], '%Y-%m-%dT%H:%M:%S') + timedelta(hours=8)

time = datetime.strftime(time_obj, '%Y-%m-%d %H:%M:%S')

content = "========={0}=========\n" \

"告警名称:{1}\n" \

"告警类型:{2}\n" \

"告警级别:{3}\n" \

"告警小组:{4}\n" \

"告警时间:{5}\n" \

"告警内容:{6}".format(status, name, job, severity, team, time, description)

send_content(content, team)

return "success!"

@app.route('/health')

def healthy():

return 'ok'

if __name__ == '__main__':

app.run()

告警数据样例

运行服务后,通过控制台打印的内容可知,告警触发时,Alertmanager推送的数据内容如下:

{

"receiver": "webhook",

"status": "firing",

"alerts": [

{

"status": "firing",

"labels": {

"alertname": "ServicePortUnavailable",

"group": "elasticsearch",

"instance": "192.168.10.55:9200",

"job": "blackbox_exporter_tcp",

"severity": "warning",

"team": "elk"

},

"annotations": {

"description": "elasticsearch 192.168.10.55:9200 service port is unavailable",

"summary": "service port unavailable",

"value": "192.168.10.55:9200"

},

"startsAt": "2023-07-08T09:16:01.979669601Z",

"endsAt": "0001-01-01T00:00:00Z",

"generatorURL": "/graph?g0.expr=probe_success%7Binstance%3D~%22%28%5C%5Cd%2B.%29%7B4%7D%5C%5Cd%2B%22%7D+%3D%3D+0&g0.tab=1",

"fingerprint": "1e43318d4e7834f1"

}

],

"groupLabels": {

"alertname": "ServicePortUnavailable"

},

"commonLabels": {

"alertname": "ServicePortUnavailable",

"group": "elasticsearch",

"instance": "192.168.10.55:9200",

"job": "blackbox_exporter_tcp",

"severity": "warning",

"team": "elk"

},

"commonAnnotations": {

"description": "elasticsearch 192.168.10.55:9200 service port is unavailable",

"summary": "service port unavailable",

"value": "192.168.10.55:9200"

},

"truncatedAlerts": 0

}

告警恢复时,推送的数据格式内容如下:

{

"receiver": "webhook",

"status": "resolved",

"alerts": [

{

"status": "resolved",

"labels": {

"alertname": "ServicePortUnavailable",

"group": "elasticsearch",

"instance": "192.168.10.55:9200",

"job": "blackbox_exporter_tcp",

"severity": "warning",

"team": "elk"

},

"annotations": {

"description": "elasticsearch 192.168.10.55:9200 service port is unavailable",

"summary": "service port unavailable",

"value": "192.168.10.55:9200"

},

"startsAt": "2023-07-08T09:16:31.979669601Z",

"endsAt": "2023-07-08T09:17:31.979669601Z",

"generatorURL": "/graph?g0.expr=probe_success%7Binstance%3D~%22%28%5C%5Cd%2B.%29%7B4%7D%5C%5Cd%2B%22%7D+%3D%3D+0&g0.tab=1",

"fingerprint": "fdc02ded56786bca"

}

],

"groupLabels": {

"alertname": "ServicePortUnavailable"

},

"commonLabels": {

"alertname": "ServicePortUnavailable",

"group": "elasticsearch",

"instance": "192.168.10.55:9200",

"job": "blackbox_exporter_tcp",

"severity": "warning",

"team": "elk"

},

"commonAnnotations": {

"description": "elasticsearch 192.168.10.55:9200 service port is unavailable",

"summary": "service port unavailable",

"value": "192.168.10.55:9200"

},

"externalURL": "http://alertmanager-55b94ccc7d-7psb2:9093",

"truncatedAlerts": 0

}

Alertmanager API

接口文档

官方地址:https://github.com/prometheus/alertmanager/blob/main/api/v2/openapi.yaml

apifox地址:https://apifox.com/apidoc/shared-d39e7f21-9992-4d0e-9ab8-65aa169d6be5

接口概述

由接口文档可知,Alertmanager的API接口主要分为以下几类

/status:获取Alertmanager实例及其集群的当前状态

/receivers:获取所有接收者的列表(通知集成的名称)

/silence:新增、删除告警静默规则

/alerts:查询、新增告警事件

/alerts/groups:获取警报组列表

接下来我们选取几个常用的接口演示使用

新增告警事件

使用场景

例如现在有一个定时任务备份脚本,如果备份脚本执行失败时,我们希望收到告警推送通知。如果单独对这个脚本开发exporter或者部署pushgateway推送指标就显得小题大做了,而且我们不需要在Prometheus中存储历史数据,此时就可以调用Alertmanager的API接口,完成告警事件推送。

请求格式

请求方式:POST

请求地址:/api/v2/alerts

请求示例:请求内容是一个数组,里面是多个的告警内容。其中只有labels是必填参数,其他都是可选。时间参数默认值为当前时间的整时,如果传参时间格式必须是标准UTC时间。

[

{

"labels": {"label": "value", ...},

"annotations": {"label": "value", ...},

"generatorURL": "string", # 可选

"startsAt": "2023-01-01T00:00:00.00Z", # 可选

"endsAt": "2023-01-01T00:00:00.00Z" # 可选

},

...

]

请求示例

请求内容如下:

[

{

"startsAt": "2023-07-07T07:07:07.00Z",

"labels": {

"alertname": "NodeStatusDown",

"job": "node-exporter",

"severity": "warning",

"team": "server"

},

"annotations": {

"description": "192.168.10.2 host down more than 5 minutes",

"summary": "node status down",

"value": "192.168.10.2"

}

}

]

使用curl命令请求

curl -X POST -H 'content-type:application/json' -d '[{"startsAt":"2023-07-07T07:07:07.00Z","labels":{"alertname":"NodeStatusDown","job":"node-exporter","severity":"warning","team":"server"},"annotations":{"description":"192.168.10.2 host down more than 5 minutes","summary":"node status down","value":"192.168.10.2"}}]' 127.0.0.1:9093/api/v2/alerts

查看Alertmanager UI,已经收到了告警事件

如果想要主动推送告警恢复通知,只需要传入一个历史的endsAt时间即可

curl -X POST -H 'content-type:application/json' -d '["startsAt":"2023-07-07T07:07:07.00Z","endsAt":"2023-07-07T08:08:08.00Z","labels":{"alertname":"NodeStatusDown","job":"node-exporter","severity":"warning","team":"server"},"annotations":{"description":"192.168.10.2 host down more than 5 minutes","summary":"node status down","value":"192.168.10.2"}}]' 127.0.0.1:9093/api/v2/alerts

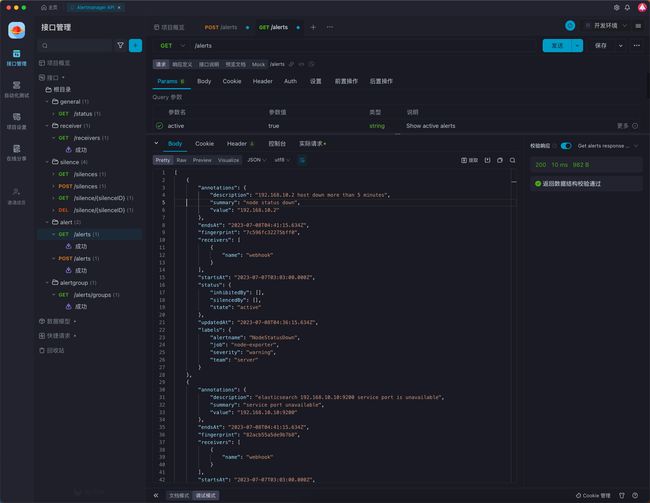

查询告警事件

使用场景

获取当前激活状态的告警列表,可以传多个参数过滤匹配。可以在每天下班前查询所有未处理的告警,推送事件广播,提示大家及时处理告警事件。

请求格式

请求方式:GET

请求地址:/api/v2/alerts

请求参数

| 参数名 | 类型 | 说明 | 是否必填 |

|---|---|---|---|

| active | string | 查询激活的告警 | 否 |

| silenced | string | 查询静默的告警 | 否 |

| inhibited | string | 查询抑制的告警 | 否 |

| unprocessed | string | 查询未处理的告警 | 否 |

| filter | array | 按指定标签查询告警 | 否 |

| receiver | string | 查询告警接收组 | 否 |

请求示例

- 获取所有激活的告警

curl http://127.0.0.1:9093/api/v2/alerts?active=true

- 获取team为server,且状态为激活的告警

curl http://127.0.0.1:9093/api/v2/alerts?active=true&filter=team=%22server%22

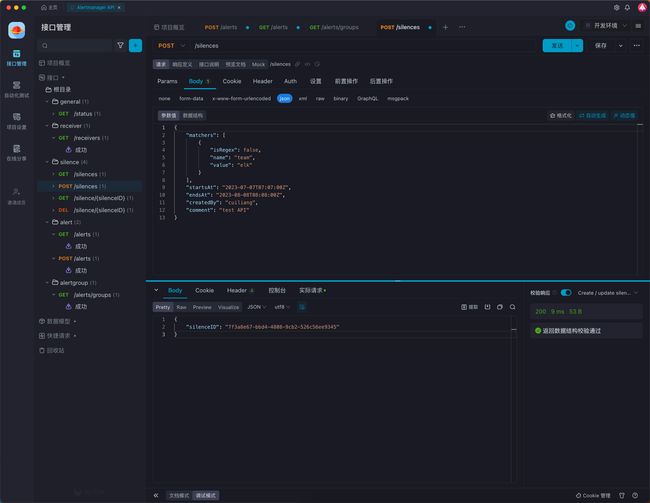

新增告警静默规则

使用场景

某些自动化场景下,例如批量依次重启服务器升级内核版本,在此期间就会收到大量的告警事件。可是在执行自动化脚本第一步时通过请求API接口自动添加告警静默规则,待脚本执行完成后再自动取消静默规则。

或者在告警事件平台与工单系统集成后,某些告警故障无法立即解决,例如服务器硬件故障等待工程师处理时,就可以在工单系统点击暂缓处理按钮,调用Alertmanager API时间告警事件的临时静默。

请求格式

请求方式:POST

请求地址:/api/v2/silences

请求参数:

{

"id": "string", # 可选

"matchers": [ # 必填,需要静默的告警规则标签

{

"name": "string", # 标签键

"value": "string", # 标签值

"isRegex": true, # 是否为正则表达式

"isEqual": true # 可选,默认true

}

],

"startsAt": "2019-08-24T14:15:22Z", # 必填,静默生效开始时间

"endsAt": "2019-08-24T14:15:22Z", # 必填,静默失效时间

"createdBy": "string", # 必填,创建者

"comment": "string" # 必填,创建说明备注

}

请求示例

请求内容如下:

{

"matchers": [

{

"isRegex": false,

"name": "team",

"value": "elk"

}

],

"startsAt": "2023-07-07T07:07:00Z",

"endsAt": "2023-08-08T08:08:00Z",

"createdBy": "cuiliang",

"comment": "test API"

}

通过API请求工具请求接口数据,返回成功状态码

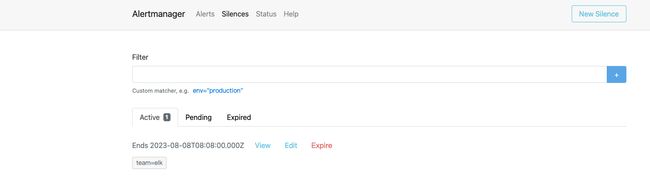

接下来查看Alertmanager UI中的静默信息,发现已经成功添加静默规则

查询与删除告警静默规则

我们通过调用API接口,创建了两条告警静默规则,并将team=elk的静默规则设置为失效状态,效果如下:

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-at9bE8Hu-1688959175595)(https://cdn.nlark.com/yuque/0/2023/png/2308212/1688793549802-fae4ca4a-9a4a-40fe-bfb3-8c8bb92195f5.png#averageHue=%23fcfcfc&clientId=u1a3aa41c-9a04-4&from=paste&height=407&id=ubd9efd5b&originHeight=407&originWidth=1495&originalType=binary&ratio=1&rotation=0&showTitle=false&size=30516&status=done&style=none&taskId=u39413554-81d0-492f-97e5-b64e27111f8&title=&width=1495)]

查询所有告警静默规则

请求方式:GET

请求地址:/api/v2/silences

默认会返回所有静默规则,包括失效状态的规则

查询指定标签的静默规则

请求方式:GET

请求地址:/api/v2/silences

请求参数:filter:[team=elk]

查询指定ID的静默规则

例如查询id为5d5f1ed3-9033-4a70-92c6-1d93eeaa08db的规则信息

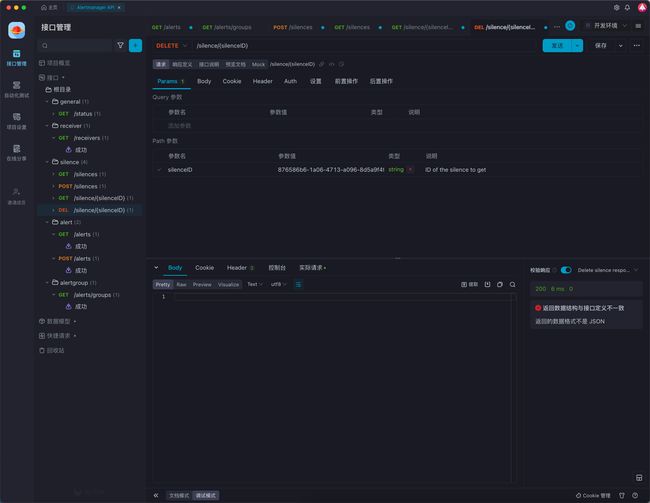

删除告警静默规则

例如删除id为876586b6-1a06-4713-a096-8d5a9f4ffa8a的规则信息

删除静默规则本质是是把状态为active的变为expired状态,并不会真正删除规则。对于已经是expired状态的规则,无法进行删除操作。

查看更多

微信公众号

微信公众号同步更新,欢迎关注微信公众号第一时间获取最近文章。![]()

博客网站

崔亮的博客-专注devops自动化运维,传播优秀it运维技术文章。更多原创运维开发相关文章,欢迎访问https://www.cuiliangblog.cn