Python爬取解放号外包需求案例,利用post参数多页爬取

代码展示:

import requests

import csv

f = open('外包数据.csv',mode='a',encoding='utf-8',newline='')

csv_writer = csv.writer(f)

csv_writer.writerow(['标题','编号','开始时间','结束时间','价格','状态','类型','投标人数','详情页'])

def down_load(page):

for page in range(1,page+1):

url = 'https://www.jfh.com/jfportal/workMarket/getRequestData'

data = {

'putTime': '',

'minPrice': '',

'maxPrice': '',

'isRemoteWork': '',

'orderCondition': '0',

'orderConfig': '1',

'pageNo': str(page),

'searchName': '',

'fitCode': '0',

'workStyleCode':'',

'jfId': '235086452',

'buId': '',

'currentBuid': '',

'jieBaoType': '',

'login': '1',

'city': '',

'orderType': '',

'serviceTypeKey':'',

'webSite': '',

'webSign': '',

'source': '1'

}

headers = {'User-Agent':

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36',

'Referer':

'https://www.jfh.com/jfportal/market/index?m=b01'

}

response = requests.post(url=url,data=data,headers=headers)

json_data = response.json()

for index in json_data['resultList']:

index_url = f'https://www.jfh.com/jfportal/orders/jf{index["orderNo"]}'

title = index['orderName']

orderNo = index['orderNo']

starttime = index['bidValidtime']

endtime = index['formatOrderTime']

price = index['price']

status = index['bidValidTimeOut']

techDirection = index['techDirection']

bookCount = index['bookCount']

csv_writer.writerow([title,orderNo,starttime,endtime,price,status,techDirection,bookCount,index_url])

down_load(100)

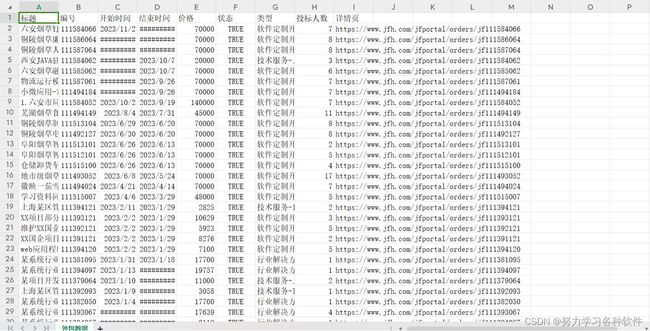

结果展示:

难点注意:观察不同页之间的差距,发现只有参数中pageno发生了变化。亲测有效,没有反爬,换个headers即可。