Distributed Locks, as Seen from Building a Distributed Flash-Sale System


1. Case introduction

Before trying to understand distributed locks, ask yourself: in what scenarios would you actually use one?

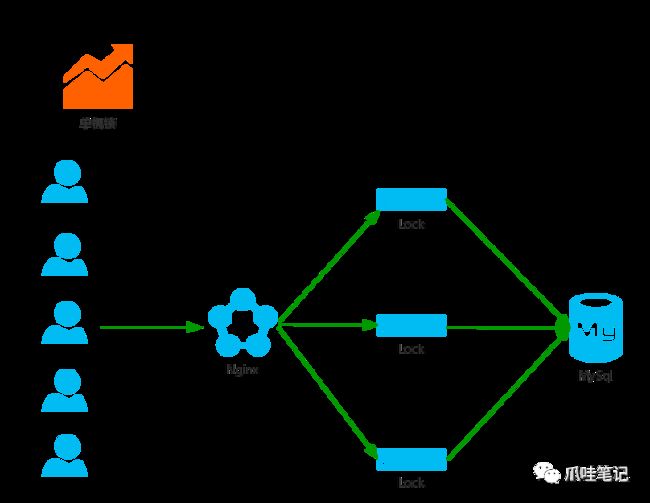

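In a single-machine architecture, the flash-sale example uses ReentrantLock or synchronized to make purchases of the same product mutually exclusive. In a distributed system, however, several machines carry out the same function in parallel. In other words, with multiple processes involved, the locks provided by the JDK only work inside one process, so concurrent access to the shared database can still oversell the product. We therefore need to implement our own distributed lock.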
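To make the overselling risk concrete, the sketch below replays one unlucky interleaving by hand. It is only an illustration: `Inventory` is a hypothetical stand-in for the shared database row, and the two `ReentrantLock` objects stand for the JVM-local locks of two application instances. None of these names come from the flash-sale project.

```java
import java.util.concurrent.locks.ReentrantLock;

public class OversellSketch {
    /** Hypothetical stand-in for the shared database row holding the stock count. */
    static class Inventory {
        int stock;
        Inventory(int stock) { this.stock = stock; }
    }

    /** Replays a fixed interleaving of two JVMs and returns how many orders were accepted. */
    public static int replay(Inventory db) {
        ReentrantLock lockOnJvmA = new ReentrantLock(); // only visible inside JVM A
        ReentrantLock lockOnJvmB = new ReentrantLock(); // only visible inside JVM B
        int accepted = 0;

        // Each JVM takes its own lock; neither lock blocks the other JVM.
        lockOnJvmA.lock();
        lockOnJvmB.lock();
        try {
            int seenByA = db.stock; // A reads the current stock
            int seenByB = db.stock; // B reads the same value before A has written
            if (seenByA > 0) { db.stock = seenByA - 1; accepted++; }
            if (seenByB > 0) { db.stock = seenByB - 1; accepted++; }
        } finally {
            lockOnJvmB.unlock();
            lockOnJvmA.unlock();
        }
        return accepted;
    }

    public static void main(String[] args) {
        Inventory db = new Inventory(1);
        System.out.println("orders accepted: " + replay(db) + ", stock left: " + db.stock);
    }
}
```

With a single item in stock, both instances pass the stock check, so two orders are accepted for one item. A lock shared by both instances would force the second read to happen after the first write.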

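A distributed lock should have the following properties:

- Highly available, high-performance lock acquisition and release

- In a distributed environment, a method or variable can be operated on by only one thread at a time

- A lock-expiry mechanism: if a network interruption or crash prevents the holder from releasing the lock, the lock must still be removed to prevent deadlock

- Blocking behaviour: a caller that fails to get the lock keeps waiting for it

- Non-blocking behaviour: a caller that fails to get the lock gets a failure result back immediately

- Reentrancy: a thread can acquire the same lock more than once. For example, if a thread is running a locked method that calls another method requiring the same lock, the thread can run the called method directly without acquiring the lock again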
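The blocking, non-blocking, and reentrant behaviours in this list have direct single-JVM counterparts in `java.util.concurrent.locks.ReentrantLock`. The sketch below uses that local lock purely as an analogy for what a distributed lock is expected to offer; the class and method names are made up for illustration.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class LockPropertiesSketch {
    /** Acquires the lock twice on the same thread and returns the hold count at that point. */
    public static int reentrantHoldCount() {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();                 // blocking acquire
        try {
            lock.lock();             // the owner re-enters without blocking
            try {
                return lock.getHoldCount();
            } finally {
                lock.unlock();
            }
        } finally {
            lock.unlock();
        }
    }

    /** Returns whether another thread's bounded tryLock succeeds while the caller holds the lock. */
    public static boolean otherThreadCanAcquire() {
        ReentrantLock lock = new ReentrantLock();
        boolean[] result = new boolean[1];
        lock.lock();
        try {
            Thread other = new Thread(() -> {
                try {
                    // Bounded wait: gives up after 50 ms instead of blocking forever
                    result[0] = lock.tryLock(50, TimeUnit.MILLISECONDS);
                    if (result[0]) {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            other.start();
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            lock.unlock();
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println("hold count after re-entering: " + reentrantHoldCount());
        System.out.println("other thread acquired: " + otherThreadCanAcquire());
    }
}
```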

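In the earlier flash-sale example we introduced several ways to implement a distributed lock:

- A database-based distributed lock

- A Redis-based distributed lock

- A ZooKeeper-based distributed lock

The first two are not particularly recommended for distributed production environments: database locks perform too poorly under high concurrency, and Redis has some problems with lock expiry times and cache consistency. Here we focus on how ZooKeeper implements a distributed lock.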

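2. How it works

ZooKeeper is a distributed, open-source coordination service for distributed applications. Internally it keeps a hierarchical namespace that resembles a file-system directory tree, and a name must be unique within its directory.

Figure: the ZooKeeper data model as a file-system directory tree (image from the internet)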

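3. Data model

- PERSISTENT: a persistent node; once created, it does not disappear when the session ends

- EPHEMERAL: an ephemeral node; it is deleted automatically when the client session that created it times out

- EPHEMERAL_SEQUENTIAL: an ephemeral node with an automatically assigned sequence number

- PERSISTENT_SEQUENTIAL: a persistent node with an automatically assigned sequence number; ZooKeeper appends a counter, kept by the parent node, that increases by 1 for each sequential child created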
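The numbering of sequential nodes can be mimicked in memory. The sketch below is not the ZooKeeper client API. It is a hypothetical `SequentialNodeSketch` class that assumes the documented ZooKeeper behaviour: the parent keeps a counter and appends it as a 10-digit, zero-padded suffix. The counter keeps growing even after earlier children are deleted.

```java
import java.util.SortedSet;
import java.util.TreeSet;

public class SequentialNodeSketch {
    private final SortedSet<String> children = new TreeSet<>();
    private int nextSequence = 0; // kept by the parent node; never decreases

    /** Creates a child named prefix + 10-digit sequence number and returns its name. */
    public String createSequential(String prefix) {
        String name = prefix + String.format("%010d", nextSequence++);
        children.add(name);
        return name;
    }

    /** Deletes a child; its sequence number is not reused. */
    public void delete(String name) {
        children.remove(name);
    }

    public SortedSet<String> getChildren() {
        return children;
    }

    public static void main(String[] args) {
        SequentialNodeSketch parent = new SequentialNodeSketch();
        String first = parent.createSequential("lock-");
        parent.createSequential("lock-");
        parent.delete(first);
        parent.createSequential("lock-");
        System.out.println(parent.getChildren());
    }
}
```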

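4. Watchers

A client can register a watcher on a node when it reads the node (for example through exists, getData, or getChildren). When the node's state changes, the watch fires and ZooKeeper sends the client exactly one notification, because a watch can be triggered only once.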
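The one-shot nature of a watch is easy to overlook, so here is a small in-memory model of it. `OneShotWatchSketch` is a hypothetical class written for this illustration and is not part of the ZooKeeper API; it only shows that a watch is consumed by the first change and must be registered again to see later changes.

```java
import java.util.ArrayList;
import java.util.List;

public class OneShotWatchSketch {
    private final List<Runnable> watchers = new ArrayList<>();
    private String data = "";

    /** Reads the data and leaves a watch that fires on the next change only. */
    public String getData(Runnable watcher) {
        watchers.add(watcher);
        return data;
    }

    /** Changes the data and notifies each pending watch exactly once. */
    public void setData(String newData) {
        data = newData;
        List<Runnable> toNotify = new ArrayList<>(watchers);
        watchers.clear(); // watches are consumed; later changes notify nobody
        toNotify.forEach(Runnable::run);
    }

    public static void main(String[] args) {
        OneShotWatchSketch node = new OneShotWatchSketch();
        int[] notifications = {0};
        node.getData(() -> notifications[0]++);
        node.setData("first change");
        node.setData("second change"); // no watch is left at this point
        System.out.println("notifications received: " + notifications[0]);
    }
}
```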

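Given these ZooKeeper features, let us see how they can be used to implement a distributed lock:

- Create a lock directory, lock

- To acquire the lock, thread A creates an ephemeral sequential node under the lock directory

- It fetches all children of the lock directory and looks for a sibling with a smaller sequence number than its own; if there is none, its number is the lowest and it holds the lock

- Thread B creates its own ephemeral sequential node, fetches all siblings, finds that its node is not the lowest, and sets a watcher on the node immediately preceding its own

- When thread A finishes, it deletes its node; thread B receives the change event, finds that its node is now the lowest, and holds the lock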
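The decision each thread makes in the steps above can be sketched as a pure function over the list of children. This is a simplified illustration, assuming all children share one prefix so that sorting the names sorts the sequence numbers; `LockDecisionSketch` and `nodeToWatch` are hypothetical names, not library code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Optional;

public class LockDecisionSketch {
    /**
     * Returns empty if ourNode is the lowest child (we hold the lock),
     * otherwise the name of the child immediately before ours, which we should watch.
     */
    public static Optional<String> nodeToWatch(List<String> children, String ourNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // zero-padded suffixes sort correctly as strings
        int ourIndex = sorted.indexOf(ourNode);
        if (ourIndex < 0) {
            throw new IllegalStateException("our node is missing: " + ourNode);
        }
        return ourIndex == 0 ? Optional.empty() : Optional.of(sorted.get(ourIndex - 1));
    }

    public static void main(String[] args) {
        List<String> children = Arrays.asList("lock-0000000002", "lock-0000000000", "lock-0000000001");
        System.out.println("thread A watches: " + nodeToWatch(children, "lock-0000000000"));
        System.out.println("thread B watches: " + nodeToWatch(children, "lock-0000000001"));
    }
}
```

Watching only the immediate predecessor, rather than the whole directory, means that a release wakes up a single waiter instead of all of them.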

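5. Code analysis

Although ZooKeeper already wraps complex, error-prone key services and offers users a simple interface on top of an efficient and stable system, writing a distributed lock by hand is still fairly hard for an ordinary developer. In the flash-sale example we therefore use Curator, Apache's open-source client library, to implement the ZooKeeper distributed lock.

We use the version shown below (the latest release at the time of writing is 4.0.1). The Maven dependency is: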

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-recipes</artifactId>

<version>2.12.0</version>

</dependency>

First, let us look at the methods of the InterProcessLock interface:

package org.apache.curator.framework.recipes.locks;

import java.util.concurrent.TimeUnit;

public interface InterProcessLock

{

/**

* Acquire the mutex - blocking until it's available. Each call to acquire must be balanced by a call

* to {@link #release()}

*

* @throws Exception ZK errors, connection interruptions

*/

public void acquire() throws Exception;

/**

* Acquire the mutex - blocks until it's available or the given time expires. Each call to acquire that returns true must be balanced by a call

* to {@link #release()}

*

* @param time time to wait

* @param unit time unit

* @return true if the mutex was acquired, false if not

* @throws Exception ZK errors, connection interruptions

*/

public boolean acquire(long time, TimeUnit unit) throws Exception;

/**

* Perform one release of the mutex.

*

* @throws Exception ZK errors, interruptions, current thread does not own the lock

*/

public void release() throws Exception;

/**

* Returns true if the mutex is acquired by a thread in this JVM

*

* @return true/false

*/

boolean isAcquiredInThisProcess();

}
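The Javadoc above insists that every successful acquire be balanced by a release. One way to make that hard to forget is a small try/finally helper, sketched below. To keep the example runnable without ZooKeeper, it is written against `TimedLock`, a hypothetical local interface that merely mirrors two of the InterProcessLock methods quoted above, and `main` backs it with a local ReentrantLock. None of this is Curator code.

```java
import java.util.Optional;
import java.util.concurrent.Callable;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class LockTemplateSketch {
    /** Hypothetical stand-in mirroring acquire(time, unit) and release() of InterProcessLock. */
    public interface TimedLock {
        boolean acquire(long time, TimeUnit unit) throws Exception;
        void release() throws Exception;
    }

    /** Runs the task under the lock; returns empty, without running it, if the lock was not acquired in time. */
    public static <T> Optional<T> callWithLock(TimedLock lock, long time, TimeUnit unit, Callable<T> task)
            throws Exception {
        if (!lock.acquire(time, unit)) {
            return Optional.empty();
        }
        try {
            return Optional.ofNullable(task.call());
        } finally {
            lock.release(); // balances the successful acquire even if the task throws
        }
    }

    public static void main(String[] args) throws Exception {
        ReentrantLock local = new ReentrantLock(); // local stand-in for a distributed lock
        TimedLock lock = new TimedLock() {
            @Override
            public boolean acquire(long time, TimeUnit unit) throws InterruptedException {
                return local.tryLock(time, unit);
            }

            @Override
            public void release() {
                local.unlock();
            }
        };
        System.out.println(callWithLock(lock, 1, TimeUnit.SECONDS, () -> "order placed"));
    }
}
```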

The InterProcessLock interface has four implementations, structured as follows:

- InterProcessMultiLock: a container that manages multiple locks as a single object. Calling acquire acquires all of the locks at once, and when the thread releases after its business logic completes, all of them are released together

/**

* A container that manages multiple locks as a single entity. When {@link #acquire()} is called,

* all the locks are acquired. If that fails, any paths that were acquired are released. Similarly, when

* {@link #release()} is called, all locks are released (failures are ignored).

*/

public class InterProcessMultiLock implements InterProcessLock

{

private final List<InterProcessLock> locks;

/**

* Creates a multi lock of {@link InterProcessMutex}s

*

* @param client the client

* @param paths list of paths to manage in the order that they are to be locked

*/

public InterProcessMultiLock(CuratorFramework client, List<String> paths)

{

// paths get checked in each individual InterProcessMutex, so trust them here

this(makeLocks(client, paths));

}

- InterProcessMutex: a reentrant lock

/**

* A re-entrant mutex that works across JVMs. Uses Zookeeper to hold the lock. All processes in all JVMs that

* use the same lock path will achieve an inter-process critical section. Further, this mutex is

* "fair" - each user will get the mutex in the order requested (from ZK's point of view)

*/

public class InterProcessMutex implements InterProcessLock, Revocable<InterProcessMutex>

{

private final LockInternals internals;

private final String basePath;

private final ConcurrentMap<Thread, LockData> threadData = Maps.newConcurrentMap();

private static class LockData

{

final Thread owningThread;

final String lockPath;

final AtomicInteger lockCount = new AtomicInteger(1);

private LockData(Thread owningThread, String lockPath)

{

this.owningThread = owningThread;

this.lockPath = lockPath;

}

}

private static final String LOCK_NAME = "lock-";

- InterProcessSemaphoreMutex: a non-reentrant lock

/**

* A NON re-entrant mutex that works across JVMs. Uses Zookeeper to hold the lock. All processes in all JVMs that

* use the same lock path will achieve an inter-process critical section.

*/

public class InterProcessSemaphoreMutex implements InterProcessLock

{

private final InterProcessSemaphoreV2 semaphore;

private volatile Lease lease;

/**

* @param client the client

* @param path path for the lock

*/

public InterProcessSemaphoreMutex(CuratorFramework client, String path)

{

this.semaphore = new InterProcessSemaphoreV2(client, path, 1);

}

- InternalInterProcessMutex: a static inner class of the ZooKeeper read-write lock (InterProcessReadWriteLock) that serves as its read and write locks

public class InterProcessReadWriteLock

{

private final InterProcessMutex readMutex;

private final InterProcessMutex writeMutex;

private static class InternalInterProcessMutex extends InterProcessMutex

{

private final String lockName;

private final byte[] lockData;

InternalInterProcessMutex(CuratorFramework client, String path, String lockName, byte[] lockData, int maxLeases, LockInternalsDriver driver)

{

super(client, path, lockName, maxLeases, driver);

this.lockName = lockName;

this.lockData = lockData;

}

@Override

public Collection<String> getParticipantNodes() throws Exception

{

Collection<String> nodes = super.getParticipantNodes();

Iterable<String> filtered = Iterables.filter

(

nodes,

new Predicate<String>()

{

@Override

public boolean apply(String node)

{

return node.contains(lockName);

}

}

);

return ImmutableList.copyOf(filtered);

}

@Override

protected byte[] getLockNodeBytes()

{

return lockData;

}

}

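Taking the reentrant InterProcessMutex as the example, we now walk through its source code to see how ZooKeeper works as a distributed lock, covering both acquisition and release.

1) Acquiring the lock: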

/**

* Acquire the mutex - blocking until it's available. Note: the same thread

* can call acquire re-entrantly. Each call to acquire must be balanced by a call

* to {@link #release()}

*

* @throws Exception ZK errors, connection interruptions

*/

@Override

public void acquire() throws Exception

{

if ( !internalLock(-1, null) )

{

throw new IOException("Lost connection while trying to acquire lock: " + basePath);

}

}

/**

* Acquire the mutex - blocks until it's available or the given time expires. Note: the same thread

* can call acquire re-entrantly. Each call to acquire that returns true must be balanced by a call

* to {@link #release()}

*

* @param time time to wait

* @param unit time unit

* @return true if the mutex was acquired, false if not

* @throws Exception ZK errors, connection interruptions

*/

@Override

public boolean acquire(long time, TimeUnit unit) throws Exception

{

return internalLock(time, unit);

}

The actual implementation:

private boolean internalLock(long time, TimeUnit unit) throws Exception

{

/*

Note on concurrency: a given lockData instance

can be only acted on by a single thread so locking isn't necessary

*/

Thread currentThread = Thread.currentThread();

LockData lockData = threadData.get(currentThread);

if ( lockData != null )

{

// re-entering

lockData.lockCount.incrementAndGet();

return true;

}

// Try to acquire the ZooKeeper lock

String lockPath = internals.attemptLock(time, unit, getLockNodeBytes());

if ( lockPath != null )

{

LockData newLockData = new LockData(currentThread, lockPath);

threadData.put(currentThread, newLockData);

return true;

}

// Acquisition timed out or ZooKeeper communication failed: report failure

return false;

}

How the lock is acquired in ZooKeeper:

String attemptLock(long time, TimeUnit unit, byte[] lockNodeBytes) throws Exception

{

final long startMillis = System.currentTimeMillis();

final Long millisToWait = (unit != null) ? unit.toMillis(time) : null;

// Data stored in the child node

final byte[] localLockNodeBytes = (revocable.get() != null) ? new byte[0] : lockNodeBytes;

// Number of retries so far

int retryCount = 0;

String ourPath = null;

boolean hasTheLock = false;

boolean isDone = false;

// Retry loop: keep trying to acquire the lock

while ( !isDone )

{

isDone = true;

try

{

// Create an ephemeral sequential child under the lock node, e.g. _c_008c1b07-d577-4e5f-8699-8f0f98a013b4-lock-000000001

ourPath = driver.createsTheLock(client, path, localLockNodeBytes);

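// If our child has the lowest sequence number we hold the lock and return; otherwise block until notified that the preceding child was deleted (its owner called release)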

hasTheLock = internalLockLoop(startMillis, millisToWait, ourPath);

}

catch ( KeeperException.NoNodeException e )

{

// gets thrown by StandardLockInternalsDriver when it can't find the lock node

// this can happen when the session expires, etc. So, if the retry allows, just try it all again

if ( client.getZookeeperClient().getRetryPolicy().allowRetry(retryCount++, System.currentTimeMillis() - startMillis, RetryLoop.getDefaultRetrySleeper()) )

{

isDone = false;

}

else

{

throw e;

}

}

}

if ( hasTheLock )

{

return ourPath;

}

return null;

}
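The retry structure of attemptLock can be separated from the ZooKeeper details. The sketch below is a simplified stand-in: it retries a task when a chosen exception type is thrown, up to a fixed number of retries. `RetryLoopSketch` and `retryOn` are hypothetical names, and unlike a real retry policy this version does not sleep between attempts or take elapsed time into account.

```java
import java.util.concurrent.Callable;

public class RetryLoopSketch {
    /** Runs the attempt, retrying while it throws the retryable exception and retries remain. */
    public static <T> T retryOn(Class<? extends Exception> retryable, int maxRetries, Callable<T> attempt)
            throws Exception {
        int retryCount = 0;
        while (true) {
            try {
                return attempt.call();
            } catch (Exception e) {
                boolean mayRetry = retryable.isInstance(e) && retryCount < maxRetries;
                if (!mayRetry) {
                    throw e; // not retryable, or the retry budget is used up
                }
                retryCount++; // start the whole attempt again
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        String result = retryOn(IllegalStateException.class, 3, () -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("lock node vanished");
            }
            return "locked";
        });
        System.out.println(result + " after " + calls[0] + " attempts");
    }
}
```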

Checking whether our node is the lowest one:

private boolean internalLockLoop(long startMillis, Long millisToWait, String ourPath) throws Exception

{

boolean haveTheLock = false;

boolean doDelete = false;

try

{

if ( revocable.get() != null )

{

client.getData().usingWatcher(revocableWatcher).forPath(ourPath);

}

// Loop until the lock is acquired

while ( (client.getState() == CuratorFrameworkState.STARTED) && !haveTheLock )

{

// Fetch all children, sorted

List<String> children = getSortedChildren();

// Check whether our child is the lowest one

String sequenceNodeName = ourPath.substring(basePath.length() + 1); // +1 to include the slash

PredicateResults predicateResults = driver.getsTheLock(client, children, sequenceNodeName, maxLeases);

if ( predicateResults.getsTheLock() )

{ // our node is the lowest child: lock acquired

haveTheLock = true;

}

else

{ // find the preceding node so that we can watch it

String previousSequencePath = basePath + "/" + predicateResults.getPathToWatch();

synchronized(this)

{

try

{

// use getData() instead of exists() to avoid leaving unneeded watchers which is a type of resource leak

client.getData().usingWatcher(watcher).forPath(previousSequencePath);

if ( millisToWait != null )

{

millisToWait -= (System.currentTimeMillis() - startMillis);

startMillis = System.currentTimeMillis();

if ( millisToWait <= 0 )

{

doDelete = true; // timed out - delete our node

break;

}

wait(millisToWait);

}

else

{

wait();

}

}

catch ( KeeperException.NoNodeException e )

{

// it has been deleted (i.e. lock released). Try to acquire again

// If the preceding child is already gone, getData() throws NoNodeException; just go around the loop and try again

}

}

}

}

}

catch ( Exception e )

{

ThreadUtils.checkInterrupted(e);

doDelete = true;

throw e;

}

finally

{

if ( doDelete )

{

deleteOurPath(ourPath);

}

}

return haveTheLock;

}
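The timeout handling in internalLockLoop (subtract the elapsed time from the remaining budget after every wake-up, and give up once the budget reaches zero) is a general wait/notify pattern. Below is a minimal sketch of just that pattern, with a boolean flag standing in for "the preceding node was deleted". `TimedWaitSketch` and its methods are hypothetical names for illustration.

```java
public class TimedWaitSketch {
    private boolean released = false;

    /** Stand-in for the watcher callback: marks the predecessor as gone and wakes the waiters. */
    public synchronized void release() {
        released = true;
        notifyAll();
    }

    /** Waits until release() has been called or the time budget is spent; returns true on release. */
    public synchronized boolean awaitRelease(long timeoutMillis) {
        long start = System.currentTimeMillis();
        long remaining = timeoutMillis;
        try {
            while (!released) {
                if (remaining <= 0) {
                    return false; // timed out; note that wait(0) would block forever
                }
                wait(remaining);
                long now = System.currentTimeMillis();
                remaining -= (now - start); // charge the time just spent waiting
                start = now;
            }
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        TimedWaitSketch gate = new TimedWaitSketch();
        System.out.println("before release: " + gate.awaitRelease(50));
        gate.release();
        System.out.println("after release: " + gate.awaitRelease(50));
    }
}
```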

2) Releasing the lock:

/**

* Perform one release of the mutex if the calling thread is the same thread that acquired it. If the

* thread had made multiple calls to acquire, the mutex will still be held when this method returns.

*

* @throws Exception ZK errors, interruptions, current thread does not own the lock

*/

@Override

public void release() throws Exception

{

/*

Note on concurrency: a given lockData instance

can be only acted on by a single thread so locking isn't necessary

*/

Thread currentThread = Thread.currentThread();

LockData lockData = threadData.get(currentThread);

if ( lockData == null )

{

throw new IllegalMonitorStateException("You do not own the lock: " + basePath);

}

// Decrement the re-entrancy count

int newLockCount = lockData.lockCount.decrementAndGet();

if ( newLockCount > 0 )

{

return;

}

if ( newLockCount < 0 )

{

throw new IllegalMonitorStateException("Lock count has gone negative for lock: " + basePath);

}

try

{

internals.releaseLock(lockData.lockPath);

}

finally

{

// Remove this thread's ZooKeeper lock data

threadData.remove(currentThread);

}

}
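Taken together, internalLock and release implement reentrancy with a per-thread counter: only the first acquire and the last release touch ZooKeeper. The sketch below isolates that bookkeeping. It is a simplified model rather than Curator code, and the two counters `remoteAcquires` and `remoteReleases` are hypothetical stand-ins for creating and deleting the lock node.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

public class ReentrantBookkeepingSketch {
    private final ConcurrentMap<Thread, AtomicInteger> holdCounts = new ConcurrentHashMap<>();
    private final AtomicInteger remoteAcquires = new AtomicInteger(); // stand-in for node creations
    private final AtomicInteger remoteReleases = new AtomicInteger(); // stand-in for node deletions

    public void acquire() {
        Thread current = Thread.currentThread();
        AtomicInteger count = holdCounts.get(current);
        if (count != null) {
            count.incrementAndGet(); // re-entering: no remote call needed
            return;
        }
        remoteAcquires.incrementAndGet();
        holdCounts.put(current, new AtomicInteger(1));
    }

    public void release() {
        Thread current = Thread.currentThread();
        AtomicInteger count = holdCounts.get(current);
        if (count == null) {
            throw new IllegalMonitorStateException("You do not own the lock");
        }
        if (count.decrementAndGet() > 0) {
            return; // still held by an outer acquire
        }
        remoteReleases.incrementAndGet();
        holdCounts.remove(current);
    }

    public int remoteAcquires() { return remoteAcquires.get(); }

    public int remoteReleases() { return remoteReleases.get(); }

    public static void main(String[] args) {
        ReentrantBookkeepingSketch lock = new ReentrantBookkeepingSketch();
        lock.acquire();
        lock.acquire();
        lock.release();
        lock.release();
        System.out.println("remote acquires: " + lock.remoteAcquires()
                + ", remote releases: " + lock.remoteReleases());
    }
}
```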

6. Test case

To better understand the principle and the lock-acquisition flow analysed above, we implement a simple demo:

package com.netease.it.access.web.util;

import org.apache.curator.RetryPolicy;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.framework.recipes.locks.InterProcessLock;

import org.apache.curator.framework.recipes.locks.InterProcessMutex;

import org.apache.curator.retry.ExponentialBackoffRetry;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.TimeUnit;

/**

* ZooKeeper distributed lock based on Curator

* Created by dujiayong on 2020/3/8.

*/

public class CuratorUtil {

private static final String ZK_LOCK_DIR = "/curator/lock";

public static void main(String[] args) {

// 1. Define the retry policy: 1 s initial sleep, at most 3 retries

RetryPolicy retryPolicy = new ExponentialBackoffRetry(1000, 3);

// 2. Create the client through the factory

CuratorFramework client = CuratorFrameworkFactory.newClient("localhost:2181", retryPolicy);

// 3. Open the connection

client.start();

// 4. The distributed lock

final InterProcessLock lock = new InterProcessMutex(client, ZK_LOCK_DIR);

ExecutorService executorService = Executors.newFixedThreadPool(5);

for (int i = 0; i < 5; i++) {

executorService.submit(new Runnable() {

@Override

public void run() {

boolean acquire = false;

try {

acquire = lock.acquire(5000, TimeUnit.MILLISECONDS);

if (acquire) {

System.out.println("Thread -> " + Thread.currentThread().getName() + " acquired the lock");

// Simulate 4 s of business logic while holding the lock

Thread.sleep(4000);

} else {

System.out.println("Thread -> " + Thread.currentThread().getName() + " failed to acquire the lock");

}

} catch (Exception e) {

e.printStackTrace();

} finally {

if (acquire) {

try {

lock.release();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

});

}

}

}

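Here we start five threads. Each waits at most 5 seconds to acquire the lock, and the method sleeps for 4 seconds to simulate real business processing. Run the main method and watch the nodes under /curator/lock in ZooInspector, as shown in the figure below:

Yes, the 4-second processing time is there precisely so that we can observe how many sequential nodes get created. As described earlier, every thread creates its own node, and the nodes are ordered.

On the console, only two threads acquire the lock; the other three time out, fail to acquire it, and have their nodes deleted automatically. Once the threads have finished, refreshing /curator/lock shows that the five child nodes created earlier no longer exist.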
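The "two winners, three timeouts" result follows from simple arithmetic on the two durations. The sketch below works it out under idealised assumptions that are not in the original demo: all threads queue at time zero, holders run back to back, and ZooKeeper latency is ignored. `DemoTimelineSketch` and `winners` are hypothetical names.

```java
public class DemoTimelineSketch {
    /** Counts how many queued threads obtain the lock before their wait budget runs out. */
    public static int winners(int threads, long waitMillis, long holdMillis) {
        int winners = 0;
        for (int position = 0; position < threads; position++) {
            // The thread at this queue position gets the lock once all earlier holders have finished
            long lockFreeAt = position * holdMillis;
            if (lockFreeAt < waitMillis) {
                winners++;
            }
        }
        return winners;
    }

    public static void main(String[] args) {
        // 5 threads, 5 s maximum wait, 4 s of simulated business logic per holder
        System.out.println("threads that get the lock: " + winners(5, 5000, 4000));
    }
}
```

The first thread gets the lock immediately and the second after 4 seconds, which is inside its 5-second budget. The third would have to wait 8 seconds, so it and the remaining threads time out.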

Source: https://www.cnblogs.com/williamjie/p/9406031.html
