Python Web Scraping from Scratch: urllib

Contents

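1. Basic usage of urllib

2. urllib: one type and six methods

3. Downloading with urllib

4. Customizing the urllib request object

4. urllib GET requests: the quote method

5. urllib GET requests: the urlencode method

6. urllib POST requests

7. urllib Ajax GET requests

Fetch the first page of Douban movie data and save it locally

Fetch the first ten pages of Douban movie data and save them locally

8. urllib Ajax POST requests

9. urllib exceptions

10. urllib cookies: QQ Zone login

10. Basic use of urllib Handlers

11. urllib proxies

12. urllib proxy pool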

Note: make sure you have the correct request URL. Many of my run errors were caused by using the wrong URL.

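1. Basic usage of urllib

# Use urllib to fetch the source code of the Baidu home page
import urllib.request

# (1) Define a URL, i.e. the website to visit
url = "http://www.baidu.com"

# (2) Simulate a browser sending a request to the server
response = urllib.request.urlopen(url)

# (3) Get the page source from the response
# read() returns the body as bytes (binary data)
# To turn the bytes into a string, call decode() with the encoding
content = response.read().decode('utf-8')

# (4) Print the data
print(content)

2. urllib: one type and six methods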

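# Use urllib to fetch the source code of the Baidu home page
import urllib.request

# Define a URL, i.e. the website to visit
url = "http://www.baidu.com"

# Simulate a browser sending a request to the server
response = urllib.request.urlopen(url)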

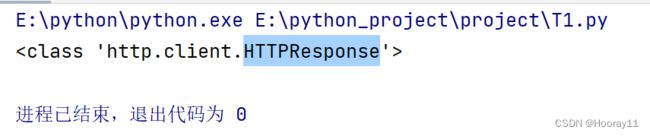

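# One type: response is an http.client.HTTPResponse object
# print(type(response))

# Six methods
# read(), readline() and readlines() all consume the same body stream,
# so only the first one you call returns data: try them one at a time

# read(): read the whole body as bytes (read(n) reads at most n bytes)
content = response.read()
print(content)

# readline(): read a single line
# content = response.readline()
# print(content)

# readlines(): read all lines into a list
# content = response.readlines()
# print(content)

# Return the status code
print(response.getcode())

# Return the URL
print(response.geturl())

# Return the response headers
print(response.getheaders())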
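Because read(), readline() and readlines() all pull from the same body stream, the order of the calls matters. The sketch below shows this offline by opening a data: URL (RFC 2397), which urllib.request answers locally without any network access. The URL and variable names are illustrative only. The sketch also reads the charset from the Content-Type header with headers.get_content_charset() instead of hard-coding 'utf-8'.

```python
import urllib.request

# Illustrative data: URL; urlopen answers it locally, no network request is made.
url = "data:text/plain;charset=utf-8,line1%0Aline2%0Aline3"

with urllib.request.urlopen(url) as response:
    # Take the encoding from the Content-Type header, falling back to UTF-8
    charset = response.headers.get_content_charset() or "utf-8"
    first = response.readline()   # the first line, including its b"\n"
    rest = response.readlines()   # every remaining line
    leftover = response.read()    # b"": the body has already been consumed

lines = [first.decode(charset)] + [line.decode(charset) for line in rest]
print(lines)     # ['line1\n', 'line2\n', 'line3']
print(leftover)  # b''
```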

3. Downloading with urllib
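The example in this section uses urlretrieve(). The Python documentation lists it among the legacy interfaces ported from Python 2 that might become deprecated in the future. Below is a minimal alternative sketch, assuming you only need the raw bytes saved to disk. It opens the URL with urlopen() and streams the body into a file opened in binary mode, so it works the same way for pages, images and videos. download() is a hypothetical helper name.

```python
import shutil
import urllib.request


def download(url, filename):
    """Hypothetical helper: save the body of url to filename, chunk by chunk."""
    # "wb": write raw bytes, so binary files such as images are not corrupted
    with urllib.request.urlopen(url) as response, open(filename, "wb") as f:
        shutil.copyfileobj(response, f)  # copies in fixed-size chunks, not all at once
    return filename


# Usage: download('http://www.baidu.com', 'baidu.html')
```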

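import urllib.request

# Web pages, images and videos are all downloaded the same way
url_page = 'http://www.baidu.com'

# The first argument is the URL, the second is the local filename
urllib.request.urlretrieve(url_page, 'baidu.html')

4. Customizing the urllib request object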

Reference: What parts make up a URL, and what does a domain name include? (CSDN blog, crary,记忆) https://blog.csdn.net/qq_44327851/article/details/133175861

import urllib.request

url = 'http://www.baidu.com'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
}

# Customize the request object
# Note: Request's parameters are ordered (url, data, headers, ...). Because data sits
# between url and headers, they cannot be passed positionally: use keyword arguments
request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)
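To see why a custom User-Agent matters, compare it with what urllib sends by default. The sketch below runs offline and sends nothing over the network. The default opener identifies itself as Python-urllib/X.Y, which is easy for a site to spot, while a Request built with headers= carries the browser string. Request stores header names with str.capitalize(), so 'User-Agent' is looked up as 'User-agent'. The shortened UA string is a placeholder.

```python
import urllib.request

# Placeholder browser User-Agent (shortened for the example)
headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"}

# headers is the third parameter of Request(url, data=None, headers={}, ...),
# so it is passed by keyword
request = urllib.request.Request(url="http://www.baidu.com", headers=headers)

# Request normalizes header names with str.capitalize()
print(request.get_header("User-agent"))  # Mozilla/5.0 (Windows NT 10.0; Win64; x64)
print(request.get_method())              # GET (no data was given)

# Headers the default opener adds to HTTP requests that do not set them
print(urllib.request.build_opener().addheaders)  # e.g. [('User-agent', 'Python-urllib/3.11')]
```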

4. urllib GET requests: the quote method

import urllib.request
import urllib.parse

url = 'https://www.baidu.com/s?wd='

# Customizing the request object (here, with a browser User-Agent) is one way to get past anti-scraping checks
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
}

# Percent-encode 周杰伦 (its UTF-8 bytes) so it can be placed in the URL
name = urllib.parse.quote('周杰伦')

url += name

# Customize the request object
request = urllib.request.Request(url=url, headers=headers)

# Simulate a browser sending a request to the server
response = urllib.request.urlopen(request)

# Get the response content
content = response.read().decode('utf-8')

print(content)

5. urllib GET requests: the urlencode method

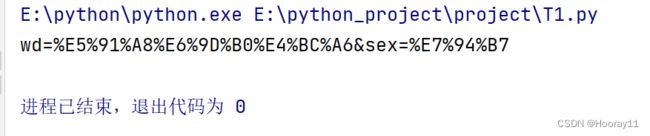

When to use urlencode: when the URL has several parameters.
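quote() encodes a single value. urlencode() turns a whole dict into key=value pairs joined by &, encoding each key and value. The small offline comparison below uses the same search term as the examples in this part of the article. Both functions produce the percent-encoded UTF-8 bytes of 周杰伦, and unquote() reverses the encoding. One difference is worth knowing: urlencode() uses quote_plus() by default, so spaces become "+" instead of "%20".

```python
import urllib.parse

# quote(): encode one value (its UTF-8 bytes) for use inside a URL
name = urllib.parse.quote("周杰伦")
print(name)  # %E5%91%A8%E6%9D%B0%E4%BC%A6

# urlencode(): encode several parameters at once and join them with "&"
query = urllib.parse.urlencode({"wd": "周杰伦", "sex": "男"})
url = "https://www.baidu.com/s?" + query
print(url)

# unquote() reverses the percent-encoding
print(urllib.parse.unquote(name))  # 周杰伦

# Spaces: urlencode() (quote_plus) gives "+", quote() gives "%20"
print(urllib.parse.urlencode({"q": "a b"}), urllib.parse.quote("a b"))  # q=a+b a%20b
```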

import urllib.parse

data = {
    'wd': '周杰伦',
    'sex': '男'
}

a = urllib.parse.urlencode(data)

print(a)

6. urllib POST requests

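1. The parameters of a POST request must be encoded: urlencode them, then encode the result to bytes.

data = urllib.parse.urlencode(data).encode('utf-8')

2. The parameters of a POST request are not appended to the URL. Instead, they are passed as the data argument when customizing the request object.

request = urllib.request.Request(url=url, data=data, headers=headers)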
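The two rules above can be checked without sending anything. Request switches to POST as soon as data is given, and the parameters then live in the body rather than in the URL. The sketch also shows that a str body is rejected. It reuses the 'kw' parameter from the example below. The example.com and 127.0.0.1 URLs are placeholders that are never contacted, because urllib raises TypeError while it is still preparing the request.

```python
import urllib.parse
import urllib.request

params = {"kw": "spider"}

# GET: the encoded parameters are appended to the URL
get_request = urllib.request.Request("http://example.com/?" + urllib.parse.urlencode(params))

# POST: urlencode, then encode to bytes, then pass as data=
body = urllib.parse.urlencode(params).encode("utf-8")
post_request = urllib.request.Request("http://example.com/", data=body)

print(get_request.get_method(), get_request.full_url)  # GET http://example.com/?kw=spider
print(post_request.get_method(), post_request.data)    # POST b'kw=spider'

# A str body is rejected before any connection is attempted
str_body_rejected = False
try:
    urllib.request.urlopen(urllib.request.Request("http://127.0.0.1/", data="kw=spider"))
except TypeError:
    str_body_rejected = True
print(str_body_rejected)  # True
```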

import urllib.request

import urllib.parse

import json

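# The 'kw' field belongs to the suggestion endpoint (/sug), which answers with JSON;
# posting it to the home page only returns the HTML page
url = 'https://fanyi.baidu.com/sug'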

data = {

'kw': 'spider'

}

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

data = urllib.parse.urlencode(data).encode('utf-8')

request = urllib.request.Request(url=url,data=data,headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

# obj = json.loads(content)

#

# print(obj)

import urllib.request

import urllib.parse

import json

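# These form fields (query, sign, token, ...) belong to the translation API request
# (v2transapi) shown in the browser's Network panel, not to the home page
url = 'https://fanyi.baidu.com/v2transapi?from=en&to=zh'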

data = {

'from': 'en',

'to': 'zh',

'query': 'spider',

'transtype': 'realtime',

'simple_means_flag': '3',

'sign': '63766.268839',

'token': '57abc218470ecde5f243716ff139d792',

'domain': 'common',

'ts': '1699633768731'

}

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82',

'Cookie':'BIDUPSID=27C677FC03842E7B4593C0FC23E63309; PSTM=1633330321; BAIDUID=DA5564F1160BAB4A47C7DA6772F3B9EA:FG=1; BAIDUID_BFESS=DA5564F1160BAB4A47C7DA6772F3B9EA:FG=1; ZFY=WMyGDJ9xOPi2:AJjfiiFFCIrg8JrCq3v03cIXPlzYGZ8:C; __bid_n=1838403fb79a2548194207; jsdk-uuid=d85ccdd5-f917-4d66-8cc6-bd351168ebe5; RT="z=1&dm=baidu.com&si=a3626b33-feb3-485b-b048-c642195c3c57&ss=lor8jods&sl=2&tt=7r3&bcn=https%3A%2F%2Ffclog.baidu.com%2Flog%2Fweirwood%3Ftype%3Dperf&ld=426s"; H_PS_PSSID=39633_39670; APPGUIDE_10_6_7=1; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1699633696; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1699633738; ab_sr=1.0.1_MTMwOTFkY2FmZDZkYmIwMTEwY2JkYTg0YWZiYzQ0Y2E4N2YzMDI4ZjlhYzU0Zjg1ZTg0YzY3MTA1ODFmY2U4OWU0MWJhM2E1NmE5YTQ1NDMzOTlhMzI4N2Y5MDFmNjVkOWRkMjRkYWQ0ZDcxOTEyZjVmZDdmOTc0ZDllNjFhNTBkOTk3ZDJlMTc5MWEwM2VlNjg4ZjRmYjI2OWZiODkzNQ=='

}

data = urllib.parse.urlencode(data).encode('utf-8')

request = urllib.request.Request(url=url,data=data,headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

# obj = json.loads(content)

#

# print(obj)
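The commented-out json.loads lines point at the next step: once the request goes to an endpoint that answers with JSON, the body can be turned into Python objects. Below is a minimal sketch of a reusable helper; fetch_json is a hypothetical name. It is exercised with a data: URL carrying a made-up JSON payload, so no request reaches Baidu and nothing is assumed about the shape of Baidu's real response.

```python
import json
import urllib.parse
import urllib.request


def fetch_json(request):
    """Hypothetical helper: open a URL or Request and parse the body as JSON."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Made-up payload wrapped in a data: URL so the example runs offline
payload = json.dumps({"word": "spider", "results": ["蜘蛛"]})
url = "data:application/json," + urllib.parse.quote(payload)

obj = fetch_json(url)
print(obj["results"])  # ['蜘蛛']
```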

7. urllib Ajax GET requests

Fetch the first page of Douban movie data and save it locally

# GET request
# Fetch the first page of Douban movie data and save it locally
import urllib.request

url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=0&limit=20'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
}

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

# f = open('douban.json', 'w', encoding='utf-8')
# f.write(content)

with open('douban1.json', 'w', encoding='utf-8') as f:
    f.write(content)

Fetch the first ten pages of Douban movie data and save them locally

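I wrote this on my own after finishing the course, and it ran successfully on the first try. My approach is to start with the main block and get the basic framework in place first. For each function, I first write out its skeleton along with the fixed format and steps, then fill in the details as needed.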

# Download the movies on the first ten pages of the Douban chart
# https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&start=40&limit=20
# start = (page - 1) * 20

import urllib.request
import urllib.parse


# Customize the request object
def create_request(page):
    # base_url ends with '&' so the encoded start/limit parameters are appended correctly
    base_url = 'https://movie.douban.com/j/chart/top_list?type=5&interval_id=100%3A90&action=&'
    data = {
        # Offset of the first movie on this page: page 1 -> 0, page 2 -> 20, ...
        'start': (page - 1) * 20,
        'limit': '20'
    }
    data = urllib.parse.urlencode(data)
    url = base_url + data
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
    }
    request = urllib.request.Request(url=url, headers=headers)
    return request


# Get the response data
def get_response(request):
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    return content


# Download the data
def down_load(page, content):
    with open('douban_' + str(page) + '.json', 'w', encoding='utf-8') as f:
        f.write(content)


if __name__ == '__main__':
    start_page = int(input("Enter the start page: "))
    end_page = int(input("Enter the end page: "))
    for page in range(start_page, end_page + 1):
        request = create_request(page)
        content = get_response(request)
        down_load(page, content)
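The main loop sends the requests back to back and stops at the first failure. Below is a sketch of a more cautious loop that pauses between pages and keeps going when one page fails. crawl_pages is a hypothetical helper that takes the fetch and save steps as functions, so create_request, get_response and down_load from above can be plugged in. The one-second default delay is an arbitrary choice.

```python
import time
import urllib.error


def crawl_pages(start_page, end_page, fetch, save, delay=1.0):
    """Hypothetical helper: fetch and save pages start_page..end_page (inclusive).

    fetch(page) returns the page content; save(page, content) stores it.
    Returns a list of (page, reason) pairs for the pages that failed.
    """
    failed = []
    for page in range(start_page, end_page + 1):
        try:
            save(page, fetch(page))
        except urllib.error.URLError as e:  # also catches HTTPError, its subclass
            failed.append((page, str(e.reason)))
        if page < end_page:
            time.sleep(delay)  # pause between requests to go easy on the server
    return failed


# Offline demo with stand-in functions: page 2 fails, the others are "saved"
saved = {}


def fake_fetch(page):
    if page == 2:
        raise urllib.error.URLError("simulated failure")
    return f"content of page {page}"


failed = crawl_pages(1, 3, fake_fetch, saved.__setitem__, delay=0)
print(failed)  # [(2, 'simulated failure')]

# With the functions above:
# crawl_pages(1, 10, lambda page: get_response(create_request(page)), down_load)
```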

8. urllib Ajax POST requests

# Page 1: POST http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname
# cname: 北京
# pid:
# pageIndex: 1
# pageSize: 10

# Page 2: same URL, only pageIndex changes
# cname: 北京
# pid:
# pageIndex: 2
# pageSize: 10

import urllib.request
import urllib.parse


# Customize the request object
def create_request(page):
    url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname'
    data = {
        'cname': '北京',
        'pid': '',
        'pageIndex': page,
        'pageSize': '10'
    }
    # POST parameters must be urlencoded and then encoded to bytes
    data = urllib.parse.urlencode(data).encode('utf-8')
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
    }
    request = urllib.request.Request(url=url, data=data, headers=headers)
    return request


# Get the response data (the request is passed in explicitly instead of read from a global)
def get_response(request):
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    return content


# Download the data
def down_load(page, content):
    with open('kfc' + str(page) + '.json', 'w', encoding='utf-8') as f:
        f.write(content)


if __name__ == '__main__':
    start_page = int(input("Enter the start page: "))
    end_page = int(input("Enter the end page: "))
    for page in range(start_page, end_page + 1):
        request = create_request(page)
        content = get_response(request)
        down_load(page, content)
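In this Ajax POST case the URL never changes; only the form body does, which is why create_request takes the page number. The small offline check below shows what would be sent for two pages, without sending anything. The query string op=cname stays in the URL, while pageIndex changes inside the bytes body. urlencode converts the integer page to a string automatically.

```python
import urllib.parse
import urllib.request

url = "http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=cname"

bodies = []
for page in (1, 2):
    form = {"cname": "北京", "pid": "", "pageIndex": page, "pageSize": "10"}
    body = urllib.parse.urlencode(form).encode("utf-8")
    request = urllib.request.Request(url=url, data=body)
    bodies.append(body)
    # The path and query (selector) stay the same; only the body differs per page
    print(request.get_method(), request.selector, body)
```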

9. urllib exceptions

#https://blog.csdn.net/weixin_43936332/article/details/131465538
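The example below only prints a fixed message. When debugging, it helps to report what actually went wrong. An HTTPError carries the status code in .code, and a URLError explains the failure in .reason. Below is a minimal sketch; fetch_text is a hypothetical helper, and the 10-second timeout is an arbitrary choice. It is run against a data: URL and an unsupported scheme, so it works offline.

```python
import urllib.error
import urllib.request


def fetch_text(url, timeout=10):
    """Hypothetical helper: return the page text, or a short description of the error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read().decode("utf-8")
    except urllib.error.HTTPError as e:  # the server answered with an error status (4xx/5xx)
        return f"HTTP error {e.code}"
    except urllib.error.URLError as e:  # no usable answer: bad host, refused connection, ...
        return f"URL error: {e.reason}"


print(fetch_text("data:,ok"))             # ok
print(fetch_text("nosuchscheme://host"))  # URL error: unknown url type: nosuchscheme
```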

import urllib.request
import urllib.error

url = 'http://douban111.com'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
}

try:
    request = urllib.request.Request(url=url, headers=headers)
    response = urllib.request.urlopen(request)
    content = response.read().decode('utf-8')
    print(content)
except urllib.error.HTTPError:  # a subclass of URLError, so it must be caught first
    print("The system is being upgraded")
except urllib.error.URLError:
    print("The system really is being upgraded")

10. urllib cookies: QQ Zone login

import urllib.request

url = 'https://qzone.qq.com/'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82',
    # The cookie carries the login information: with a cookie from a logged-in session,
    # the request can open pages that require login

'Cookie':'RK=bOytW6VLlo; ptcz=7e42b5634fed507c62cd10eac2db090c6086233be352a3b5acd6289c1e5cb0c7; pgv_pvid=2191120395; tvfe_boss_uuid=7a43881ad4b8395d; o_cookie=2647969182; iip=0; pac_uid=1_2647969182; fqm_pvqid=6348b068-eacc-4111-a1b6-b4fb7a040cde; qq_domain_video_guid_verify=7365d123d341ac43; _qz_referrer=cn.bing.com; _qpsvr_localtk=0.33179965702435776; pgv_info=ssid=s5577236883',

    # Referer tells the server which page the request came from;
    # it is typically checked for image hotlink protection
    'Referer': 'https://cn.bing.com/'
}

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)

10. Basic use of urllib Handlers

import urllib.request

url = 'https://www.baidu.com/'

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'
}

request = urllib.request.Request(url=url, headers=headers)

# response = urllib.request.urlopen(request)

# Get a handler object
handler = urllib.request.HTTPHandler()

# Build an opener object from the handler
# (build_opener also adds the default handlers, including HTTPSHandler, so this https URL still works)
opener = urllib.request.build_opener(handler)

# Call the opener's open method
response = opener.open(request)

content = response.read().decode('utf-8')

print(content)
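Handlers pay off when several requests share the same setup. The sketch below assumes you want every request sent through one opener to carry the same User-Agent. Setting opener.addheaders replaces the default ('User-agent', 'Python-urllib/X.Y') pair for HTTP requests. HTTPHandler(debuglevel=1) makes http.client print the raw request and response headers for http:// URLs, which helps check what the server actually receives. The opener is tried on a data: URL so the example runs offline, and the shortened UA string is a placeholder.

```python
import urllib.request

UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"  # placeholder User-Agent

# debuglevel=1: http.client prints the raw headers of each http:// request and response
handler = urllib.request.HTTPHandler(debuglevel=1)
opener = urllib.request.build_opener(handler)

# Replace the default ("User-agent", "Python-urllib/X.Y") pair; it is added
# to every HTTP request sent through this opener that does not set its own
opener.addheaders = [("User-agent", UA)]

# opener.open() accepts a URL string or a Request; a data: URL works offline
with opener.open("data:text/plain,handler%20ok") as response:
    body = response.read().decode("ascii")
print(body)  # handler ok
```

Calling urllib.request.install_opener(opener) would make plain urlopen() use this opener as well.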

11. urllib proxies

Free high-anonymity HTTP proxy IPs in China: 快代理 / Kuaidaili (kuaidaili.com)
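ProxyHandler routes traffic by URL scheme: it only proxies the schemes that appear as keys in the dict. With just an 'http' key, as in the example below, http:// URLs go through the proxy but https:// URLs are sent directly. The quick offline check below confirms this; the proxy address is the one from this section and is never contacted. The address is written as a full URL here, although urllib also accepts the bare 'host:port' form. Calling ProxyHandler() without arguments instead reads proxies from environment variables such as http_proxy.

```python
import urllib.request

proxies = {"http": "http://223.70.126.84:8888"}
handler = urllib.request.ProxyHandler(proxies=proxies)

# ProxyHandler creates one <scheme>_open method per key in the dict
print(hasattr(handler, "http_open"))   # True: http:// URLs use the proxy
print(hasattr(handler, "https_open"))  # False: https:// URLs bypass it

# To proxy HTTPS as well, add an "https" key
both = urllib.request.ProxyHandler({"http": "http://223.70.126.84:8888",
                                    "https": "http://223.70.126.84:8888"})
print(hasattr(both, "https_open"))     # True
```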

import urllib.request

url = 'http://www.baidu.com/s?wd=ip'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

request = urllib.request.Request(url=url,headers=headers)

proxies = {

'http':'223.70.126.84:8888'

}

handler = urllib.request.ProxyHandler(proxies=proxies)

opener = urllib.request.build_opener(handler)

response = opener.open(request)

content = response.read().decode('utf-8')

print(content)

12. urllib proxy pool

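import random
import urllib.request

# Each entry maps a scheme to 'ip:port'. The addresses below are not valid
# 'ip:port' strings (the port separator is missing); replace them with working proxies
proxies_pool = [
    {'http': '118.24.151.16817'},
    {'http': '118.24.151.16817'}
]

# Pick one proxy at random for this request
proxies = random.choice(proxies_pool)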

url = 'http://www.baidu.com/s?wd=ip'

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36 Edg/114.0.1823.82'

}

request = urllib.request.Request(url=url,headers=headers)

handler = urllib.request.ProxyHandler(proxies=proxies)

opener = urllib.request.build_opener(handler)

response = opener.open(request)

content = response.read().decode('utf-8')

with open('daili.html', 'w', encoding='utf-8') as fp:
    fp.write(content)
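random.choice picks one proxy and gives up if that proxy is dead, which happens often with free proxies. Below is a sketch of a pool that tries the proxies in random order until one answers. open_with_pool is a hypothetical helper. It catches OSError because URLError, socket timeouts and refused connections all derive from it. The offline demo uses a data: URL, which ProxyHandler does not route through a proxy. It also uses an unsupported scheme to show what happens when every proxy fails. The 127.0.0.1 addresses are placeholders.

```python
import random
import urllib.request


def open_with_pool(request, proxies_pool, timeout=10):
    """Hypothetical helper: try proxies in random order, return the first body that arrives."""
    candidates = list(proxies_pool)
    random.shuffle(candidates)
    last_error = None
    for proxies in candidates:
        opener = urllib.request.build_opener(urllib.request.ProxyHandler(proxies=proxies))
        try:
            with opener.open(request, timeout=timeout) as response:
                return response.read()
        except OSError as e:  # URLError, timeouts and refused connections are all OSError
            last_error = e
    raise RuntimeError("every proxy in the pool failed") from last_error


pool = [{"http": "127.0.0.1:9"}, {"http": "127.0.0.1:10"}]  # placeholder addresses
print(open_with_pool("data:,pool%20ok", pool))  # b'pool ok'

all_failed = False
try:
    open_with_pool("nosuchscheme://host", pool)
except RuntimeError:
    all_failed = True
print(all_failed)  # True
```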