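Week G2: Face Image Generation (DCGAN)

This post is a learning log for the 365-Day Deep Learning Training Camp.

Original author: K同学啊 | tutoring and custom projects available

My environment:

1. Language: Python 3.7

2. IDE: PyCharm

3. Deep learning framework: PyTorch 1.8.0+cu111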

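A Deep Convolutional Generative Adversarial Network (DCGAN) is a variant of the generative adversarial network (GAN) designed specifically for generating images. DCGAN builds on convolutional neural networks (CNNs), which lets it learn image features and textures more effectively and therefore generate more realistic images.

The main features and principles of DCGAN are:

- Generator network:

  - The generator upsamples with transposed convolution layers (ConvTranspose), gradually building an image from the latent space. These layers learn the detail and structure of the image.

  - The generator usually takes a small noise vector (often called the latent vector) as input and turns it into an image through several transposed convolution layers.

- Discriminator network:

  - The discriminator downsamples with convolution layers, reducing the resolution of the input image step by step, and finally outputs a single binary decision: whether the input is a real image or a fake image produced by the generator.

  - This design lets the discriminator capture both local features and the overall structure of an image.

- Convolution layers and batch normalization:

  - DCGAN uses convolution layers instead of fully connected layers, which helps capture spatial relationships and makes the network better suited to image generation.

  - Batch normalization is used widely in both the generator and the discriminator. It helps stabilize training and improves model performance.

- Activation functions:

  - The generator usually uses ReLU (Rectified Linear Unit) in its hidden layers and Tanh at the output, while the discriminator usually uses Leaky ReLU, which keeps a small gradient for negative inputs and so helps avoid vanishing gradients.

- Loss functions:

  - A GAN loss consists of a generator loss and a discriminator loss. The generator is trained to make the discriminator classify generated images as real, while the discriminator is trained to maximize the probability of classifying real and generated images correctly.

- Image generation process:

  - During training, the generator and the discriminator learn adversarially. The generator tries to fool the discriminator by producing more realistic images, and the discriminator tries to tell real images from generated ones.

DCGAN has been applied successfully to many image generation tasks, including face generation, style transfer, and image editing. Its convolutional structure and adversarial training strategy let it capture and generate complex image content.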
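To make the loss description above concrete, here is a minimal sketch of how binary cross-entropy turns discriminator scores into the two losses. The scores `d_real` and `d_fake` are made-up numbers chosen only for illustration, and `bce` is a hypothetical helper for a single prediction, not part of PyTorch.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))

# Hypothetical discriminator outputs, chosen only for illustration.
d_real = 0.9  # D(x): score given to a real image
d_fake = 0.2  # D(G(z)): score given to a generated image

# Discriminator: real images should score 1, generated images should score 0.
loss_d = bce(d_real, 1) + bce(d_fake, 0)

# Generator: it wants the discriminator to score its images as 1.
loss_g = bce(d_fake, 1)

print(f"loss_d = {loss_d:.4f}, loss_g = {loss_g:.4f}")
```

With these numbers the discriminator is doing well, so its loss is small and the generator's loss is large. As the generator improves, `d_fake` rises and the balance shifts.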

I. Preparation

1. Import libraries

import torch, random, os
import torch.nn as nn
import torch.nn.parallel
import torch.optim as optim
import torch.utils.data
import torchvision.datasets as dset
import torchvision.transforms as transforms
import torchvision.utils as vutils
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.animation as animation
from IPython.display import HTML
from multiprocessing import freeze_support

# Fix the random seed so results are reproducible
manualSeed = 999
print("Random seed:", manualSeed)
random.seed(manualSeed)
torch.manual_seed(manualSeed)
# Require deterministic algorithms; operations without one raise an error
torch.use_deterministic_algorithms(True)

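2. Set hyperparameters

if __name__ == '__main__':
    freeze_support()
    dataroot = "E:/GAN/"
    batch_size = 128
    image_size = 64
    nz = 100  # size of the generator input (latent vector)
    ngf = 64  # feature map size in the generator
    ndf = 64  # feature map size in the discriminator
    num_epochs = 50
    lr = 0.0002
    beta1 = 0.5  # beta1 hyperparameter for the Adam optimizer

Place the code that follows in the positions shown below: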

import torch
import random
import os
import torch.nn as nn
import torch.nn.parallel
import torch.optim as optim
import torch.utils.data
import torchvision.datasets as dset
import torchvision.transforms as transforms
import torchvision.utils as vutils
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.animation as animation
from IPython.display import HTML
from multiprocessing import freeze_support  # import freeze_support

manualSeed = 999
print("Random seed:", manualSeed)
random.seed(manualSeed)
torch.manual_seed(manualSeed)
torch.use_deterministic_algorithms(True)

# rest of the module-level code...

if __name__ == '__main__':
    freeze_support()
    dataroot = "E:/GAN/"
    batch_size = 128
    image_size = 64
    nz = 100  # size of the generator input (latent vector)
    ngf = 64  # feature map size in the generator
    ndf = 64  # feature map size in the discriminator
    num_epochs = 50
    lr = 0.0002
    beta1 = 0.5  # beta1 hyperparameter for the Adam optimizer

    # rest of the code...

3. Load the data

dataset = dset.ImageFolder(root=dataroot,
                           transform=transforms.Compose([
                               transforms.Resize(image_size),
                               transforms.CenterCrop(image_size),
                               transforms.ToTensor(),
                               transforms.Normalize((0.5, 0.5, 0.5),
                                                    (0.5, 0.5, 0.5)),
                           ]))
dataloader = torch.utils.data.DataLoader(
    dataset,
    batch_size=batch_size,
    shuffle=True,
    num_workers=5  # number of worker processes used to load data
)
device = torch.device('cuda:0' if torch.cuda.is_available() else "cpu")
print("Device in use:", device)

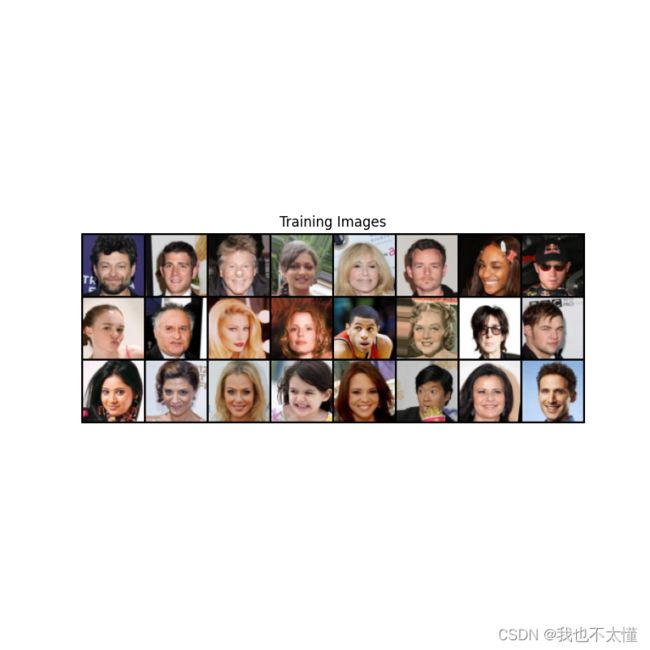

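# Plot some training images
real_batch = next(iter(dataloader))
plt.figure(figsize=(8, 8))
plt.axis("off")
plt.title("Training Images")
plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:24],
                                         padding=2,
                                         normalize=True).cpu(), (1, 2, 0)))
plt.show()

The ImageFolder class loads the image dataset. The transform argument defines a chain of image transforms: resize, center crop, conversion to a tensor, and normalization.

DataLoader wraps the dataset in an iterable loader. batch_size sets the number of samples per batch, shuffle=True reshuffles the data at every epoch, and num_workers sets the number of worker processes (not threads) used to load data.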
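The normalization step is worth a closer look. `ToTensor()` yields pixel values in [0, 1], and `Normalize` with mean 0.5 and standard deviation 0.5 computes `(x - 0.5) / 0.5` per channel, which maps them to [-1, 1], the same range a Tanh output layer produces. The sketch below shows the arithmetic on single values; `normalize` and `denormalize` are illustrative helpers, not torchvision functions.

```python
def normalize(value, mean=0.5, std=0.5):
    """Same arithmetic Normalize applies to each pixel: (x - mean) / std."""
    return (value - mean) / std

def denormalize(value, mean=0.5, std=0.5):
    """Inverse mapping, useful before displaying an image."""
    return value * std + mean

for pixel in (0.0, 0.25, 0.5, 1.0):
    scaled = normalize(pixel)
    print(f"{pixel:.2f} -> {scaled:+.2f} -> {denormalize(scaled):.2f}")
```

This is also why the plotting code passes `normalize=True` to `make_grid`: it rescales the values back into [0, 1] so that `imshow` can display them.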

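II. Define the model

1. Initialize weights

def weights_init(m):
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        # Convolution layers: weights drawn from N(0, 0.02)
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find('BatchNorm') != -1:
        # BatchNorm layers: scale drawn from N(1, 0.02), bias set to 0
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)

2. Define the generator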

class Generator(nn.Module):
    def __init__(self):
        super(Generator, self).__init__()
        self.main = nn.Sequential(
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 8),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            nn.ConvTranspose2d(ngf, 3, 4, 2, 1, bias=False),
            nn.Tanh()
        )

    def forward(self, input):
        return self.main(input)

netG = Generator().to(device)
netG.apply(weights_init)
print(netG)

Output:

Generator(
  (main): Sequential(
    (0): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (5): ReLU(inplace=True)
    (6): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (7): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (8): ReLU(inplace=True)
    (9): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (10): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (11): ReLU(inplace=True)
    (12): ConvTranspose2d(64, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (13): Tanh()
  )
)
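As a quick sanity check on the generator's layer arguments, the spatial size after each transposed convolution can be traced by hand. The sketch below uses the output-size formula from the PyTorch `ConvTranspose2d` documentation, assuming dilation 1 and no output padding (the defaults). The helper name `conv_transpose_out` is made up for illustration.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) of the five ConvTranspose2d layers in the generator
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the latent vector enters as a 1x1 feature map
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(" -> ".join(str(s) for s in sizes))
```

The first layer expands the 1x1 input to 4x4, and each of the following layers doubles the size, ending at 64x64, which matches `image_size`.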

3. Define the discriminator

class Discriminator(nn.Module):
    def __init__(self):
        super(Discriminator, self).__init__()
        self.main = nn.Sequential(
            nn.Conv2d(3, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        return self.main(input)

netD = Discriminator().to(device)
netD.apply(weights_init)
print(netD)

Output:

Discriminator(
  (main): Sequential(
    (0): Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (1): LeakyReLU(negative_slope=0.2, inplace=True)
    (2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (4): LeakyReLU(negative_slope=0.2, inplace=True)
    (5): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (7): LeakyReLU(negative_slope=0.2, inplace=True)
    (8): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (9): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (10): LeakyReLU(negative_slope=0.2, inplace=True)
    (11): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (12): Sigmoid()
  )
)
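The discriminator mirrors the generator: each strided convolution halves the spatial size until a single value per image remains. A minimal sketch of that trace follows, using the standard `Conv2d` output-size formula with dilation 1. The helper name `conv_out` is an assumption made for this example.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a convolution (dilation 1), rounding down."""
    return (size + 2 * padding - kernel) // stride + 1

# (kernel, stride, padding) of the five Conv2d layers in the discriminator
layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

size = 64  # input images are 64x64 after Resize and CenterCrop
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_out(size, kernel, stride, padding)
    sizes.append(size)

print(" -> ".join(str(s) for s in sizes))
```

The final 1x1 map is what `.view(-1)` in the training loop flattens into one probability per image.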

三、训练模型

1、训练模型

criterion = nn.BCELoss()

fixed_noise = torch.randn(64, nz, 1, 1, device=device)

real_label = 1

fake_label = 0

optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))

optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))

img_list = []

G_losses = []

D_losses = []

iters = 0

print("Starting Training Loop. . .") # 输出训练开始的提示信息#对于每个epoch(训练周期〉

for epoch in range(num_epochs):

# 对于dataloader中的每个batch

for i, data in enumerate(dataloader, 0):

netD.zero_grad() # 清除判别器网络的梯度#准备真实图像的数据

real_cpu = data[0].to(device)

b_size = real_cpu.size(0)

label = torch.full((b_size,), real_label, dtype=torch.float, device=device)

# 将真实图像样本输入判别器,进行前向传播

output = netD(real_cpu).view(- 1)

errD_real = criterion(output, label) # 通过反向传播计算判别器的梯度

errD_real.backward()

D_x = output.mean().item()

noise = torch.randn(b_size, nz, 1, 1, device=device)

fake = netG(noise)

label.fill_(fake_label)

output = netD(fake.detach()).view(-1)

errD_fake = criterion(output, label) # 通过反向传播计算判别器的梯度

errD_fake.backward()

D_G_z1 = output.mean().item()

errD = errD_real + errD_fake

optimizerD.step()

netG.zero_grad() # 清除生成器网络的梯度

label.fill_(real_label)

output = netD(fake).view(-1)

# 根据判别器的输出计算生成器的损失

errG = criterion(output, label)

# 通过反向传播计算生成器的梯度

errG.backward()

D_G_z2 = output.mean().item()

optimizerG.step()

# 输出训练统计信息

if i % 400 == 0:

print("[%d/%d][%d/%d]\tLoss_D: %.4f\tlOSS_G: %.4f\tD(x): %.4f\D(G(z)): %.4f / %.4f"

% (epoch, num_epochs, i, len(dataloader), errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))

G_losses.append(errG.item())

D_losses.append(errD.item())

if (iters % 500 == 0) or ((epoch == num_epochs - 1) and (i == len(dataloader) - 1)):

with torch.no_grad():

fake = netG(fixed_noise).detach().cpu()

img_list.append(vutils.make_grid(fake, padding=2, normalize=True))

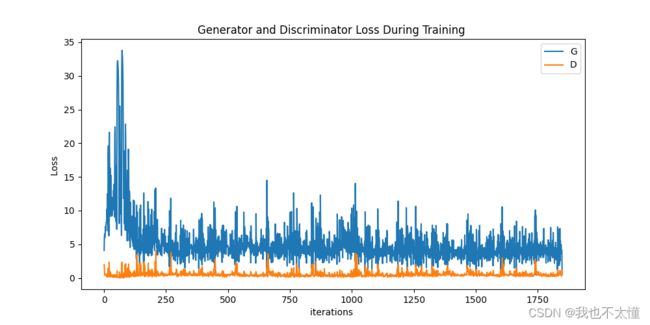

iters += 1 使用二进制交叉熵损失作为损失函数,并分别为判别器和生成器定义 Adam 优化器。固定噪声用于在训练过程中生成一组相同的假图像。real_label 和 fake_label 是用于标记真实和生成图像的标签。

训练循环通过对抗训练使生成器生成逼真的图像,同时判别器试图区分真实和生成的图像。在训练过程中,生成器和判别器相互竞争,逐渐提高其性能。注意,这段代码假设您的生成器(netG)和判别器(netD)的定义是正确的,且数据集加载正确。
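One detail of the loop that is easy to overlook is the `fake.detach()` call in the discriminator step. The sketch below, which uses tiny linear layers as stand-ins for the real networks (an assumption made purely to keep the example small), shows that detaching stops gradients from reaching the generator, while the later generator step without `detach()` does produce them.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Tiny stand-ins for illustration only; these are not the DCGAN networks.
G = nn.Linear(4, 8)
D = nn.Sequential(nn.Linear(8, 1), nn.Sigmoid())
criterion = nn.BCELoss()

noise = torch.randn(16, 4)
fake = G(noise)

# Discriminator step: detach() cuts the graph, so no gradient reaches G.
out = D(fake.detach()).view(-1)
criterion(out, torch.zeros(16)).backward()
g_grad_after_d_step = G.weight.grad  # still None

# Generator step: no detach, and the target label is "real" (1).
D.zero_grad()
out = D(fake).view(-1)
criterion(out, torch.ones(16)).backward()
g_grad_after_g_step = G.weight.grad  # now a tensor shaped like G.weight

print(g_grad_after_d_step is None, tuple(g_grad_after_g_step.shape))
```

The generator step also leaves gradients on the discriminator's parameters, which is one reason the loop calls `netD.zero_grad()` at the start of every iteration.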

2. Visualization

# Plot the generator and discriminator losses
plt.figure(figsize=(10, 5))
plt.title("Generator and Discriminator Loss During Training")
plt.plot(G_losses, label="G")
plt.plot(D_losses, label="D")
plt.xlabel("Iterations")
plt.ylabel("Loss")
plt.legend()
plt.show()

# Create an 8x8-inch figure
fig = plt.figure(figsize=(8, 8))
# Hide the axes
plt.axis("off")
# Transpose each grid in img_list to (H, W, C) and wrap each drawn frame in its own list
ims = [[plt.imshow(np.transpose(i, (1, 2, 0)), animated=True)] for i in img_list]
# Build the animation from the figure and the list of frames
ani = animation.ArtistAnimation(fig, ims, interval=1000, repeat_delay=1000, blit=True)
# Render the animation as HTML (only displayed in a Jupyter/IPython notebook)
HTML(ani.to_jshtml())

# Grab a batch of real images from the dataloader
real_batch = next(iter(dataloader))
# Plot the real images
plt.figure(figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.axis("off")
plt.title("Real Images")
plt.imshow(
    np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=5, normalize=True).cpu(), (1, 2, 0)))
# Plot the fake images generated in the last epoch
plt.subplot(1, 2, 2)
plt.axis("off")
plt.title("Fake Images")
plt.imshow(np.transpose(img_list[-1], (1, 2, 0)))
plt.show()