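Flink: letting the configuration stream go first, and holding the data stream back a few seconds before the merge node, when a pipeline that merges a configuration stream and a data stream by broadcast is rerun without a savepoint or checkpoint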

1. Background

While my team was developing expert-strategy rule pipelines on XSailboat, our in-house big data platform, we ran into the following situation:

The pipeline merges a configuration stream and a data stream by broadcasting. Both streams read from Kafka, but from two different topics.

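The configuration topic stores the rule configuration, and the data topic stores the data to be analyzed. During development and debugging, both topics already contained records. As soon as a debug run started, data-stream records reached the merge node and were processed first, while the configuration records arrived later. As a result, the configuration was not fully applied during development and debugging.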

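Our goal is for the configuration stream to reach the merge node first. The data stream should start flowing in only after all configuration records have been written into the broadcast state.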

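This is only needed on a first run without a savepoint or checkpoint. When the job is restored from a savepoint or checkpoint, the broadcast state is restored along with it. Once the pipeline is running normally, configuration records arriving at their normal pace is not a problem.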

2. Exploring solutions

2.1 Option 1: add a fetch delay to the Kafka source node

Flink's built-in KafkaSource has no such capability, so the relevant classes would have to be rewritten.

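It turned out, however, that the KafkaSource constructor is package-private rather than public or protected, so subclassing it is not possible. The only way is to copy the code into a new class of our own and modify it there. That is not a good solution, and we did not adopt it in the end. The key modification points are still recorded below.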

- org.apache.flink.connector.kafka.source.KafkaSource (entry point)

```java
@Internal
@Override
public SourceReader<OUT, KafkaPartitionSplit> createReader(SourceReaderContext readerContext)
        throws Exception {
    return createReader(readerContext, (ignore) -> {});
}

@VisibleForTesting
SourceReader<OUT, KafkaPartitionSplit> createReader(
        SourceReaderContext readerContext, Consumer<Collection<String>> splitFinishedHook)
        throws Exception {
    FutureCompletingBlockingQueue<RecordsWithSplitIds<ConsumerRecord<byte[], byte[]>>>
            elementsQueue = new FutureCompletingBlockingQueue<>();
    deserializationSchema.open(
            new DeserializationSchema.InitializationContext() {
                @Override
                public MetricGroup getMetricGroup() {
                    return readerContext.metricGroup().addGroup("deserializer");
                }

                @Override
                public UserCodeClassLoader getUserCodeClassLoader() {
                    return readerContext.getUserCodeClassLoader();
                }
            });
    final KafkaSourceReaderMetrics kafkaSourceReaderMetrics =
            new KafkaSourceReaderMetrics(readerContext.metricGroup());

    Supplier<KafkaPartitionSplitReader> splitReaderSupplier =
            () -> new KafkaPartitionSplitReader(props, readerContext, kafkaSourceReaderMetrics);
    KafkaRecordEmitter<OUT> recordEmitter = new KafkaRecordEmitter<>(deserializationSchema);

    return new KafkaSourceReader<>(
            elementsQueue,
            // KafkaSourceFetcherManager manages data fetching; this is the class to focus on
            new KafkaSourceFetcherManager(
                    elementsQueue, splitReaderSupplier::get, splitFinishedHook),
            recordEmitter,
            toConfiguration(props),
            readerContext,
            kafkaSourceReaderMetrics);
}
```

- Superclass of org.apache.flink.connector.kafka.source.reader.fetcher.KafkaSourceFetcherManager: org.apache.flink.connector.base.source.reader.fetcher.SingleThreadFetcherManager

```java
// SplitT is a unit of work that can be processed in parallel; for Kafka, one split per partition
@Override
public void addSplits(List<SplitT> splitsToAdd) {
    SplitFetcher<E, SplitT> fetcher = getRunningFetcher();
    if (fetcher == null) {
        // If no fetcher is running, create a new one
        fetcher = createSplitFetcher();
        // Add the splits to the fetchers.
        fetcher.addSplits(splitsToAdd);
        // Start the fetcher; startFetcher is shown below
        startFetcher(fetcher);
    } else {
        fetcher.addSplits(splitsToAdd);
    }
}
```

- Root base class of org.apache.flink.connector.kafka.source.reader.fetcher.KafkaSourceFetcherManager: org.apache.flink.connector.base.source.reader.fetcher.SplitFetcherManager

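```java
protected void startFetcher(SplitFetcher<E, SplitT> fetcher) {
    // Submit the fetcher to the thread pool.
    // To make the fetcher wait n seconds before fetching, use a timer and submit it
    // after n seconds. The delay n has to be passed down through every layer.
    // For example (in practice, reuse one scheduler rather than creating a pool per call):
    // Executors.newScheduledThreadPool(1).schedule(() -> executors.submit(fetcher), n, TimeUnit.SECONDS);
    executors.submit(fetcher);
}
```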
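The comment in startFetcher can be made concrete. The sketch below uses plain java.util.concurrent and is not a patch to Flink. The class DelayedFetcherStart and its startDelayMs parameter are hypothetical stand-ins for "the delay n passed down through every layer". One deliberate difference from the one-liner in the comment: it reuses a single ScheduledExecutorService. Calling Executors.newScheduledThreadPool(1) on every call would leave an idle scheduler thread alive each time.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Toy stand-in for SplitFetcherManager#startFetcher; not Flink code.
// startDelayMs is a hypothetical parameter that would have to be passed down from the source.
final class DelayedFetcherStart {
    private final ExecutorService executors = Executors.newCachedThreadPool();
    // One shared scheduler instead of a new pool on every call.
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final long startDelayMs;

    DelayedFetcherStart(long startDelayMs) {
        this.startDelayMs = startDelayMs;
    }

    void startFetcher(Runnable fetcher) {
        if (startDelayMs <= 0) {
            executors.submit(fetcher);
        } else {
            // Block lambda selects the Runnable overload; the fetcher is submitted after the delay.
            scheduler.schedule(() -> { executors.submit(fetcher); }, startDelayMs, TimeUnit.MILLISECONDS);
        }
    }

    void shutdown() throws InterruptedException {
        scheduler.shutdown(); // already-scheduled submissions still run (default policy)
        scheduler.awaitTermination(1, TimeUnit.MINUTES);
        executors.shutdown();
        executors.awaitTermination(1, TimeUnit.MINUTES);
    }

    // Milliseconds between calling startFetcher() and the fetcher actually starting to run.
    static long measureStartLatencyMs(long startDelayMs) {
        DelayedFetcherStart manager = new DelayedFetcherStart(startDelayMs);
        CountDownLatch started = new CountDownLatch(1);
        AtomicLong startedAt = new AtomicLong();
        long t0 = System.nanoTime();
        manager.startFetcher(() -> {
            startedAt.set(System.nanoTime());
            started.countDown();
        });
        try {
            started.await(10, TimeUnit.SECONDS);
            manager.shutdown();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return TimeUnit.NANOSECONDS.toMillis(startedAt.get() - t0);
    }

    public static void main(String[] args) {
        System.out.println("no delay: " + measureStartLatencyMs(0) + " ms");
        System.out.println("300 ms delay: " + measureStartLatencyMs(300) + " ms");
    }
}
```

In a real fetcher manager, the scheduler would also have to be shut down together with the fetchers when the reader closes.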

2.2 Option 2: suspend data-stream processing at the merge node until the configuration stream has been processed

This option turned out not to work. The exploration still improved our understanding of Flink, so it is recorded here.

The configuration stream is broadcast and merged with the data stream. The function interface used for the merge is:

- org.apache.flink.streaming.api.functions.co.CoProcessFunction

```java
public abstract class CoProcessFunction<IN1, IN2, OUT> extends AbstractRichFunction {

    private static final long serialVersionUID = 1L;

    /**
     * This method is called for each element in the first of the connected streams.
     *
     * This function can output zero or more elements using the {@link Collector} parameter and
     * also update internal state or set timers using the {@link Context} parameter.
     *
     * @param value The stream element
     * @param ctx A {@link Context} that allows querying the timestamp of the element, querying the
     *     {@link TimeDomain} of the firing timer and getting a {@link TimerService} for registering
     *     timers and querying the time. The context is only valid during the invocation of this
     *     method, do not store it.
     * @param out The collector to emit resulting elements to
     * @throws Exception The function may throw exceptions which cause the streaming program to fail
     *     and go into recovery.
     */
    public abstract void processElement1(IN1 value, Context ctx, Collector<OUT> out)
            throws Exception;

    /**
     * This method is called for each element in the second of the connected streams.
     *
     * This function can output zero or more elements using the {@link Collector} parameter and
     * also update internal state or set timers using the {@link Context} parameter.
     *
     * @param value The stream element
     * @param ctx A {@link Context} that allows querying the timestamp of the element, querying the
     *     {@link TimeDomain} of the firing timer and getting a {@link TimerService} for registering
     *     timers and querying the time. The context is only valid during the invocation of this
     *     method, do not store it.
     * @param out The collector to emit resulting elements to
     * @throws Exception The function may throw exceptions which cause the streaming program to fail
     *     and go into recovery.
     */
    public abstract void processElement2(IN2 value, Context ctx, Collector<OUT> out)
            throws Exception;

    /**
     * Called when a timer set using {@link TimerService} fires.
     *
     * @param timestamp The timestamp of the firing timer.
     * @param ctx An {@link OnTimerContext} that allows querying the timestamp of the firing timer,
     *     querying the {@link TimeDomain} of the firing timer and getting a {@link TimerService}
     *     for registering timers and querying the time. The context is only valid during the
     *     invocation of this method, do not store it.
     * @param out The collector for returning result values.
     * @throws Exception This method may throw exceptions. Throwing an exception will cause the
     *     operation to fail and may trigger recovery.
     */
    public void onTimer(long timestamp, OnTimerContext ctx, Collector<OUT> out) throws Exception {}
}
```

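Suspending the data stream while the configuration stream is processed first has a precondition. The two handler methods, one for configuration elements and one for data elements, must run asynchronously on different threads. Only then does one thread sleeping leave the other method free to run.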

Whether this precondition holds needs to be verified, so I wrote the following test:

```java
import java.util.List;

import org.apache.flink.api.common.typeinfo.BasicTypeInfo;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.CoProcessFunction;
import org.apache.flink.types.Row;
import org.apache.flink.util.Collector;
// ERowTypeInfo and CS are not Flink classes (presumably XSailboat helpers); their imports are omitted

public class Test1
{
    static ERowTypeInfo initInputs(List<Row> aInputs)
    {
        for(int i=0 ; i<100 ; i++)
        {
            Row row_0 = Row.withNames() ;
            // (int)(Math.random()*1) is always 0, so every key is "k0"
            row_0.setField("f0" , "k" + (int)(Math.random()*1)) ;
            row_0.setField("f1" , i) ;
            row_0.setField("f2" , "c"+i) ;
            aInputs.add(row_0) ;
        }
        return new ERowTypeInfo(new TypeInformation[] {
                BasicTypeInfo.STRING_TYPE_INFO ,
                BasicTypeInfo.INT_TYPE_INFO ,
                BasicTypeInfo.STRING_TYPE_INFO ,
        } , new String[] {"f0" , "f1" , "f2"}) ;
    }

    public static void main(String[] args) throws Exception
    {
        StreamExecutionEnvironment streamEnv = StreamExecutionEnvironment.getExecutionEnvironment() ;
        List<Row> inputs1 = CS.arrayList() ;
        List<Row> inputs2 = CS.arrayList() ;
        ERowTypeInfo rowTypeInfo1 = initInputs(inputs1) ;
        ERowTypeInfo rowTypeInfo2 = initInputs(inputs2) ;
        streamEnv.setParallelism(2) ;
        DataStreamSource<Row> ds1 = streamEnv.fromCollection(inputs1 , rowTypeInfo1) ;
        DataStreamSource<Row> ds2 = streamEnv.fromCollection(inputs2 , rowTypeInfo2) ;
        // ds2 is the first input (processElement1); the broadcast ds1 is the second (processElement2)
        ds2.connect(ds1.broadcast()).process(new _CoProcessFunction()).print() ;
        streamEnv.execute() ;
    }

    static class _CoProcessFunction extends CoProcessFunction<Row, Row, Row>{
        private static final long serialVersionUID = 1L;

        public _CoProcessFunction()
        {
            System.out.println(this);
        }

        @Override
        public void processElement1(Row aArg0, CoProcessFunction<Row, Row, Row>.Context aArg1, Collector<Row> aArg2)
                throws Exception
        {
            // Breakpoint 1
            System.out.println(this);
        }

        @Override
        public void processElement2(Row aArg0, CoProcessFunction<Row, Row, Row>.Context aArg1, Collector<Row> aArg2)
                throws Exception
        {
            // Breakpoint 2
            System.out.println(this);
        }
    }
}
```

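In a debug run, two threads (one per parallel subtask, since parallelism is 2) stopped at breakpoint 1, and no thread ever stopped at breakpoint 2. If processElement2 ran on a separate thread, the broadcast elements would have kept arriving at breakpoint 2 while the first two threads were suspended. So within a subtask, stream 1 and stream 2 are processed on the same thread. If the data stream's thread sleeps, the configuration stream cannot be processed either, because it must be handled on that same thread. This option therefore does not work.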
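The conclusion can be reproduced without Flink. The toy model below is plain Java, and SleepBlockingModel and its methods are made-up names. A configuration element is already available, but it sits behind a data element whose handler sleeps. The model runs this once with a thread per input, which is the premise of option 2. It then runs it with one thread for both inputs, which is what the debugger showed.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Toy model, not Flink code. "dataSide" stands in for processElement1, which sleeps;
// "configSide" stands in for processElement2, whose element is already waiting.
final class SleepBlockingModel {

    // Returns how many ms pass before the configuration element gets processed.
    static long configProcessedAfterMs(boolean threadPerInput, long sleepMs) {
        AtomicLong configAtNanos = new AtomicLong(-1);
        long t0 = System.nanoTime();
        Runnable dataSide = () -> sleepQuietly(sleepMs); // "suspend" the data stream
        Runnable configSide = () -> configAtNanos.set(System.nanoTime() - t0);
        if (threadPerInput) {
            // Premise of option 2: each input has its own thread.
            Thread data = new Thread(dataSide, "data");
            Thread config = new Thread(configSide, "config");
            data.start();
            config.start();
            joinQuietly(data);
            joinQuietly(config);
        } else {
            // What the debugger showed: one task thread handles both inputs in turn.
            Thread task = new Thread(() -> { dataSide.run(); configSide.run(); }, "task");
            task.start();
            joinQuietly(task);
        }
        return TimeUnit.NANOSECONDS.toMillis(configAtNanos.get());
    }

    private static void sleepQuietly(long ms) {
        try {
            Thread.sleep(ms);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println("thread per input: " + configProcessedAfterMs(true, 500) + " ms");
        System.out.println("single thread: " + configProcessedAfterMs(false, 500) + " ms");
    }
}
```

With one thread per input, the configuration element is handled almost immediately. With a single thread, it waits out the whole sleep, which is exactly why suspending the data side at the merge node cannot let the configuration go first.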

Thread stack:

Having come this far, we might as well look at how Flink decides whether to call processElement1 or processElement2.

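The deciding logic is in the line highlighted in the thread-stack screenshot, but the source code could not be located directly from there. After some analysis (details omitted), it turns out to be in: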

- org.apache.flink.streaming.runtime.io.StreamMultipleInputProcessor

```java
@Override
public DataInputStatus processInput() throws Exception {
    // Index of the input to read: the first stream or the second stream
    int readingInputIndex;
    if (isPrepared) {
        readingInputIndex = selectNextReadingInputIndex();
    } else {
        // the preparations here are not placed in the constructor because all work in it
        // must be executed after all operators are opened.
        readingInputIndex = selectFirstReadingInputIndex();
    }
    if (readingInputIndex == InputSelection.NONE_AVAILABLE) {
        return DataInputStatus.NOTHING_AVAILABLE;
    }

    lastReadInputIndex = readingInputIndex;
    // Delegate to the processor for the selected input
    DataInputStatus inputStatus = inputProcessors[readingInputIndex].processInput();
    return inputSelectionHandler.updateStatusAndSelection(inputStatus, readingInputIndex);
}
```
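A toy model of this dispatch step may help. It is not Flink code. TwoInputSelectionModel, its Status enum and the NONE_AVAILABLE constant only mimic the names above. Round-robin selection among inputs that hold data is a simplifying assumption; in Flink the choice goes through InputSelectionHandler and input availability. What it does show is the mechanism: a single thread picks an input index, then routes index 0 to processElement1 and index 1 to processElement2.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of StreamMultipleInputProcessor#processInput for a two-input operator.
// Names mimic Flink's, but nothing here is Flink code.
final class TwoInputSelectionModel {
    static final int NONE_AVAILABLE = -1;             // stand-in for InputSelection.NONE_AVAILABLE
    enum Status { MORE_AVAILABLE, NOTHING_AVAILABLE } // stand-in for DataInputStatus

    private final List<Deque<String>> inputs =
            List.of(new ArrayDeque<String>(), new ArrayDeque<String>());
    private final List<String> calls = new ArrayList<>();
    private int lastReadInputIndex = 1; // so that the first pick is input 0

    void offer(int inputIndex, String value) {
        inputs.get(inputIndex).addLast(value);
    }

    // Assumed policy: round-robin over the inputs that currently hold data.
    private int selectNextReadingInputIndex() {
        for (int step = 1; step <= inputs.size(); step++) {
            int candidate = (lastReadInputIndex + step) % inputs.size();
            if (!inputs.get(candidate).isEmpty()) {
                return candidate;
            }
        }
        return NONE_AVAILABLE;
    }

    Status processInput() {
        int readingInputIndex = selectNextReadingInputIndex();
        if (readingInputIndex == NONE_AVAILABLE) {
            return Status.NOTHING_AVAILABLE;
        }
        lastReadInputIndex = readingInputIndex;
        String value = inputs.get(readingInputIndex).pollFirst();
        // Same thread, different method: input 0 -> processElement1, input 1 -> processElement2.
        if (readingInputIndex == 0) {
            processElement1(value);
        } else {
            processElement2(value);
        }
        return Status.MORE_AVAILABLE;
    }

    private void processElement1(String value) { calls.add("processElement1(" + value + ")"); }

    private void processElement2(String value) { calls.add("processElement2(" + value + ")"); }

    List<String> calls() { return calls; }

    public static void main(String[] args) {
        TwoInputSelectionModel model = new TwoInputSelectionModel();
        model.offer(0, "d1");
        model.offer(0, "d2");
        model.offer(1, "c1");
        while (model.processInput() == Status.MORE_AVAILABLE) { }
        // prints [processElement1(d1), processElement2(c1), processElement1(d2)]
        System.out.println(model.calls());
    }
}
```

With data already queued on input 0, data and configuration records simply interleave on one thread, so nothing in this loop gives the configuration side a head start.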

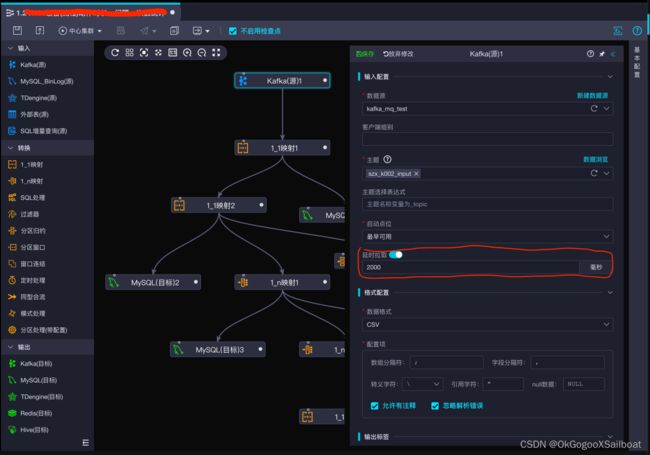

2.3 Option 3 (works): add a delay the first time a record read from Kafka is deserialized

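The data factory in XSailboat includes tooling for developing and operating real-time computation, built on Flink. On top of Flink I developed a set of extension plugins for visual development, plus a middle-platform service that supports visual development. I call this layer SailFlink.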

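In SailFlink, the CSV deserializer of the Kafka source node is our own implementation based on Flink's CsvRowDeserializationSchema, so adding a read delay is easy. The JSON and Avro formats can be modified the same way if needed.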

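```java
@Override
public Row deserialize(byte[] message) throws IOException
{
    if (mFirstRead && mDelayReadMs > 0)
    {
        // Pass mDelayReadMs so that the {} placeholder is actually filled in
        mLogger.info("A read delay of {} ms is configured; this is the first read, so waiting.", mDelayReadMs);
        JCommon.sleep(mDelayReadMs);
        mFirstRead = false ;
    }
    // ... (remainder omitted)
}
```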
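The same idea can be packaged independently of the CSV format. The wrapper below is a hypothetical sketch: DelayedFirstReadDeserializer is not a SailFlink or Flink class, and a plain Function<byte[], T> stands in for the real parser. It sleeps once, before the first message, then delegates every call. Unlike option 2, the sleep here happens in the data-side source, not in the merge operator. Presumably, therefore, the configuration source keeps running in its own task and fills the broadcast state in the meantime. Each parallel source instance gets its own copy of the deserializer, so each subtask waits once.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Hypothetical wrapper, not a SailFlink or Flink class: sleep once before the first
// message, then delegate every call to the real parser.
final class DelayedFirstReadDeserializer<T> {
    private final Function<byte[], T> delegate; // stands in for the CSV/JSON/Avro parser
    private final long delayReadMs;
    // Assumes one instance per parallel reader, used from a single thread.
    private boolean firstRead = true;

    DelayedFirstReadDeserializer(Function<byte[], T> delegate, long delayReadMs) {
        this.delegate = delegate;
        this.delayReadMs = delayReadMs;
    }

    T deserialize(byte[] message) {
        if (firstRead) {
            firstRead = false; // cleared first, so an interrupted sleep is not retried
            if (delayReadMs > 0) {
                try {
                    TimeUnit.MILLISECONDS.sleep(delayReadMs);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // keep the interrupt flag for the caller
                }
            }
        }
        return delegate.apply(message);
    }

    public static void main(String[] args) {
        DelayedFirstReadDeserializer<String> d = new DelayedFirstReadDeserializer<>(
                bytes -> new String(bytes, StandardCharsets.UTF_8), 500);
        for (String line : new String[] {"a,1", "b,2"}) {
            long t0 = System.nanoTime();
            String row = d.deserialize(line.getBytes(StandardCharsets.UTF_8));
            long ms = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - t0);
            System.out.println(row + " after " + ms + " ms");
        }
    }
}
```

A fixed delay is a heuristic. It assumes the configuration topic can be consumed within the configured delay; if the configuration backlog takes longer than that, data records can still overtake it.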