Linux Drivers (8): block, net

This article looks at the block driver and the net driver on the S5PV210 (210).

block

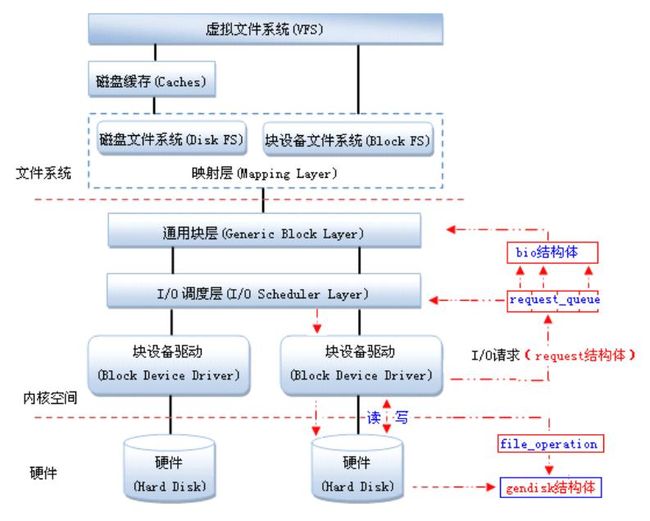

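A block device is a random-access device that is read and written in units of blocks. A buffer holds data temporarily; once a condition is met, the data is written to the device in one go, or read from the device into the buffer.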

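Block devices vs. character devices: the same device can support both block and character access policies. The block device driver layer supports buffering, while the character device driver layer has no buffer.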
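The buffering idea can be modelled in user space. The sketch below is only an illustration, not kernel code: `blk_cache`, `cache_write` and `cache_flush` are hypothetical names, and the 512-byte block size is an assumption. Bytes are staged in a one-block buffer and reach the backing array only when a full block is ready or a flush is forced.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BLOCK_SIZE 512u   /* assumed block size for this model */
#define NBLOCKS    8u     /* size of the pretend device, in blocks */

struct blk_cache {
    unsigned char device[NBLOCKS * BLOCK_SIZE]; /* stands in for the disk */
    unsigned char buf[BLOCK_SIZE];              /* staging buffer */
    size_t fill;        /* bytes currently staged */
    size_t next_block;  /* next device block to write */
    unsigned flushes;   /* number of device writes so far */
};

/* Write the staged data to the device as one whole block (zero padded). */
static int cache_flush(struct blk_cache *c)
{
    assert(c != NULL);
    if (c->fill == 0)
        return 0;                       /* nothing staged */
    if (c->next_block >= NBLOCKS)
        return -1;                      /* device full */
    memset(c->buf + c->fill, 0, BLOCK_SIZE - c->fill);
    memcpy(c->device + c->next_block * BLOCK_SIZE, c->buf, BLOCK_SIZE);
    c->next_block++;
    c->fill = 0;
    c->flushes++;
    return 0;
}

/* Stage data; the device is touched only when a block fills up. */
static int cache_write(struct blk_cache *c, const void *data, size_t len)
{
    const unsigned char *p = data;

    while (len > 0) {
        size_t room = BLOCK_SIZE - c->fill;
        size_t n = len < room ? len : room;

        memcpy(c->buf + c->fill, p, n);
        c->fill += n;
        p += n;
        len -= n;
        if (c->fill == BLOCK_SIZE && cache_flush(c) != 0)
            return -1;
    }
    return 0;
}
```

A character device driver would instead pass each `write` straight through, with no staging step.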

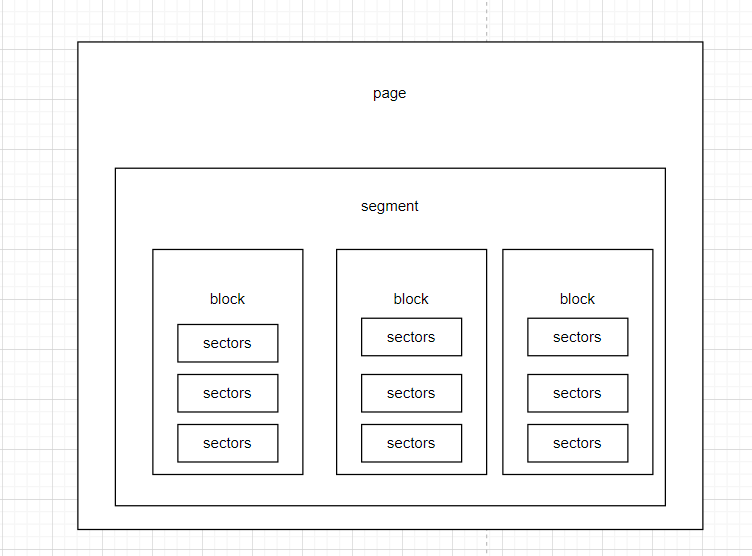

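Block device units: sector (Sectors): one sector is 512 bytes (or a multiple of 512); block (Blocks): one block contains one or more sectors; segment (Segments): made up of several adjacent blocks; page (Page): the basic unit of kernel memory mapping management.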
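The unit relationships reduce to a few shifts and divisions. A minimal sketch, assuming 512-byte sectors; the helper names are made up for illustration and are not kernel functions:

```c
#include <assert.h>
#include <stdint.h>

#define SECTOR_SHIFT 9u                      /* 2^9 = 512 bytes per sector */
#define SECTOR_SIZE  (1u << SECTOR_SHIFT)

/* Byte offset on the device -> sector number. */
static uint64_t byte_to_sector(uint64_t offset)
{
    return offset >> SECTOR_SHIFT;
}

/* How many sectors make up one block of the given size. */
static uint32_t sectors_per_block(uint32_t block_size)
{
    assert(block_size != 0 && block_size % SECTOR_SIZE == 0);
    return block_size / SECTOR_SIZE;
}

/* First sector of a given block. */
static uint64_t block_to_sector(uint64_t block, uint32_t block_size)
{
    return block * sectors_per_block(block_size);
}

/* How many blocks fit in one page. */
static uint32_t blocks_per_page(uint32_t page_size, uint32_t block_size)
{
    assert(block_size != 0 && page_size % block_size == 0);
    return page_size / block_size;
}
```

For example, a 1 MB device holds `byte_to_sector(1024 * 1024)` = 2048 sectors, which is the figure used by the RAM disk demo later in this article.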

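VFS is a software layer in the Linux kernel. It manages the various logical file systems through unified data structures, accepts file system operations from user space, and gives the application layer a standard file operation interface.

Kernel-space layers: the generic block layer (Generic Block Layer) maintains the relationship between I/O requests from the file system and the underlying physical disk (a bio structure corresponds to an I/O request); the I/O scheduler layer sorts the read and write requests that arrive at a block device before executing them, to improve efficiency; the mapping layer (Mapping Layer) maps file accesses to device accesses.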

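Block device driver: performs device I/O by issuing requests to the block device (each described by a request structure). Servicing a request is slow, so the kernel provides a queue mechanism and adds I/O requests to a queue. Before requests are submitted, the kernel merges and sorts them to make access more efficient. The I/O scheduler subsystem submits I/O requests, holds pending block I/O requests, decides the order of the requests in the queue, and dispatches them to the device.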
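Merging and sorting can be illustrated with a toy model. The sketch below is not the kernel's elevator code; `io_req` and `sort_and_merge` are hypothetical names. It sorts requests by start sector and folds together requests that are contiguous on disk. Real I/O schedulers also consider direction, deadlines and size limits, which are left out here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

struct io_req {
    unsigned long sector;      /* first sector of the request */
    unsigned long nr_sectors;  /* length in sectors */
};

static int cmp_sector(const void *a, const void *b)
{
    const struct io_req *x = a, *y = b;

    return (x->sector > y->sector) - (x->sector < y->sector);
}

/* Sort by start sector, then merge requests that touch end to start.
 * Returns the number of requests left in the array. */
static size_t sort_and_merge(struct io_req *reqs, size_t n)
{
    size_t out = 0, i;

    assert(reqs != NULL || n == 0);
    if (n == 0)
        return 0;
    qsort(reqs, n, sizeof(*reqs), cmp_sector);
    for (i = 1; i < n; i++) {
        if (reqs[out].sector + reqs[out].nr_sectors == reqs[i].sector)
            reqs[out].nr_sectors += reqs[i].nr_sectors; /* back merge */
        else
            reqs[++out] = reqs[i];                      /* keep as separate request */
    }
    return out + 1;
}
```

Four requests starting at sectors 16, 0, 100 and 8 (lengths 8, 8, 4, 8) collapse into two: one covering sectors 0 to 23 and one covering 100 to 103.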

Structures

block_device: a block device instance

struct block_device {

dev_t bd_dev; /* not a kdev_t - it's a search key */

struct inode * bd_inode; /* will die */

struct super_block * bd_super;

int bd_openers;

struct mutex bd_mutex; /* open/close mutex */

struct list_head bd_inodes;

void * bd_claiming;

void * bd_holder;

int bd_holders;

#ifdef CONFIG_SYSFS

struct list_head bd_holder_list;

#endif

struct block_device * bd_contains;

unsigned bd_block_size;

struct hd_struct * bd_part;

/* number of times partitions within this device have been opened. */

unsigned bd_part_count;

int bd_invalidated;

struct gendisk * bd_disk;

struct list_head bd_list;

/*

* Private data. You must have bd_claim'ed the block_device

* to use this. NOTE: bd_claim allows an owner to claim

* the same device multiple times, the owner must take special

* care to not mess up bd_private for that case.

*/

unsigned long bd_private;

/* The counter of freeze processes */

int bd_fsfreeze_count;

/* Mutex for freeze */

struct mutex bd_fsfreeze_mutex;

};

hd_struct: partition information

struct hd_struct {

sector_t start_sect;

sector_t nr_sects;

sector_t alignment_offset;

unsigned int discard_alignment;

struct device __dev;

struct kobject *holder_dir;

int policy, partno;

#ifdef CONFIG_FAIL_MAKE_REQUEST

int make_it_fail;

#endif

unsigned long stamp;

int in_flight[2];

#ifdef CONFIG_SMP

struct disk_stats __percpu *dkstats;

#else

struct disk_stats dkstats;

#endif

struct rcu_head rcu_head;

char partition_name[GENHD_PART_NAME_SIZE];

};

request: a request in the kernel request queue

/*

* try to put the fields that are referenced together in the same cacheline.

* if you modify this structure, be sure to check block/blk-core.c:rq_init()

* as well!

*/

struct request {

struct list_head queuelist;

struct call_single_data csd;

struct request_queue *q;

unsigned int cmd_flags;

enum rq_cmd_type_bits cmd_type;

unsigned long atomic_flags;

int cpu;

/* the following two fields are internal, NEVER access directly */

unsigned int __data_len; /* total data len */

sector_t __sector; /* sector cursor */

struct bio *bio;

struct bio *biotail;

struct hlist_node hash; /* merge hash */

/*

* The rb_node is only used inside the io scheduler, requests

* are pruned when moved to the dispatch queue. So let the

* completion_data share space with the rb_node.

*/

union {

struct rb_node rb_node; /* sort/lookup */

void *completion_data;

};

/*

* Three pointers are available for the IO schedulers, if they need

* more they have to dynamically allocate it.

*/

void *elevator_private;

void *elevator_private2;

void *elevator_private3;

struct gendisk *rq_disk;

unsigned long start_time;

#ifdef CONFIG_BLK_CGROUP

unsigned long long start_time_ns;

unsigned long long io_start_time_ns; /* when passed to hardware */

#endif

/* Number of scatter-gather DMA addr+len pairs after

* physical address coalescing is performed.

*/

unsigned short nr_phys_segments;

unsigned short ioprio;

int ref_count;

void *special; /* opaque pointer available for LLD use */

char *buffer; /* kaddr of the current segment if available */

int tag;

int errors;

/*

* when request is used as a packet command carrier

*/

unsigned char __cmd[BLK_MAX_CDB];

unsigned char *cmd;

unsigned short cmd_len;

unsigned int extra_len; /* length of alignment and padding */

unsigned int sense_len;

unsigned int resid_len; /* residual count */

void *sense;

unsigned long deadline;

struct list_head timeout_list;

unsigned int timeout;

int retries;

/*

* completion callback.

*/

rq_end_io_fn *end_io;

void *end_io_data;

/* for bidi */

struct request *next_rq;

};

request_queue: the kernel allocates request resources, builds a linked list of requests, and fills in BIOs to form the queue

struct request_queue

{

/*

* Together with queue_head for cacheline sharing

*/

struct list_head queue_head;

struct request *last_merge;

struct elevator_queue *elevator;

/*

* the queue request freelist, one for reads and one for writes

*/

struct request_list rq;

request_fn_proc *request_fn;

make_request_fn *make_request_fn;

prep_rq_fn *prep_rq_fn;

unplug_fn *unplug_fn;

merge_bvec_fn *merge_bvec_fn;

prepare_flush_fn *prepare_flush_fn;

softirq_done_fn *softirq_done_fn;

rq_timed_out_fn *rq_timed_out_fn;

dma_drain_needed_fn *dma_drain_needed;

lld_busy_fn *lld_busy_fn;

/*

* Dispatch queue sorting

*/

sector_t end_sector;

struct request *boundary_rq;

/*

* Auto-unplugging state

*/

struct timer_list unplug_timer;

int unplug_thresh; /* After this many requests */

unsigned long unplug_delay; /* After this many jiffies */

struct work_struct unplug_work;

struct backing_dev_info backing_dev_info;

/*

* The queue owner gets to use this for whatever they like.

* ll_rw_blk doesn't touch it.

*/

void *queuedata;

/*

* queue needs bounce pages for pages above this limit

*/

gfp_t bounce_gfp;

/*

* various queue flags, see QUEUE_* below

*/

unsigned long queue_flags;

/*

* protects queue structures from reentrancy. ->__queue_lock should

* _never_ be used directly, it is queue private. always use

* ->queue_lock.

*/

spinlock_t __queue_lock;

spinlock_t *queue_lock;

/*

* queue kobject

*/

struct kobject kobj;

/*

* queue settings

*/

unsigned long nr_requests; /* Max # of requests */

unsigned int nr_congestion_on;

unsigned int nr_congestion_off;

unsigned int nr_batching;

void *dma_drain_buffer;

unsigned int dma_drain_size;

unsigned int dma_pad_mask;

unsigned int dma_alignment;

struct blk_queue_tag *queue_tags;

struct list_head tag_busy_list;

unsigned int nr_sorted;

unsigned int in_flight[2];

unsigned int rq_timeout;

struct timer_list timeout;

struct list_head timeout_list;

struct queue_limits limits;

/*

* sg stuff

*/

unsigned int sg_timeout;

unsigned int sg_reserved_size;

int node;

#ifdef CONFIG_BLK_DEV_IO_TRACE

struct blk_trace *blk_trace;

#endif

/*

* reserved for flush operations

*/

unsigned int ordered, next_ordered, ordseq;

int orderr, ordcolor;

struct request pre_flush_rq, bar_rq, post_flush_rq;

struct request *orig_bar_rq;

struct mutex sysfs_lock;

#if defined(CONFIG_BLK_DEV_BSG)

struct bsg_class_device bsg_dev;

#endif

};

bio: describes how a block of data is filled or read and handed to the driver during a block transfer

struct bio {

sector_t bi_sector; /* device address in 512 byte

sectors */

struct bio *bi_next; /* request queue link */

struct block_device *bi_bdev;

unsigned long bi_flags; /* status, command, etc */

unsigned long bi_rw; /* bottom bits READ/WRITE,

* top bits priority

*/

unsigned short bi_vcnt; /* how many bio_vec's */

unsigned short bi_idx; /* current index into bvl_vec */

/* Number of segments in this BIO after

* physical address coalescing is performed.

*/

unsigned int bi_phys_segments;

unsigned int bi_size; /* residual I/O count */

/*

* To keep track of the max segment size, we account for the

* sizes of the first and last mergeable segments in this bio.

*/

unsigned int bi_seg_front_size;

unsigned int bi_seg_back_size;

unsigned int bi_max_vecs; /* max bvl_vecs we can hold */

unsigned int bi_comp_cpu; /* completion CPU */

atomic_t bi_cnt; /* pin count */

struct bio_vec *bi_io_vec; /* the actual vec list */

bio_end_io_t *bi_end_io;

void *bi_private;

#if defined(CONFIG_BLK_DEV_INTEGRITY)

struct bio_integrity_payload *bi_integrity; /* data integrity */

#endif

bio_destructor_t *bi_destructor; /* destructor */

/*

* We can inline a number of vecs at the end of the bio, to avoid

* double allocations for a small number of bio_vecs. This member

* MUST obviously be kept at the very end of the bio.

*/

struct bio_vec bi_inline_vecs[0];

};
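To make the role of `bi_io_vec`, `bi_vcnt` and `bi_idx` more concrete, here is a simplified user-space model. It is an assumption-laden sketch: `model_bio` and `model_bio_vec` are invented types, and a plain pointer replaces the `struct page *` that the kernel's `bio_vec` carries. It shows that the payload of a bio is the concatenation of its remaining segments.

```c
#include <assert.h>
#include <string.h>

struct model_bio_vec {
    unsigned char *base;     /* stands in for struct page *bv_page */
    unsigned int bv_len;     /* bytes in this segment */
    unsigned int bv_offset;  /* where the data starts inside base */
};

struct model_bio {
    unsigned long bi_sector;         /* device address in 512-byte sectors */
    unsigned short bi_vcnt;          /* number of segments */
    unsigned short bi_idx;           /* first segment not yet processed */
    struct model_bio_vec *bi_io_vec; /* the segment list */
};

/* Bytes still to transfer: the sum of the remaining segment lengths. */
static unsigned int model_bio_size(const struct model_bio *bio)
{
    unsigned int total = 0;
    unsigned short i;

    assert(bio->bi_idx <= bio->bi_vcnt);
    for (i = bio->bi_idx; i < bio->bi_vcnt; i++)
        total += bio->bi_io_vec[i].bv_len;
    return total;
}

/* Copy the remaining segments into one flat buffer; returns bytes copied. */
static unsigned int model_bio_gather(const struct model_bio *bio,
                                     unsigned char *dst, unsigned int cap)
{
    unsigned int copied = 0;
    unsigned short i;

    for (i = bio->bi_idx; i < bio->bi_vcnt; i++) {
        const struct model_bio_vec *bv = &bio->bi_io_vec[i];

        if (bv->bv_len > cap - copied)
            break;                      /* destination too small */
        memcpy(dst + copied, bv->base + bv->bv_offset, bv->bv_len);
        copied += bv->bv_len;
    }
    return copied;
}
```

A driver that walks a bio segment by segment does essentially what `model_bio_gather` does, except that each segment must first be mapped from its page.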

gendisk: generic disk

struct gendisk {

/* major, first_minor and minors are input parameters only,

* don't use directly. Use disk_devt() and disk_max_parts().

*/

int major; /* major number of driver */

int first_minor;

int minors; /* maximum number of minors, =1 for

* disks that can't be partitioned. */

char disk_name[DISK_NAME_LEN]; /* name of major driver */

char *(*devnode)(struct gendisk *gd, mode_t *mode);

/* Array of pointers to partitions indexed by partno.

* Protected with matching bdev lock but stat and other

* non-critical accesses use RCU. Always access through

* helpers.

*/

struct disk_part_tbl *part_tbl;

struct hd_struct part0;

const struct block_device_operations *fops;

struct request_queue *queue;

void *private_data;

int flags;

struct device *driverfs_dev; // FIXME: remove

struct kobject *slave_dir;

struct timer_rand_state *random;

atomic_t sync_io; /* RAID */

struct work_struct async_notify;

#ifdef CONFIG_BLK_DEV_INTEGRITY

struct blk_integrity *integrity;

#endif

int node_id;

};
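The `major`, `first_minor` and `minors` fields determine which device numbers a disk and its partitions occupy. The sketch below models that relationship in user space. The `KDEV_*` macros mirror the kernel's `MKDEV`/`MAJOR`/`MINOR` (20 minor bits); `model_disk` and `part_devt` are hypothetical names, and the kernel's extended device numbers are ignored.

```c
#include <assert.h>
#include <stddef.h>

#define KDEV_MINORBITS 20
#define KDEV_MINORMASK ((1u << KDEV_MINORBITS) - 1)
#define KDEV_MKDEV(ma, mi) (((ma) << KDEV_MINORBITS) | (mi))
#define KDEV_MAJOR(dev)    ((unsigned int)((dev) >> KDEV_MINORBITS))
#define KDEV_MINOR(dev)    ((unsigned int)((dev) & KDEV_MINORMASK))

struct model_disk {
    unsigned int major;        /* major number of the driver */
    unsigned int first_minor;  /* minor of the whole-disk device */
    unsigned int minors;       /* whole disk + max partitions; 1 = no partitions */
};

/* Device number of partition partno (0 means the whole disk).
 * Returns 0 on success, -1 if the disk has no slot for that partition. */
static int part_devt(const struct model_disk *d, unsigned int partno,
                     unsigned int *devt)
{
    assert(d != NULL && devt != NULL);
    if (partno >= d->minors)
        return -1;
    *devt = KDEV_MKDEV(d->major, d->first_minor + partno);
    return 0;
}
```

With `minors = 1`, as passed to `alloc_disk(1)` in the demo below, only the whole-disk device exists and no partition can be addressed.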

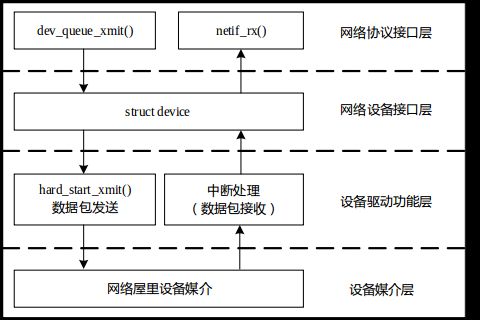

net

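Physical NIC: the hardware network card

Virtual NIC: interfaces such as eth0; use ifconfig to list the NICs

Network commands such as ping and ifconfig wrap the network API

APIs such as socket, bind, listen, connect, send and recv wrap the network driver

A network device is abstracted as a network interface that sends and receives packets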

Structures: net_device: a NIC device; sk_buff: the kernel buffer used to send and receive packets
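The pointer bookkeeping inside an sk_buff can be modelled in a few lines. This is a user-space sketch with invented names (`model_skb`, `model_skb_reserve`, `model_skb_put`), loosely following the `head`/`data`/`tail`/`end` pointers of the real structure. One deliberate difference: the model returns `NULL` on overflow, whereas the kernel's `skb_put` treats overflow as a fatal bug.

```c
#include <assert.h>
#include <stddef.h>

struct model_skb {
    unsigned char *head;  /* start of the allocated buffer */
    unsigned char *data;  /* start of the packet data */
    unsigned char *tail;  /* end of the packet data */
    unsigned char *end;   /* end of the allocated buffer */
    unsigned int len;     /* tail - data */
};

static void model_skb_init(struct model_skb *skb, unsigned char *storage,
                           size_t size)
{
    skb->head = skb->data = skb->tail = storage;
    skb->end = storage + size;
    skb->len = 0;
}

/* Leave n bytes of headroom; only valid while the buffer is empty. */
static void model_skb_reserve(struct model_skb *skb, unsigned int n)
{
    assert(skb->len == 0);
    assert((size_t)(skb->end - skb->tail) >= n);
    skb->data += n;
    skb->tail += n;
}

/* Extend the data area by n bytes and return where the new bytes go. */
static unsigned char *model_skb_put(struct model_skb *skb, unsigned int n)
{
    unsigned char *old_tail = skb->tail;

    if ((size_t)(skb->end - skb->tail) < n)
        return NULL;            /* not enough tailroom */
    skb->tail += n;
    skb->len += n;
    return old_tail;
}
```

Reserving 2 bytes before putting a 14-byte Ethernet header places the IP header at offset 16, which is why receive paths commonly call `skb_reserve(skb, 2)` before copying the frame in.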

dm9000

module_init(dm9000_init);

module_exit(dm9000_cleanup);

static int __init dm9000_init(void)

{

/* disable buzzer */

s3c_gpio_setpull(S5PV210_GPD0(2), S3C_GPIO_PULL_UP); // enable the pull-up

s3c_gpio_cfgpin(S5PV210_GPD0(2), S3C_GPIO_SFN(1)); // configure the pin as an output

gpio_set_value(S5PV210_GPD0(2), 0); // drive the output low

dm9000_power_int();

printk(KERN_INFO "%s Ethernet Driver, V%s\n", CARDNAME, DRV_VERSION);

return platform_driver_register(&dm9000_driver);

}

static void __exit dm9000_cleanup(void)

{

platform_driver_unregister(&dm9000_driver);

}

static struct platform_driver dm9000_driver = {

.driver = {

.name = "dm9000",

.owner = THIS_MODULE,

.pm = &dm9000_drv_pm_ops,

},

.probe = dm9000_probe,

.remove = __devexit_p(dm9000_drv_remove),

};

static int __devinit dm9000_probe(struct platform_device *pdev)

{

struct dm9000_plat_data *pdata = pdev->dev.platform_data;

struct board_info *db; /* Point a board information structure */

struct net_device *ndev;

const unsigned char *mac_src;

int ret = 0;

int iosize;

int i;

u32 id_val;

/* Init network device */

ndev = alloc_etherdev(sizeof(struct board_info));

if (!ndev) {

dev_err(&pdev->dev, "could not allocate device.\n");

return -ENOMEM;

}

SET_NETDEV_DEV(ndev, &pdev->dev);

dev_dbg(&pdev->dev, "dm9000_probe()\n");

/* setup board info structure */

db = netdev_priv(ndev);

db->dev = &pdev->dev;

db->ndev = ndev;

spin_lock_init(&db->lock);

mutex_init(&db->addr_lock);

INIT_DELAYED_WORK(&db->phy_poll, dm9000_poll_work);

db->addr_res = platform_get_resource(pdev, IORESOURCE_MEM, 0);

db->data_res = platform_get_resource(pdev, IORESOURCE_MEM, 1);

db->irq_res = platform_get_resource(pdev, IORESOURCE_IRQ, 0);

if (db->addr_res == NULL || db->data_res == NULL ||

db->irq_res == NULL) {

dev_err(db->dev, "insufficient resources\n");

ret = -ENOENT;

goto out;

}

db->irq_wake = platform_get_irq(pdev, 1);

if (db->irq_wake >= 0) {

dev_dbg(db->dev, "wakeup irq %d\n", db->irq_wake);

ret = request_irq(db->irq_wake, dm9000_wol_interrupt,IRQF_SHARED, dev_name(db->dev), ndev);

//lqm changed irq method.

//ret = request_irq(db->irq_wake, dm9000_wol_interrupt,IORESOURCE_IRQ_HIGHLEVEL, dev_name(db->dev), ndev);

if (ret) {

dev_err(db->dev, "cannot get wakeup irq (%d)\n", ret);

} else {

/* test to see if irq is really wakeup capable */

ret = set_irq_wake(db->irq_wake, 1);

if (ret) {

dev_err(db->dev, "irq %d cannot set wakeup (%d)\n",

db->irq_wake, ret);

ret = 0;

} else {

set_irq_wake(db->irq_wake, 0);

db->wake_supported = 1;

}

}

}

iosize = resource_size(db->addr_res);

db->addr_req = request_mem_region(db->addr_res->start, iosize,

pdev->name);

if (db->addr_req == NULL) {

dev_err(db->dev, "cannot claim address reg area\n");

ret = -EIO;

goto out;

}

db->io_addr = ioremap(db->addr_res->start, iosize);

if (db->io_addr == NULL) {

dev_err(db->dev, "failed to ioremap address reg\n");

ret = -EINVAL;

goto out;

}

iosize = resource_size(db->data_res);

db->data_req = request_mem_region(db->data_res->start, iosize,

pdev->name);

if (db->data_req == NULL) {

dev_err(db->dev, "cannot claim data reg area\n");

ret = -EIO;

goto out;

}

db->io_data = ioremap(db->data_res->start, iosize);

if (db->io_data == NULL) {

dev_err(db->dev, "failed to ioremap data reg\n");

ret = -EINVAL;

goto out;

}

/* fill in parameters for net-dev structure */

ndev->base_addr = (unsigned long)db->io_addr;

ndev->irq = db->irq_res->start;

/* ensure at least we have a default set of IO routines */

dm9000_set_io(db, iosize);

/* check to see if anything is being over-ridden */

if (pdata != NULL) {

/* check to see if the driver wants to over-ride the

* default IO width */

if (pdata->flags & DM9000_PLATF_8BITONLY)

dm9000_set_io(db, 1);

if (pdata->flags & DM9000_PLATF_16BITONLY)

dm9000_set_io(db, 2);

if (pdata->flags & DM9000_PLATF_32BITONLY)

dm9000_set_io(db, 4);

/* check to see if there are any IO routine

* over-rides */

if (pdata->inblk != NULL)

db->inblk = pdata->inblk;

if (pdata->outblk != NULL)

db->outblk = pdata->outblk;

if (pdata->dumpblk != NULL)

db->dumpblk = pdata->dumpblk;

db->flags = pdata->flags;

}

#ifdef CONFIG_DM9000_FORCE_SIMPLE_PHY_POLL

db->flags |= DM9000_PLATF_SIMPLE_PHY;

#endif

dm9000_reset(db);

/* try multiple times, DM9000 sometimes gets the read wrong */

for (i = 0; i < 8; i++) {

id_val = ior(db, DM9000_VIDL);

id_val |= (u32)ior(db, DM9000_VIDH) << 8;

id_val |= (u32)ior(db, DM9000_PIDL) << 16;

id_val |= (u32)ior(db, DM9000_PIDH) << 24;

if (id_val == DM9000_ID)

break;

dev_err(db->dev, "read wrong id 0x%08x\n", id_val);

}

if (id_val != DM9000_ID) {

dev_err(db->dev, "wrong id: 0x%08x\n", id_val);

ret = -ENODEV;

goto out;

}

/* Identify what type of DM9000 we are working on */

/* I/O mode */

db->io_mode = ior(db, DM9000_ISR) >> 6; /* ISR bit7:6 keeps I/O mode */

id_val = ior(db, DM9000_CHIPR);

dev_dbg(db->dev, "dm9000 revision 0x%02x , io_mode %02x \n", id_val, db->io_mode);

switch (id_val) {

case CHIPR_DM9000A:

db->type = TYPE_DM9000A;

break;

case 0x1a:

db->type = TYPE_DM9000C;

break;

default:

dev_dbg(db->dev, "ID %02x => defaulting to DM9000E\n", id_val);

db->type = TYPE_DM9000E;

}

/* from this point we assume that we have found a DM9000 */

/* driver system function */

ether_setup(ndev);

ndev->netdev_ops = &dm9000_netdev_ops;

ndev->watchdog_timeo = msecs_to_jiffies(watchdog);

ndev->ethtool_ops = &dm9000_ethtool_ops;

db->msg_enable = NETIF_MSG_LINK;

db->mii.phy_id_mask = 0x1f;

db->mii.reg_num_mask = 0x1f;

db->mii.force_media = 0;

db->mii.full_duplex = 0;

db->mii.dev = ndev;

db->mii.mdio_read = dm9000_phy_read;

db->mii.mdio_write = dm9000_phy_write;

mac_src = "eeprom";

/* try reading the node address from the attached EEPROM */

for (i = 0; i < 6; i += 2)

dm9000_read_eeprom(db, i / 2, ndev->dev_addr+i);

if (!is_valid_ether_addr(ndev->dev_addr) && pdata != NULL) {

mac_src = "platform data";

//memcpy(ndev->dev_addr, pdata->dev_addr, 6);

/* mac from bootloader */

memcpy(ndev->dev_addr, mac, 6);

}

if (!is_valid_ether_addr(ndev->dev_addr)) {

/* try reading from mac */

mac_src = "chip";

for (i = 0; i < 6; i++)

ndev->dev_addr[i] = ior(db, i+DM9000_PAR);

}

if (!is_valid_ether_addr(ndev->dev_addr))

dev_warn(db->dev, "%s: Invalid ethernet MAC address. Please "

"set using ifconfig\n", ndev->name);

platform_set_drvdata(pdev, ndev);

ret = register_netdev(ndev);

if (ret == 0)

printk(KERN_INFO "%s: dm9000%c at %p,%p IRQ %d MAC: %pM (%s)\n",

ndev->name, dm9000_type_to_char(db->type),

db->io_addr, db->io_data, ndev->irq,

ndev->dev_addr, mac_src);

return 0;

out:

dev_err(db->dev, "not found (%d).\n", ret);

dm9000_release_board(pdev, db);

free_netdev(ndev);

return ret;

}

struct platform_device s5p_device_dm9000 = {

.name = "dm9000",

.id = 0,

.num_resources = ARRAY_SIZE(s5p_dm9000_resources),

.resource = s5p_dm9000_resources,

.dev = {

.platform_data = &s5p_dm9000_platdata,

}

};
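The chip identification step in `dm9000_probe` reads four 8-bit registers and packs them into one 32-bit value. The user-space sketch below reproduces only that arithmetic. The helper names are hypothetical, and the `DM9000_ID` value is quoted from the driver header as I recall it, so treat it as an assumption to check against your kernel tree.

```c
#include <assert.h>
#include <stdint.h>

#define DM9000_ID 0x90000A46u  /* assumed value: product 0x9000, vendor 0x0A46 */

/* Pack VIDL, VIDH, PIDL, PIDH the same way the probe loop does. */
static uint32_t dm9000_make_id(uint8_t vidl, uint8_t vidh,
                               uint8_t pidl, uint8_t pidh)
{
    uint32_t id = vidl;

    id |= (uint32_t)vidh << 8;
    id |= (uint32_t)pidl << 16;
    id |= (uint32_t)pidh << 24;
    return id;
}

/* ISR bits 7:6 hold the I/O mode, so shifting right by 6 isolates them. */
static unsigned int dm9000_io_mode(uint8_t isr)
{
    unsigned int mode = (unsigned int)isr >> 6;

    assert(mode <= 3u);  /* two bits can only encode 0..3 */
    return mode;
}
```

The probe retries the read up to eight times because, as its comment notes, the DM9000 sometimes returns a wrong value; only a match with `DM9000_ID` lets initialization continue.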

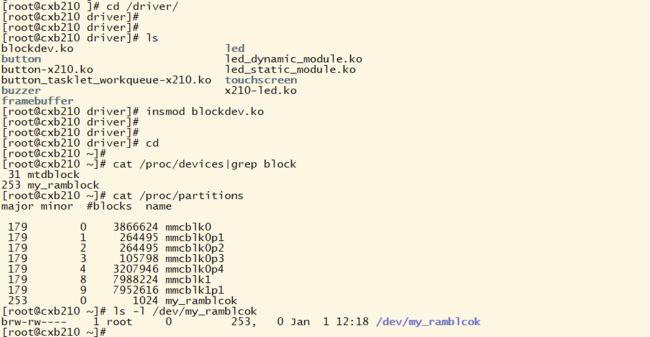

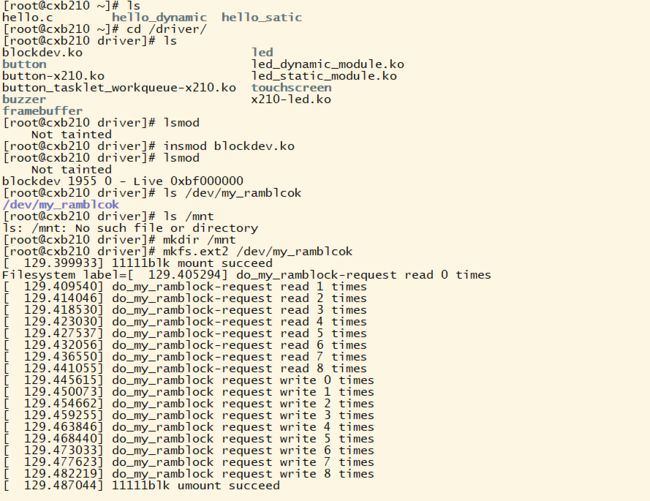

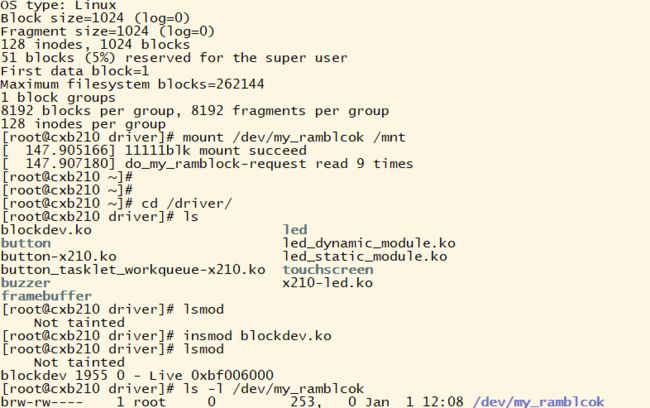

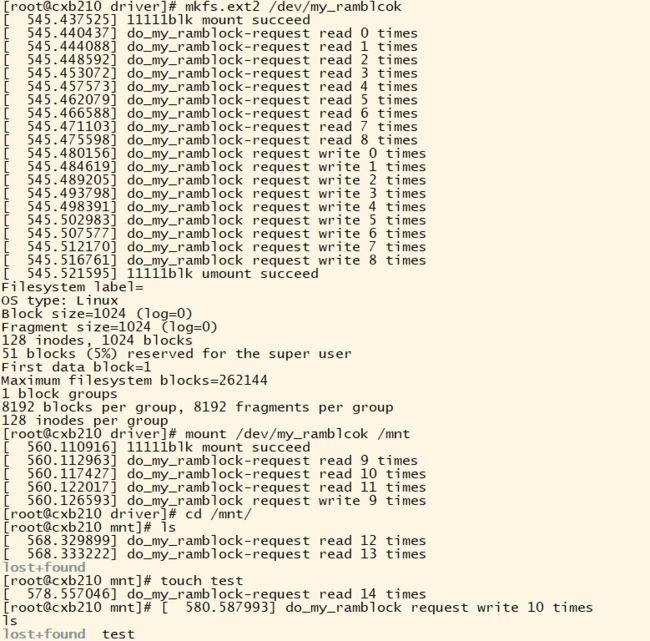

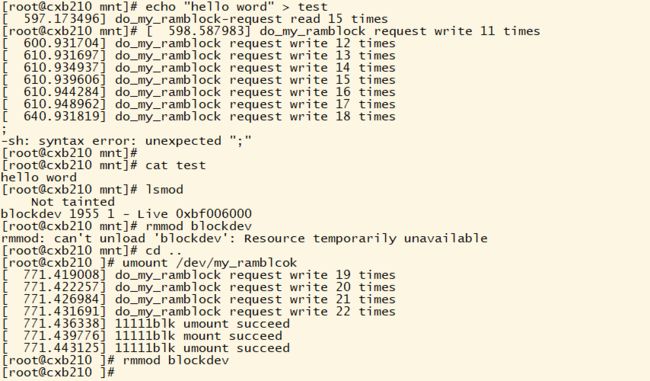

demo1:

Virtual block device driver
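The heart of this driver's request handler is a sector-to-offset translation followed by a `memcpy` in the requested direction. Before the full listing, here is a user-space model of that step. The names `ramdisk_transfer` and `enum dir` are hypothetical, and the bounds check is an addition of this sketch that the driver listing itself does not perform.

```c
#include <assert.h>
#include <string.h>

#define SECTOR_SIZE   512u
#define RAMBLOCK_SIZE (1024u * 1024u)   /* 1 MB, i.e. 2048 sectors */

enum dir { DIR_READ, DIR_WRITE };

static unsigned char ramdisk[RAMBLOCK_SIZE];  /* the pretend device */

/* Move len bytes between buf and the RAM disk, starting at the given sector.
 * Returns 0 on success, -1 if the range falls outside the disk. */
static int ramdisk_transfer(enum dir d, unsigned long sector,
                            void *buf, unsigned long len)
{
    unsigned long start;

    assert(buf != NULL);
    if (sector > RAMBLOCK_SIZE / SECTOR_SIZE)
        return -1;
    start = sector * SECTOR_SIZE;       /* sector number -> byte offset */
    if (len > RAMBLOCK_SIZE - start)
        return -1;

    if (d == DIR_READ)
        memcpy(buf, ramdisk + start, len);   /* device -> caller */
    else
        memcpy(ramdisk + start, buf, len);   /* caller -> device */
    return 0;
}
```

In the driver, `blk_rq_pos(req)` supplies the sector, `blk_rq_cur_bytes(req)` the length, and `rq_data_dir(req)` the direction.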

blockdev.c

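#include <linux/module.h> /* module_init, MODULE_LICENSE */

#include <linux/init.h>

#include <linux/kernel.h> /* printk() */

#include <linux/errno.h> /* error codes */

#include <linux/types.h>

#include <linux/fs.h> /* register_blkdev() */

#include <linux/slab.h> /* kzalloc(), kfree() */

#include <linux/string.h> /* memcpy() */

#include <linux/spinlock.h>

#include <linux/genhd.h> /* struct gendisk, alloc_disk(), add_disk() */

#include <linux/blkdev.h> /* request queue API */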

#define RAMBLOCK_SIZE (1024*1024) // 1 MB, i.e. 2048 sectors

static struct gendisk *my_ramblock_disk; // the disk device structure

static struct request_queue *my_ramblock_queue; // request queue

static DEFINE_SPINLOCK(my_ramblock_lock);

static int major;

static unsigned char *my_ramblock_buf; // memory backing the virtual block device

static void do_my_ramblock_request(struct request_queue *q)

{

struct request *req;

static int r_cnt = 0; // for the experiment: prints how the driver's reads and writes are scheduled

static int w_cnt = 0;

req = blk_fetch_request(q);

while (NULL != req)

{

unsigned long start = blk_rq_pos(req) *512;

unsigned long len = blk_rq_cur_bytes(req);

if(rq_data_dir(req) == READ)

{

// read request

memcpy(req->buffer, my_ramblock_buf + start, len); // read: copy from the RAM disk into the request buffer

printk("do_my_ramblock-request read %d times\n", r_cnt++);

}

else

{

// write request

memcpy(my_ramblock_buf + start, req->buffer, len); // write: copy from the request buffer into the RAM disk

printk("do_my_ramblock request write %d times\n", w_cnt++);

}

if(!__blk_end_request_cur(req, 0))

{

req = blk_fetch_request(q);

}

}

}

static int blk_ioctl(struct block_device *dev, fmode_t no, unsigned cmd, unsigned long arg)

{

return -ENOTTY;

}

static int blk_open (struct block_device *dev , fmode_t no)

{

printk("blk mount succeed\n");

return 0;

}

static int blk_release(struct gendisk *gd , fmode_t no)

{

printk("blk umount succeed\n");

return 0;

}

static const struct block_device_operations my_ramblock_fops =

{

.owner = THIS_MODULE,

.open = blk_open,

.release = blk_release,

.ioctl = blk_ioctl,

};

static int my_ramblock_init(void)

{

major = register_blkdev(0, "my_ramblock");

if (major < 0)

{

printk("fail to register my_ramblock\n");

return -EBUSY;

}

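// instantiate the disk

my_ramblock_disk = alloc_disk(1); // number of minors, i.e. number of partitions + 1

// allocate and set up the request queue, which provides read/write capability

my_ramblock_queue = blk_init_queue(do_my_ramblock_request, &my_ramblock_lock);

// set the disk attributes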

my_ramblock_disk->major = major;

my_ramblock_disk->first_minor = 0;

my_ramblock_disk->fops = &my_ramblock_fops;

sprintf(my_ramblock_disk->disk_name, "my_ramblock"); // /dev/name

my_ramblock_disk->queue = my_ramblock_queue;

set_capacity(my_ramblock_disk, RAMBLOCK_SIZE / 512);

/* hardware-related operations */

my_ramblock_buf = kzalloc(RAMBLOCK_SIZE, GFP_KERNEL);

add_disk(my_ramblock_disk); // register the disk (or a partition) with the block driver framework

return 0;

}

static void my_ramblock_exit(void)

{

unregister_blkdev(major, "my_ramblock");

del_gendisk(my_ramblock_disk);

put_disk(my_ramblock_disk);

blk_cleanup_queue(my_ramblock_queue);

kfree(my_ramblock_buf);

}

module_init(my_ramblock_init);

module_exit(my_ramblock_exit);

MODULE_LICENSE("GPL");

Makefile

KERN_DIR = /root/kernel

obj-m += blockdev.o

all:

make -C $(KERN_DIR) M=`pwd` modules

cp:

cp *.ko /root/rootfs/driver -f

.PHONY: clean

clean:

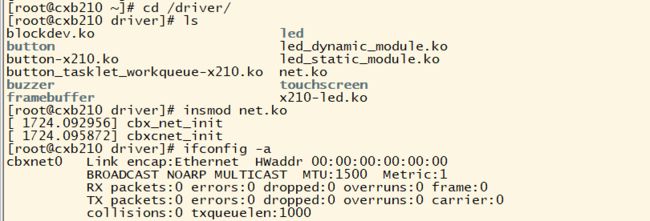

make -C $(KERN_DIR) M=`pwd` modules clean测试示例:

demo2:

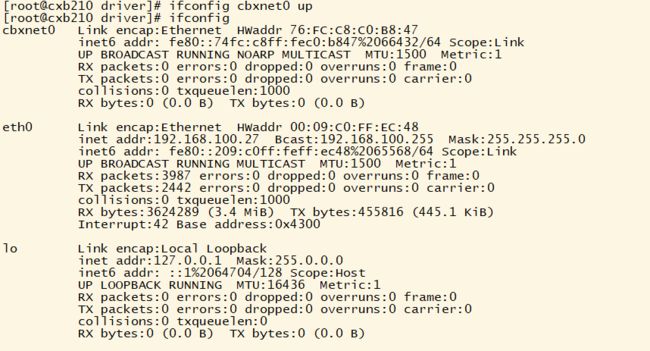

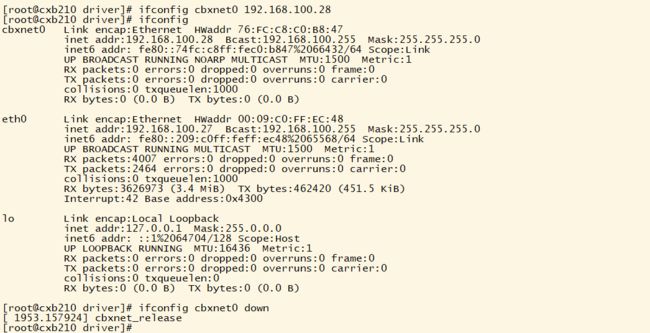

Virtual NIC driver
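This driver either fills the MAC address with a fixed byte or asks the kernel for a random one. The rules that make an address usable are simple bit tests, modelled below in user space. The function names are hypothetical; the logic follows what the kernel helpers `is_valid_ether_addr` and `random_ether_addr` are documented to do (unicast, non-zero, and for random addresses the locally administered bit set).

```c
#include <assert.h>
#include <stdint.h>

#define ETH_ALEN 6  /* bytes in an Ethernet address */

/* Bit 0 of the first byte marks a multicast (or broadcast) address. */
static int mac_is_multicast(const uint8_t *addr)
{
    return addr[0] & 0x01;
}

static int mac_is_zero(const uint8_t *addr)
{
    int i;

    for (i = 0; i < ETH_ALEN; i++)
        if (addr[i] != 0)
            return 0;
    return 1;
}

/* Usable as a station address: neither multicast nor all zeroes. */
static int mac_is_valid(const uint8_t *addr)
{
    assert(addr != 0);
    return !mac_is_multicast(addr) && !mac_is_zero(addr);
}

/* Turn arbitrary bytes into a locally administered unicast address. */
static void mac_make_local_unicast(uint8_t *addr)
{
    addr[0] &= 0xfe;  /* clear the multicast bit */
    addr[0] |= 0x02;  /* set the locally administered bit */
}
```

The fixed address `aa:aa:aa:aa:aa:aa` used when `MAC_AUTO` is not defined passes this check, since `0xaa` has bit 0 clear.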

net.c

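#include <linux/module.h>

#include <linux/init.h>

#include <linux/kernel.h> /* printk() */

#include <linux/slab.h> /* kmalloc() */

#include <linux/errno.h> /* error codes */

#include <linux/types.h> /* size_t */

#include <linux/interrupt.h> /* mark_bh */

#include <linux/in.h>

#include <linux/netdevice.h> /* struct device, and other headers */

#include <linux/etherdevice.h> /* eth_type_trans */

#include <linux/ip.h> /* struct iphdr */

#include <linux/tcp.h> /* struct tcphdr */

#include <linux/skbuff.h>

#include <linux/if_ether.h>

#include <linux/spinlock.h>

#include <linux/string.h>

#include <linux/platform_device.h>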

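// define this macro if a random MAC address is wanted

#define MAC_AUTO

static struct net_device *cbxnet_devs;

// private data of the network device, stored in net_device->ml_priv

struct cbxnet_priv {

struct net_device_stats stats; // useful statistics

int status; // device state: whether a packet has just been sent or received

int rx_packetlen; // length of the received packet

u8 *rx_packetdata; // received data

int tx_packetlen; // length of the packet being sent

u8 *tx_packetdata; // data being sent

struct sk_buff *skb; // socket buffer; data travels between the network layers in this structure

spinlock_t lock; // spinlock

};

// open function of the network interface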

int cbxnet_open(struct net_device *dev)

{

printk("cbxnet_open\n");

#ifndef MAC_AUTO

int i;

for (i=0; i<6; i++)

dev->dev_addr[i] = 0xaa;

#else

random_ether_addr(dev->dev_addr); // random source address

#endif

netif_start_queue(dev); // start the transmit queue so that data can be sent

return 0;

}

int cbxnet_release(struct net_device *dev)

{

printk("cbxnet_release\n");

// called through the stop method when the interface is shut down; no more data may be sent after this

netif_stop_queue(dev);

return 0;

}

// receive function

void cbxnet_rx(struct net_device *dev, int len, unsigned char *buf)

{

struct sk_buff *skb;

struct cbxnet_priv *priv = (struct cbxnet_priv *) dev->ml_priv;

skb = dev_alloc_skb(len+2); // allocate a socket buffer and initialize skb->data, skb->tail and skb->head

if (!skb) {

printk("gecnet rx: low on mem - packet dropped\n");

priv->stats.rx_dropped++;

return;

}

skb_reserve(skb, 2); /* align IP on 16B boundary */

memcpy(skb_put(skb, len), buf, len); // skb_put extends the data area by len bytes; memcpy then fills it

/* Write metadata, and then pass to the receive level */

skb->dev = dev;

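skb->protocol = eth_type_trans(skb, dev); // returns the protocol number

skb->ip_summed = CHECKSUM_UNNECESSARY; // no checksum verification here

priv->stats.rx_packets++; // one more packet received

priv->stats.rx_bytes += len; // add the length of the received packet

printk("cbxnet rx \n");

netif_rx(skb); // tell the kernel a packet has arrived and pass it up as a socket buffer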

return;

}

// does the actual packet transmission

// simulates sending a packet from one network to another

void cbxnet_hw_tx(char *buf, int len, struct net_device *dev)

{

struct net_device *dest; // destination device; net_device holds the key information about a network interface and is the core of a network driver

struct cbxnet_priv *priv;

if (len < sizeof(struct ethhdr) + sizeof(struct iphdr))

{

printk("cbxnet: Hmm... packet too short (%i octets)\n", len);

return;

}

dest = cbxnet_devs;

priv = (struct cbxnet_priv *)dest->ml_priv; // priv of the destination device

priv->rx_packetlen = len;

priv->rx_packetdata = buf;

printk("cbxnet tx \n");

dev_kfree_skb(priv->skb);

}

// transmit function

int cbxnet_tx(struct sk_buff *skb, struct net_device *dev)

{

int len;

char *data;

struct cbxnet_priv *priv = (struct cbxnet_priv *)dev->ml_priv;

if (skb == NULL)

{

printk("net_device %p, skb %p\n", dev, skb);

return 0;

}

len = skb->len < ETH_ZLEN ? ETH_ZLEN : skb->len; // ETH_ZLEN is the minimum length of a transmitted packet

data = skb->data; // data portion of the packet to send

priv->skb = skb;

cbxnet_hw_tx(data, len, dev); // the real transmit function

return 0;

}

// device initialization function

int cbxnet_init(struct net_device *dev)

{

printk("cbxcnet_init\n");

ether_setup(dev); // fill in the Ethernet-specific fields of the device structure

/* keep the default flags, just add NOARP */

dev->flags |= IFF_NOARP;

// allocate memory for priv

dev->ml_priv = kmalloc(sizeof(struct cbxnet_priv), GFP_KERNEL);

if (dev->ml_priv == NULL)

return -ENOMEM;

memset(dev->ml_priv, 0, sizeof(struct cbxnet_priv));

spin_lock_init(&((struct cbxnet_priv *)dev->ml_priv)->lock);

return 0;

}

static const struct net_device_ops cbxnet_netdev_ops = {

.ndo_open = cbxnet_open, // bring the NIC up; corresponds to ifconfig xx up

.ndo_stop = cbxnet_release, // bring the NIC down; corresponds to ifconfig xx down

.ndo_start_xmit = cbxnet_tx, // start transmitting a packet

.ndo_init = cbxnet_init, // initialize the NIC hardware

};

static void cbx_plat_net_release(struct device *dev)

{

printk("cbx_plat_net_release\n");

}

static int __devinit cbx_net_probe(struct platform_device *pdev)

{

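int result=0;

cbxnet_devs = alloc_etherdev(sizeof(struct net_device));

if (!cbxnet_devs)

return -ENOMEM;

cbxnet_devs->netdev_ops = &cbxnet_netdev_ops;

strcpy(cbxnet_devs->name, "cbxnet0");

if ((result = register_netdev(cbxnet_devs))) {

printk("cbxnet: error %i registering device \"%s\"\n", result, cbxnet_devs->name);

kfree(cbxnet_devs->ml_priv); /* NULL-safe: undo the allocation made in cbxnet_init */

free_netdev(cbxnet_devs); /* release the device if registration failed */

return result;

}

return 0;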

}

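static int __devexit cbx_net_remove(struct platform_device *pdev) // device removal hook

{

unregister_netdev(cbxnet_devs); // unregister first so the stack stops using the device

kfree(cbxnet_devs->ml_priv);

free_netdev(cbxnet_devs); // free the net_device allocated by alloc_etherdev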

return 0;

}

static struct platform_device cbx_net= {

.name = "cbx_net",

.id = -1,

.dev = {

.release = cbx_plat_net_release,

},

};

static struct platform_driver cbx_net_driver = {

.probe = cbx_net_probe,

.remove = __devexit_p(cbx_net_remove),

.driver = {

.name ="cbx_net",

.owner = THIS_MODULE,

},

};

static int __init cbx_net_init(void)

{

printk("cbx_net_init \n");

platform_device_register(&cbx_net);

return platform_driver_register(&cbx_net_driver );

}

static void __exit cbx_net_cleanup(void)

{

platform_driver_unregister(&cbx_net_driver );

platform_device_unregister(&cbx_net);

}

module_init(cbx_net_init);

module_exit(cbx_net_cleanup);

MODULE_LICENSE("GPL");

Makefile

KERN_DIR = /root/kernel

obj-m += net.o

all:

make -C $(KERN_DIR) M=`pwd` modules

cp:

cp *.ko /root/rootfs/driver -f

.PHONY: clean

clean:

make -C $(KERN_DIR) M=`pwd` modules clean

Test example: