torchvision.datasets

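Contents

- torchvision
  - Introduction
  - torchvision.datasets
- Usage
  - MNIST Handwritten Digit Recognition
    - torchvision.datasets.MNIST
    - Creating the Datasets
    - Creating the DataLoaders
    - Building the Model
    - Training
    - Testing
    - Visualizing the Results
  - OxfordIIITPet Cat and Dog Breed Recognition
    - torchvision.datasets.OxfordIIITPet
    - Creating the Datasets
    - Creating the DataLoaders
    - Building the Model
    - Trainer
    - Training
    - Testing
- Dataset Categories
  - Image Classification Datasets
  - Image Detection and Segmentation Datasets
  - Optical Flow Datasets
  - Stereo Matching Datasets
  - Image Captioning Datasets
  - Video Classification Datasets
  - Video Prediction Datasets
- Related Links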

torchvision

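Introduction

torchvision is part of the PyTorch project. It provides popular datasets, model architectures and common image transformations for computer vision. In short, it is organized mainly into three modules:

- torchvision.datasets
- torchvision.models
- torchvision.transforms

There are other modules as well, but this article covers only the first one: datasets.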

torchvision.datasets

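This module ships with many built-in datasets. Every dataset is a subclass of torch.utils.data.Dataset, which means it implements __getitem__ and __len__. Any of them can therefore be passed to a torch.utils.data.DataLoader, which can load samples in parallel using worker processes.

```python
import torch
import torchvision

# root: the directory where you keep the dataset.
# ImageNet is not downloaded automatically; its archives must already be in root.
imagenet_data = torchvision.datasets.ImageNet('path/to/imagenet_root/')
data_loader = torch.utils.data.DataLoader(imagenet_data,
                                          batch_size=4,
                                          shuffle=True,
                                          num_workers=0)
```

All datasets share a similar API. Each accepts two arguments, transform and target_transform, which transform the input and the target respectively. You can also build your own datasets using the provided base classes.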
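To make that shared convention concrete, here is a framework-free sketch of a map-style dataset. It implements `__len__` and `__getitem__`, and it applies the optional `transform` to the input and `target_transform` to the label. The class `ToyDataset` and its toy data are hypothetical. A real dataset would subclass `torch.utils.data.Dataset`, or a torchvision base class such as `VisionDataset`, so that a DataLoader can consume it.

```python
from typing import Any, Callable, List, Optional, Tuple


class ToyDataset:
    """Hypothetical map-style dataset mimicking the transform/target_transform convention."""

    def __init__(
        self,
        samples: List[Tuple[Any, Any]],
        transform: Optional[Callable] = None,
        target_transform: Optional[Callable] = None,
    ) -> None:
        self.samples = samples
        self.transform = transform
        self.target_transform = target_transform

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, index: int) -> Tuple[Any, Any]:
        x, y = self.samples[index]
        if self.transform is not None:
            x = self.transform(x)          # transform acts on the input only
        if self.target_transform is not None:
            y = self.target_transform(y)   # target_transform acts on the label only
        return x, y


# Usage: scale toy "pixels" to [0, 1] and one-hot encode labels (3 classes)
ds = ToyDataset(
    samples=[([0, 255], 0), ([128, 64], 2)],
    transform=lambda pixels: [p / 255 for p in pixels],
    target_transform=lambda label: [1 if i == label else 0 for i in range(3)],
)
print(len(ds))  # 2
print(ds[1])
```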

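Usage

MNIST Handwritten Digit Recognition

torchvision.datasets.MNIST

Parameters:

- root: root directory of the dataset. If download is True, the files are downloaded into root/MNIST/raw.

- train: if True, the dataset is built from the training files (the training set). Otherwise it is built from the test files (the test set).

- download: if True, the files are downloaded into root. Files that already exist are not downloaded again. The download mirrors and files, with their MD5 checksums, are:

```python
mirrors = [
    "http://yann.lecun.com/exdb/mnist/",
    "https://ossci-datasets.s3.amazonaws.com/mnist/",
]

resources = [
    ("train-images-idx3-ubyte.gz", "f68b3c2dcbeaaa9fbdd348bbdeb94873"),  # training images
    ("train-labels-idx1-ubyte.gz", "d53e105ee54ea40749a09fcbcd1e9432"),  # training labels
    ("t10k-images-idx3-ubyte.gz", "9fb629c4189551a2d022fa330f9573f3"),   # test images
    ("t10k-labels-idx1-ubyte.gz", "ec29112dd5afa0611ce80d1b7f02629c"),   # test labels
]
```

- transform (optional): a function/transform that takes a PIL image and returns a transformed version.

- target_transform (optional): a function/transform applied to the target. Here the target is the class label.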
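The checksums in resources let the downloader detect corrupted or incomplete files, and torchvision performs a similar check internally. The sketch below shows such a check using only Python's hashlib. The helper names `md5_of_file` and `is_intact` are hypothetical and are not torchvision functions.

```python
import hashlib
import os


def md5_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the hex MD5 digest of a file, reading it in chunks to bound memory use."""
    md5 = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            md5.update(chunk)
    return md5.hexdigest()


def is_intact(path: str, expected_md5: str) -> bool:
    """True if the file exists and its MD5 matches the expected checksum."""
    return os.path.isfile(path) and md5_of_file(path) == expected_md5


# Usage with a hypothetical local copy of the first MNIST training file:
# is_intact("data/MNIST/raw/train-images-idx3-ubyte.gz", "f68b3c2dcbeaaa9fbdd348bbdeb94873")
```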

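Creating the Datasets

Dataset information:

- Training set: 60,000 samples, each of size 1×28×28
- Test set: 10,000 samples, each of size 1×28×28
- Classes: the 10 handwritten digits 0-9

```python
from torchvision import datasets
from torchvision.transforms import ToTensor

train_data = datasets.MNIST(
    root='data',
    train=True,
    transform=ToTensor(),  # PIL image -> float tensor of shape [1, 28, 28]
    download=True,
)
test_data = datasets.MNIST(
    root='data',
    train=False,
    transform=ToTensor(),
    download=True,
)
```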
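ToTensor() maps each grayscale 28×28 PIL image, with pixel values 0-255, to a float tensor of shape 1×28×28 with values in [0.0, 1.0]. The pure-Python sketch below imitates that conversion for one image stored as nested lists. The function `to_chw_floats` is hypothetical, and it ignores multi-channel images and other pixel modes.

```python
from typing import List


def to_chw_floats(gray: List[List[int]]) -> List[List[List[float]]]:
    """Mimic ToTensor() for one grayscale image: HxW values in 0..255 -> 1xHxW floats in [0, 1]."""
    return [[[pixel / 255.0 for pixel in row] for row in gray]]


image = [[0] * 28 for _ in range(28)]  # hypothetical all-black 28x28 image
image[14][14] = 255                     # one white pixel near the centre
tensor_like = to_chw_floats(image)
print(len(tensor_like), len(tensor_like[0]), len(tensor_like[0][0]))  # 1 28 28
print(tensor_like[0][14][14])  # 1.0
```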

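Creating the DataLoaders

```python
from torch.utils.data import DataLoader

loaders = {
    'train': DataLoader(train_data,
                        batch_size=100,
                        shuffle=True,   # reshuffle the training data every epoch
                        num_workers=1),
    'test': DataLoader(test_data,
                       batch_size=100,
                       shuffle=False,  # evaluation does not need shuffling
                       num_workers=1),
}
```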
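With batch_size=100, one pass over the 60,000 training images takes 600 iterations, and the 10,000 test images take 100. The plain-Python sketch below imitates what a shuffling loader does with sample indices. It permutes them and cuts them into batches, and the final batch is smaller unless it is dropped (DataLoader's `drop_last`). The function `batch_indices` is hypothetical and only illustrates the idea. DataLoader itself also collates samples into tensors and manages worker processes.

```python
import math
import random
from typing import Iterator, List


def batch_indices(n: int, batch_size: int, shuffle: bool,
                  drop_last: bool = False, seed: int = 0) -> Iterator[List[int]]:
    """Yield one list of sample indices per batch (illustrative only)."""
    order = list(range(n))
    if shuffle:
        random.Random(seed).shuffle(order)  # a real loader draws a new order every epoch
    for start in range(0, n, batch_size):
        batch = order[start:start + batch_size]
        if drop_last and len(batch) < batch_size:
            break
        yield batch


print(math.ceil(60000 / 100), math.ceil(10000 / 100))  # 600 100
batches = list(batch_indices(250, 100, shuffle=True))
print([len(b) for b in batches])  # [100, 100, 50]
print(len(list(batch_indices(250, 100, shuffle=True, drop_last=True))))  # 2
```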

Building the Model

```python
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class CNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        # x: [B, C, H, W] -> [100, 1, 28, 28]
        x = F.relu(F.max_pool2d(self.conv1(x), 2))                   # -> [100, 10, 12, 12]
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))  # -> [100, 20, 4, 4]
        x = x.view(-1, 320)                                          # -> [100, 320]
        x = F.relu(self.fc1(x))                                      # -> [100, 50]
        x = F.dropout(x, training=self.training)                     # -> [100, 50]
        x = self.fc2(x)                                              # -> [100, 10]
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax itself,
        # so a softmax here would effectively be applied twice.
        return x
```
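The 320 in nn.Linear(320, 50) comes from tracking the feature-map size through the network. For a convolution or pooling layer with kernel size k, padding p and stride s, an input of side n gives an output of side ⌊(n + 2p − k)/s⌋ + 1. The helper below is hypothetical and written in pure Python. It walks the 28×28 input through the layers of CNN. max_pool2d(x, 2) uses kernel 2, and its stride defaults to the kernel size.

```python
def out_size(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a conv/pool layer (dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


side = 28
side = out_size(side, kernel=5)            # conv1: 28 -> 24
side = out_size(side, kernel=2, stride=2)  # max_pool2d(..., 2): 24 -> 12
side = out_size(side, kernel=5)            # conv2: 12 -> 8
side = out_size(side, kernel=2, stride=2)  # max_pool2d(..., 2): 8 -> 4
flat_features = 20 * side * side           # conv2 produces 20 channels
print(side, flat_features)                 # 4 320
```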

Training

```python
import torch

device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = CNN().to(device)
optimizer = optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()


def train(epoch):
    model.train()  # enable dropout
    for batch_idx, (data, target) in enumerate(loaders['train']):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = loss_fn(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % 20 == 0:
            print(f"Train Epoch: {epoch} [{batch_idx * len(data)}/{len(loaders['train'].dataset)} "
                  f"({100. * batch_idx / len(loaders['train']):.0f}%)]\tLoss: {loss.item():.6f}")


for epoch in range(10):
    train(epoch)
```
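nn.CrossEntropyLoss expects raw logits. Internally it applies log-softmax and then the negative log-likelihood. If the model already outputs softmax probabilities, the loss applies a second softmax to values confined to [0, 1]. That keeps the loss high and weakens the gradients. The pure-Python sketch below computes the loss for a single sample with a numerically stable log-sum-exp. The function name is hypothetical.

```python
import math
from typing import List


def cross_entropy_from_logits(logits: List[float], target: int) -> float:
    """-log(softmax(logits)[target]), computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


logits = [2.0, 0.5, -1.0]
print(round(cross_entropy_from_logits(logits, 0), 4))

# Feeding probabilities instead of logits ("double softmax") gives a larger loss
probs = [math.exp(z) / sum(math.exp(v) for v in logits) for z in logits]
print(round(cross_entropy_from_logits(probs, 0), 4))

# Even a perfect one-hot probability vector over 10 classes cannot push the loss below ln(1 + 9/e)
print(round(cross_entropy_from_logits([1.0] + [0.0] * 9, 0), 4))
```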

Testing

```python
def test():
    model.eval()  # disable dropout
    test_loss = 0
    correct = 0
    with torch.inference_mode():
        for data, target in loaders['test']:
            data, target = data.to(device), target.to(device)
            output = model(data)
            # loss_fn returns the mean over the batch, so weight it by the batch size
            test_loss += loss_fn(output, target).item() * data.size(0)
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(loaders['test'].dataset)
    print(f"\nTest set: Average loss: {test_loss:.4f}, Accuracy: {correct}/{len(loaders['test'].dataset)} "
          f"({100. * correct / len(loaders['test'].dataset):.0f}%)\n")


test()
```

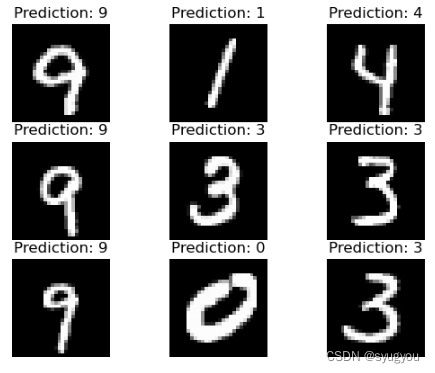
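nn.CrossEntropyLoss averages over the batch by default (reduction='mean'). A per-sample test loss therefore needs each batch mean weighted by its batch size, or a loss created with reduction='sum'. Summing the batch means and dividing by the dataset size instead shrinks the result by roughly the batch size. A quick pure-Python check with made-up per-sample losses:

```python
from typing import List

# Hypothetical per-sample losses, grouped into batches of 4, 4 and 2
batches: List[List[float]] = [[0.2, 0.4, 0.6, 0.8], [1.0, 1.0, 1.0, 1.0], [3.0, 5.0]]
batch_means = [sum(b) / len(b) for b in batches]  # what loss_fn(...).item() returns per batch
n_samples = sum(len(b) for b in batches)

true_mean = sum(sum(b) for b in batches) / n_samples
weighted = sum(m * len(b) for m, b in zip(batch_means, batches)) / n_samples
naive = sum(batch_means) / n_samples  # sum of batch means divided by the dataset size

print(round(true_mean, 4), round(weighted, 4), round(naive, 4))
```

Weighting stays exact even when the last batch is smaller than the others, as the third batch is here.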

Visualizing the Results

```python
import matplotlib.pyplot as plt
import numpy as np
import matplotlib.gridspec as gridspec

# Create a 3x3 grid of subplots
gs = gridspec.GridSpec(3, 3)
fig = plt.figure()
model.eval()
for i in range(9):
    # Pick a random test sample
    data, target = test_data[np.random.randint(len(test_data))]
    data = data.unsqueeze(0).to(device)  # add a batch dimension: [1, 1, 28, 28]
    with torch.inference_mode():
        output = model(data)
    prediction = output.argmax(dim=1, keepdim=True).item()
    image = data.squeeze(0).squeeze(0).cpu().numpy()  # back to [28, 28]
    ax = fig.add_subplot(gs[i])
    ax.set_title(f"Prediction: {prediction}")
    ax.imshow(image, cmap='gray')
    ax.axis('off')
plt.show()
```

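OxfordIIITPet Cat and Dog Breed Recognition

torchvision.datasets.OxfordIIITPet

Parameters:

- root: root directory where the dataset is stored
- split: which split to use, either trainval (the default) or test
- target_types: which annotation to return. The dataset provides several kinds, and passing a list returns a tuple of the requested targets:
  - category: one of 37 pet breed classes (12 cat breeds and 25 dog breeds)
  - segmentation: the segmentation trimap of the image (foreground, background and boundary)
- transform: a transform applied to the image
- target_transform: a transform applied to the target
- download: whether to download the dataset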

Creating the Datasets

```python
import datetime

import numpy as np
from tqdm import tqdm
import matplotlib.pyplot as plt
import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as T
from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay
from sklearn.model_selection import train_test_split

# Training data (the default split is 'trainval')
raw_train_dataset = torchvision.datasets.OxfordIIITPet(root='./data/oxford-pets', download=True)
# Test data
raw_test_dataset = torchvision.datasets.OxfordIIITPet(root='./data/oxford-pets', split='test', download=True)
print(len(raw_train_dataset))
print(len(raw_test_dataset))


class preprocessDataset(torch.utils.data.Dataset):
    """Wraps a dataset and applies `transform` to each image when it is accessed."""

    def __init__(self, dataset, transform):
        self.dataset = dataset
        self.transform = transform

    def __len__(self):
        return len(self.dataset)

    def __getitem__(self, index):
        image, target = self.dataset[index]
        augmented_image = self.transform(image)
        return augmented_image, target


# The backbone is torchvision's built-in ResNet-34, so use its default pretrained weights
weights = torchvision.models.resnet.ResNet34_Weights.DEFAULT
# The preprocessing transforms that match these weights (resize, crop, to tensor, normalize)
preprocess = weights.transforms()

# Apply the preprocessing
train_dataset = preprocessDataset(raw_train_dataset, preprocess)
test_dataset = preprocessDataset(raw_test_dataset, preprocess)

# Hold out 20% of the training data for validation. Splitting indices keeps loading lazy,
# so images are read and preprocessed only when a batch needs them.
train_idx, val_idx = train_test_split(list(range(len(train_dataset))), test_size=0.2, random_state=0)
val_dataset = torch.utils.data.Subset(train_dataset, val_idx)
train_dataset = torch.utils.data.Subset(train_dataset, train_idx)
```
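For an 80/20 split, scikit-learn's train_test_split rounds the held-out part up (ceil(test_size * n)) and gives the rest to training. The plain-Python sketch below mirrors that size arithmetic on sample indices. The resulting index lists can be wrapped with torch.utils.data.Subset. The function `split_indices` is hypothetical, and its shuffle does not reproduce scikit-learn's exact permutation for a given random_state.

```python
import math
import random
from typing import List, Tuple


def split_indices(n: int, test_size: float, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle range(n) and split it; the held-out part gets ceil(test_size * n) items."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = math.ceil(test_size * n)
    return indices[n_test:], indices[:n_test]


train_idx, val_idx = split_indices(1001, test_size=0.2)
print(len(train_idx), len(val_idx))  # 800 201
```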

Creating the DataLoaders

```python
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=64, shuffle=True)
val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=64, shuffle=False)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=64, shuffle=False)
```

Building the Model

```python
class ResNet_Classifier(torch.nn.Module):
    def __init__(self, weights, freeze_weights, dropout):
        super().__init__()
        # Use torchvision's built-in ResNet-34
        resnet = torchvision.models.resnet34(weights=weights)
        out_features = 512  # size of the ResNet-34 features that fed its original fc layer
        # Freeze the pretrained parameters
        if freeze_weights:
            for param in resnet.parameters():
                param.requires_grad = False
        # Remove the final fully connected layer, keeping everything up to global average pooling
        base_model = nn.Sequential(*list(resnet.children())[:-1])
        self.layers = nn.Sequential(
            base_model,
            nn.Flatten(),                  # [B, 512, 1, 1] -> [B, 512]
            nn.Linear(out_features, 512),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(512, 37),            # one output per pet breed
        )

    def forward(self, x):
        outputs = self.layers(x)
        return outputs
```
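The new classification head (Linear(512, 512), then ReLU, Dropout and Linear(512, 37)) is small next to the ResNet-34 backbone, which has roughly 21.8 million parameters. A Linear(in, out) layer with bias has in·out weights plus out biases. The short calculation below applies that rule. With freeze_weights=True, these would be the only trainable parameters. The helper name is hypothetical.

```python
def linear_params(in_features: int, out_features: int) -> int:
    """Trainable parameters in nn.Linear(in_features, out_features) with bias."""
    return in_features * out_features + out_features


head = linear_params(512, 512) + linear_params(512, 37)  # Flatten/ReLU/Dropout have none
print(head)  # 281637
```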

Trainer

```python
def save_model(model, epoch, name=''):
    now = datetime.datetime.now()
    # Shift the timestamp by +5:30 (presumably converting UTC to Indian Standard Time)
    now = now + datetime.timedelta(hours=5, minutes=30)
    date_time = now.strftime("%Y-%m-%d_%H-%M-%S")
    torch.save(model.state_dict(), f'model_{name}_epoch[{epoch}]_{date_time}.pt')


def evaluate_model(model, dataloader):
    model.eval()  # Set the model to evaluation mode
    total_loss = 0.0
    correct_predictions = 0
    total_samples = 0
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    with torch.no_grad():
        for inputs, labels in dataloader:
            inputs, labels = inputs.to(device), labels.to(device)
            # Forward pass (criterion is defined in the Training section)
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            # Update the metrics
            total_loss += loss.item()
            _, predicted = torch.max(outputs, 1)
            correct_predictions += (predicted == labels).sum().item()
            total_samples += labels.size(0)
    average_loss = total_loss / len(dataloader)  # mean of the per-batch losses
    accuracy = correct_predictions / total_samples
    return average_loss, accuracy


def early_stop(val_loss, val_history, patience):
    """Return True once val_loss has not improved for `patience` consecutive epochs."""
    if val_loss < val_history['best']:
        val_history['best'] = val_loss
        val_history['no_improvement'] = 0
    else:
        val_history['no_improvement'] += 1
    return val_history['no_improvement'] >= patience


def train_model(model, dataloader, num_epochs, train_transform, device, early_stop_patience,
                history, val_history, data_augment=False):
    # Relies on the global optimizer, scheduler, criterion and val_loader
    current_lr = optimizer.param_groups[0]['lr']
    best_val = 0
    for epoch in range(num_epochs):
        model.train()  # evaluate_model() switches to eval mode, so switch back every epoch
        total_loss = 0
        correct_predictions = 0
        total_samples = 0
        for images, labels in dataloader:
            # Move the batch to the GPU (if available)
            images, labels = images.to(device), labels.to(device)
            # Random data augmentation. One random transform is drawn per call,
            # so every image in the batch receives the same augmentation.
            if data_augment:
                images = train_transform(images)
            optimizer.zero_grad()              # clear the gradients
            outputs = model(images)            # forward pass
            loss = criterion(outputs, labels)
            loss.backward()                    # backward pass
            optimizer.step()                   # update the weights
            total_loss += loss.item()
            _, predicted = torch.max(outputs, 1)
            correct_predictions += (predicted == labels).sum().item()
            total_samples += labels.size(0)
        accuracy = correct_predictions / total_samples
        total_loss = total_loss / len(dataloader)
        val_loss, val_accuracy = evaluate_model(model, val_loader)
        # Save the best model so far, once validation accuracy exceeds 92%
        if best_val < val_accuracy and val_accuracy > 0.92:
            best_val = val_accuracy
            save_model(model, epoch + 1, str(int(10000 * val_accuracy)))
        # Report progress every epoch
        print(f'Epoch [{epoch+1}/{num_epochs}], LR: {current_lr}, ', end='')
        print(f'train_loss: {total_loss:.5f}, train_acc: {accuracy:.5f}, ', end='')
        print(f'val_loss: {val_loss:.5f}, val_acc: {val_accuracy:.5f}')
        if early_stop(val_loss, val_history, early_stop_patience):
            print(f"Stopped due to no improvement for {val_history['no_improvement']} epochs")
            save_model(model, epoch + 1)
            break
        # Update the learning rate based on the validation loss
        scheduler.step(val_loss)
        current_lr = optimizer.param_groups[0]['lr']
        history['train_loss'].append(total_loss)
        history['val_loss'].append(val_loss)
        history['train_acc'].append(accuracy)
        history['val_acc'].append(val_accuracy)
        # Save a checkpoint every 50 epochs
        if (epoch + 1) % 50 == 0:
            save_model(model, epoch + 1)
```
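early_stop counts consecutive epochs without a new best validation loss. It signals a stop once that count reaches patience. Because the function is plain Python, its behaviour can be checked without a model. The copy below uses the same logic, and the loss sequence is made up.

```python
def early_stop(val_loss, val_history, patience):
    """Same logic as the trainer: True after `patience` epochs without improvement."""
    if val_loss < val_history['best']:
        val_history['best'] = val_loss
        val_history['no_improvement'] = 0
    else:
        val_history['no_improvement'] += 1
    return val_history['no_improvement'] >= patience


val_history = {'best': 1e9, 'no_improvement': 0}
losses = [1.0, 0.9, 0.95, 0.96, 0.97, 0.5]  # hypothetical validation losses
stopped_at = None
for epoch, loss in enumerate(losses):
    if early_stop(loss, val_history, patience=3):
        stopped_at = epoch
        break
print(stopped_at, val_history)  # 4 {'best': 0.9, 'no_improvement': 3}
```

Training stops at epoch index 4, so the later improvement to 0.5 is never seen. This is the trade-off a larger patience, such as the 20 epochs used here, is meant to soften.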

Training

```python
num_epochs = 500            # maximum number of epochs
learning_rate = 0.015       # initial learning rate
dropout = 0.4
data_augment = True
early_stop_patience = 20    # epochs without improvement before stopping early
lr_factor = 0.4             # factor by which the learning rate is reduced
lr_scheduler_patience = 4   # epochs without improvement before reducing the learning rate

# Data augmentation: each call applies one of these transforms, chosen with the given probabilities
train_transform = T.RandomChoice([
    T.RandomRotation(20),
    T.ColorJitter(brightness=0.2, hue=0.1, saturation=0.1),
    T.RandomHorizontalFlip(0.2),
    T.RandomPerspective(distortion_scale=0.2),
], [0.3, 0.3, 0.3, 0.1])

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = ResNet_Classifier(weights=weights, freeze_weights=False, dropout=dropout)
model = model.to(device)
model = torch.nn.DataParallel(model)  # spread batches over all visible GPUs
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)
criterion = torch.nn.CrossEntropyLoss()
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, factor=lr_factor,
                                                       patience=lr_scheduler_patience)
history = {'train_loss': [], 'val_loss': [], 'train_acc': [], 'val_acc': []}
val_history = {'best': 1e9, 'no_improvement': 0}
train_model(model, train_loader, num_epochs, train_transform, device, early_stop_patience,
            history, val_history, data_augment)
```
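ReduceLROnPlateau multiplies the learning rate by factor once the monitored value has gone more than patience consecutive epochs without improving. The sketch below is a simplified re-implementation of that rule for mode='min' with the default relative threshold of 1e-4. It ignores cooldown and min_lr. It is only meant to show how lr_factor = 0.4 and lr_scheduler_patience = 4 interact, and it is not a substitute for the PyTorch class. The function `plateau_schedule` is hypothetical.

```python
from typing import List


def plateau_schedule(losses: List[float], lr: float, factor: float,
                     patience: int, threshold: float = 1e-4) -> List[float]:
    """Learning rate in effect after each epoch (simplified sketch of the plateau rule)."""
    best = float('inf')
    bad_epochs = 0
    lrs = []
    for loss in losses:
        if loss < best * (1 - threshold):  # relative improvement in 'min' mode
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
        if bad_epochs > patience:          # strictly more than `patience` bad epochs
            lr *= factor
            bad_epochs = 0
        lrs.append(lr)
    return lrs


# Hypothetical validation losses: one improvement, then a long plateau
losses = [1.0, 0.8, 0.8, 0.8, 0.8, 0.8, 0.8]
print(plateau_schedule(losses, lr=0.015, factor=0.4, patience=4))
```

With patience 4, the fifth epoch without improvement (the last one here) triggers the first reduction, from 0.015 to 0.006.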

Testing

```python
loss, acc = evaluate_model(model, test_loader)
print(f'test_loss: {loss}, test_acc: {acc*100:.3f}%')
```

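Dataset Categories

Image Classification Datasets

For the full list of datasets, see the Datasets page of the Torchvision 0.16 documentation (pytorch.org).

| Dataset (partial list) | Description |
|---|---|
| CIFAR10 | 10-class image classification dataset |
| FashionMNIST | Fashion-MNIST clothing images (10 classes) |
| Flickr8k | Image captioning dataset |
| Flowers102 | Flower dataset with 102 classes |
| MNIST | Handwritten digits dataset |
| StanfordCars | Car dataset (196 classes) |
| Food101 | Food dataset with 101 classes |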

Image Detection and Segmentation Datasets

| Dataset (partial list) | Description |
|---|---|
| CocoDetection | MS COCO detection dataset |
| VOCSegmentation | Pascal VOC segmentation dataset |
| VOCDetection | Pascal VOC detection dataset |

Optical Flow Datasets

| Dataset (partial list) | Description |
|---|---|
| HD1K | Optical flow dataset for autonomous driving |
| FlyingChairs | FlyingChairs optical flow dataset |

Stereo Matching Datasets

| Dataset (partial list) | Description |
|---|---|
| CarlaStereo | Carla simulator data linked in the CREStereo GitHub repo. |
| Kitti2012Stereo | KITTI dataset from the 2012 stereo evaluation benchmark. |
| SceneFlowStereo | Dataset interface for Scene Flow datasets. |
| SintelStereo | Sintel Stereo Dataset. |
| InStereo2k | InStereo2k dataset. |
| ETH3DStereo | ETH3D Low-Res Two-View dataset. |

Image Captioning Datasets

| Dataset | Description |
|---|---|
| CocoCaptions | MS COCO Captions dataset |

Video Classification Datasets

| Dataset (partial list) | Description |
|---|---|
| HMDB51 | Human action dataset |
| UCF101 | Action recognition dataset |

Video Prediction Datasets

| Dataset (partial list) | Description |
|---|---|
| MovingMNIST | Moving handwritten digits dataset |

Related Links

torchvision — Torchvision 0.16 documentation (pytorch.org)

nithinbadi/handwritten-digits-predictor: Predicting handwritten digits using PyTorch on Google Colab. Using the MNIST dataset loaded from torchvision (github.com)

limalkasadith/OxfordIIITPet-classification: This repository contains a PyTorch implementation for classifying the Oxford IIIT Pet Dataset using KNN and ResNet. The goal is to differentiate the results obtained using these two approaches. (github.com)