Scraping Douban JSON Data with Python

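Douban is where most people get their first scraping practice, but its JSON data is a different story from the regular HTML pages. Job-listing sites also serve their data as JSON, so the same technique carries over to them.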

This post scrapes the content of the following page, year by year:

选电影 / Explore Movies (douban.com)

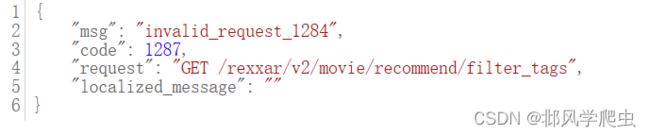

The endpoint scraped in this walkthrough is https://m.douban.com/rexxar/api/v2/movie/recommend/filter_tags?selected_categories=%7B%7D
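The `selected_categories=%7B%7D` parameter in this URL is just a percent-encoded `{}`. As a sketch, the paged `recommend` URLs requested later could also be built with `urllib.parse.urlencode` instead of an f-string. The helper name `build_recommend_url` is hypothetical. The query parameters are copied from the URL the spider requests.

```python
from urllib.parse import unquote, urlencode

RECOMMEND_URL = 'https://m.douban.com/rexxar/api/v2/movie/recommend'


def build_recommend_url(tag: str, start: int, count: int = 20) -> str:
    """Build one page of the recommend endpoint with the same query
    parameters the spider uses; urlencode takes care of the escaping."""
    params = {
        'refresh': 0,
        'start': start,
        'count': count,
        'selected_categories': '{}',  # encoded as %7B%7D
        'uncollect': 'false',
        'tags': tag,
    }
    return f'{RECOMMEND_URL}?{urlencode(params)}'


print(unquote('%7B%7D'))               # {}
print(build_recommend_url('2023', 20))
```

Because `urlencode` escapes every value, tags that contain non-ASCII characters are encoded as well.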

If you open it directly in the browser, you will most likely see something like this:

JSON data from the Guazi used-car site (二手瓜子网):

Note the difference between the two.

1. The universal first step of any crawler: request headers

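    import csv
    import os
    import random
    import time

    import requests
    from bs4 import BeautifulSoup


    class Spider:
        # Browser-like headers; the Referer is the "Explore Movies" page that normally calls this API
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36 Edg/119.0.0.0',
            'Referer': 'https://movie.douban.com/explore'
        }

2. Request the JSON data and iterate over the years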

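Iterate over the year tags and locate each year's movie list. For every year, call detail_parse to scrape the page behind each movie link, then call save_csv to write that year's results to a file.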
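The lookup `html.get('tags')[0].get('tags')` and the paging loop are easier to follow on a concrete value. The sketch below runs the same steps on a made-up, heavily trimmed response. Only the nesting (a `tags` list whose first element holds another `tags` list) is taken from the spider's code. The entries, the item `uri`, and the helper `to_dispatch_url` are hypothetical.

```python
import json

# Made-up response; only the nesting mirrors what index() reads
sample = json.loads('''
{"tags": [{"tags": ["skip-0", "skip-1", "2023", "2022", "2021"]}]}
''')

year_tags = sample.get('tags')[0].get('tags')
selected = year_tags[2:]               # index() skips the first two entries

# index() requests 5 pages of 20 items per tag: up to 100 movies per year
offsets = [page * 20 for page in range(5)]


def to_dispatch_url(uri: str) -> str:
    """Keep the part after 'douban.com/' and append it to the dispatch URL,
    mirroring the string handling in index()."""
    return 'https://www.douban.com/doubanapp/dispatch?uri=' + uri.split('douban.com/')[-1]


print(selected)   # ['2023', '2022', '2021']
print(offsets)    # [0, 20, 40, 60, 80]
print(to_dispatch_url('douban://douban.com/movie/1234567'))
# https://www.douban.com/doubanapp/dispatch?uri=movie/1234567
```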

        def index(self):
            # Fetch the filter tags; the first tag group is the one iterated as years
            url = 'https://m.douban.com/rexxar/api/v2/movie/recommend/filter_tags?selected_categories=%7B%7D'
            html = requests.get(url=url, headers=self.headers).json()
            tags = html.get('tags')[0].get('tags')
            # Skip the first two entries, as in the original code
            for tag in tags[2:]:
                movie_id = 0
                all_data = []
                # 5 pages x 20 movies = up to 100 movies per year
                for page in range(5):
                    parse_url = f'https://m.douban.com/rexxar/api/v2/movie/recommend?refresh=0&start={page * 20}&count=20&selected_categories=%7B%7D&uncollect=false&tags={tag}'
                    tags_html = requests.get(url=parse_url, headers=self.headers).json()
                    for item in tags_html['items']:
                        movie_id += 1
                        # Turn the item's uri into a web URL via Douban's dispatch endpoint
                        uri = item['uri'].split('douban.com/')[-1]
                        detail_url = 'https://www.douban.com/doubanapp/dispatch?uri=' + uri
                        self.detail_parse(detail_url, all_data, movie_id)
                # One CSV file per year
                self.save_csv(tag, all_data)

3. The detail_parse function: scraping each movie's content

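For each movie, request its URL and turn the response into a BeautifulSoup object. Then extract the fields, cleaning the data along the way with the fill_null and pipei helper functions.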
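Two spots in `detail_parse` are fragile. First, `select_one` returns `None` when a selector matches nothing. Second, `on_time.split('-')[0]` only yields a clean year when the release date contains a dash. Below is a minimal stdlib sketch of safer alternatives. The helper names `text_or_empty` and `extract_year` are hypothetical, a regex is swapped in for the original split, and the sample strings are made up.

```python
import re
from types import SimpleNamespace


def text_or_empty(element) -> str:
    """Return the element's text, or '' when select_one found nothing (None)."""
    return element.text.strip() if element is not None else ''


def extract_year(on_time: str) -> str:
    """Take the first four-digit number in the release-date field, or ''."""
    match = re.search(r'\d{4}', on_time)
    return match.group(0) if match else ''


# SimpleNamespace stands in for a BeautifulSoup Tag: both expose .text
print(text_or_empty(SimpleNamespace(text=' 8.8 ')))          # 8.8
print(extract_year('2010-09-01(中国大陆)/2010-07-16(美国)'))  # 2010
print(extract_year('1994(中国大陆)'))                         # 1994
```

For `'1994(中国大陆)'` the original split would return the whole string, while the regex still yields `1994`.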

        def detail_parse(self, detail_url, all_data, movie_id):
            self.random_sleep()
            html = requests.get(url=detail_url, headers=self.headers).content
            html = BeautifulSoup(html, 'lxml')
            movies_name = html.select_one('#content > h1 > span:nth-child(1)').text
            # Split the #info block into lines and match the fields we need
            region_language = html.select_one('#info').get_text().split('\n')
            director, editor, actor, movie_type, region, language, on_time, duration = self.pipei(region_language[1:])
            year = on_time.split('-')[0]
            # Empty-value handling: guard elements that may be missing so one odd page does not crash the run
            score_el = html.select_one('#interest_sectl > div.rating_wrap.clearbox > div.rating_self.clearfix > strong')
            score = score_el.text if score_el is not None else ''
            element = html.select_one(
                '#interest_sectl > div.rating_wrap.clearbox > div.rating_self.clearfix > div > div.rating_sum > a > span')
            comments = element.text if element is not None else ''
            five_star, four_star, three_star, two_star, one_star = self.fill_null(
                html.select('#interest_sectl > div.rating_wrap.clearbox > div.ratings-on-weight > div'))
            all_data.append(
                [movie_id, movies_name, year, director, editor, actor, movie_type, region, language, on_time, duration,
                 score, comments, five_star, four_star, three_star, two_star, one_star])
            return all_data

4. Handling missing values

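Inspecting the scraped data shows that every movie has a percentage for each of the one- to five-star ratings, but some of these are missing, so they need to be filled in.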
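Because `detail_parse` unpacks exactly five values, `fill_null` must always return five. The same all-or-nothing rule can be checked without any HTML by working on a plain list, where `None` stands for a missing `span.rating_per`. The function `fill_null_values` and the percentages are hypothetical.

```python
def fill_null_values(percentages, n=5):
    """Plain-list stand-in for fill_null: always return exactly n strings."""
    if len(percentages) < n:
        # Fewer than n rating rows: blank every column, as fill_null does
        return [''] * n
    # Enough rows: keep each value, blanking the missing ones
    return [p if p is not None else '' for p in percentages[:n]]


print(fill_null_values(['40.1%', '35.2%', '20.0%', '3.5%', '1.2%']))
print(fill_null_values(['40.1%', None, '20.0%', '3.5%', '1.2%']))
print(fill_null_values([]))
```

Blanking all five columns when rows are missing, rather than padding at the end, avoids shifting a percentage into the wrong star column.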

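        def fill_null(self, rating_rows):
            # Read the five rating rows in the order detail_parse unpacks them (five_star first)
            col = []
            try:
                for i in range(5):
                    element = rating_rows[i].select_one('div > span.rating_per')
                    col.append(element.text if element is not None else '')
                return col
            except IndexError:
                # Fewer than five rows: return five blanks so the CSV columns stay aligned
                return ['', '', '', '', '']

5. Extracting the required fields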
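`pipei` (pinyin for 匹配, "match") turns the lines of the `#info` block into a `{label: value}` dict and then picks the eight labels it needs. Running the same idea on made-up lines shows the result. `match_fields` is a hypothetical name. It uses `str.partition(':')`, a small swap from the original `split(':')`, so that a value containing a colon would stay intact.

```python
FIELDS = ['导演', '编剧', '主演', '类型', '制片国家/地区', '语言', '上映日期', '片长']


def match_fields(lines):
    """Map 'label: value' lines to a dict, then return the FIELDS values in order."""
    table = {}
    for line in lines:
        label, _, value = line.partition(':')
        # Same cleanup as pipei: drop all spaces from label and value
        table[label.replace(' ', '')] = value.replace(' ', '')
    # Labels absent from the page become empty strings
    return [table.get(field, '') for field in FIELDS]


# Made-up #info lines; there is deliberately no 主演 (cast) line
sample_lines = [
    '导演: 张三',
    '编剧: 李四 / 王五',
    '类型: 剧情 / 爱情',
    '制片国家/地区: 中国大陆',
    '语言: 汉语普通话',
    '上映日期: 2010-09-01(中国大陆)',
    '片长: 120分钟',
    '',
]
print(match_fields(sample_lines))
# ['张三', '李四/王五', '', '剧情/爱情', '中国大陆', '汉语普通话', '2010-09-01(中国大陆)', '120分钟']
```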

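        def pipei(self, columns):
            # Labels to keep: director, screenwriter, cast, genre, country/region, language, release date, runtime
            temp_col, info = ['导演', '编剧', '主演', '类型', '制片国家/地区', '语言', '上映日期', '片长'], []
            # Map "label: value" lines to {label: value}, dropping all spaces
            temp = dict(zip([i.split(':')[0].replace(' ', '') for i in columns],
                            [i.split(':')[-1].replace(' ', '') for i in columns]))
            for col in temp_col:
                # Labels missing from the page become empty strings
                info.append(temp.get(col, ""))
            return info

6. Saving to a CSV file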
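The CSV step can be tried in memory with `io.StringIO`. The sketch writes a header plus rows, using `writerows` in place of a loop over `writerow`, and then reads the result back. It also shows that a field containing a comma is quoted automatically. The three columns and the row values are a made-up subset for brevity.

```python
import csv
import io

header = ['movie_id', 'movies_name', 'year']
all_data = [
    [1, '示例电影', '2010'],
    [2, 'Title, with a comma', '2011'],
]

buf = io.StringIO(newline='')
writer = csv.writer(buf)
writer.writerow(header)
writer.writerows(all_data)   # same effect as calling writerow for each row

text = buf.getvalue()
print(text)

# Read it back: every value comes back as a string
rows = list(csv.reader(io.StringIO(text, newline='')))
print(rows)
```

If the files will be opened in Excel, `encoding='utf-8-sig'` (which writes a BOM) is a common choice so the Chinese text displays correctly.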

        def save_csv(self, tags, all_data):
            with open(f'./FilmCrawl/{tags}.csv', 'w', newline='', encoding='utf-8') as fp:
                csv_write = csv.writer(fp)
                # Header row, in the same order as the fields appended in detail_parse
                csv_write.writerow(
                    ['movie_id', 'movies_name', 'year', 'director', 'editor', 'actor', 'movie_type', 'region', 'language',
                     'on_time', 'duration', 'score', 'comments', 'five_star', 'four_star', 'three_star', 'two_star',
                     'one_star'])
                csv_write.writerows(all_data)

7. Random sleep

Sleep for a random interval before every request to reduce the chance of being flagged as a bot.
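Below is a standalone variant of `random_sleep` that takes the random source and the sleep function as parameters. This lets the 1 to 5 second range be checked without actually waiting. The parameter names `rng` and `sleep` are hypothetical additions.

```python
import random
import time


def random_sleep(low=1.0, high=5.0, rng=random, sleep=time.sleep):
    """Sleep for a uniformly random number of seconds in [low, high]
    and return the delay that was used."""
    delay = rng.uniform(low, high)
    sleep(delay)
    return delay


# In a test, record the delay instead of sleeping
recorded = []
delay = random_sleep(rng=random.Random(42), sleep=recorded.append)
print(recorded == [delay], 1.0 <= delay <= 5.0)   # True True
```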

        def random_sleep(self):
            # Wait a uniformly random 1 to 5 seconds before each request
            sleep_time = random.uniform(1, 5)
            time.sleep(sleep_time)

8. Running the whole spider

    if __name__ == '__main__':
        # Create the output directory if it does not exist yet
        os.makedirs('./FilmCrawl', exist_ok=True)
        spider = Spider()
        spider.index()