5-AM Project: day8 Practical data science with Python 2

3 SQL and Built-in File Handling Modules in Python Introduction

Loading, reading, and writing files with base Python

When we talk about "base" Python, we are talking about built-in components of the Python software. We already saw one of these components with the math module in Chapter 2, Getting Started with Python. Here, we'll first cover using the built-in open() function and the methods of file objects to read and write basic text files.

Opening a file and reading its contents

We will sometimes find ourselves wanting to read a plain text file, or maybe another type of text file, such as an HTML file. We can do this with the built-in open function:

file = open(file='textfile.txt', mode='r')

text = file.readlines()

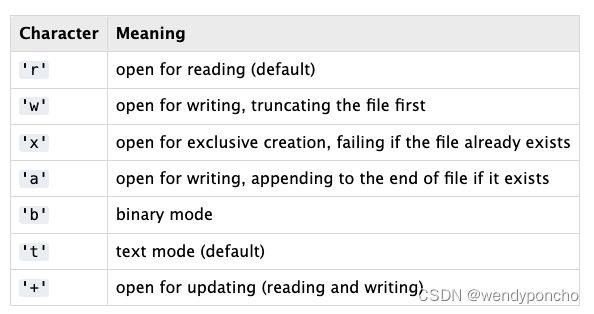

print(text)In the preceding example, we first open a file called textfile.txt in "read" mode. The file argument provides the path to the file.

We have given a "relative" path in the preceding snippet, meaning it looks for textfile.txt in the current directory in which we are running this Python code. We can also provide an "absolute" path with the full file path, with the syntax file=r'C:\Users\username\Documents\textfile.txt'.

Notice we prefix the absolute path string with r, just like we saw in the previous chapter. The r instructs Python to interpret the strings as a "raw" string, and means all characters are interpreted literally. Without the r, the backslash is part of special characters, like the \n newline character sequence.

However, a better way to do this is using a with statement, like so:

with open(file='textfile.txt', mode='r') as f:

text = f.readlines()

print(text)

The first line of the preceding code example opens the file with the open() function, and assigns it to the f variable. We can use the opened file within the indented lines after the with statement, like on the second line where we read all lines from the file.

Using the built-in JSON module

JavaScript Object Notation (JSON) is a text-based format for representing and storing data and is mostly used for web applications. We can use JSON to save data to a text file or to transfer data to and from an Application Programming Interface (API).

APIs allow us to send a request to a web service to accomplish various tasks. An example is sending some text (such as an email message, for example) to an API and getting the sentiment (positive, negative, or neutral) returned to us. JSON looks very much like a dictionary in Python. For example, here is an example dictionary in Python that stores data regarding how many books and articles we've read, and what their subjects include:

data_dictionary = {

'books': 12,

'articles': 100,

'subjects': ['math',

'programming',

'data science']} We can convert this to a JSON-format string in Python by first importing the built-in json module, then using json.dumps():

import json

json_string = json.dumps(data_dictionary)

print(json_string)The preceding json_string will look like this:

'{"books": 12, "articles": 100, "subjects": ["math", "programming", "data science"]}'

If we want to convert that string back to a dictionary, we can do so with json.loads():

data_dict = json.loads(json_string)

print(data_dict)

This will print out the Python dictionary we started with:

{'books': 12,

'articles': 100,

'subjects': ['math', 'programming', 'data science']} If we are sending some data to an API with Python, json.dumps() may come in handy. On the other hand, if we want to save JSON data to a text file, we can use json.dump():

with open('reading.json', 'w') as f:

json.dump(data_dictionary, f)

In the preceding example, we first open the file using a with statement, and the file object is stored in the f variable. Then we use json.dump(), giving it two arguments: the dictionary (data_dictionary) and the file object that has been opened for writing (f).

Saving credentials or data in a Python file

Sometimes we will want to save credentials or other data in a .py file. This can be useful for saving authentication credentials for an API, for example. We can do this by creating a .py file with some variables, such as the credentials.py file in this book's GitHub repository, which looks like this:

username = 'datasci'

password = 'iscool'

Loading these saved credentials is easy: we import the module (a Python .py file, named credentials.py here) and then we can access the variables:

import credentials as creds

print(f'username: {creds.username}\npassword: {creds.password}')

We first import the file/module with the import statement, and alias it as creds. Then we print out a formatted string (using f-string formatting), which displays the username and password variables from the file.

Recall that f-strings can incorporate variables into a string by putting Python code within curly brackets. Notice that we access these variables with the syntax creds.username – first the module name, followed by a period, and then the variable. We also include a line break character (\n) so the username and password print on separate lines.

Of course, we can use this method to save any arbitrary data that can be stored in a Python object in a Python file, but it's not typically used beyond a few small variables such as a username and password. For bigger Python objects (such as a dictionary with millions of elements), we can use other packages and modules such as the pickle module.

Saving Python objects with pickle

Sometimes we'll find ourselves wanting to directly save a Python object, such as a dictionary or some other Python object. This can happen if we are running Python code for data processing or collection and want to store the results for later analysis. An easy way to do this is using the built-in pickle module. As with many things in Python, pickle is a little humorous – it's like pickling vegetables, but we are pickling data instead. For example, using the same data dictionary we used earlier (with data on books and articles we've read), we can save this dictionary object to a pickle file like so:

import pickle as pk

data_dictionary = {

'books': 12,

'articles': 100,

'subjects': ['math',

'programming',

'data science']}

with open('readings.pk', 'wb') as f:

pk.dump(data_dictionary, f)

We first import the pickle module, and alias it as pk. Then we create our data_dictionary object containing our data. Finally, we open a file called readings.pk, and write the data_dictionary to the file with the pickle.dump() function (which is pk.dump() since we aliased pickle as pk). In our open() function, we are setting the mode argument to the value 'wb'. This stands for "write binary," meaning we are writing data to the file in binary format (0s and 1s, which are actually represented in hexadecimal format with pickle). Since pickle saves data in binary format, we must use this 'wb' argument to write data to a pickle file.

Once we have data in a pickle file, we can load it into Python like so:

with open('readings.pk', 'rb') as f:

data = pk.load(f) Note that we are using 'rb' now for our mode argument, meaning "read binary." Since the file is in binary format, we must specify this when opening the file. Once we open the file, we then load the contents of the file into the data variable with pickle.load(f) (which is pk.load(f) in the preceding code since we aliased pickle as pk). Pickle is a great way to save almost any Python object quickly and easily. However, for specific, organized data, it can be better to store it in a SQL database, which we'll cover now.

Using SQLite and SQL

SQL, or Structured Query Language, is a programming language for interacting with data in relational databases (sometimes called RDBMS, meaning Relational Database Management System). SQL has been around since the 1970s and continues to be used widely today. You will likely interact with a SQL database or use SQL queries sooner or later at your workplace if you haven't already. Its advantages in speed and momentum from decades of use sustain its widespread utilization today.

NoSQL databases are an alternative to SQL databases, and are used in situations where we might prefer to have more flexibility in our data model, or a different model completely (such as a graph database). For example, if we aren't sure of all the columns or fields we might collect with our data (and they could change frequently over time), a NoSQL document database such as MongoDB may be better than SQL. For big data, NoSQL used to have an advantage over SQL because NoSQL could scale horizontally (that is, adding more nodes to a cluster). Now, SQL databases have been developed to scale easily, and we can also use cloud services such as AWS Redshift and Google's BigQuery to scale SQL databases easily.

Much of the world's data is stored in relational databases using SQL. It's important to understand the basics of SQL so we can retrieve our own data for our data science work. We can interact directly with SQL databases through the command line or GUI tools and through Python packages such as pandas and SQLAlchemy. But first, we will practice SQL with SQLite databases, since SQLite3 comes installed with Python. SQLite is what it sounds like – a lightweight version of SQL. It lacks the richer functionality of other SQL databases such as MySQL, but is faster and easier to use. However, it can still hold a lot of data, with a maximum potential database size of around 281 TB for SQLite databases.

If we have a SQLite file, we can interact with it via the command line or other software such as SQLiteStudio (currently at SQLiteStudio). However, we can also use it with Python using the built-in sqlite3 module. To get started with this method, we import sqlite3, then connect to the database file, and create a cursor:

import sqlite3

connection = sqlite3.connect('chinook.db')

cursor = connection.cursor()

The string argument to sqlite3.connect() should be either the relative or absolute path to the database file. The relative path means it is relative to our current working directory (where we are running the Python code from). If we want to use an absolute path, we could supply something like the following:

connection = sqlite3.connect(r'C:\Users\my_username\github\Practical-Data-Science-with-Python\Chapter3\chinook.db')

Notice that the string has the r character before it. As we mentioned earlier, this stands for raw string and means it treats special characters (such as the backslash, \) as literal characters. With raw strings, the backslash is simply a backslash, and this allows us to copy and paste file paths from the Windows File Explorer to Python.

The preceding cursor is what allows us to run SQL commands. For example, to run the SELECT command that we already tried in the SQLite shell, we can use the cursor:

cursor.execute('SELECT * FROM artists LIMIT 5;')

cursor.fetchall()

We use the fetchall function to retrieve all the results from the query. There are also fetchone and fetchmany functions we could use instead, which are described in Python's sqlite3 documentation. These functions retrieve one record (fetchone) and several records (fetchmany, which retrieves a number of records we specify).

When we start executing bigger SQL queries, it helps to format them differently. We can break up a SQL command into multiple lines like so:

query = """

SELECT *

FROM artists

LIMIT 5;

"""

cursor.execute(query)

cursor.fetchall()

We are using a multi-line string for the query variable with the triple quotes and putting each SQL command on a separate line. Then we give this string variable, query, to the cursor.execute() function. Finally, we retrieve the results with fetchall.

When selecting data, it can be useful to sort it by one of the columns. Let's look at the invoices table and get the biggest invoices:

cursor.execute(

"""SELECT Total, InvoiceDate

FROM invoices

ORDER BY Total DESC

LIMIT 5;"""

)

cursor.fetchall()Another useful SQL command is WHERE, which allows us to filter data. It's similar to an if statement in Python. We can filter with Boolean conditions, such as equality (==), inequality (!=), or other comparisons (including less than, <, and greater than, >). Here is an example of getting invoices from Canada :

cursor.execute(

"""SELECT Total, BillingCountry

FROM invoices

WHERE BillingCountry == "Canada"

LIMIT 5;"""

)

cursor.fetchall()As a part of WHERE, we can filter by pattern matching using LIKE. This is similar to regular expressions, which we will cover in Chapter 18, Working with Text. We can find any country strings that contain the letters c, a, and n like so:

cursor.execute(

"""SELECT Total, BillingCountry

FROM invoices

WHERE BillingCountry LIKE "%can%"

LIMIT 5;"""

)

cursor.fetchall()We can see that the US has the top number of purchases (494) of the track with ID 99, but we don't know what song that is. In order to get results with the song titles, we need to combine our invoice and invoice_items tables with one more table – the tracks table, which has the song titles. We can do this with multiple JOIN clauses:

query = """

SELECT tracks.Name, COUNT(invoice_items.TrackId), invoices.BillingCountry

FROM invoices

JOIN invoice_items

ON invoices.InvoiceId = invoice_items.InvoiceId

JOIN tracks

ON tracks.TrackId = invoice_items.TrackId

GROUP BY invoices.BillingCountry

ORDER BY COUNT(invoice_items.TrackId) DESC

LIMIT 5;

"""

cursor.execute(query)

cursor.fetchall()Here, we are combining the invoices, invoice_items, and tracks tables to get the track name, the number of purchases, and the country, and then group the results by country. We can see we first select our columns, specifying which table each of the columns comes from, such as tracks.Name, to get each song's title from the tracks table. Then we specify our first table: invoices. Next, we join with invoice_items as we did before on the InvoiceId column. Then we join the tracks table, which shares a TrackId column with the invoice_items table. Finally, we group by country and sort by the count of TrackId as we did before and limit our results to the top five. The results look like this:

Once we are finished with a SQLite database in Python, it's usually a good idea to close it like this:

connection.close()