OpenSceneGraph Source Code Analysis: The osg Module

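The osg library is the most important module of OpenSceneGraph. It provides memory management, scene management, graphics rendering, state management, and related functionality.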

Ref. from OpenSceneGraph Quick Start Guide======================================

The osg library is the heart of OpenSceneGraph. It defines the core nodes that make up the scene graph, as well as several additional classes that aid in scene graph management and application development.

This library contains the scene graph node classes that your application uses to build scene graphs. It also contains classes for vector and matrix math, geometry, and rendering state specification and management. Other classes in osg provide additional functionality typically required by 3D applications, such as argument parsing, animation path management, and error and warning communication.

======================================Ref. from OpenSceneGraph Quick Start Guide

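This article analyzes the implementation of the osg module systematically, covering its basic concepts, principles, framework, and components.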

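Note 1: This article will be updated as my research continues.

Note 2: Given the limits of my understanding and the depth of my research, some statements may be inaccurate; corrections are welcome.

Note 3: Some quotations do not cite their sources; my apologies.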

Prerequisite 1: OpenGL

Because OSG is written in standard C++ and OpenGL, a systematic analysis of OSG requires a working knowledge of OpenGL fundamentals.

p1.1 OpenGL is a State Machine

Ref. from OpenGL Wiki =======================================================

An OpenGL context represents many things. A context stores all of the state associated with this instance of OpenGL. It represents the (potentially visible) default framebuffer that rendering commands will draw to when not drawing to a framebuffer object. Think of a context as an object that holds all of OpenGL; when a context is destroyed, OpenGL is destroyed.

Contexts are localized within a particular process of execution (an application, more or less) on an operating system. A process can create multiple OpenGL contexts. Each context can represent a separate viewable surface, like a window in an application.

Each context has its own set of OpenGL Objects, which are independent of those from other contexts. A context's objects can be shared with other contexts. Most OpenGL objects are sharable, including Sync Objects and GLSL Objects. Container Objects are not sharable, nor are Query Objects.

Any object sharing must be made explicitly, either as the context is created or before a newly created context creates any objects. However, contexts do not have to share objects; they can remain completely separate from one another.

In order for any OpenGL commands to work, a context must be current; all OpenGL commands affect the state of whichever context is current. The current context is a thread-local variable, so a single process can have several threads, each of which has its own current context. However, a single context cannot be current in multiple threads at the same time.

=======================================================Ref. from OpenGL Wiki

p1.2 Fixed Pipeline

Ref. from SongHo OpenGL,OpenGL Wiki,Microsoft OpenGL===========================

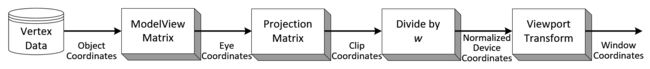

The OpenGL pipeline consists of a series of processing stages in order. Two kinds of graphical information, vertex-based data and pixel-based data, are processed through the pipeline, combined, and then written into the frame buffer. Notice that OpenGL can send the processed data back to your application (see the grey lines).

- Display List

A display list is a group of OpenGL commands that have been stored (compiled) for later execution. All data, geometry (vertex) and pixel data, can be stored in a display list. It may improve performance since commands and data are cached in a display list. When an OpenGL program runs over a network, display lists can reduce data transmission: because display lists are part of server state and reside on the server machine, the client machine needs to send commands and data to the server's display list only once. (See more details in Display List.)

- Vertex Operation

The coordinates of each vertex and normal are transformed by the GL_MODELVIEW matrix (from object coordinates to eye coordinates). Also, if lighting is enabled, the lighting calculation is performed per vertex using the transformed vertex and normal data. This lighting calculation updates the color of the vertex. (See more details in Transformation.)

- Primitive Assembly

After the vertex operation, the primitives (points, lines, and polygons) are transformed once again by the projection matrix and then clipped by the viewing-volume clipping planes, going from eye coordinates to clip coordinates. After that, perspective division by w occurs and the viewport transform is applied to map the 3D scene to window-space coordinates. The last step in primitive assembly is the culling test, if culling is enabled.

- Pixel Transfer Operation

After the pixels from the client's memory are unpacked (read), the data undergo scaling, bias, mapping, and clamping. These operations are called pixel transfer operations. The transferred data are either stored in texture memory or rasterized directly into fragments.

- Texture Memory

Texture images are loaded into texture memory to be applied onto geometric objects.

- Rasterization

Rasterization is the conversion of both geometric and pixel data into fragments. Fragments are a rectangular array containing color, depth, line width, point size, and antialiasing calculations (GL_POINT_SMOOTH, GL_LINE_SMOOTH, GL_POLYGON_SMOOTH). If the polygon mode is GL_FILL, the interior pixels (area) of a polygon are filled at this stage. Each fragment corresponds to a pixel in the frame buffer.

- Fragment Operation

This is the last process, converting fragments into pixels in the frame buffer. The first step in this stage is texel generation: a texture element is generated from texture memory and applied to each fragment. Then fog calculations are applied. After that, several fragment tests follow in order: Scissor Test ⇒ Alpha Test ⇒ Stencil Test ⇒ Depth Test.

Finally, blending, dithering, logical operation and masking by bitmask are performed and actual pixel data are stored in frame buffer.

- Feedback

OpenGL can return most of its current states and information through the glGet*() and glIsEnabled() commands. Furthermore, you can read a rectangular area of pixel data from the frame buffer using glReadPixels(), and get fully transformed vertex data using glRenderMode(GL_FEEDBACK). glCopyPixels() does not return pixel data to system memory; instead it copies them to another frame buffer, for example, from the front buffer to the back buffer.

p1.3 Coordinate Transformation

Geometric data such as vertex positions and normal vectors are transformed via the Vertex Operation and Primitive Assembly stages of the OpenGL pipeline before the rasterization process.

Object Coordinates

Object coordinates are the local coordinate system of an object: its initial position and orientation before any transform is applied. To transform objects, use glRotatef(), glTranslatef(), and glScalef().

Eye Coordinates

Eye coordinates are obtained by multiplying the GL_MODELVIEW matrix by the object coordinates. Objects are transformed from object space to eye space using the GL_MODELVIEW matrix in OpenGL. The GL_MODELVIEW matrix is a combination of the model and view matrices ($M_{modelView} = M_{view} \cdot M_{model}$). The model transform converts from object space to world space, and the view transform converts from world space to eye space.

Note that there is no separate camera (view) matrix in OpenGL. Therefore, to simulate transforming the camera or view, the scene (3D objects and lights) must be transformed with the inverse of the view transformation. In other words, OpenGL defines the camera to be always located at (0, 0, 0) and facing the -Z axis in eye space, and it cannot be transformed. See more details of the GL_MODELVIEW matrix in ModelView Matrix.

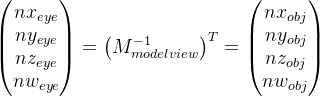

Normal vectors are also transformed from object coordinates to eye coordinates for the lighting calculation. Note that normals are transformed differently from vertices: the normal vector is multiplied by the transpose of the inverse of the GL_MODELVIEW matrix. See more details in Normal Vector Transformation.

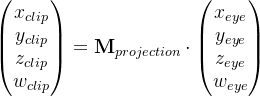
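A minimal C++ sketch of the normal-transformation rule above, assuming only the upper-left 3 × 3 part of the GL_MODELVIEW matrix matters for normals (translation does not affect directions). It relies on the identity that the inverse transpose equals the cofactor matrix divided by the determinant; the type aliases and function names are illustrative, not part of OpenGL.

```cpp
#include <array>

using Mat3 = std::array<std::array<double, 3>, 3>;  // m[row][col]
using Vec3 = std::array<double, 3>;

// (M^-1)^T = cofactor(M) / det(M); for 3x3 matrices the cofactor signs
// come out automatically when the remaining rows/columns are taken cyclically.
Mat3 inverseTranspose(const Mat3& m) {
    Mat3 c{};
    for (int r = 0; r < 3; ++r)
        for (int k = 0; k < 3; ++k) {
            const int r1 = (r + 1) % 3, r2 = (r + 2) % 3;
            const int k1 = (k + 1) % 3, k2 = (k + 2) % 3;
            c[r][k] = m[r1][k1] * m[r2][k2] - m[r1][k2] * m[r2][k1];
        }
    const double det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    for (auto& row : c)
        for (double& x : row) x /= det;
    return c;
}

Vec3 mul(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) r[i] += m[i][j] * v[j];
    return r;
}

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
```

With a non-uniform scale of 2 along x, a normal transformed by the modelview matrix itself is no longer perpendicular to the transformed surface tangent, while the inverse-transpose result still is.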

Clip Coordinates

The eye coordinates are now multiplied with GL_PROJECTION matrix, and become the clip coordinates. This GL_PROJECTION matrix defines the viewing volume (frustum); how the vertex data are projected onto the screen (perspective or orthogonal). The reason it is called clip coordinates is that the transformed vertex (x, y, z) is clipped by comparing with ±w.

See more details of GL_PROJECTION matrix in Projection Matrix.

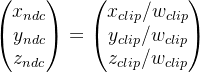
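To make the GL_PROJECTION matrix concrete, here is a small sketch that builds the matrix documented on the gluPerspective() reference page and checks that points on the near and far planes land at NDC depth -1 and +1 after division by w. The std::array storage in column-major order (element (row r, col c) at m[c * 4 + r]) and the helper names are illustrative assumptions, not OpenGL's own API.

```cpp
#include <array>
#include <cmath>

// Column-major 4x4 matrix: element (row r, col c) lives at m[c * 4 + r].
using Mat4 = std::array<double, 16>;
using Vec4 = std::array<double, 4>;

// Matrix documented for gluPerspective(fovy, aspect, zNear, zFar), fovy in degrees.
Mat4 perspective(double fovyDeg, double aspect, double zNear, double zFar) {
    const double pi = 3.14159265358979323846;
    const double f = 1.0 / std::tan(fovyDeg * pi / 360.0);  // cot(fovy / 2)
    Mat4 m{};                                               // start from all zeros
    m[0] = f / aspect;                                      // (0, 0)
    m[5] = f;                                               // (1, 1)
    m[10] = (zFar + zNear) / (zNear - zFar);                // (2, 2)
    m[14] = 2.0 * zFar * zNear / (zNear - zFar);            // (2, 3)
    m[11] = -1.0;                                           // (3, 2): w_clip = -z_eye
    return m;
}

// Column-vector product: clip = M * eye.
Vec4 mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            r[row] += m[col * 4 + row] * v[col];
    return r;
}
```

The bottom row (0, 0, -1, 0) is what makes w depend on the eye-space depth, which is why the later division by w produces perspective foreshortening.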

Normalized Device Coordinates (NDC)

It is yielded by dividing the clip coordinates by w. It is called perspective division. It is more like window (screen) coordinates, but has not been translated and scaled to screen pixels yet. The range of values is now normalized from -1 to 1 in all 3 axes.

Window Coordinates (Screen Coordinates)

Window coordinates are obtained by applying the viewport transformation to the normalized device coordinates (NDC). The NDC are scaled and translated to fit into the rendering screen. The window coordinates are finally passed to the rasterization process of the OpenGL pipeline to become fragments. The glViewport() command defines the rectangle of the rendering area onto which the final image is mapped, and glDepthRange() determines the z value of the window coordinates. The window coordinates are computed from the parameters of these two functions:

glViewport(x, y, w, h);

glDepthRange(n, f);

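The viewport transform formula follows from the linear relationship between NDC and the window coordinates:

$$\begin{aligned} x_w &= \frac{w}{2}\, x_d + \left(x + \frac{w}{2}\right) \\ y_w &= \frac{h}{2}\, y_d + \left(y + \frac{h}{2}\right) \\ z_w &= \frac{f-n}{2}\, z_d + \frac{f+n}{2} \end{aligned}$$

where $(x_d, y_d, z_d)$ are the normalized device coordinates and $(x_w, y_w, z_w)$ are the window coordinates.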
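The last two steps, perspective division and the viewport transform, fit in a few lines of C++. This is a sketch assuming the glViewport(x, y, w, h) and glDepthRange(n, f) arguments are passed in explicitly; the Viewport and DepthRange structs and both function names are hypothetical.

```cpp
#include <array>

struct Viewport { double x, y, w, h; };  // arguments of glViewport
struct DepthRange { double n, f; };      // arguments of glDepthRange

// Perspective division: clip coordinates (x, y, z, w) -> NDC.
std::array<double, 3> clipToNdc(const std::array<double, 4>& c) {
    return {c[0] / c[3], c[1] / c[3], c[2] / c[3]};
}

// Viewport transform: x_w = w/2 * x_d + (x + w/2), y_w = h/2 * y_d + (y + h/2),
// z_w = (f - n)/2 * z_d + (f + n)/2.
std::array<double, 3> ndcToWindow(const std::array<double, 3>& d,
                                  const Viewport& vp, const DepthRange& dr) {
    return {vp.w / 2.0 * d[0] + (vp.x + vp.w / 2.0),
            vp.h / 2.0 * d[1] + (vp.y + vp.h / 2.0),
            (dr.f - dr.n) / 2.0 * d[2] + (dr.f + dr.n) / 2.0};
}
```

For an 800 × 600 viewport with the default depth range (0, 1), the NDC cube corner (-1, -1, -1) maps to window (0, 0, 0), the center maps to (400, 300, 0.5), and (1, 1, 1) maps to (800, 600, 1).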

OpenGL Transformation Matrix

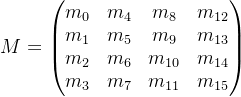

OpenGL uses 4 × 4 matrices for transformations. Notice that the 16 elements of a matrix are stored as a 1D array in column-major order. You need to transpose the matrix if you want to convert it to the standard row-major convention.

OpenGL has 4 different types of matrices; GL_MODELVIEW, GL_PROJECTION, GL_TEXTURE, and GL_COLOR. You can switch the current type by using glMatrixMode() in your code. For example, in order to select GL_MODELVIEW matrix, use glMatrixMode(GL_MODELVIEW).

Note that OpenGL performs matrix multiplications in reverse order if multiple transforms are applied to a vertex.

For example, the glRotatef function computes a matrix that performs a counterclockwise rotation of angle degrees about the vector from the origin through the point (x, y, z).

The current matrix (see glMatrixMode) is multiplied by this rotation matrix, with the product replacing the current matrix. That is, if M is the current matrix and R is the rotation matrix, then M is replaced with M R.

============================Ref. from SongHo OpenGL,OpenGL Wiki,Microsoft OpenGL
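The column-major storage and the "M is replaced with M R" rule quoted above explain why transforms appear to apply in reverse order. The following sketch mimics glTranslated() and a z-axis-only glRotated() on plain arrays (all helper names are hypothetical): issuing translate and then rotate yields M = T · R, so a vertex is rotated first and translated second.

```cpp
#include <array>
#include <cmath>

// Column-major 4x4 matrix, as glLoadMatrixd() expects: element (row r, col c) at m[c * 4 + r].
using Mat4 = std::array<double, 16>;

Mat4 identity() {
    Mat4 m{};
    m[0] = m[5] = m[10] = m[15] = 1.0;
    return m;
}

// Column-major product a * b.
Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            for (int k = 0; k < 4; ++k)
                r[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
    return r;
}

// Like glTranslated(): the translation occupies m[12], m[13], m[14].
Mat4 translate(double x, double y, double z) {
    Mat4 m = identity();
    m[12] = x; m[13] = y; m[14] = z;
    return m;
}

// Like glRotated(deg, 0, 0, 1): counterclockwise rotation about the z axis.
Mat4 rotateZ(double deg) {
    const double a = deg * 3.14159265358979323846 / 180.0;
    Mat4 m = identity();
    m[0] = std::cos(a); m[4] = -std::sin(a);  // row 0
    m[1] = std::sin(a); m[5] = std::cos(a);   // row 1
    return m;
}

// Applies M to the point (x, y, z, 1) as a column vector.
std::array<double, 3> transformPoint(const Mat4& m, double x, double y, double z) {
    std::array<double, 3> r{};
    for (int row = 0; row < 3; ++row)
        r[row] = m[row] * x + m[4 + row] * y + m[8 + row] * z + m[12 + row];
    return r;
}
```

Emulating glTranslated(1, 0, 0) followed by glRotated(90, 0, 0, 1) moves the point (1, 0, 0) to (1, 1, 0); swapping the two calls moves it to (0, 2, 0) instead.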

p1.4 OpenGL APIs for Coordinate Transformation

The following lists the common OpenGL APIs for coordinate transformation.

- Translate

void glTranslatef(GLfloat x, GLfloat y, GLfloat z);

void glTranslated(GLdouble x,GLdouble y,GLdouble z);

- Rotate

void glRotatef(GLfloat angle,GLfloat x,GLfloat y,GLfloat z);

void glRotated(GLdouble angle, GLdouble x, GLdouble y, GLdouble z);

- Scale

void glScalef(GLfloat x, GLfloat y, GLfloat z);

void glScaled(GLdouble x, GLdouble y, GLdouble z);

- View Transformation

void gluLookAt(GLdouble eyex, GLdouble eyey, GLdouble eyez, GLdouble centerx, GLdouble centery, GLdouble centerz, GLdouble upx, GLdouble upy, GLdouble upz);

- Orthogonal Projection

void glOrtho(GLdouble left, GLdouble right, GLdouble bottom, GLdouble top, GLdouble zNear, GLdouble zFar);

- Perspective Projection

void glFrustum(GLdouble left, GLdouble right, GLdouble bottom, GLdouble top, GLdouble zNear, GLdouble zFar);

void gluPerspective(GLdouble fovy, GLdouble aspect, GLdouble zNear, GLdouble zFar);

- Viewport Transformation

void glViewport(GLint x, GLint y, GLsizei width, GLsizei height);

- Load Matrix

void glLoadMatrixd(const GLdouble *m);

- Matrix Stack

void glPushMatrix(void);

void glPopMatrix(void);

p1.5 Shaders

Shaders are small programs, written in a C-like language, that are compiled for the GPU and executed during the rendering pipeline. Depending on their type (the available types depend on the version of OpenGL you are using), they act at different stages of the rendering pipeline.

Ref. from OpenGL Wiki =======================================================

The OpenGL rendering pipeline is initiated when you perform a rendering operation. Rendering operations require the presence of a properly-defined vertex array object and a linked Program Object or Program Pipeline Object which provides the shaders for the programmable pipeline stages.

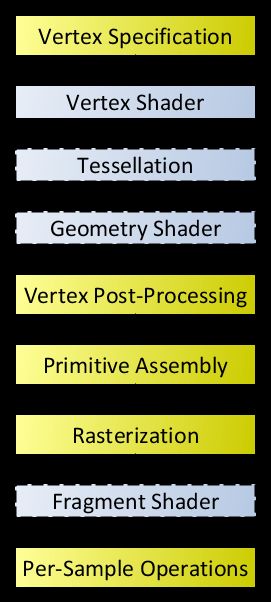

Once initiated, the pipeline operates in the following order:

- Vertex Processing:

- Each vertex retrieved from the vertex arrays (as defined by the VAO) is acted upon by a Vertex Shader. Each vertex in the stream is processed in turn into an output vertex.

- Optional primitive tessellation stages.

- Optional Geometry Shader primitive processing. The output is a sequence of primitives.

- Vertex Post-Processing, the outputs of the last stage are adjusted or shipped to different locations.

- Transform Feedback happens here.

- Primitive Assembly

- Primitive Clipping, the perspective divide, and the viewport transform to window space.

- Scan conversion and primitive parameter interpolation, which generates a number of Fragments.

- A Fragment Shader processes each fragment. Each fragment generates a number of outputs.

- Per-Sample Processing, including but not limited to:

- Scissor Test

- Stencil Test

- Depth Test

- Blending

- Logical Operation

- Write Mask

=======================================================Ref. from OpenGL Wiki

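Prerequisite 2: C++ Design Patterns

On top of OpenGL, OSG uses modern C++ and design patterns such as Singleton and Visitor to implement scene graph traversal, callbacks, scene rendering, and other features. It is therefore worth studying the design patterns used in OSG.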

p2.1 Singleton Pattern
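A minimal C++11 sketch of the pattern: a single, lazily constructed instance reached through a static instance() function. OSG exposes some global objects in a similar style (for example osgDB::Registry::instance()), but the PluginRegistry class below is a hypothetical stand-in, not OSG code.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical registry class used only to illustrate the Singleton pattern.
class PluginRegistry {
public:
    // The one instance is created on first use; C++11 makes this initialization thread-safe.
    static PluginRegistry& instance() {
        static PluginRegistry registry;
        return registry;
    }

    void add(const std::string& name) { names_.push_back(name); }
    std::size_t size() const { return names_.size(); }

    // Copying would create a second instance, so it is disabled.
    PluginRegistry(const PluginRegistry&) = delete;
    PluginRegistry& operator=(const PluginRegistry&) = delete;

private:
    PluginRegistry() = default;  // only instance() may construct it
    std::vector<std::string> names_;
};
```

Every call to PluginRegistry::instance() returns the same object, so data registered in one part of a program is visible everywhere else.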

p2.2 Visitor Pattern

p2.3 Proxy Pattern

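1. Memory Management

OSG provides automatic memory management through reference counting. In OSG, the reference counting mechanism is implemented by osg::ref_ptr and osg::Referenced.

All OSG nodes and scene graph data (state information, vertex arrays, normals, texture coordinates) derive from osg::Referenced, which maintains their reference counts.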

Ref. from OpenSceneGraph Quick Start Guide======================================

1.1 osg::Referenced

The Referenced class is the base class for all scene graph nodes and many other objects in OSG. It contains a reference count to track memory usage. If an object is of a type derived from Referenced and its reference count reaches zero, its destructor is called and memory associated with the object is deleted.

1.2 osg::ref_ptr<>

The ref_ptr<> template class defines a smart pointer to its template argument. The template argument must be derived from Referenced or support an identical reference counting interface. When the address of an object is assigned to a ref_ptr<>, the object reference count automatically increments. Similarly, clearing or deleting a ref_ptr<> decrements the object reference count.

1.3 osg::Object

The pure virtual Object class is the base class for any object in OSG that requires I/O support, cloning, and reference counting.

======================================Ref. from OpenSceneGraph Quick Start Guide
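The mechanism described in the quotation above can be sketched in a few dozen lines. This is a simplified, single-threaded illustration with hypothetical class names (Referenced, RefPtr, Node); OSG's real osg::Referenced and osg::ref_ptr<> add features such as thread-safe counting and observer support.

```cpp
#include <utility>

// Simplified, single-threaded intrusive reference counting (hypothetical names).
class Referenced {
public:
    void ref() const { ++count_; }
    // The last owner to let go deletes the object.
    void unref() const { if (--count_ == 0) delete this; }
    int referenceCount() const { return count_; }

protected:
    virtual ~Referenced() = default;  // protected: objects are destroyed via unref(), not deleted directly

private:
    mutable int count_ = 0;
};

// Smart pointer that increments the count on acquire and decrements it on release.
template <class T>
class RefPtr {
public:
    RefPtr(T* p = nullptr) : p_(p) { if (p_) p_->ref(); }
    RefPtr(const RefPtr& other) : p_(other.p_) { if (p_) p_->ref(); }
    RefPtr& operator=(RefPtr other) { std::swap(p_, other.p_); return *this; }  // copy-and-swap
    ~RefPtr() { if (p_) p_->unref(); }

    T* get() const { return p_; }
    T* operator->() const { return p_; }

private:
    T* p_;
};

// Example node type that records its own destruction.
class Node : public Referenced {
public:
    explicit Node(bool* destroyed) : destroyed_(destroyed) {}

protected:
    ~Node() override { *destroyed_ = true; }

private:
    bool* destroyed_;
};
```

Assigning a new Node to a RefPtr sets its count to 1, copying the pointer raises it to 2, and the object is deleted only when the last RefPtr goes out of scope.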

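2. Scene Management

OSG organizes the geometry and rendering state of a scene in a top-down, hierarchical scene tree. The basic OSG node types are osg::Node, osg::Geode, and osg::Group.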

Ref. from OpenSceneGraph Quick Start Guide======================================

A scene graph is a hierarchical tree data structure that organizes spatial data for efficient rendering.

The scene graph tree is headed by a top-level root node. Beneath the root node, group nodes organize geometry and the rendering state that controls their appearance. Root nodes and group nodes can have zero or more children. (However, group nodes with zero children are essentially no-ops.) At the bottom of the scene graph, leaf nodes contain the actual geometry that make up the objects in the scene.

Scene graphs usually offer a variety of different node types that offer a wide range of functionality, such as switch nodes that enable or disable their children, level of detail (LOD) nodes that select children based on distance from the viewer, and transform nodes that modify transformation state of child geometry.

2.1 osg::Node

Scene graph classes aid in scene graph construction. All scene graph classes in OSG are derived from osg::Node. Conceptually, root, group, and leaf nodes are all different node types. In OSG, these are all ultimately derived from osg::Node, and specialized classes provide varying scene graph functionality. Also, the root node in OSG is not a special node type; it’s simply an osg::Node that does not have a parent.

2.2 osg::Geode

The Geode class is the OSG leaf node, and it contains geometric data for rendering. Geode objects are leaf nodes in the scene graph that store Drawable objects. Geometry (one type of Drawable) stores vertex array data and vertex array rendering commands specific to that data.

2.3 osg::Group

OSG’s group node, osg::Group, allows your application to add any number of child nodes, which in turn can also be Group nodes with their own children. Group is the base class for many useful nodes, including osg::Transform, osg::LOD, and osg::Switch.

Group is really the heart of OSG, because it allows your application to organize data in the scene graph. The Group object’s strength comes from its interface for managing child nodes. Group also has an interface for managing parents, which it inherits from its base class, osg::Node.

2.4 osg::NodeVisitor

NodeVisitor is OSG’s implementation of the Visitor design pattern [Gamma95]. In essence, NodeVisitor traverses a scene graph and calls a function for each visited node.

NodeVisitor is a base class that your application never instantiates directly. Your application can use any NodeVisitor supplied by OSG, and you can code your own class derived from NodeVisitor. NodeVisitor consists of several apply() methods overloaded for most major OSG node types. When a NodeVisitor traverses a scene graph, it calls the appropriate apply() method for each node that it visits. Your custom NodeVisitor overrides only the apply() methods for the node types requiring processing. If your NodeVisitor overrides multiple apply() methods, OSG calls the most specific apply() method for a given node.

To traverse your scene graph with a NodeVisitor, pass the NodeVisitor as a parameter to Node::accept(). You can call accept() on any node, and the NodeVisitor will traverse the scene graph starting at that node. To search an entire scene graph, call accept() on the root node.
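A std-only sketch of the accept()/apply() double dispatch described above. The class names echo OSG's, but these are simplified stand-ins (children are held in std::shared_ptr rather than osg::ref_ptr<>), not OSG's implementation. The custom visitor overrides only apply(Geode&) and still reaches Geodes nested inside Groups, because the default apply(Group&) keeps traversing.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Simplified stand-ins named after OSG classes; not OSG's implementation.
class Node;
class Group;
class Geode;

class NodeVisitor {
public:
    virtual ~NodeVisitor() = default;
    virtual void apply(Node&) {}       // most general overload: does nothing
    virtual void apply(Group& group);  // default: keep traversing the children
    virtual void apply(Geode& geode);  // default: fall back to apply(Node&)
};

class Node {
public:
    virtual ~Node() = default;
    // Double dispatch: the dynamic type of *this selects the apply() overload.
    virtual void accept(NodeVisitor& nv) { nv.apply(*this); }
};

class Geode : public Node {
public:
    void accept(NodeVisitor& nv) override { nv.apply(*this); }  // calls apply(Geode&)
};

class Group : public Node {
public:
    void addChild(std::shared_ptr<Node> child) { children_.push_back(std::move(child)); }
    void traverse(NodeVisitor& nv) { for (auto& c : children_) c->accept(nv); }
    void accept(NodeVisitor& nv) override { nv.apply(*this); }  // calls apply(Group&)

private:
    std::vector<std::shared_ptr<Node>> children_;
};

void NodeVisitor::apply(Group& group) { group.traverse(*this); }
void NodeVisitor::apply(Geode& geode) { apply(static_cast<Node&>(geode)); }

// A custom visitor overrides only the overload it needs.
class CountGeodes : public NodeVisitor {
public:
    using NodeVisitor::apply;  // keep the other overloads visible
    void apply(Geode&) override { ++count; }
    int count = 0;
};
```

Calling root->accept(counter) on a Group that holds one Geode and one sub-Group with another Geode leaves counter.count at 2.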

2.5 osg::Callback

OSG allows you to assign callbacks to Node and Drawable objects. OSG executes Node callbacks during the update and cull traversals, and executes Drawable callbacks during the cull and draw traversals.

OSG’s callback interface is based on the Callback design pattern [Gamma95].

To use a NodeCallback, your application should perform the following steps.

• Derive a new class from NodeCallback.

• Override the NodeCallback::operator()() method. Code this method to perform the dynamic modification on your scene graph.

• Instantiate your new class derived from NodeCallback, and attach it to the Node that you want to modify using the Node::setUpdateCallback() method.

OSG calls the operator()() method in your derived class during each update traversal, allowing your application to modify the Node.

OSG passes two parameters to your operator()() method. The first parameter is the address of the Node associated with your callback. This is the Node that your callback dynamically modifies within the operator()() method. The second parameter is an osg::NodeVisitor address.

To attach your NodeCallback to a Node, use the Node::setUpdateCallback() method. setUpdateCallback() takes one parameter, the address of a class derived from NodeCallback. The following code segment shows how to attach a NodeCallback to a node.
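As a stand-alone illustration of the three steps above, the sketch below mimics the update-callback flow with standard C++ only; all types are simplified stand-ins named after their OSG counterparts. It follows the OSG convention that a node's update callback continues the traversal into the node's subtree by calling traverse(node, nv).

```cpp
#include <memory>
#include <utility>
#include <vector>

// Std-only stand-ins named after their OSG counterparts; not OSG's real classes.
struct Node;
struct NodeVisitor;

struct NodeCallback {
    virtual ~NodeCallback() = default;
    // Called during each update traversal; the default just keeps traversing.
    virtual void operator()(Node* node, NodeVisitor* nv) { traverse(node, nv); }
    // Continues the traversal into the node's children.
    void traverse(Node* node, NodeVisitor* nv);
};

struct Node {
    double angle = 0.0;  // some state a callback may animate
    std::shared_ptr<NodeCallback> updateCallback;
    std::vector<std::shared_ptr<Node>> children;
    void setUpdateCallback(std::shared_ptr<NodeCallback> cb) { updateCallback = std::move(cb); }
};

// Update traversal: a node with a callback hands control to it; otherwise visit children.
struct NodeVisitor {
    void apply(Node& node) {
        if (node.updateCallback) (*node.updateCallback)(&node, this);
        else traverseChildren(node);
    }
    void traverseChildren(Node& node) {
        for (auto& child : node.children) apply(*child);
    }
};

void NodeCallback::traverse(Node* node, NodeVisitor* nv) { nv->traverseChildren(*node); }

// Steps 1 and 2: derive from NodeCallback and override operator().
struct RotateCallback : NodeCallback {
    void operator()(Node* node, NodeVisitor* nv) override {
        node->angle += 1.0;  // the per-frame modification
        traverse(node, nv);  // let the rest of the subtree be updated too
    }
};
```

If RotateCallback omitted the traverse(node, nv) call, children below the node would stop receiving update traversals.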

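3. Graphics Rendering

All data added to the scene is placed into an osg::Group object, while geometry is organized and managed by osg::Geode. Once a geometry object has been built, add it to an osg::Geode, then add that osg::Geode to the scene; the geometry is then drawn.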

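3.1 Coordinate Transformation

Coordinate transformation is an important topic in OSG; with it, some seemingly complex features can be implemented. It is therefore worth mastering the basic concepts of coordinate transformation in OSG.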

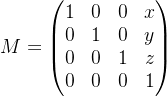

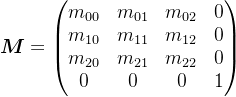

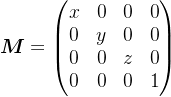

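Like OpenGL, OSG transforms local coordinates into window coordinates through the model matrix, view matrix, projection matrix, and viewport matrix. Note in particular that OSG keeps the model matrix and the view matrix separate, and represents homogeneous coordinates as row vectors.

In OSG, the coordinate transformation can be written as

$$V_{window} = V_{local} \cdot M_{model} \cdot M_{view} \cdot M_{projection} \cdot M_{window}$$

with the result divided by its homogeneous w component (perspective division), where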

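| Symbol | Description | Related classes |
| --- | --- | --- |
| $V_{local}$ | local coordinates | |
| $V_{window}$ | window coordinates | |
| $M_{model}$ | model matrix | osg::MatrixTransform |
| $M_{view}$ | view matrix | osg::Camera |
| $M_{projection}$ | projection matrix | osg::Camera |
| $M_{window}$ | viewport matrix | osg::Camera, osg::Viewport |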
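A small sketch of the row-vector convention, using plain arrays in place of osg::Matrixd (the helper names are hypothetical). The translation sits in the last row, as with osg::Matrixd::getTrans(), and a point is transformed as v · M, so the leftmost matrix in a product is applied first.

```cpp
#include <array>

// Row-vector convention as in OSG: a point is a row vector v and transforms as v * M.
using Mat4 = std::array<std::array<double, 4>, 4>;  // m[row][col]
using Vec4 = std::array<double, 4>;

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0;
    return m;
}

// Translation lives in the last row.
Mat4 translate(double x, double y, double z) {
    Mat4 m = identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

Mat4 scale(double s) {
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

// Row vector times matrix: r[c] = sum_k v[k] * m[k][c].
Vec4 mulVec(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int c = 0; c < 4; ++c)
        for (int k = 0; k < 4; ++k) r[c] += v[k] * m[k][c];
    return r;
}

Mat4 mulMat(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}
```

With a model matrix that scales by 2 and a view matrix that translates by (1, 0, 0), the point (1, 0, 0) ends up at (3, 0, 0) for v · model · view but at (4, 0, 0) if the order is reversed. This is why OSG code commonly writes a transform as scale * rotate * translate, reading left to right in the order the operations happen.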

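Usually the model and view matrices are modified with basic scale (S), rotation (R), and translation (T) operations, in which case they can be decomposed into SRT form. The orthographic projection matrix generated by glOrtho can also be decomposed into SRT form, but the perspective projection matrix generated by glFrustum/gluPerspective cannot, because it is not affine: the homogeneous w it produces depends on the vertex's z.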

See: Shoemake K, Duff T. Matrix animation and polar decomposition. 1992.
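The claim about projection matrices can be checked numerically with a short sketch (column-vector OpenGL convention; the helper names are hypothetical). It builds the matrices documented for glOrtho() and glFrustum(). The orthographic matrix equals a translation times a scale, an SRT form with R = I (in OSG's row-vector form the factors appear as S · T instead), while the perspective matrix has bottom row (0, 0, -1, 0), so it is not affine and no product of S, R, and T can produce it.

```cpp
#include <array>

// Column-vector (OpenGL) convention, m[row][col].
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 scale(double x, double y, double z) {
    Mat4 m{};
    m[0][0] = x; m[1][1] = y; m[2][2] = z; m[3][3] = 1;
    return m;
}

Mat4 translate(double x, double y, double z) {
    Mat4 m = scale(1, 1, 1);
    m[0][3] = x; m[1][3] = y; m[2][3] = z;
    return m;
}

Mat4 mul(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix documented for glOrtho().
Mat4 ortho(double l, double r, double b, double t, double n, double f) {
    Mat4 m{};
    m[0][0] = 2 / (r - l);  m[0][3] = -(r + l) / (r - l);
    m[1][1] = 2 / (t - b);  m[1][3] = -(t + b) / (t - b);
    m[2][2] = -2 / (f - n); m[2][3] = -(f + n) / (f - n);
    m[3][3] = 1;
    return m;
}

// Matrix documented for glFrustum().
Mat4 frustum(double l, double r, double b, double t, double n, double f) {
    Mat4 m{};
    m[0][0] = 2 * n / (r - l);    m[0][2] = (r + l) / (r - l);
    m[1][1] = 2 * n / (t - b);    m[1][2] = (t + b) / (t - b);
    m[2][2] = -(f + n) / (f - n); m[2][3] = -2 * f * n / (f - n);
    m[3][2] = -1;
    return m;
}

// S, R and T all keep the bottom row at (0, 0, 0, 1), and so does any product of them.
bool isAffine(const Mat4& m) {
    return m[3][0] == 0 && m[3][1] == 0 && m[3][2] == 0 && m[3][3] == 1;
}
```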

In OSG, I implemented the SRT decomposition on top of osg::Matrix::decompose():

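```cpp
#include <osg/Matrixd>
#include <osg/Quat>
#include <osg/Vec3d>

/** @brief Use polar decomposition to decompose matrix M into M = S * R * T.
 *
 * @param[in]  M matrix to be decomposed
 * @param[out] S scale (stretch) matrix
 * @param[out] R rotation matrix
 * @param[out] T translation matrix
 *
 * @note Reference: Shoemake K, Duff T. Matrix animation and polar decomposition. 1992.
 */
void decomposeMatrix(const osg::Matrixd& M, osg::Matrixd& S, osg::Matrixd& R, osg::Matrixd& T)
{
    osg::Vec3d translation;
    osg::Quat rotation;
    osg::Vec3d scale;
    osg::Quat so;  // scale orientation: the rotation U of the stretch

    M.decompose(translation, rotation, scale, so);

    // Fetch the result
    T.makeTranslate(translation);
    R.makeRotate(rotation);

    // Shoemake writes the stretch as U * K * trans(U) for column vectors.
    // OSG matrices act on row vectors, so the same stretch reads trans(U) * K * U,
    // with U = makeRotate(so).
    osg::Matrixd U;
    U.makeRotate(so);

    osg::Matrixd Ut;
    Ut.makeRotate(so.inverse());  // the inverse of a rotation is its transpose

    osg::Matrixd K;
    K.makeScale(scale);

    S = Ut * K * U;
}
```

3.2 Primitive Drawing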

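In the narrow sense, primitives are the basic graphic elements of a graphics system; in the broad sense they also include image elements. Primitives fall into two categories: geometric and non-geometric. Geometric primitives include points, line segments, and polygons; non-geometric primitives include pixel rectangles and bitmaps.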

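4. State Management

OpenGL works as a state machine. Before rendering, a series of states must first be set, such as line width, material properties, and texture coordinates.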

Ref. from OpenSceneGraph Quick Start Guide======================================

OSG provides a mechanism for storing the desired OpenGL rendering state in the scene graph. During the cull traversal, geometry with identical states is grouped together to minimize state changes. During the draw traversal, the state management code keeps track of the current state to eliminate redundant state changes.

Unlike other scene graphs, OSG allows state to be associated with any scene graph node, and state is inherited hierarchically during a traversal.

• StateSet—OSG stores a collection of state values (called modes and attributes) in the StateSet class. Any osg::Node in the scene graph can have a StateSet associated with it.

• Modes—Analogous to the OpenGL calls glEnable() and glDisable(), modes allow you to turn on and off features in the OpenGL fixed-function rendering pipeline, such as lighting, blending, and fog. Use the method osg::StateSet::setMode() to store a mode in a StateSet.

• Attributes—Attributes store state parameters, such as the blending function, material properties, and fog color. Use the method osg::StateSet::setAttribute() to store an attribute in a StateSet.

• Texture attributes and modes—These attributes and modes apply to a specific texture unit in OpenGL multitexturing. Unlike OpenGL, there is no default texture unit; your application must supply the texture unit when setting texture attributes and modes. To set these state values and specify their texture unit, use the StateSet methods setTextureMode() and setTextureAttribute().

• Inheritance flags—OSG provides flags for controlling how state is inherited

======================================Ref. from OpenSceneGraph Quick Start Guide
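A simplified model of how a single mode is resolved along a root-to-leaf path, assuming the flag values match osg::StateAttribute's OFF, ON, OVERRIDE, PROTECTED, and INHERIT. The resolveMode() function is illustrative only and is not how osg::State is implemented. It encodes the inheritance rules: by default a child's value replaces its parent's, a parent value marked OVERRIDE wins over its descendants, and a descendant value marked PROTECTED is shielded from that override.

```cpp
#include <vector>

// Values assumed to mirror osg::StateAttribute's OFF, ON, OVERRIDE, PROTECTED, INHERIT.
enum : unsigned { OFF = 0x0, ON = 0x1, OVERRIDE = 0x2, PROTECTED = 0x4, INHERIT = 0x8 };

// path[i] is the value one mode (say GL_LIGHTING) has in the i-th StateSet on a
// root-to-leaf path; INHERIT means that StateSet does not set the mode.
bool resolveMode(const std::vector<unsigned>& path, bool defaultOn) {
    bool on = defaultOn;
    bool overridden = false;  // true while the accepted ancestor value carries OVERRIDE
    for (unsigned v : path) {
        if (v & INHERIT) continue;                     // not set here: keep the inherited value
        if (overridden && !(v & PROTECTED)) continue;  // an ancestor's OVERRIDE beats this value
        on = (v & ON) != 0;
        overridden = (v & OVERRIDE) != 0;
    }
    return on;
}
```

For example, a child's OFF normally turns off a mode its parent set ON, but not when the parent's value is ON | OVERRIDE, unless the child uses OFF | PROTECTED.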

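OSG encapsulates OpenGL state. Three classes are involved in state management: osg::State, osg::StateSet, and osg::StateAttribute. osg::State mainly wraps the current OpenGL modes, attributes, and vertex array settings; every class that sets an attribute derives from osg::StateAttribute; and all state is managed through osg::StateSet.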

4.1 Lighting

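A lighting model is a mathematical model that simulates the physical phenomena of light on object surfaces.

The Phong lighting model was the first influential lighting model proposed in realistic computer graphics. It is a typical simple lighting model: it considers only the reflection of direct light by objects and ignores light reflected between objects, which is represented only by the ambient term.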

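The Phong lighting model is expressed as

$$I_p = k_a i_a + \sum_{m \in \text{lights}} \left( k_d \left(\hat{L}_m \cdot \hat{N}\right) i_{m,d} + k_s \left(\hat{R}_m \cdot \hat{V}\right)^{\alpha} i_{m,s} \right)$$

where

- $k_a$: ambient reflection coefficient, a constant
- $k_d$: diffuse reflection coefficient, a constant
- $k_s$: specular reflection coefficient, a constant
- $\alpha$: shininess (specular exponent), a constant
- $\hat{N}$: the surface normal at the point
- lights: the set of light sources the sum runs over
- $\hat{L}_m$: the direction from the point toward light source m
- $\hat{R}_m$: the direction of the reflected light, $\hat{R}_m = 2(\hat{L}_m \cdot \hat{N})\hat{N} - \hat{L}_m$
- $\hat{V}$: the direction toward the camera
- $i_{m,d}$: diffuse intensity of light source m
- $i_{m,s}$: specular intensity of light source m
- $i_a$: ambient lighting intensity contributed by the light sources
- $I_p$: total illumination at point p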
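A direct translation of the Phong model I_p = k_a i_a + Σ_m (k_d (L_m · N) i_{m,d} + k_s (R_m · V)^α i_{m,s}) into C++ for a single color channel, as a sketch. It assumes unit vectors, computes R_m by reflecting L_m about N, and drops contributions from lights behind the surface, a clamp that the formula itself leaves implicit; the Light struct and phong() function are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <vector>

using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// One light source m (hypothetical struct).
struct Light {
    Vec3 L;           // unit vector from the point toward the light (L_m)
    double diffuse;   // i_{m,d}
    double specular;  // i_{m,s}
};

// I_p for one color channel; N (normal) and V (toward the camera) are unit vectors.
double phong(double ka, double ia, double kd, double ks, double alpha,
             const Vec3& N, const Vec3& V, const std::vector<Light>& lights) {
    double I = ka * ia;  // ambient term
    for (const Light& m : lights) {
        const double ln = dot(m.L, N);
        if (ln <= 0.0) continue;  // light behind the surface: no contribution
        // R_m = 2 (L_m . N) N - L_m, the mirror reflection of L_m about N.
        const Vec3 R{2 * ln * N[0] - m.L[0], 2 * ln * N[1] - m.L[1], 2 * ln * N[2] - m.L[2]};
        const double rv = std::max(0.0, dot(R, V));
        I += kd * ln * m.diffuse + ks * std::pow(rv, alpha) * m.specular;
    }
    return I;
}
```

With a light shining straight along the normal toward a viewer on the same axis, k_a = 0.1, k_d = 0.5, k_s = 0.4, and unit intensities, the result is 0.1 + 0.5 + 0.4 = 1.0; moving the light behind the surface leaves only the ambient 0.1.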

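As shown above, the lighting result depends on three properties: the properties of the light source, the material properties of the model surface, and the model's normals. Note in particular that correct lighting requires correct normals on the geometry; a light has no proper effect on geometry without normals. With fixed-function lighting, OSG uses OpenGL's light units GL_LIGHT0 through GL_LIGHT7, the eight lights OpenGL guarantees.

OSG fully supports OpenGL's lighting features, including material properties, light properties, and the lighting model. Using lighting in OSG mainly involves the osg::Material, osg::Light, and osg::LightSource classes. osg::Material derives from osg::StateAttribute and sets the material of geometry; osg::Light also derives from osg::StateAttribute and sets the properties of a light; osg::LightSource derives from osg::Group and only serves to manage the light in the scene graph.

To use lighting in OSG, first create an osg::Light object and set its properties (position, direction, color, and the constant, linear, and quadratic attenuation factors; attenuation can also be turned off). Then create an osg::LightSource object and attach the osg::Light to it. Finally, add the osg::LightSource to the scene graph.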

```cpp
// Light.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
#include <osg/ArgumentParser>
#include <osg/Group>
#include <osg/Light>
#include <osg/LightSource>
#include <osg/StateSet>
#include <osgDB/ReadFile>
#include <osgViewer/Viewer>

int main(int argc, char* argv[])
{
    osg::ArgumentParser arguments(&argc, argv);

    osg::ref_ptr<osg::Group> group = new osg::Group();
    osg::ref_ptr<osg::StateSet> ss = group->getOrCreateStateSet();

    // Enable lighting (the default osgViewer setup usually turns GL_LIGHTING on already)
    //ss->setMode(GL_LIGHTING, osg::StateAttribute::ON);
    ss->setMode(GL_LIGHT1, osg::StateAttribute::ON);

    // Read geometry data
    osg::ref_ptr<osg::Node> glider = osgDB::readRefNodeFile("../../OpenSceneGraph-Data/glider.osg");
    if (!glider) return 1;  // model not found
    group->addChild(glider.get());

    // Light number 1 corresponds to GL_LIGHT1
    osg::ref_ptr<osg::Light> light = new osg::Light(1);
    light->setPosition(osg::Vec4f(0.0, 0.0, 10.0, 1.0));  // w = 1: positional light
    light->setAmbient(osg::Vec4f(1.0, 1.0, 1.0, 1.0));
    light->setDiffuse(osg::Vec4f(1.0, 1.0, 1.0, 1.0));
    light->setSpecular(osg::Vec4f(1.0, 0.0, 0.0, 1.0));

    // LightSource places the light in the scene graph
    osg::ref_ptr<osg::LightSource> lightSource = new osg::LightSource();
    lightSource->setLight(light.get());

    // Add to group
    group->addChild(lightSource.get());

    osg::ref_ptr<osgViewer::Viewer> viewer = new osgViewer::Viewer(arguments);
    viewer->setSceneData(group.get());
    return viewer->run();
}
```

4.2 Culling Test

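Face culling uses the winding order of a face's vertices in window coordinates to discard faces the viewer does not care about, which improves rendering performance.

By default, face culling is disabled in OpenGL; call glEnable(GL_CULL_FACE) to enable it.

In OpenGL, glFrontFace() defines which winding order counts as front-facing, that is, whether front faces have clockwise or counterclockwise vertex winding; the default is counterclockwise. glCullFace() specifies whether front faces, back faces, or both are culled; the default is back faces.

void glFrontFace(GLenum mode);

void glCullFace(GLenum mode);

Ref. from OpenGL Programming Guide =========================================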

In more technical terms, deciding whether a face of a polygon is front- or back-facing depends on the sign of the polygon's area computed in window coordinates. One way to compute this area is

$$a = \frac{1}{2} \sum_{i=0}^{n-1} \left( x_w^{i}\, y_w^{i \oplus 1} - x_w^{i \oplus 1}\, y_w^{i} \right)$$

where $x_w^i$ and $y_w^i$ are the x and y window coordinates of the ith vertex of the n-vertex polygon and $i \oplus 1$ is $(i+1) \bmod n$.

Assuming that GL_CCW has been specified, if a > 0, the polygon corresponding to that vertex is considered to be front-facing; otherwise, it’s back-facing. If GL_CW is specified and if a < 0, then the corresponding polygon is front-facing; otherwise, it’s back-facing.

=========================================Ref. from OpenGL Programming Guide
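The signed-area test quoted above, as a small sketch. The Vertex type and FrontFace enum are hypothetical stand-ins for window-space vertices and the GL_CCW/GL_CW settings of glFrontFace().

```cpp
#include <cstddef>
#include <vector>

struct Vertex { double x, y; };    // window coordinates
enum class FrontFace { CCW, CW };  // stand-ins for GL_CCW / GL_CW

// a = 1/2 * sum_i (x_i * y_{i+1} - x_{i+1} * y_i), with i + 1 taken mod n.
double signedArea(const std::vector<Vertex>& p) {
    double a = 0.0;
    for (std::size_t i = 0; i < p.size(); ++i) {
        const Vertex& u = p[i];
        const Vertex& v = p[(i + 1) % p.size()];
        a += u.x * v.y - v.x * u.y;
    }
    return 0.5 * a;
}

// GL_CCW: front-facing if a > 0; GL_CW: front-facing if a < 0.
bool isFrontFacing(const std::vector<Vertex>& p, FrontFace mode) {
    const double a = signedArea(p);
    return mode == FrontFace::CCW ? a > 0 : a < 0;
}
```

A unit square listed counterclockwise has area +1 and is front-facing under the default GL_CCW; the same square listed clockwise has area -1 and would be culled as a back face once GL_CULL_FACE is enabled.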

Ref. from OpenGL Face Culling, Microsoft OpenGL =================================

In a scene composed entirely of opaque closed surfaces, back-facing polygons are never visible. Eliminating these invisible polygons has the obvious benefit of speeding up the rendering of the image. You enable and disable elimination of back-facing polygons with glEnable and glDisable using argument GL_CULL_FACE.

The projection of a polygon to window coordinates is said to have clockwise winding if an imaginary object following the path from its first vertex, its second vertex, and so on, to its last vertex, and finally back to its first vertex, moves in a clockwise direction about the interior of the polygon. The polygon's winding is said to be counterclockwise if the imaginary object following the same path moves in a counterclockwise direction about the interior of the polygon. The glFrontFace function specifies whether polygons with clockwise winding in window coordinates, or counterclockwise winding in window coordinates, are taken to be front-facing. Passing GL_CCW to mode selects counterclockwise polygons as front-facing; GL_CW selects clockwise polygons as front-facing. By default, counterclockwise polygons are taken to be front-facing.

The glCullFace function specifies whether front-facing or back-facing facets are culled (as specified by mode) when facet culling is enabled. You enable and disable facet culling using glEnable and glDisable with the argument GL_CULL_FACE. Facets include triangles, quadrilaterals, polygons, and rectangles.

================================ Ref. from OpenGL Face Culling, Microsoft OpenGL

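In OSG, call osg::StateSet::setMode(GL_CULL_FACE, osg::StateAttribute::ON) to enable face culling. osg::FrontFace wraps glFrontFace() and specifies the winding order of front faces; osg::CullFace wraps glCullFace() and specifies whether front or back faces are culled.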

4.3 Texture

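A texture represents the fine detail on an object's surface and can be viewed as a function of one or more variables (the texture coordinates).

In a computer, a texture is stored as an array; each array element holds a color value and is called a texel (texture element).

By domain, textures are classified as one-dimensional, two-dimensional, or three-dimensional.

By representation, textures are classified as color textures, geometric textures, and procedural textures. A color texture paints a pattern onto a smooth surface; once the pattern is painted, the surface remains as smooth as before. A geometric texture (also called a bump texture) is based on how rough surfaces reflect light: a perturbation function perturbs the surface parameters so that the surface looks bumpy.

Texture mapping is the process of adding surface detail by mapping an existing texture image onto an object's surface. Texture mapping therefore uses both the geometry pipeline and the pixel pipeline; combining pixels with geometric objects produces very complex visual effects.

In essence, texture mapping maps a two-dimensional texture plane onto a three-dimensional surface; the key is establishing the correspondence between object-space coordinates (x, y, z) and texture-space coordinates (u, v).

Texture mapping is an important part of producing realistic images: it makes it easy to create highly realistic graphics without spending too much time on the details of object surfaces.

In OpenGL, texture mapping is used as follows:

1. Enable texture mapping

2. Load the texture data

3. Set the texture mapping parameters

4. Generate texture coordinates

In addition, OpenGL texture objects effectively reduce copying of texture data from host memory to texture memory.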

| Function | Description | Notes |
| --- | --- | --- |
| glGenTextures | generate texture names | |
| glBindTexture | bind a named texture to a texturing target | |
| glDeleteTextures | delete named textures | |
| glTexImage2D | specify a two-dimensional texture image | |
| glTexParameter | set texture parameters | How the texture is scaled (filtered) and repeated (wrapped) during mapping. |
| glTexEnv | set texture environment parameters | Controls how the texture is combined with the fragment color. |
| glTexGen | control the generation of texture coordinates | |
| glTexCoord | set the current texture coordinates | |
| glTexCoordPointer | define an array of texture coordinates | |
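To make texels and the wrapping behavior set through glTexParameter concrete, here is a sketch of a nearest-texel lookup with GL_REPEAT-style wrapping, where the integer part of a texture coordinate is ignored. The Texture2D struct and sampleRepeatNearest() are hypothetical, and the texel rows are assumed to be stored in the order glTexImage2D reads them, starting at t = 0.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <vector>

// Tiny RGB texture (hypothetical type): texels[row * width + col], row 0 at t = 0.
struct Texture2D {
    int width;
    int height;
    std::vector<std::array<unsigned char, 3>> texels;
};

std::array<unsigned char, 3> sampleRepeatNearest(const Texture2D& tex, double s, double t) {
    s -= std::floor(s);  // GL_REPEAT: keep only the fractional part
    t -= std::floor(t);
    // GL_NEAREST: pick the texel containing (s, t); min() guards against rounding up to 1.0.
    const int col = std::min(static_cast<int>(s * tex.width), tex.width - 1);
    const int row = std::min(static_cast<int>(t * tex.height), tex.height - 1);
    return tex.texels[row * tex.width + col];
}
```

On a 2 × 2 texture, the coordinate (1.25, 1.75) wraps to (0.25, 0.75) and therefore returns the same texel as (0.25, 0.75), which is how a repeated texture tiles across a surface.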

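OSG wraps OpenGL texture mapping: osg::Texture (osg::Texture1D, osg::Texture2D, osg::Texture3D) defines the texture data; osg::TexEnv sets the texture environment parameters, mainly controlling how the texture is combined with the fragment color; texture coordinates are specified on osg::Geometry or generated automatically by osg::TexGen.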

```cpp
// Texture.cpp : This file contains the 'main' function. Program execution begins and ends there.
//
#include <osg/ArgumentParser>
#include <osg/Group>
#include <osg/Image>
#include <osg/StateSet>
#include <osg/TexEnv>
#include <osg/TexGen>
#include <osg/Texture2D>
#include <osgDB/ReadFile>
#include <osgViewer/Viewer>

int main(int argc, char *argv[])
{
    osg::ArgumentParser arguments(&argc, argv);
    osg::ref_ptr<osgViewer::Viewer> viewer = new osgViewer::Viewer(arguments);
    osg::ref_ptr<osg::Group> root = new osg::Group();
    viewer->setSceneData(root.get());

    // Read geometry data
    osg::ref_ptr<osg::Node> geom = osgDB::readRefNodeFile("../../OpenSceneGraph-Data/glider.osg");
    if (!geom) return 1;  // model not found
    root->addChild(geom.get());

    // StateSet
    //osg::ref_ptr<osg::StateSet> ss = root->getOrCreateStateSet();
    //osg::ref_ptr<osg::StateSet> ss = geom->getOrCreateStateSet();
    //ss->setMode(GL_LIGHTING, osg::StateAttribute::OFF);
    osg::ref_ptr<osg::StateSet> ss = new osg::StateSet();
    geom->setStateSet(ss.get());

    // Enable texture mapping on texture unit 1
    ss->setTextureMode(1, GL_TEXTURE_2D, osg::StateAttribute::ON | osg::StateAttribute::OVERRIDE);

    // Load texture data (Image::setFileName() alone would only record the name, not read the pixels)
    osg::ref_ptr<osg::Image> img = osgDB::readRefImageFile("../../OpenSceneGraph-Data/Images/osg256.png");
    if (!img) return 1;  // image not found
    osg::ref_ptr<osg::Texture2D> tex = new osg::Texture2D();
    tex->setImage(img.get());
    //ss->setTextureAttribute(1, tex, osg::StateAttribute::ON);
    ss->setTextureAttributeAndModes(1, tex.get(), osg::StateAttribute::ON | osg::StateAttribute::OVERRIDE);

    // Texture environment: how the texel is combined with the fragment color
    osg::ref_ptr<osg::TexEnv> texenv = new osg::TexEnv;
    texenv->setMode(osg::TexEnv::ADD);
    texenv->setColor(osg::Vec4(0.6, 0.6, 0.6, 0.0));
    ss->setTextureAttribute(1, texenv.get(), osg::StateAttribute::ON | osg::StateAttribute::OVERRIDE);

    // Generate texture coordinates for unit 1 automatically
    osg::ref_ptr<osg::TexGen> texgen = new osg::TexGen();
    //texgen->setMode(osg::TexGen::SPHERE_MAP);
    //ss->setTextureAttribute(1, texgen, osg::StateAttribute::ON);
    ss->setTextureAttributeAndModes(1, texgen.get(), osg::StateAttribute::ON | osg::StateAttribute::OVERRIDE);

    // Execute a main frame loop
    return viewer->run();
}
```

4.4 Fog

4.5 Scissor Test

4.6 Alpha Test

4.7 Stencil Test

4.8 Depth Test

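In OSG, after a vertex's local coordinates pass through the model, view, and projection matrices and the perspective division, they become normalized device coordinates:

$$(x_c, y_c, z_c, w_c) = V_{local} \cdot M_{model} \cdot M_{view} \cdot M_{projection}, \qquad (x_{ndc}, y_{ndc}, z_{ndc}) = \left( \frac{x_c}{w_c}, \frac{y_c}{w_c}, \frac{z_c}{w_c} \right)$$

where $z_{ndc}$ is the vertex's depth value (the viewport transform then maps it into the range set by glDepthRange()).

When depth testing is enabled, OpenGL compares each fragment's depth value with the value in the depth buffer. If the test passes, the value in the depth buffer is updated; if it fails, the fragment is discarded. Depth testing guarantees that the result is the same regardless of the order in which the polygons are drawn.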

Ref. from OpenGL Wiki Depth_Test ======================================

The Depth Test is a per-sample processing operation performed after the Fragment Shader (and sometimes before). The Fragment's output depth value may be tested against the depth of the sample being written to. If the test fails, the fragment is discarded. If the test passes, the depth buffer will be updated with the fragment's output depth, unless a subsequent per-sample operation prevents it (such as turning off depth writes).

====================================== Ref. from OpenGL Wiki Depth_Test

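By default, depth testing is disabled in OpenGL.

In OpenGL, glEnable(GL_DEPTH_TEST) enables depth testing; glDepthFunc() changes the comparison used by the test; glDepthMask() controls whether the depth buffer can be written; and glDepthRange() sets the range of depth values in window coordinates after the viewport transform.

Note: when geometry in the scene is semi-transparent, you should usually call glDepthMask(GL_FALSE) so that it does not write the depth buffer. Otherwise, it may hide geometry behind it that is drawn later.

osg::Depth wraps the depth-test settings of glDepthFunc(), glDepthMask(), and glDepthRange(). To actually enable depth testing, call osg::StateSet::setMode(GL_DEPTH_TEST, osg::StateAttribute::ON).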
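A toy model of the per-fragment depth test with glDepthFunc(GL_LESS) (OpenGL's default comparison) and a depth write mask, as a sketch; the Fragment and Framebuffer types are hypothetical. It shows the two points above: with depth writes enabled the nearer fragment wins whatever the drawing order, and with glDepthMask(GL_FALSE) a surface leaves the depth buffer untouched, so it cannot hide fragments drawn after it.

```cpp
#include <vector>

// Hypothetical types: a fragment covering pixel x, and a 1-D framebuffer.
struct Fragment {
    int x;
    double depth;  // window-space depth in [0, 1]
    int color;
};

struct Framebuffer {
    std::vector<double> depth;  // cleared to 1.0, the far end of the default glDepthRange(0, 1)
    std::vector<int> color;
    bool depthWrite = true;     // glDepthMask(GL_TRUE) / glDepthMask(GL_FALSE)

    explicit Framebuffer(int width) : depth(width, 1.0), color(width, 0) {}

    // Depth test with glDepthFunc(GL_LESS).
    void draw(const Fragment& f) {
        if (!(f.depth < depth[f.x])) return;   // test fails: discard the fragment
        color[f.x] = f.color;                  // test passes: write the color...
        if (depthWrite) depth[f.x] = f.depth;  // ...and, unless masked, the depth
    }
};
```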

4.9 Blending

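Blending combines two colors. More specifically, the color already at a pixel position and the color about to be drawn there are mixed in some way to achieve special effects. Blending is a common technique for implementing transparency: a transparent object (or part of one) is not a solid color, and its color combines, at varying strengths, its own color with the colors of the objects behind it. Blending is mainly used to implement semi-transparent effects.

In blending, the color about to be drawn is called the source color, and the color already there is called the destination color.

By default, blending is disabled in OpenGL; OpenGL then simply replaces the destination color in the color buffer with the source color.

After blending is enabled with glEnable(GL_BLEND), OpenGL takes the source and destination colors, multiplies each by a coefficient (the source factor and the destination factor, respectively), and adds the results to obtain the new color. The operation need not be addition: newer OpenGL versions let glBlendEquation() choose addition, subtraction, reverse subtraction, minimum, or maximum, while logical operations are selected separately with glLogicOp().

By default, the blend equation is the additive one (GL_FUNC_ADD), and the final color is

$$C_{result} = C_{source} \cdot F_{source} + C_{destination} \cdot F_{destination}$$

where

- $C_{source}$: the source color vector
- $F_{source}$: the source factor
- $C_{destination}$: the destination color vector
- $F_{destination}$: the destination factor
- $C_{result}$: the final color

In OpenGL, glBlendFunc() sets the source and destination factors, commonly GL_SRC_ALPHA and GL_ONE_MINUS_SRC_ALPHA respectively; glBlendEquation() sets the blend equation, usually GL_FUNC_ADD; and glBlendColor() sets GL_BLEND_COLOR, which defaults to (0, 0, 0, 0).

void glBlendFunc(GLenum sfactor, GLenum dfactor)

void glBlendEquation(GLenum mode)

void glBlendColor(GLclampf red, GLclampf green, GLclampf blue, GLclampf alpha)

In OSG, color blending involves osg::BlendColor, osg::BlendEquation, and osg::BlendFunc. osg::BlendColor sets the constant color; osg::BlendEquation defines the blend equation (GL_FUNC_ADD by default); osg::BlendFunc sets the source and destination factors. To enable blending, call osg::StateSet::setMode(GL_BLEND, osg::StateAttribute::ON).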
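The default additive equation with the common GL_SRC_ALPHA / GL_ONE_MINUS_SRC_ALPHA factors, as a per-channel sketch. The RGBA alias and blendSrcAlpha() are hypothetical names; as with glBlendFunc(), the same two factors are applied to all four channels, including alpha.

```cpp
#include <array>

using RGBA = std::array<double, 4>;

// result = src * F_src + dst * F_dst per channel (GL_FUNC_ADD), with
// F_src = GL_SRC_ALPHA and F_dst = GL_ONE_MINUS_SRC_ALPHA.
RGBA blendSrcAlpha(const RGBA& src, const RGBA& dst) {
    const double sf = src[3];        // GL_SRC_ALPHA
    const double df = 1.0 - src[3];  // GL_ONE_MINUS_SRC_ALPHA
    RGBA out{};
    for (int i = 0; i < 4; ++i) out[i] = src[i] * sf + dst[i] * df;
    return out;
}
```

Drawing red at alpha 0.25 over opaque blue gives (0.25, 0, 0.75) for the color channels: a quarter of the new color and three quarters of what was behind it.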

4.10 Shaders

5. Discussion

Appendix: FAQ

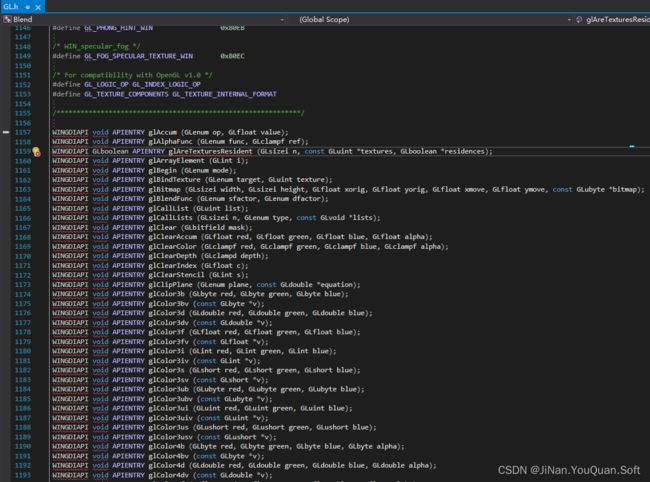

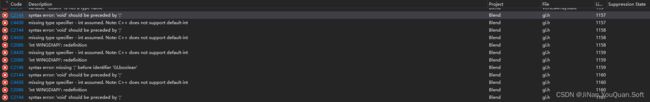

Q1. On Windows, when writing a test program in Visual Studio 2019, the compiler does not recognize the WINGDIAPI macro used in GL.h.

A1. Add the "WIN32" macro definition to the Visual Studio 2019 project.

References

Wang Rui (王锐). Design and Practice of the OpenSceneGraph 3D Rendering Engine (OpenSceneGraph三维渲染引擎设计与实践). Tsinghua University Press, 2009.

Paul Martz. OpenSceneGraph Quick Start Guide.

Xiao Peng (肖鹏). OpenSceneGraph 3D Rendering Engine Programming Guide (OpenSceneGraph三维渲染引擎编程指南). Tsinghua University Press, 2010.

Yang Huabin (杨化斌). OpenSceneGraph 3.0: 3D Visual Simulation Development Explained (OpenSceneGraph 3.0 三维视景仿真技术开发详解). National Defense Industry Press.

Phong, B. T. Illumination for Computer Generated Pictures. 1975.

Dave Shreiner. OpenGL Programming Guide (7th Edition). Addison-Wesley Professional, 2009.

Richard S. Wright, Jr. OpenGL SuperBible (5th Edition). Addison-Wesley, 2010.

Duan Fei (段菲). OpenGL Programming Fundamentals, 3rd Edition (OpenGL编程基础(第三版)). Tsinghua University Press, 2008.

Shoemake K, Duff T. Matrix Animation and Polar Decomposition. 1992.

Xiang Jie (项杰). Key Technologies of 3D Scene Construction in OSG (OSG中三维场景构建的关键技术). Geospatial Information (地理空间信息), 2012, 10(01): 43-45+2.

Online Resources

OpenSceneGraph: http://www.openscenegraph.org/

osgChina: http://osgchina/

OpenGL: https://www.opengl.org/

Microsoft OpenGL: https://docs.microsoft.com/en-us/windows/win32/opengl/opengl

docs.GL: https://docs.gl/

Modern OpenGL: https://glumpy.github.io/modern-gl.html

SongHo OpenGL: http://www.songho.ca/

Learn OpenGL: https://learnopengl.com/