【Golang】基于ETCD的gRPC服务发现和负载均衡

文章目录

- 基本使用方法

-

- server端注册服务

- client端发现服务

- 使用租约

- 重写负载均衡策略

- 参考资料

负载均衡的三种模式:https://grpc.io/blog/grpc-load-balancing/

- Proxy

- Thick client(Client side)

- Lookaside Load Balancing (Client side)

基本使用方法

server端注册服务

服务启动时将服务信息注册到kv中心

import(

...

"go.etcd.io/etcd/client/v3"

"go.etcd.io/etcd/client/v3/naming/endpoints"

...

)

func Register(etcdBrokers []string, srvName, addr string, ttl int64) error {

var err error

if cli == nil {

cli, err = clientv3.New(clientv3.Config{

Endpoints: etcdBrokers,

DialTimeout: 5 * time.Second,

})

if err != nil {

log.Printf("connect to etcd err:%s", err)

return err

}

}

em, err := endpoints.NewManager(cli, srvName)

if err != nil {

return err

}

err = em.AddEndpoint(context.TODO(), fmt.Sprintf("%v/%v",srvName, addr), endpoints.Endpoint{Addr: addr})

if err != nil {

return err

}

//TODO withAlive

return nil

}

func UnRegister(srvName, addr string) error {

if cli != nil {

em, err := endpoints.NewManager(cli, srvName)

if err != nil {

return err

}

err = em.DeleteEndpoint(context.TODO(), fmt.Sprintf("%v/%v", srvName, addr))

if err != nil {

return err

}

return err

}

return nil

}

服务启动时调用Register方法将监听的端口注册到注册中心,服务停止时调用UnRegister取消注册。

client端发现服务

实现 google.golang.org/grpc/resolver 包下Builder接口

// Builder creates a resolver that will be used to watch name resolution updates.

type Builder interface {

// Build creates a new resolver for the given target.

//

// gRPC dial calls Build synchronously, and fails if the returned error is

// not nil.

Build(target Target, cc ClientConn, opts BuildOptions) (Resolver, error)

// Scheme returns the scheme supported by this resolver.

// Scheme is defined at https://github.com/grpc/grpc/blob/master/doc/naming.md.

Scheme() string

}

etcd的官方gRPC naming and discovery实现:https://etcd.io/docs/v3.5/dev-guide/grpc_naming/

client Dail时使用对应的负载均衡策略:

import(

...

"go.etcd.io/etcd/client/v3/naming/resolver"

...

)

func NewClient(etcdBrokers []string, srvName string) (*Client, error) {

cli, err := clientv3.NewFromURLs(etcdBrokers)

etcdResolver, err := resolver.NewBuilder(cli)

// Set up a connection to the server.

addr := fmt.Sprintf("etcd:///%s", srvName) // "schema://[authority]/service"

conn, err := grpc.Dial(addr, grpc.WithInsecure(), grpc.WithKeepaliveParams(kacp), grpc.WithResolvers(etcdResolver))

if err != nil {

return nil, err

}

return &Client{

FileSrvClient: NewFileSrvClient(conn),

conn: conn,

}, nil

}

使用租约

由于服务可能会出现异常退出,导致不会调用UnRegister接口,所以需要使用Lease保持Etcd对服务的心跳检测。

在使用lease时可能会出现如下警告⚠️:

"lease keepalive response queue is full; dropping response send"警告

原因:

KeepAlive 尝试使给定的租约永远有效。如果发布到频道的保活响应没有被及时消耗,频道可能会变满。当已满时,租用客户端将继续向etcd服务器发送保持活动请求,但会丢弃响应,直到通道上有能力发送更多响应。(这时会进入打印warn的分支)

注册带心跳的服务节点:

func etcdAdd(c *clientv3.Client, lid clientv3.LeaseID, service, addr string) error {

em := endpoints.NewManager(c, service)

return em.AddEndpoint(c.Ctx(), service+"/"+addr, endpoints.Endpoint{Addr:addr}, clientv3.WithLease(lid));

}

// 如何获取leaseID

func test(etcdBrokers []string, srvName, addr string, ttl int64) {

var err error

if cli == nil {

cli, err = clientv3.New(clientv3.Config{

Endpoints: etcdBrokers,

DialTimeout: 5 * time.Second,

})

if err != nil {

log.Printf("connect to etcd err:%s", err)

return err

}

}

//get leaseID

resp, err := cli.Grant(context.TODO(), ttl)

if err != nil {

return err

}

err = etcdAdd(cli, resp.ID, srvName, addr)

if err != nil {

return err

}

// withAlive

ch, kaerr := cli.KeepAlive(context.TODO(), resp.ID)

if kaerr != nil {

return err

}

go func() {

for { //需要不断的取出lease的response

ka := <-ch

fmt.Println("ttl:", ka.TTL)

}

}

return nil

}

分析:

每一个LeaseID对应于一个唯一的KeepAlive, KeepAlive结构如下:

type lessor struct {

...

keepAlives map[LeaseID]*keepAlive

...

}

// keepAlive multiplexes a keepalive for a lease over multiple channels

type keepAlive struct {

chs []chan<- *LeaseKeepAliveResponse

ctxs []context.Context

// deadline is the time the keep alive channels close if no response

deadline time.Time

// nextKeepAlive is when to send the next keep alive message

nextKeepAlive time.Time

// donec is closed on lease revoke, expiration, or cancel.

donec chan struct{}

}

调用KeepAlive时会为Lease初始化一个16缓冲大小的LeaseKeepAliveResponse channel,用于接收不断从etcd Server读取的LeaseKeepAlive Response,并将这个channel添加到该Lease绑定的KeepAlive的channel数组中,

func (l *lessor) KeepAlive(ctx context.Context, id LeaseID) (<-chan *LeaseKeepAliveResponse, error) {

ch := make(chan *LeaseKeepAliveResponse, LeaseResponseChSize)

l.mu.Lock()

...

ka, ok := l.keepAlives[id]

if !ok {

// create fresh keep alive

ka = &keepAlive{

chs: []chan<- *LeaseKeepAliveResponse{ch},

ctxs: []context.Context{ctx},

deadline: time.Now().Add(l.firstKeepAliveTimeout),

nextKeepAlive: time.Now(),

donec: make(chan struct{}),

}

l.keepAlives[id] = ka

} else {

// add channel and context to existing keep alive

ka.ctxs = append(ka.ctxs, ctx)

ka.chs = append(ka.chs, ch)

}

l.mu.Unlock()

go l.keepAliveCtxCloser(ctx, id, ka.donec)

l.firstKeepAliveOnce.Do(func() {

go l.recvKeepAliveLoop()

go l.deadlineLoop()

})

return ch, nil

}

将接收到的LeaseKeepAliveResponse发送到对应Lease绑定的KeepAlive的所有channel中。

// recvKeepAlive updates a lease based on its LeaseKeepAliveResponse

func (l *lessor) recvKeepAlive(resp *pb.LeaseKeepAliveResponse) {

karesp := &LeaseKeepAliveResponse{

ResponseHeader: resp.GetHeader(),

ID: LeaseID(resp.ID),

TTL: resp.TTL,

}

...

ka, ok := l.keepAlives[karesp.ID]

...

for _, ch := range ka.chs {

select {

case ch <- karesp:

default:

if l.lg != nil {

l.lg.Warn("lease keepalive response queue is full; dropping response send",

zap.Int("queue-size", len(ch)),

zap.Int("queue-capacity", cap(ch)),

)

}

}

// still advance in order to rate-limit keep-alive sends

ka.nextKeepAlive = nextKeepAlive

}

}

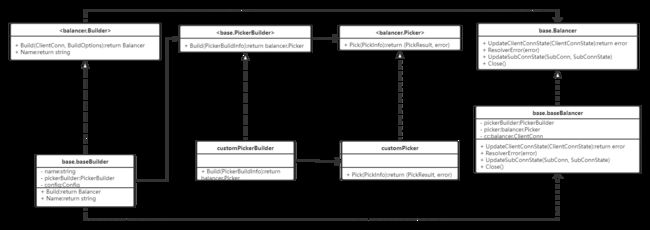

重写负载均衡策略

使用自定义的负载均衡策略需要在gRPC的 balancer.Register(balancer.Builder)注册解析器。

google.golang.org/grpc/balancer/base 包下的base.NewBalancerBuilder作为

实现了Balancer接口对节点状态的和子连接的管理。

所以我们只需要:

- 定义customPickerBuilder实现

,用于返回 。 - 定义customPicker实现

,用于返回具体的SubConn。

参考资料

老版本(仅参考):

- 【grpc-go基于etcd实现服务发现机制】http://morecrazy.github.io/2018/08/14/grpc-go%E5%9F%BA%E4%BA%8Eetcd%E5%AE%9E%E7%8E%B0%E6%9C%8D%E5%8A%A1%E5%8F%91%E7%8E%B0%E6%9C%BA%E5%88%B6/#l3–l4%E4%BC%A0%E8%BE%93%E5%B1%82%E4%B8%8El7%E5%BA%94%E7%94%A8

- 【gRPC负载均衡(自定义负载均衡策略)】https://www.cnblogs.com/FireworksEasyCool/p/12924701.html