Apache Ignite 使用SQL模式

Ignite 带有符合 ANSI-99、水平可扩展和容错的分布式 SQL 数据库。根据用例,通过跨集群节点对数据进行分区或完全复制来提供分布。

作为 SQL 数据库,Ignite 支持所有 DML 命令,包括 SELECT、UPDATE、INSERT 和 DELETE 查询,并且还实现了与分布式系统相关的 DDL 命令子集。

您可以通过连接来自外部工具和应用程序的JDBC或ODBC驱动程序与 Ignite 进行交互,就像与任何其他启用了 SQL 的存储一样。Java、.NET 和 C++ 开发人员可以利用本机 SQL API。

在内部,SQL 表与键值缓存具有相同的数据结构。这意味着您可以更改数据的分区分布并利用亲和力托管技术来获得更好的性能。

Schema定义

系统模式

ignite 有许多默认模式并支持创建自定义模式(SCHEMA)。

默认情况下有两种可用的模式:

-

SYS 模式,其中包含许多带有集群节点信息的系统视图。您不能在此架构中创建表。有关详细信息,请参阅系统视图页面。

-

PUBLIC 架构,在未指定架构时默认使用。

自定义模式

可以通过 的sqlSchemas属性设置自定义模式IgniteConfiguration。您可以在启动集群之前在配置中指定模式列表,然后在运行时在这些模式中创建对象。

IgniteConfiguration cfg = new IgniteConfiguration();

SqlConfiguration sqlCfg = new SqlConfiguration();

sqlCfg.setSqlSchemas("MY_SCHEMA", "MY_SECOND_SCHEMA" );

cfg.setSqlConfiguration(sqlCfg);JDBC 驱动程序

Ignite 附带 JDBC 驱动程序,允许使用标准 SQL 语句(如SELECT、INSERT)UPDATE或DELETE直接从 JDBC 端处理分布式数据。

目前,Ignite 支持两种驱动程序:本文档中描述的轻量级且易于使用的 JDBC Thin Driver 和通过客户端节点与集群交互的JDBC Client Driver 。

驱动程序类的名称是org.apache.ignite.IgniteJdbcThinDriver。例如,您可以通过以下方式打开与侦听 IP 地址 192.168.0.50 的集群节点的 JDBC 连接:

// Register JDBC driver.

Class.forName("org.apache.ignite.IgniteJdbcThinDriver");

// Open the JDBC connection.

Connection conn = DriverManager.getConnection("jdbc:ignite:thin://127.0.0.1");代码测试

创建JavaBean

import java.io.Serializable;

import java.util.concurrent.atomic.AtomicLong;

import org.apache.ignite.cache.affinity.AffinityKey;

import org.apache.ignite.cache.query.annotations.QuerySqlField;

import org.apache.ignite.cache.query.annotations.QueryTextField;

/**

* Person class.

*/

public class PersonNew implements Serializable {

/** */

private static final AtomicLong ID_GEN = new AtomicLong();

/** Name of index by two fields (orgId, salary). */

public static final String ORG_SALARY_IDX = "ORG_SALARY_IDX";

/** Person ID (indexed). */

@QuerySqlField(index = true)

public Long id;

/** Organization ID (indexed). */

@QuerySqlField(index = true, orderedGroups = @QuerySqlField.Group(name = ORG_SALARY_IDX, order = 0))

public Long orgId;

/** First name (not-indexed). */

@QuerySqlField

public String firstName;

/** Last name (not indexed). */

@QuerySqlField

public String lastName;

/** Resume text (create LUCENE-based TEXT index for this field). */

@QueryTextField

public String resume;

/** Salary (indexed). */

@QuerySqlField(index = true, orderedGroups = @QuerySqlField.Group(name = ORG_SALARY_IDX, order = 1))

public double salary;

/** Custom cache key to guarantee that person is always colocated with its organization. */

private transient AffinityKey key;

/**

* Default constructor.

*/

public PersonNew() {

// No-op.

}

// /**

// * Constructs person record.

// *

// * @param org Organization.

// * @param firstName First name.

// * @param lastName Last name.

// * @param salary Salary.

// * @param resume Resume text.

// */

// public Person(Organization org, String firstName, String lastName, double salary, String resume) {

// // Generate unique ID for this person.

// id = ID_GEN.incrementAndGet();

//

// orgId = org.id();

//

// this.firstName = firstName;

// this.lastName = lastName;

// this.salary = salary;

// this.resume = resume;

// }

/**

* Constructs person record.

*

* @param id Person ID.

* @param orgId Organization ID.

* @param firstName First name.

* @param lastName Last name.

* @param salary Salary.

* @param resume Resume text.

*/

public PersonNew(Long id, Long orgId, String firstName, String lastName, double salary, String resume) {

this.id = id;

this.orgId = orgId;

this.firstName = firstName;

this.lastName = lastName;

this.salary = salary;

this.resume = resume;

}

/**

* Constructs person record.

*

* @param id Person ID.

* @param firstName First name.

* @param lastName Last name.

*/

public PersonNew(Long id, String firstName, String lastName) {

this.id = id;

this.firstName = firstName;

this.lastName = lastName;

}

/**

* Gets cache affinity key. Since in some examples person needs to be collocated with organization, we create

* custom affinity key to guarantee this collocation.

*

* @return Custom affinity key to guarantee that person is always collocated with organization.

*/

public AffinityKey key() {

if (key == null)

key = new AffinityKey<>(id, orgId);

return key;

}

/**

* {@inheritDoc}

*/

@Override public String toString() {

return "Person [id=" + id +

", orgId=" + orgId +

", lastName=" + lastName +

", firstName=" + firstName +

", salary=" + salary +

", resume=" + resume + ']';

}

}

创建测试类

package ignite.sql;

import org.apache.ignite.Ignite;

import org.apache.ignite.IgniteCache;

import org.apache.ignite.Ignition;

import org.apache.ignite.cache.query.FieldsQueryCursor;

import org.apache.ignite.cache.query.SqlFieldsQuery;

import org.apache.ignite.configuration.CacheConfiguration;

import org.apache.ignite.configuration.IgniteConfiguration;

import org.apache.ignite.spi.discovery.tcp.TcpDiscoverySpi;

import org.apache.ignite.spi.discovery.tcp.ipfinder.multicast.TcpDiscoveryMulticastIpFinder;

import javax.cache.Cache;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

public class PersonBean {

/**

* Persons cache name.

*/

private static final String PERSON_CACHE = PersonBean.class.getSimpleName() + "PersonNew";

public static void main(String[] args) {

// Preparing IgniteConfiguration using Java APIs

IgniteConfiguration cfg = new IgniteConfiguration();

// The node will be started as a client node.

cfg.setClientMode(true);

// Classes of custom Java logic will be transferred over the wire from this app.

cfg.setPeerClassLoadingEnabled(true);

// Setting up an IP Finder to ensure the client can locate the servers.

TcpDiscoveryMulticastIpFinder ipFinder = new TcpDiscoveryMulticastIpFinder();

ArrayList strings = new ArrayList<>();

strings.add("127.0.0.1:47500..47509");

//ipFinder.setAddresses(Collections.singletonList("192.168.165.42:47500..47509"));

ipFinder.setAddresses(strings);

cfg.setDiscoverySpi(new TcpDiscoverySpi().setIpFinder(ipFinder));

try (Ignite ignite = Ignition.start(cfg)) {

CacheConfiguration personCacheCfg = new CacheConfiguration<>(PERSON_CACHE);

personCacheCfg.setIndexedTypes(Long.class, PersonNew.class);

IgniteCache personCache = ignite.getOrCreateCache(personCacheCfg);

// Insert persons.

// SqlFieldsQuery qry = new SqlFieldsQuery(

// "insert into PersonNew (_key, id, orgId, firstName, lastName, salary, resume) values (?, ?, ?, ?, ?, ?, ?)");

//

// personCache.query(qry.setArgs(1L, 1L, 1L, "John", "Doe", 4000.00, "Master"));

// personCache.query(qry.setArgs(2L, 2L, 1L, "Jane", "Roe", 2000.00, "Bachelor"));

// personCache.query(qry.setArgs(3L, 3L, 2L, "Mary", "Major", 5000.00, "Master"));

// personCache.query(qry.setArgs(4L, 4L, 2L, "Richard", "Miles", 3000.00, "Bachelor"));

PersonNew personNew = personCache.get(1L);

System.out.println(personNew);

PersonNew p2 = new PersonNew(5L, 5L, "city", "bob", 5000.00, "Master");

personCache.put(5L, p2);

SqlFieldsQuery query = new SqlFieldsQuery("SELECT * FROM PersonNew ");

FieldsQueryCursor> cursor = personCache.query(query);

Iterator> iterator = cursor.iterator();

System.out.println("Query result:");

while (iterator.hasNext()) {

List row = iterator.next();

// System.out.println(">>> " + row.get(0) + ", " + row.get(1));

//System.out.println(">>> " + row.get(0));

System.out.println(row);

}

// Cache cache = Ignition.ignite().cache();

// Create query which selects salaries based on range for all employees

// that work for a certain company.

// CacheQuery> qry = cache.queries().createSqlQuery(Person.class,

// "from Person, Organization where Person.orgId = Organization.id " +

// "and Organization.name = ? and Person.salary > ? and Person.salary <= ?");

// Query all nodes to find all cached Ignite employees

// with salaries less than 1000.

//qry.execute("Ignition", 0, 1000);

// Query only remote nodes to find all remotely cached Ignite employees

// with salaries greater than 1000 and less than 2000.

// qry.projection(grid.remoteProjection()).execute("Ignition", 1000, 2000);

}

}

}

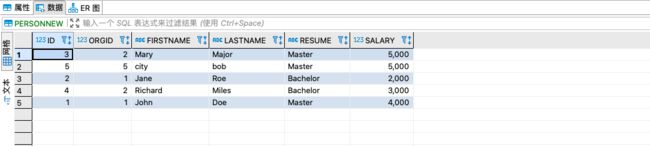

通过DBeaver查看创建的表,默认会创建一个库。

创建连接

查看表结构

查看表数据