ubuntu22.04@laptop OpenCV Get Started: 007_color_spaces

- 1. 源由

- 2. 颜色空间

-

- 2.1 RGB颜色空间

- 2.2 LAB颜色空间

- 2.3 YCrCb颜色空间

- 2.4 HSV颜色空间

- 3 代码工程结构

-

- 3.1 C++应用Demo

- 3.2 Python应用Demo

- 4. 重点分析

-

- 4.1 interactive_color_detect

- 4.2 interactive_color_segment

- 4.3 data_analysis

-

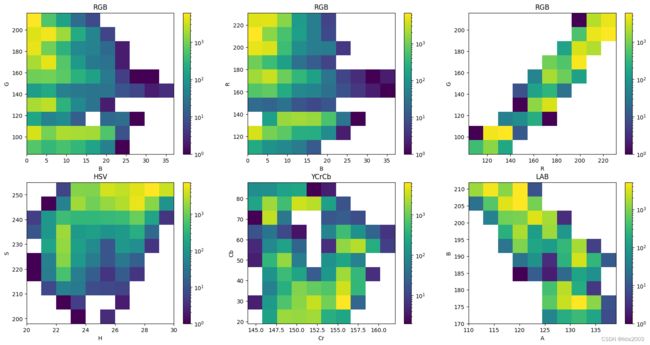

- 4.3.1 黄色

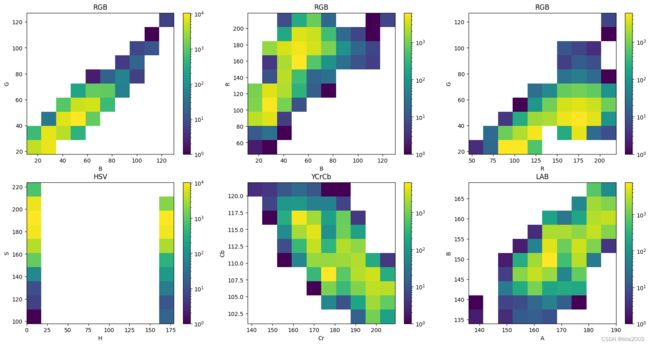

- 4.3.2 红色

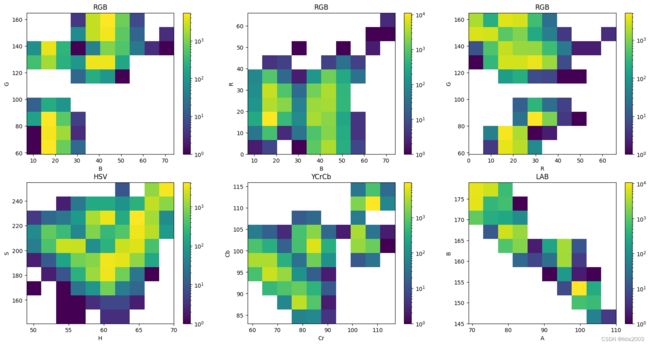

- 4.3.3 蓝色

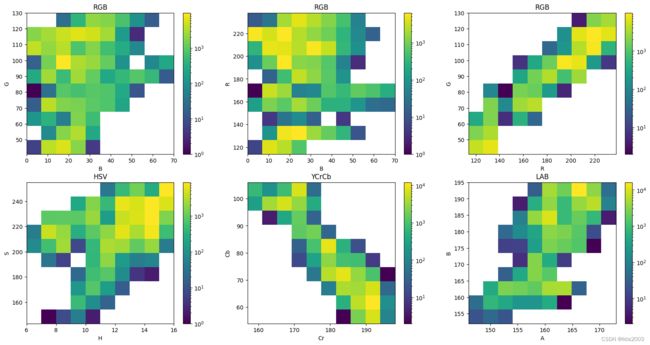

- 4.3.4 绿色

- 4.3.5 橙色

- 5. 总结

- 6. 参考资料

- 7. 补充

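1. Motivation

In this chapter, we look at the popular color spaces used in computer vision and use them for color-based segmentation.

- Definitions of the different color spaces

- Color-based image segmentation

I remember someone once asking: what is science? Or rather, what does the definition of science mean to us?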

Science is a rigorous, systematic endeavor that builds and organizes knowledge in the form of testable explanations and predictions about the world.

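Let me expand on this topic a little. From an engineering point of view, my personal feeling is that the domestic climate currently leans toward impatience and short-term gain, because engineering is mostly understood, in companies and in society, as a way to solve problems.

In my view, the definition of science on Wikipedia is very clear. In other words, it is about logic and reasoning:

- A body of knowledge

- Explainable

- Predictable

Because companies chase profit and society steers in the same direction, too much attention goes to solving practical problems at a surface level, while the logic and reasoning are neglected. As a result, once a problem appears to be solved, people consider the job done and call it a success. The real essence is never explained or studied in depth, so science cannot be applied to productivity as well as it could be.

This is also why, as many of you may have seen, plenty of people seem able to solve a problem but cannot explain it clearly in words, or even write it down on paper: the fix addresses only the symptom, and the underlying problem was never understood.

I may have said a lot of "nonsense" here, but I hope this impatient attitude toward science and technology can improve!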

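2. Color spaces

A color space can be understood simply as the way color is expanded in a particular coordinate system.

In an orthogonal coordinate system, the unit vectors are usually understood to be decoupled from one another. In a non-orthogonal system, the unit vectors are loosely or even tightly coupled. The coordinate system in which a color is expanded therefore affects how its features are judged.

Computer vision faces a similar issue: it is a process of expressing physics in the language of computers. This highlights the importance of the fundamental disciplines, which provide solutions through definitions, basic principles, and logic. Of course, on the application side, simply borrowing existing solutions can also get the job done well, but without the underlying reasoning and the ability to analyze complex problems, later research and development will be held back.

I hope these scattered thoughts prompt some reflection and discussion about today's impatient R&D atmosphere. Of course, the overall direction is good, it is just that...

Broadly, the following color spaces are used in computer vision:

- RGB color space

- LAB color space

- YCrCb color space

- HSV color space

2.1 RGB color space

Definition: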

A linear combination of Red, Green, and Blue values.

The three channels are correlated by the amount of light hitting the surface.

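Comparing the same object under different lighting conditions shows:

- Significant perceptual non-uniformity

- Chrominance (color-related information) and luminance (intensity-related information) are mixed together

In other words, the coordinates are not decoupled but correlated: when the brightness changes, R, G, and B all change.

By default, OpenCV loads image files in BGR channel order: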

C++:

//C++

cv::Mat bright = cv::imread("cube1.jpg");

cv::Mat dark = cv::imread("cube8.jpg");

Python:

#python

bright = cv2.imread('cube1.jpg')

dark = cv2.imread('cube8.jpg')
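
To make the coupling between R, G, and B concrete, here is a small pure-Python sketch. It assumes, as a simplification, that a lighting change scales all three channels by the same factor; the sample color, the factor of one half, and the helper `chromaticity` are made up for illustration and are not part of the demo code.

```python
import math


def chromaticity(rgb):
    """Divide each channel by the channel sum, removing overall intensity."""
    total = sum(rgb)
    return tuple(c / total for c in rgb)


# Hypothetical surface color as (R, G, B) under bright light.
bright = (200, 100, 50)

# Simplifying assumption: dimmer light halves every channel.
dark = tuple(c // 2 for c in bright)  # (100, 50, 25)

# Every RGB coordinate moved, although the surface color is the same.
all_channels_changed = all(a != b for a, b in zip(bright, dark))

# Once intensity is divided out, the two observations coincide.
same_chromaticity = all(
    math.isclose(p, q)
    for p, q in zip(chromaticity(bright), chromaticity(dark))
)

print("bright:", bright, "dark:", dark)
print("all channels changed:", all_channels_changed)
print("same chromaticity:", same_chromaticity)
```

Real lighting changes are not a pure scaling, so this only illustrates why a fixed box in RGB tends to miss the same object once the brightness shifts.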

2.2 LAB color space

Definition:

L – Lightness ( Intensity ).

a – color component ranging from Green to Magenta.

b – color component ranging from Blue to Yellow.

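- A perceptually uniform color space that approximates how we perceive color

- Independent of the device (capture or display)

- Used extensively in Adobe Photoshop

- Related to the RGB color space by a complex transformation equation

BGR conversion: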

C++:

//C++

cv::Mat brightLAB, darkLAB;

cv::cvtColor(bright, brightLAB, cv::COLOR_BGR2LAB);

cv::cvtColor(dark, darkLAB, cv::COLOR_BGR2LAB);

Python:

#python

brightLAB = cv2.cvtColor(bright, cv2.COLOR_BGR2LAB)

darkLAB = cv2.cvtColor(dark, cv2.COLOR_BGR2LAB)
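
When reading the values produced by the conversion above, it helps to know that for 8-bit images OpenCV packs the result into 0..255 (L is scaled by 255/100, and a and b are shifted by 128). The sketch below undoes that packing in plain Python; the helper name `lab8_to_cie` is hypothetical and only for illustration.

```python
def lab8_to_cie(l8, a8, b8):
    """Undo OpenCV's 8-bit Lab packing (L*255/100, a+128, b+128)."""
    return l8 * 100.0 / 255.0, a8 - 128, b8 - 128


# In the 8-bit encoding, a == b == 128 means "no color" (neutral gray axis).
white = lab8_to_cie(255, 128, 128)
black = lab8_to_cie(0, 128, 128)

print("white:", white)
print("black:", black)
```

A practical consequence is that thresholds on the a and b channels of an 8-bit image are centered on 128 rather than on 0.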

2.3 YCrCb color space

Definition:

Y – Luminance or Luma component obtained from RGB after gamma correction.

Cr = R – Y ( how far is the red component from Luma ).

Cb = B – Y ( how far is the blue component from Luma ).

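- The luminance component behaves much as it does in LAB

- Compared with LAB, the perceptual difference between red and orange is smaller, even in the outdoor image

- For white, all three components change

BGR conversion: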

C++:

//C++

cv::Mat brightYCB, darkYCB;

cv::cvtColor(bright, brightYCB, cv::COLOR_BGR2YCrCb);

cv::cvtColor(dark, darkYCB, cv::COLOR_BGR2YCrCb);

Python:

#python

brightYCB = cv2.cvtColor(bright, cv2.COLOR_BGR2YCrCb)

darkYCB = cv2.cvtColor(dark, cv2.COLOR_BGR2YCrCb)
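
The definitions above (Cr = R – Y, Cb = B – Y) are the idea in simplified form. The sketch below spells out the formula documented for OpenCV's 8-bit conversion, with its scale factors and the offset of 128, in plain Python. The function name `bgr_to_ycrcb` is hypothetical, and because OpenCV uses its own integer arithmetic internally, its results may differ from this floating-point version by one count.

```python
def bgr_to_ycrcb(b, g, r, delta=128):
    """Floating-point version of the documented 8-bit BGR -> YCrCb formula."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + delta  # how far red is from luma, re-centered
    cb = (b - y) * 0.564 + delta  # how far blue is from luma, re-centered

    def clip(v):
        # Round to the nearest integer and saturate to the 8-bit range.
        return max(0, min(255, int(round(v))))

    return clip(y), clip(cr), clip(cb)


print("white:", bgr_to_ycrcb(255, 255, 255))
print("gray: ", bgr_to_ycrcb(100, 100, 100))
print("red:  ", bgr_to_ycrcb(0, 0, 255))
```

Neutral colors land on Cr = Cb = 128 regardless of brightness, which is what makes the two chroma channels usable for thresholding.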

2.4 HSV color space

Definition:

H – Hue ( Dominant Wavelength ).

S – Saturation ( Purity / shades of the color ).

V – Value ( Intensity ).

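- The H component is very similar in both images, which indicates that the color information stays intact even when the illumination changes

- The S component is also very similar in both images

- The V component captures the amount of light falling on the object, so it changes with the illumination

- The values of the red piece differ drastically between the outdoor and indoor images. This is because hue is represented as a circle and red sits at the starting angle, so it can take values in both [300, 360] and [0, 60]

BGR conversion: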

C++:

//C++

cv::Mat brightHSV, darkHSV;

cv::cvtColor(bright, brightHSV, cv::COLOR_BGR2HSV);

cv::cvtColor(dark, darkHSV, cv::COLOR_BGR2HSV);

Python:

#python

brightHSV = cv2.cvtColor(bright, cv2.COLOR_BGR2HSV)

darkHSV = cv2.cvtColor(dark, cv2.COLOR_BGR2HSV)
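
The wrap-around of red has a practical consequence for thresholding. For 8-bit images OpenCV stores hue as degrees divided by two, so H runs from 0 to 179, and a red band that straddles 0 degrees has to be expressed as two separate ranges. The following plain-Python sketch shows the bookkeeping; the helper names and the choice of the [300, 360] and [0, 60] band from the text above are for illustration only.

```python
def to_opencv_hue(degrees):
    """Map a hue angle in degrees to OpenCV's 8-bit H channel (0..179)."""
    return int(degrees % 360) // 2


# Red band from the text, in degrees.
RED_BANDS_DEG = [(300, 360), (0, 60)]


def red_bands_opencv():
    """Return the red band as (low, high) pairs on the 0..179 scale."""
    bands = []
    for lo, hi in RED_BANDS_DEG:
        # 360 degrees is the same angle as 0, so cap the upper end at 359.
        bands.append((to_opencv_hue(lo), to_opencv_hue(min(hi, 359))))
    return bands


def is_red_hue(h8):
    """True if an 8-bit hue value falls in either half of the red band."""
    return any(lo <= h8 <= hi for lo, hi in red_bands_opencv())


print("red bands on the 0..179 scale:", red_bands_opencv())
print("H=5:", is_red_hue(5), "H=175:", is_red_hue(175), "H=90:", is_red_hue(90))
```

With OpenCV, each band would typically become one `cv2.inRange` call, and the two masks would be combined with `cv2.bitwise_or`.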

3. Project structure

3.1 C++ demo application

Structure of the C++ demo project:

007_color_spaces/CPP$ tree . -L 1

.

├── CMakeLists.txt

├── interactive_color_detect.cpp

├── interactive_color_segment.cpp

├── images

└── pieces

2 directories, 3 files

Before building the C++ examples, confirm the OpenCV installation path:

$ find /home/daniel/ -name "OpenCVConfig.cmake"

/home/daniel/OpenCV/installation/opencv-4.9.0/lib/cmake/opencv4/

/home/daniel/OpenCV/opencv/build/OpenCVConfig.cmake

/home/daniel/OpenCV/opencv/build/unix-install/OpenCVConfig.cmake

$ export OpenCV_DIR=/home/daniel/OpenCV/installation/opencv-4.9.0/lib/cmake/opencv4/

Build and run the C++ demo project:

$ mkdir build

$ cd build

$ cmake ..

$ cmake --build . --config Release

$ cd ..

$ ./build/interactive_color_detect

$ ./build/interactive_color_segment

3.2 Python demo application

Structure of the Python demo project:

007_color_spaces/Python$ tree . -L 1

.

├── data_analysis.py

├── interactive_color_detect.py

├── interactive_color_segment.py

├── images

└── pieces

2 directories, 3 files

Run the Python demo project:

$ sudo apt-get install tcl-dev tk-dev python-tk python3-tk

$ workoncv-4.9.0

$ pip install PyQt5 PySide2

$ python interactive_color_detect.py

$ python interactive_color_segment.py

$ python data_analysis.py

4. Key points

4.1 interactive_color_detect

- cvtColor(src, dst, code)

Get the color coordinates of a single pixel in the image:

C++:

Vec3b bgrPixel(img.at<Vec3b>(y, x));

Mat3b hsv,ycb,lab;

// Create Mat object from vector since cvtColor accepts a Mat object

Mat3b bgr (bgrPixel);

//Convert the single pixel BGR Mat to other formats

cvtColor(bgr, ycb, COLOR_BGR2YCrCb);

cvtColor(bgr, hsv, COLOR_BGR2HSV);

cvtColor(bgr, lab, COLOR_BGR2Lab);

//Get back the vector from Mat

Vec3b hsvPixel(hsv.at<Vec3b>(0,0));

Vec3b ycbPixel(ycb.at<Vec3b>(0,0));

Vec3b labPixel(lab.at<Vec3b>(0,0));

Python:

# get the value of pixel from the location of mouse in (x,y)

bgr = img[y,x]

# Convert the BGR pixel into other color formats

ycb = cv2.cvtColor(np.uint8([[bgr]]),cv2.COLOR_BGR2YCrCb)[0][0]

lab = cv2.cvtColor(np.uint8([[bgr]]),cv2.COLOR_BGR2Lab)[0][0]

hsv = cv2.cvtColor(np.uint8([[bgr]]),cv2.COLOR_BGR2HSV)[0][0]

4.2 interactive_color_segment

- inRange(src, lowerb, upperb, dst )

- bitwise_and(src1, src2, dst, mask)

Filter the image data with a mask:

C++:

// Get values from the BGR trackbar

BMin = getTrackbarPos("BMin", "SelectBGR");

GMin = getTrackbarPos("GMin", "SelectBGR");

RMin = getTrackbarPos("RMin", "SelectBGR");

BMax = getTrackbarPos("BMax", "SelectBGR");

GMax = getTrackbarPos("GMax", "SelectBGR");

RMax = getTrackbarPos("RMax", "SelectBGR");

minBGR = Scalar(BMin, GMin, RMin);

maxBGR = Scalar(BMax, GMax, RMax);

// Get values from the HSV trackbar

HMin = getTrackbarPos("HMin", "SelectHSV");

SMin = getTrackbarPos("SMin", "SelectHSV");

VMin = getTrackbarPos("VMin", "SelectHSV");

HMax = getTrackbarPos("HMax", "SelectHSV");

SMax = getTrackbarPos("SMax", "SelectHSV");

VMax = getTrackbarPos("VMax", "SelectHSV");

minHSV = Scalar(HMin, SMin, VMin);

maxHSV = Scalar(HMax, SMax, VMax);

// Get values from the LAB trackbar

LMin = getTrackbarPos("LMin", "SelectLAB");

aMin = getTrackbarPos("AMin", "SelectLAB");

bMin = getTrackbarPos("BMin", "SelectLAB");

LMax = getTrackbarPos("LMax", "SelectLAB");

aMax = getTrackbarPos("AMax", "SelectLAB");

bMax = getTrackbarPos("BMax", "SelectLAB");

minLab = Scalar(LMin, aMin, bMin);

maxLab = Scalar(LMax, aMax, bMax);

// Get values from the YCrCb trackbar

YMin = getTrackbarPos("YMin", "SelectYCB");

CrMin = getTrackbarPos("CrMin", "SelectYCB");

CbMin = getTrackbarPos("CbMin", "SelectYCB");

YMax = getTrackbarPos("YMax", "SelectYCB");

CrMax = getTrackbarPos("CrMax", "SelectYCB");

CbMax = getTrackbarPos("CbMax", "SelectYCB");

minYCrCb = Scalar(YMin, CrMin, CbMin);

maxYCrCb = Scalar(YMax, CrMax, CbMax);

// Convert the BGR image to other color spaces

original.copyTo(imageBGR);

cvtColor(original, imageHSV, COLOR_BGR2HSV);

cvtColor(original, imageYCrCb, COLOR_BGR2YCrCb);

cvtColor(original, imageLab, COLOR_BGR2Lab);

// Create the mask using the min and max values obtained from trackbar and apply bitwise and operation to get the results

inRange(imageBGR, minBGR, maxBGR, maskBGR);

resultBGR = Mat::zeros(original.rows, original.cols, CV_8UC3);

bitwise_and(original, original, resultBGR, maskBGR);

inRange(imageHSV, minHSV, maxHSV, maskHSV);

resultHSV = Mat::zeros(original.rows, original.cols, CV_8UC3);

bitwise_and(original, original, resultHSV, maskHSV);

inRange(imageYCrCb, minYCrCb, maxYCrCb, maskYCrCb);

resultYCrCb = Mat::zeros(original.rows, original.cols, CV_8UC3);

bitwise_and(original, original, resultYCrCb, maskYCrCb);

inRange(imageLab, minLab, maxLab, maskLab);

resultLab = Mat::zeros(original.rows, original.cols, CV_8UC3);

bitwise_and(original, original, resultLab, maskLab);

// Show the results

imshow("SelectBGR", resultBGR);

imshow("SelectYCB", resultYCrCb);

imshow("SelectLAB", resultLab);

imshow("SelectHSV", resultHSV);

Python:

# Get values from the BGR trackbar

BMin = cv2.getTrackbarPos('BGRBMin','SelectBGR')

GMin = cv2.getTrackbarPos('BGRGMin','SelectBGR')

RMin = cv2.getTrackbarPos('BGRRMin','SelectBGR')

BMax = cv2.getTrackbarPos('BGRBMax','SelectBGR')

GMax = cv2.getTrackbarPos('BGRGMax','SelectBGR')

RMax = cv2.getTrackbarPos('BGRRMax','SelectBGR')

minBGR = np.array([BMin, GMin, RMin])

maxBGR = np.array([BMax, GMax, RMax])

# Get values from the HSV trackbar

HMin = cv2.getTrackbarPos('HMin','SelectHSV')

SMin = cv2.getTrackbarPos('SMin','SelectHSV')

VMin = cv2.getTrackbarPos('VMin','SelectHSV')

HMax = cv2.getTrackbarPos('HMax','SelectHSV')

SMax = cv2.getTrackbarPos('SMax','SelectHSV')

VMax = cv2.getTrackbarPos('VMax','SelectHSV')

minHSV = np.array([HMin, SMin, VMin])

maxHSV = np.array([HMax, SMax, VMax])

# Get values from the LAB trackbar

LMin = cv2.getTrackbarPos('LABLMin','SelectLAB')

AMin = cv2.getTrackbarPos('LABAMin','SelectLAB')

BMin = cv2.getTrackbarPos('LABBMin','SelectLAB')

LMax = cv2.getTrackbarPos('LABLMax','SelectLAB')

AMax = cv2.getTrackbarPos('LABAMax','SelectLAB')

BMax = cv2.getTrackbarPos('LABBMax','SelectLAB')

minLAB = np.array([LMin, AMin, BMin])

maxLAB = np.array([LMax, AMax, BMax])

# Get values from the YCrCb trackbar

YMin = cv2.getTrackbarPos('YMin','SelectYCB')

CrMin = cv2.getTrackbarPos('CrMin','SelectYCB')

CbMin = cv2.getTrackbarPos('CbMin','SelectYCB')

YMax = cv2.getTrackbarPos('YMax','SelectYCB')

CrMax = cv2.getTrackbarPos('CrMax','SelectYCB')

CbMax = cv2.getTrackbarPos('CbMax','SelectYCB')

minYCB = np.array([YMin, CrMin, CbMin])

maxYCB = np.array([YMax, CrMax, CbMax])

# Convert the BGR image to other color spaces

imageBGR = np.copy(original)

imageHSV = cv2.cvtColor(original,cv2.COLOR_BGR2HSV)

imageYCB = cv2.cvtColor(original,cv2.COLOR_BGR2YCrCb)

imageLAB = cv2.cvtColor(original,cv2.COLOR_BGR2LAB)

# Create the mask using the min and max values obtained from trackbar and apply bitwise and operation to get the results

maskBGR = cv2.inRange(imageBGR,minBGR,maxBGR)

resultBGR = cv2.bitwise_and(original, original, mask = maskBGR)

maskHSV = cv2.inRange(imageHSV,minHSV,maxHSV)

resultHSV = cv2.bitwise_and(original, original, mask = maskHSV)

maskYCB = cv2.inRange(imageYCB,minYCB,maxYCB)

resultYCB = cv2.bitwise_and(original, original, mask = maskYCB)

maskLAB = cv2.inRange(imageLAB,minLAB,maxLAB)

resultLAB = cv2.bitwise_and(original, original, mask = maskLAB)

# Show the results

cv2.imshow('SelectBGR',resultBGR)

cv2.imshow('SelectYCB',resultYCB)

cv2.imshow('SelectLAB',resultLAB)

cv2.imshow('SelectHSV',resultHSV)

4.3 data_analysis

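Section 4.2 filtered the image with a range of values. The examples show that filtering in RGB performs very poorly once the brightness changes, to the point of being unusable.

In practice, we would like each color to occupy a stable interval on the color components, so that the interval can be used for filtering or discrimination.

This section uses the values on the color-space coordinates to examine how consistent (distinguishable) the images are, plotting the following channel pairs:

- RGB space: GB, RB, GR

- HSV space: SH

- YCrCb space: CbCr

- LAB space: BA

The plots show that, overall, the LAB color space has the most linear relationship between its channels and is the easiest to use for color discrimination.

See the code for details: data_analysis.py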
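
The idea of a "stable interval" can be sketched in a few lines of plain Python: collect sample pixels of one color under different lighting, then take the per-channel minimum and maximum as candidate threshold bounds. This is not the content of data_analysis.py; the helper names are hypothetical, and the sample values are invented placeholders for a three-channel space, not measurements.

```python
def channel_bounds(samples, margin=0):
    """Per-channel (lows, highs) over the samples, widened by margin, clipped to 0..255."""
    channels = list(zip(*samples))
    lows = [max(0, min(ch) - margin) for ch in channels]
    highs = [min(255, max(ch) + margin) for ch in channels]
    return lows, highs


def spread(samples):
    """Width of the interval each channel occupies across the samples."""
    lows, highs = channel_bounds(samples)
    return [hi - lo for lo, hi in zip(lows, highs)]


# Invented placeholder pixels of one colored piece in some 3-channel space:
# channel 0 varies a lot with lighting, channels 1 and 2 only a little.
samples = [(120, 180, 170), (60, 176, 166), (90, 178, 168)]

print("spread per channel:", spread(samples))
print("bounds with margin 5:", channel_bounds(samples, margin=5))
```

Channels with a small spread are the ones worth thresholding tightly. The resulting bounds have the same shape as the lower and upper arguments passed to `inRange` in section 4.2.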

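4.3.1 Yellow

4.3.2 Red

4.3.3 Blue

4.3.4 Green

4.3.5 Orange

5. Summary

The experiments and discussion above show fairly clearly that, when classifying colors in a color space, brightness (illumination) has a large effect on RGB coordinates.

In computer vision applications that involve color classification, the LAB color space is a good choice: because its a and b coordinates are decoupled from lightness, it is better suited to segmentation.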

6. References

[1] ubuntu22.04@laptop OpenCV Get Started

[2] ubuntu22.04@laptop OpenCV installation

[3] ubuntu22.04@laptop OpenCV customized installation

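7. Additional notes

Learning is a process, and these notes on "ubuntu22.04@laptop OpenCV Get Started" are part of that process. For that reason, much of the repeated code and many of the comments are not discussed in detail, or even mentioned.

Readers who want to learn more should start from "ubuntu22.04@laptop OpenCV Get Started" and work through it chapter by chapter, step by step.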