Deploying a Highly Available Kubernetes 1.27.1 Cluster with kubeasz on CentOS 7.9

1. Introduction to kubeasz

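kubeasz aims to provide a tool for quickly deploying highly available Kubernetes clusters, and also strives to be a reference for Kubernetes practice and usage. It deploys from binaries and automates the process with ansible-playbook: it offers a one-click installation script, and you can also install each component step by step by following the installation guide.

kubeasz assembles a complete cluster from individual components and offers very flexible configuration: almost any parameter of any component can be set. At the same time, it ships a proven set of default settings for new clusters, and can even automatically set up the BGP Route Reflector network mode suited to large clusters.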

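GitHub:

easzlab/kubeasz (https://github.com/easzlab/kubeasz): "Install K8S clusters with Ansible scripts, explaining how the components interact; simple and direct, and unaffected by the network environment in China"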

2. Preparing the installation environment

OS: CentOS 7.9 x64

cat /etc/hosts

------------------

172.18.10.11 Kubernetes01

172.18.10.12 Kubernetes02

172.18.10.13 Kubernetes03

172.18.10.14 Kubernetes04

172.18.10.15 Kubernetes05

-----------------

Kubernetes01 serves as the master node, and Kubernetes02 and Kubernetes03 are the Kubernetes worker nodes.

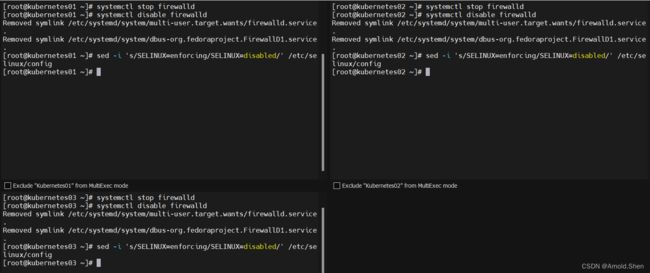
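The same /etc/hosts entries are needed on every node. A minimal bash sketch that prints them, assuming the addresses are consecutive within one /24 and the names follow the KubernetesNN pattern used above; gen_hosts is a hypothetical helper, not part of kubeasz:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts lines for nodes with consecutive IPs.
# Usage: gen_hosts PREFIX FIRST_LAST_OCTET COUNT
gen_hosts() {
  local prefix=$1 first=$2 count=$3 i
  for ((i = 0; i < count; i++)); do
    # Names follow this article's scheme: Kubernetes01, Kubernetes02, ...
    printf '%s.%d Kubernetes%02d\n' "$prefix" "$((first + i))" "$((i + 1))"
  done
}
```

For example, gen_hosts 172.18.10 11 5 | tee -a /etc/hosts appends the five entries listed above on the current node.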

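On every node, disable SELinux and firewalld and flush the iptables rules:

systemctl stop firewalld

systemctl disable firewalld

setenforce 0    # switch SELinux to permissive now; the config change below takes effect after a reboot

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

iptables -F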

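Set up passwordless (key-based) SSH login between the nodes; see the CSDN post by Arnold.Shen, "Configuring passwordless SSH login on CentOS 7.9 (without merging authorized_keys)".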

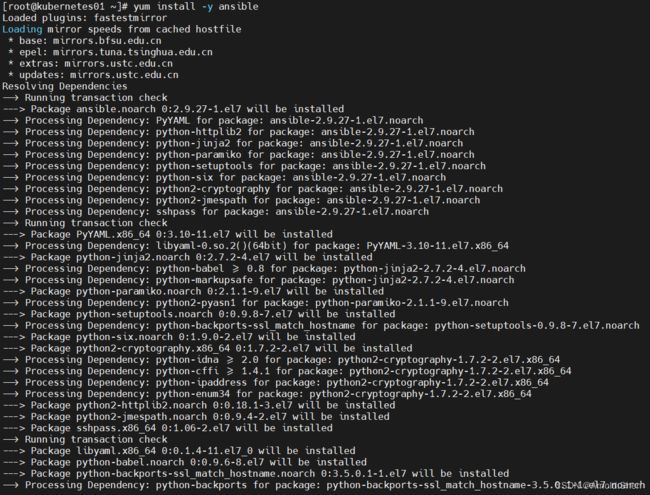
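kubeasz drives every node over SSH from the deploy node, so that node needs key-based root login to all nodes, itself included. A minimal bash sketch using the standard ssh-keygen and ssh-copy-id tools; the NODE_IPS list and the setup_ssh_keys name are assumptions for illustration:

```shell
#!/usr/bin/env bash
# Assumed node list, taken from this article's /etc/hosts; adjust as needed.
NODE_IPS=${NODE_IPS:-"172.18.10.11 172.18.10.12 172.18.10.13 172.18.10.14 172.18.10.15"}

# Hypothetical helper: create a key if missing, then install it on every node.
setup_ssh_keys() {
  local key=${1:-$HOME/.ssh/id_rsa} ip
  # Generate a passphrase-less RSA key only when none exists yet.
  if [ ! -f "$key" ]; then
    ssh-keygen -t rsa -N '' -f "$key" || return 1
  fi
  for ip in $NODE_IPS; do
    # Prompts once for the node's root password, then appends the key to authorized_keys.
    ssh-copy-id -i "$key.pub" "root@$ip" || return 1
  done
}
```

Run it once on Kubernetes01; afterwards ssh root@172.18.10.12 hostname should log in without a password.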

Install Ansible on the master node:

yum -y install epel-release

yum -y install vconfig

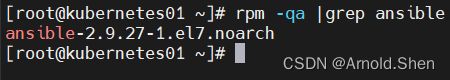

yum install -y ansible

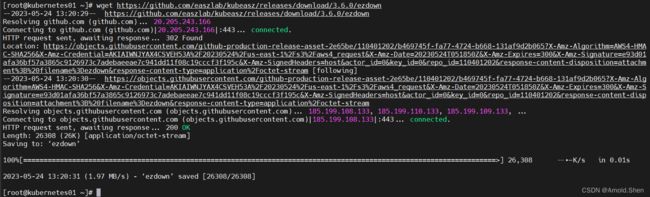

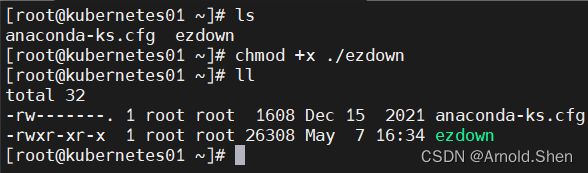

Download kubeasz (3.6.0, the latest release at the time of writing):

export release=3.6.0

Using the variable:

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

or, equivalently, with the version written out:

wget https://github.com/easzlab/kubeasz/releases/download/3.6.0/ezdown

chmod +x ./ezdown

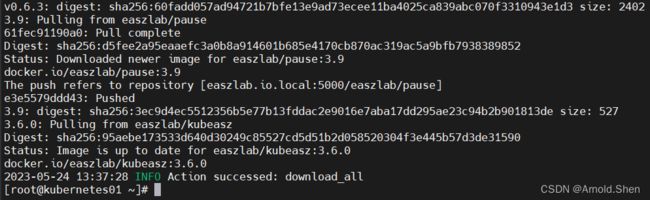

./ezdown -D    # download the images to the local machine

Check the downloaded images (the count includes the header line of the docker images output):

docker images

docker images | wc -l

-----------

23

-----------

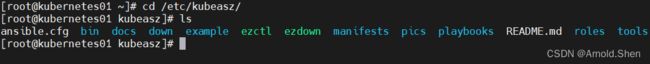

cd /etc/kubeasz/

./ezctl --help


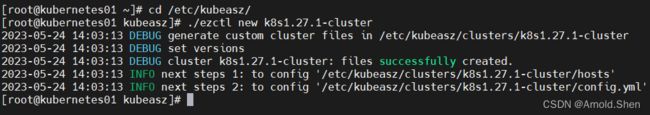

./ezctl new k8s1.27.1-cluster

cd /etc/kubeasz/clusters/k8s1.27.1-cluster/

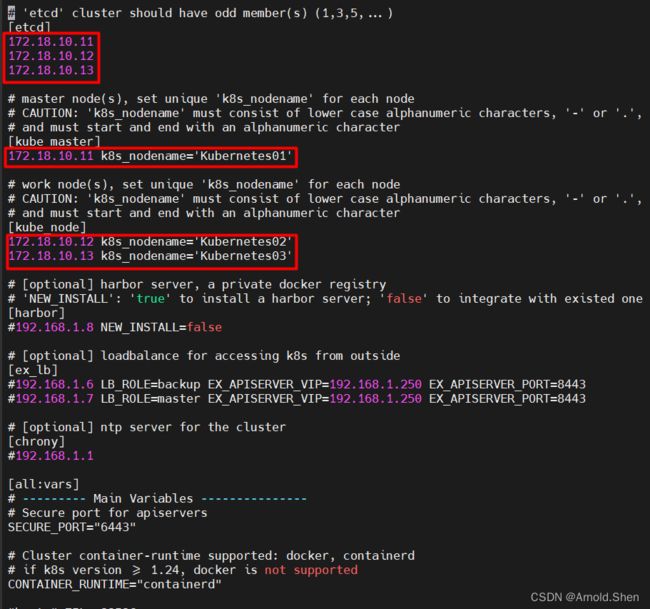

vim hosts

[etcd]

172.18.10.11

172.18.10.12

172.18.10.13

# master node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_master]

172.18.10.11 k8s_nodename='Kubernetes01'

# work node(s), set unique 'k8s_nodename' for each node

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character

[kube_node]

172.18.10.12 k8s_nodename='Kubernetes02'

172.18.10.13 k8s_nodename='Kubernetes03'

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.96.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.244.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-50000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/opt/kube/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s1.27.1-cluster"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Default 'k8s_nodename' is empty

k8s_nodename=''
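Two things in the inventory above are easy to get wrong: node names and address ranges. A bash sketch of hypothetical pre-flight helpers (not part of kubeasz): normalize_nodename mirrors the K8S_NODENAME template in config.yml ('_' becomes '-', then lowercase), so 'Kubernetes01' is registered as kubernetes01; valid_nodename applies the validation regex quoted in config.yml; cidr_overlap checks that SERVICE_CIDR, CLUSTER_CIDR and the node network (assumed here to be 172.18.10.0/24) do not overlap. It needs bash 4 or later for ${var,,}.

```shell
#!/usr/bin/env bash
# Mirror the K8S_NODENAME template: replace '_' with '-', then lowercase.
normalize_nodename() {
  local name=${1//_/-}
  printf '%s\n' "${name,,}"
}

# Validate with the regex quoted in config.yml (anchored to the whole name).
valid_nodename() {
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
  [[ $1 =~ $re ]]
}

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if two CIDRs overlap: compare both networks under the shorter prefix.
cidr_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$n1") & mask ) == ( $(ip_to_int "$n2") & mask ) ))
}
```

For the values used here, cidr_overlap 10.96.0.0/16 172.18.10.0/24 && echo overlap prints nothing, and neither does the same check for 10.244.0.0/16.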

vim config.yml

############################

# prepare

############################

# Optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# Optionally apply OS security hardening: github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# force to recreate CA and other certs, not suggested to set 'true'

CHANGE_CA: false

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.27.1"

# set unique 'k8s_nodename' for each node, if not set(default:'') ip add will be used

# CAUTION: 'k8s_nodename' must consist of lower case alphanumeric characters, '-' or '.',

# and must start and end with an alphanumeric character (e.g. 'example.com'),

# regex used for validation is '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'

K8S_NODENAME: "{%- if k8s_nodename != '' -%} \

{{ k8s_nodename|replace('_', '-')|lower }} \

{%- else -%} \

{{ inventory_hostname }} \

{%- endif -%}"

############################

# role:etcd

############################

# Putting the WAL in a separate directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] Enable the container registry mirror

ENABLE_MIRROR_REGISTRY: true

# [containerd] Sandbox (pause) container image

SANDBOX_IMAGE: "easzlab.io.local:5000/easzlab/pause:3.9"

# [containerd] Persistent storage directory for containers

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] Container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] Enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] Trusted HTTP (insecure) registries

INSECURE_REG:

- "http://easzlab.io.local:5000"

- "https://{{ HARBOR_REGISTRY }}"

############################

# role:kube-master

############################

# Certificate SANs for the master nodes; multiple IPs and domain names can be added (e.g. a public IP and domain)

MASTER_CERT_HOSTS:

- "10.1.1.1"

- "k8s.easzlab.io"

#- "www.test.com"

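# Prefix length of the pod subnet on each node (determines the maximum number of pod IPs each node can allocate)

# If flannel runs with --kube-subnet-mgr, it reads this setting to allocate a pod subnet to each node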

# Flannel not using SubnetLen json key · Issue #847 · flannel-io/flannel · GitHub

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node

MAX_PODS: 110

# Resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# See templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

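# Upstream Kubernetes advises against enabling system-reserved casually unless long-term monitoring has shown you the system's resource usage;

# the reservation also needs to grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values

# The system reservation assumes a 4-core/8 GB VM with a minimal set of system services; increase it on high-performance physical machines

# Also, apiserver and other components briefly use a lot of resources during installation, so reserve at least 1 GB of memory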

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel]

flannel_ver: "v0.21.4"

# ------------------------------------------- calico

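# [calico] IPIP tunnel mode options: [Always, CrossSubnet, Never]. For nodes across subnets, use Always or CrossSubnet (on public clouds Always is the least hassle; otherwise you have to change each cloud's network configuration, see the provider's documentation)

# CrossSubnet is a hybrid tunnel + BGP routing mode that can improve network performance; for nodes in the same subnet, Never is enough.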

CALICO_IPV4POOL_IPIP: "Always"

# [calico] Host IP used by calico-node; BGP peerings are established over this address, which can be set manually or auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico networking backend: bird, vxlan, none

CALICO_NETWORKING_BACKEND: "bird"

# [calico] Whether calico uses route reflectors

# Recommended for clusters with more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES: nodes acting as route reflectors; defaults to the cluster's master nodes if unset

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico] Supported calico versions: ["3.19", "3.23"]

calico_ver: "v3.24.5"

# [calico] calico major.minor version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium] Image version

cilium_ver: "1.13.2"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] Node for the OVN DB and OVN control plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] Offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

# [kube-router] Public clouds impose restrictions, so ipinip generally has to stay enabled; self-managed environments can set this to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] Toggle NetworkPolicy support

FIREWALL_ENABLE: true

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# Install coredns automatically

dns_install: "yes"

corednsVer: "1.9.3"

ENABLE_LOCAL_DNS_CACHE: true

dnsNodeCacheVer: "1.22.20"

# Local DNS cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# Install metrics-server automatically

metricsserver_install: "yes"

metricsVer: "v0.6.3"

# Install dashboard automatically

dashboard_install: "yes"

dashboardVer: "v2.7.0"

dashboardMetricsScraperVer: "v1.0.8"

# Install prometheus automatically

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "45.23.0"

# Install nfs-provisioner automatically

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# Install network-check automatically

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version (full version number)

HARBOR_VER: "v2.6.4"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_PATH: /var/data

HARBOR_TLS_PORT: 8443

HARBOR_REGISTRY: "{{ HARBOR_DOMAIN }}:{{ HARBOR_TLS_PORT }}"

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CHARTMUSEUM: true

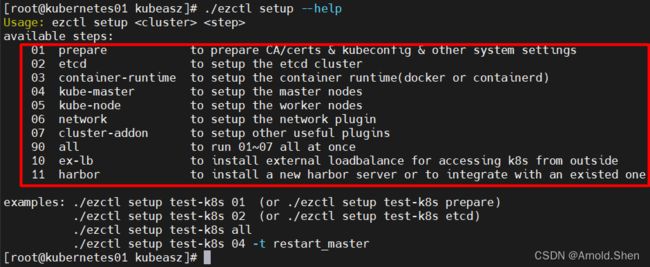
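The expiry values and pod-network sizes in config.yml can be sanity-checked with plain shell arithmetic. A small bash sketch with hypothetical helper names, counting years as 365 days: 876000h works out to 100 years and 438000h to 50 years, matching the comments, and a /16 CLUSTER_CIDR split into NODE_CIDR_LEN /24 subnets gives 256 node subnets of 256 addresses each, well above MAX_PODS (110). The per-node split applies to plugins that use the node podCIDR (e.g. flannel with --kube-subnet-mgr, as the comment notes); calico's own IPAM may allocate addresses in different block sizes.

```shell
#!/usr/bin/env bash
# Convert a duration such as "876000h" to whole 365-day years.
hours_to_years() { echo $(( ${1%h} / 24 / 365 )); }

# Number of per-node subnets in the cluster CIDR. Args: cluster prefix, NODE_CIDR_LEN.
node_subnets() { echo $(( 1 << ($2 - $1) )); }

# Addresses in one per-node subnet. Arg: NODE_CIDR_LEN.
addrs_per_node() { echo $(( 1 << (32 - $1) )); }
```

For example, hours_to_years 876000h prints 100 and node_subnets 16 24 prints 256.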

./ezctl setup --help    (shows what each step installs)
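The steps 01 to 07 listed by --help are run one at a time in this article, which makes it easy to verify each stage. To chain them instead, a minimal bash sketch that stops at the first failed step, meant to be run from /etc/kubeasz; the EZCTL and CLUSTER variables and the run_setup_steps name are assumptions. kubeasz also accepts all as the step argument to run the whole sequence in one go.

```shell
#!/usr/bin/env bash
# Assumed values; run from /etc/kubeasz.
EZCTL=${EZCTL:-./ezctl}
CLUSTER=${CLUSTER:-k8s1.27.1-cluster}

# Run setup steps 01..07 in order; stop and report at the first failure.
run_setup_steps() {
  local step
  for step in 01 02 03 04 05 06 07; do
    echo ">>> ezctl setup $CLUSTER $step"
    "$EZCTL" setup "$CLUSTER" "$step" || { echo "step $step failed" >&2; return 1; }
  done
}
```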

./ezctl setup k8s1.27.1-cluster 01 ---> initialize the system environment

./ezctl setup k8s1.27.1-cluster 02 ---> install the etcd cluster

Verify the etcd cluster

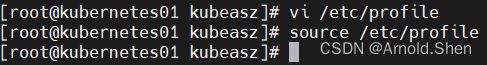

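First add /opt/kube/bin (the bin_dir from the hosts file, where kubeasz installs binaries such as etcdctl and kubectl) to PATH:

vim /etc/profile

----

PATH=$PATH:$HOME/bin:/opt/kube/bin

----

source /etc/profile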

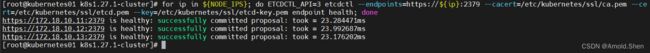
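Editing /etc/profile by hand is easy to repeat by accident when reinstalling, which stacks duplicate PATH entries. A small bash sketch of an idempotent alternative; append_once is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Append LINE to FILE only if FILE does not already contain it as a whole line.
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Usage: append_once 'PATH=$PATH:$HOME/bin:/opt/kube/bin' /etc/profile (the single quotes keep $PATH and $HOME unexpanded in the file), then source /etc/profile.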

Verify etcd:

export NODE_IPS="172.18.10.11 172.18.10.12 172.18.10.13"

for ip in ${NODE_IPS}; do ETCDCTL_API=3 etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

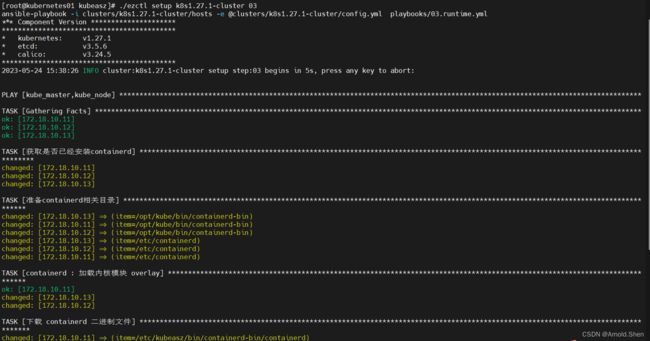
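The loop above checks each member separately. etcdctl also accepts a comma-separated --endpoints list, so one call to endpoint status --write-out=table shows every member with its version, DB size and leader flag. A bash sketch reusing the certificate paths from the loop; the function names are hypothetical:

```shell
#!/usr/bin/env bash
NODE_IPS=${NODE_IPS:-"172.18.10.11 172.18.10.12 172.18.10.13"}

# Build "https://ip1:2379,https://ip2:2379,..." from a space-separated IP list.
etcd_endpoints() {
  local ip out=
  for ip in $1; do
    out+="${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# Show the status of all members in one table.
etcd_status() {
  ETCDCTL_API=3 etcdctl --endpoints="$(etcd_endpoints "$NODE_IPS")" \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/ssl/etcd.pem \
    --key=/etc/kubernetes/ssl/etcd-key.pem \
    endpoint status --write-out=table
}
```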

./ezctl setup k8s1.27.1-cluster 03 ---> install the container runtime

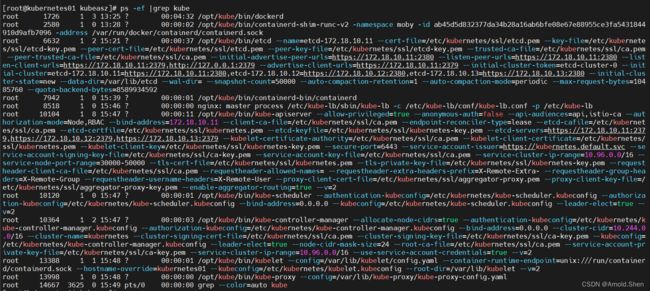

ps -ef |grep container


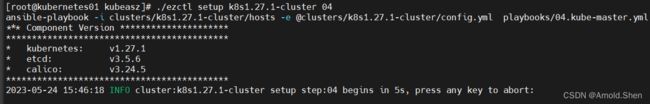

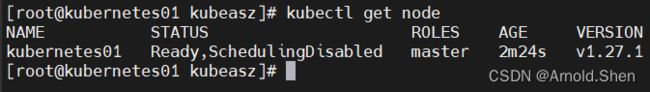

./ezctl setup k8s1.27.1-cluster 04 ---> install the master

ps -ef |grep kube

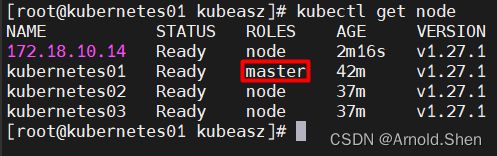
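ps -ef | grep kube shows the processes but not whether their systemd units are healthy. A bash sketch that asks systemd directly; the unit names are an assumption based on the services kubeasz installs on masters and nodes, so adjust them to each node's role:

```shell
#!/usr/bin/env bash
# Assumed unit names; adjust per node role.
MASTER_UNITS="kube-apiserver kube-controller-manager kube-scheduler"
NODE_UNITS="containerd kubelet kube-proxy"

# Print OK/INACTIVE for each unit; return 1 if any unit is not active.
check_units() {
  local unit failed=0
  for unit in "$@"; do
    if systemctl is-active --quiet "$unit"; then
      echo "OK $unit"
    else
      echo "INACTIVE $unit"
      failed=1
    fi
  done
  return "$failed"
}
```

On Kubernetes01, check_units $MASTER_UNITS $NODE_UNITS exits nonzero if at least one unit is not active.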

kubectl get node

./ezctl setup k8s1.27.1-cluster 05 ---> install the nodes

kubectl get node

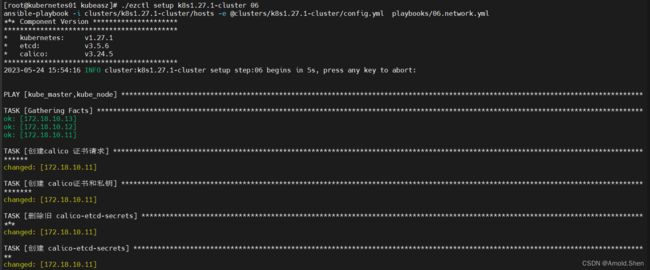
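Nodes can stay NotReady until the network plugin is in place. A bash sketch that lists nodes whose status is not Ready and polls until all are; a cordoned node (Ready,SchedulingDisabled) counts as ready, and an empty node list or a failing kubectl counts as not ready. kubectl wait --for=condition=Ready node --all --timeout=300s is a built-in alternative. The function names and POLL_INTERVAL are hypothetical:

```shell
#!/usr/bin/env bash
# Print "name status" for every node whose status does not start with Ready.
not_ready_nodes() {
  local out
  out=$(kubectl get nodes --no-headers) && [ -n "$out" ] || return 1
  awk '{split($2, s, ","); if (s[1] != "Ready") print $1, $2}' <<<"$out"
}

# Poll up to TRIES times (default 30), POLL_INTERVAL seconds apart (default 10).
wait_nodes_ready() {
  local tries=${1:-30} i bad
  for ((i = 0; i < tries; i++)); do
    if bad=$(not_ready_nodes) && [ -z "$bad" ]; then
      return 0
    fi
    sleep "${POLL_INTERVAL:-10}"
  done
  printf 'not ready:\n%s\n' "$bad" >&2
  return 1
}
```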

./ezctl setup k8s1.27.1-cluster 06 ---> install the network plugin

kubectl get pod -n kube-system

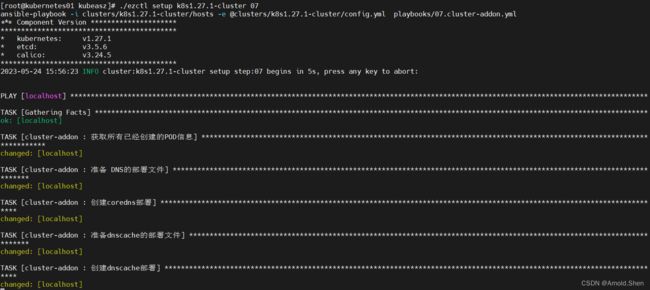

./ezctl setup k8s1.27.1-cluster 07 ---> install the other cluster add-ons

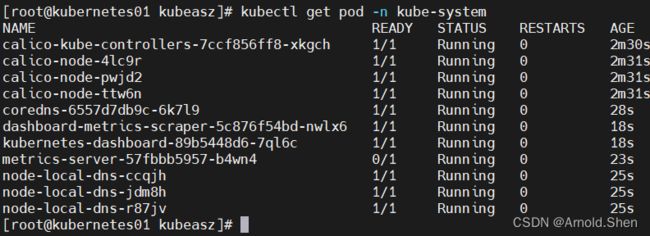

kubectl get pod -n kube-system

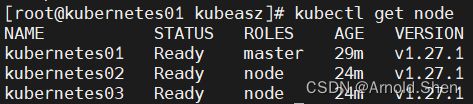

kubectl get node

kubectl get pod -n kube-system -o wide

kubectl top node

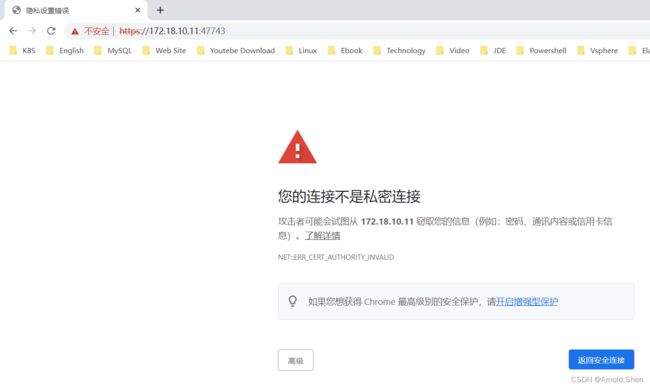

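Find the dashboard Service and its port:

kubectl get svc -n kube-system

Show the admin-user token:

kubectl describe secrets -n kube-system $(kubectl get secret -n kube-system | awk '/admin-user/{print $1}')

Copy the admin-user token (make sure no spaces or line breaks are included):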

eyJhbGciOiJSUzI1NiIsImtpZCI6IkxtLXEzbEdJXzlKXzE0UnN3RF9fTHBtdHpMZ2o1eHFTeDJrb0Y3SW9JWVEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwOTkwNGJhMy04MzVkLTQ4MTctYTZiZC02NDQ2MWMwZDk3ZTIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.cU54l9vEo3N-r1cFc5ux2A_OU5Te0-PZzR9lN-_TYK4QnRJGwJOSMzGauVsCA7p2kXux4ZLepS1W253TJ9WU7zFOZfP9b4LvhJU7M7dv6T_9qZ93kK14ga7jHY38M2d-KMQycaU4_f8nF3oZFY4mX3sdgtHCPu1AojtWyLF8mMA367NcHucq3H9FtZlmokf5rvJJgyH3nqG4G5Uihnp6AkVlna7r_Ybh7S5AZIXEtdoIPlNwLXZL0LOuBwHHZv3ZPNXKry7NIPXEZPl3W10H6W4qwKLm4SCB_bRLnylnyST03yOsC95FqsRTDjjCFNI6CN9Acu6sL3HeUygsAZzjdw

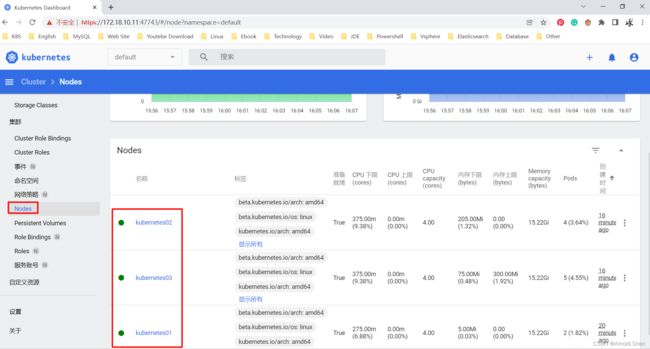
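The token is a JWT whose middle segment is base64url-encoded JSON, so it can be inspected locally to confirm which service account it belongs to before pasting it into the dashboard. A bash sketch assuming GNU base64; jwt_payload is a hypothetical helper. On Kubernetes 1.24 and later, kubectl create token admin-user -n kube-system also issues a short-lived token for the same account. Treat any token as a password.

```shell
#!/usr/bin/env bash
# Decode the payload (second dot-separated segment) of a JWT.
jwt_payload() {
  local p
  # base64url -> base64: '_' becomes '/', '-' becomes '+'.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that base64url strips.
  case $(( ${#p} % 4 )) in
    2) p+="==" ;;
    3) p+="=" ;;
  esac
  printf '%s' "$p" | base64 -d
}
```

For the token above, jwt_payload "<token>" shows "sub":"system:serviceaccount:kube-system:admin-user".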

Click Nodes to see node information,

and Pods to see pod information.

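Remove the unschedulable status from the master node:

kubectl uncordon kubernetes01    (node names are registered in lower case, per K8S_NODENAME in config.yml)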

kubectl get node

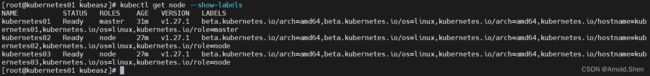

kubectl get node --show-labels

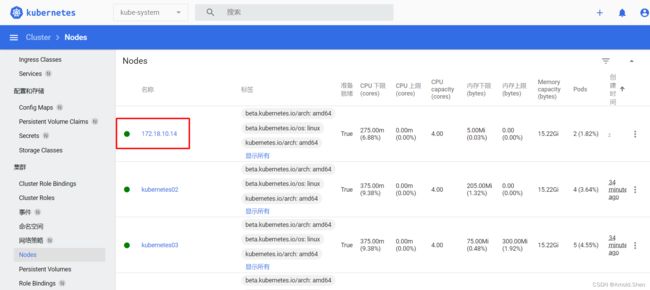

3. Adding nodes

Add Kubernetes04 as a worker node:

./ezctl add-node k8s1.27.1-cluster 172.18.10.14

The node was added successfully:

kubectl get node

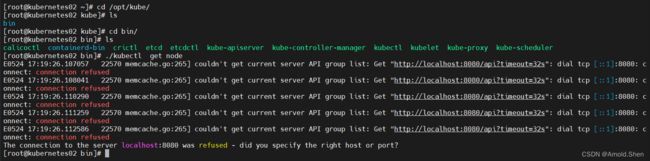

Add Kubernetes02 as a master:

./ezctl add-master k8s1.27.1-cluster 172.18.10.12

On Kubernetes02:

cd /opt/kube/bin

./kubectl get node    (this fails: Kubernetes02 has no kubeconfig yet)

On Kubernetes01, copy the kubeconfig and /etc/profile to Kubernetes02:

cd ~

scp -r .kube/ root@Kubernetes02:/root/

scp /etc/profile root@Kubernetes02:/etc/

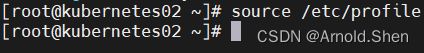

On Kubernetes02:

source /etc/profile

kubectl get node

4. Load balancing and high availability

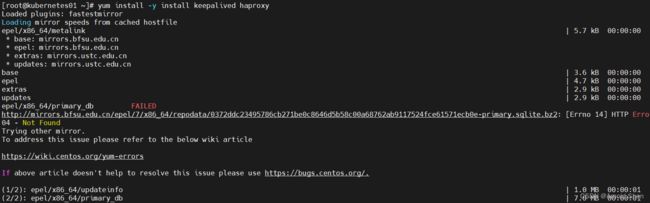

4.1 Install keepalived and haproxy

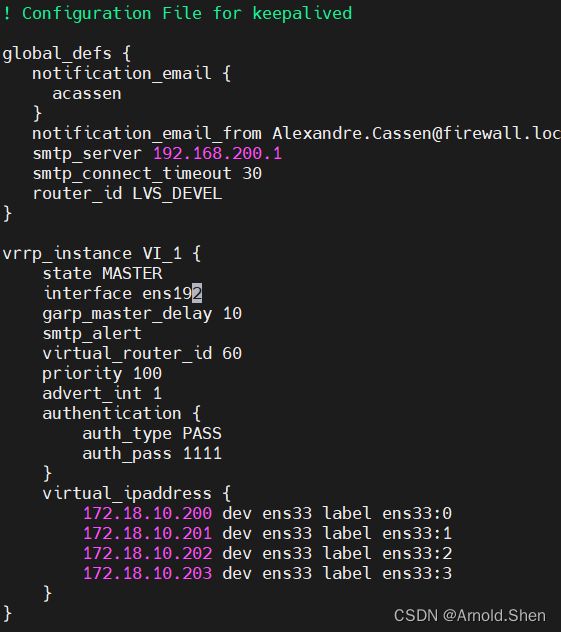

4.1.1 Configure keepalived

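Environment setup (install on all master nodes). In this setup Kubernetes02 is the keepalived MASTER and Kubernetes01 the BACKUP; adjust the parameters to your own environment.

- First install the load balancer: haproxy + keepalived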

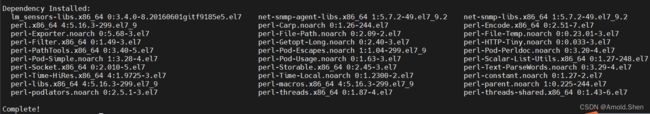

yum install -y keepalived haproxy

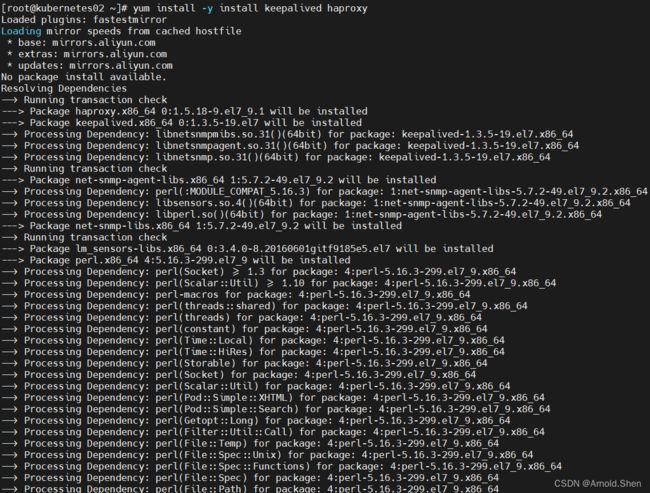

cd /etc/keepalived/

cp -p keepalived.conf keepalived.conf.bak

echo " " > keepalived.conf

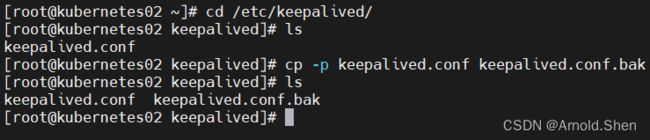

Before editing, check the network interface name (for example with: ip addr); this setup uses ens192.

vi keepalived.conf

---

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER

interface ens192

garp_master_delay 10

smtp_alert

virtual_router_id 60

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.18.10.200 dev ens192 label ens192:0

172.18.10.201 dev ens192 label ens192:1

172.18.10.202 dev ens192 label ens192:2

172.18.10.203 dev ens192 label ens192:3

}

}

----

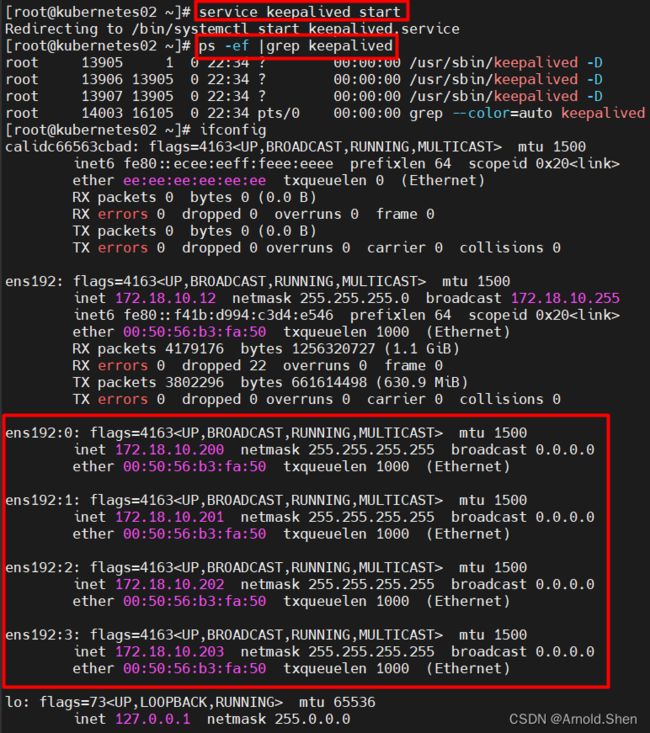

service keepalived start

systemctl enable keepalived

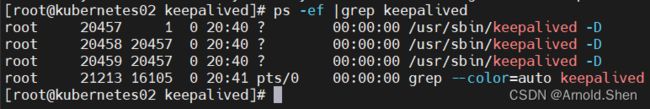

ps -ef |grep keepalived

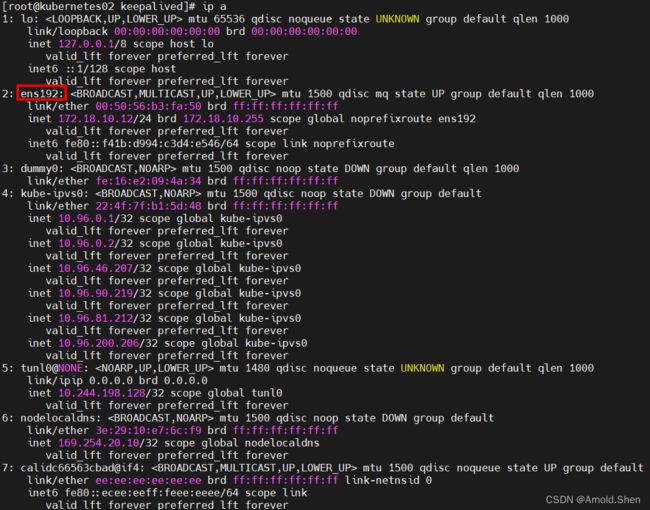

scp keepalived.conf root@Kubernetes01:/etc/keepalived/

On Kubernetes01:

cd /etc/keepalived

ls

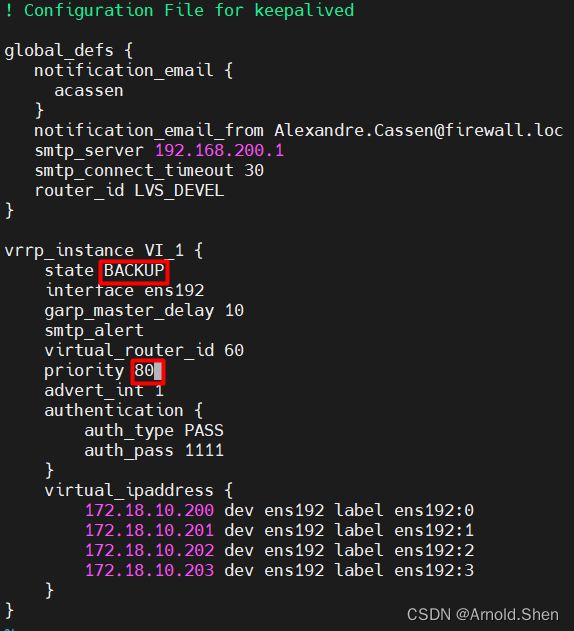

vi keepalived.conf

Change the state to BACKUP and the priority to 80:

state BACKUP

priority 80

service keepalived start

chkconfig keepalived on

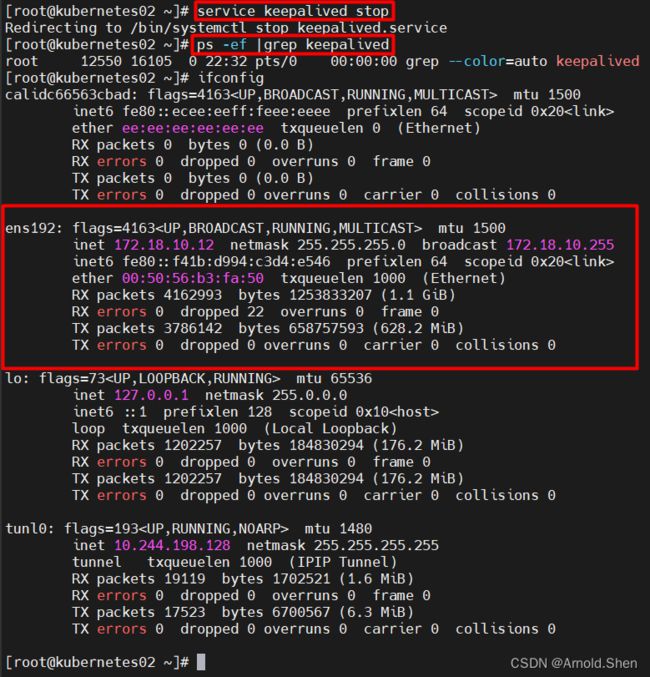

Test keepalived failover

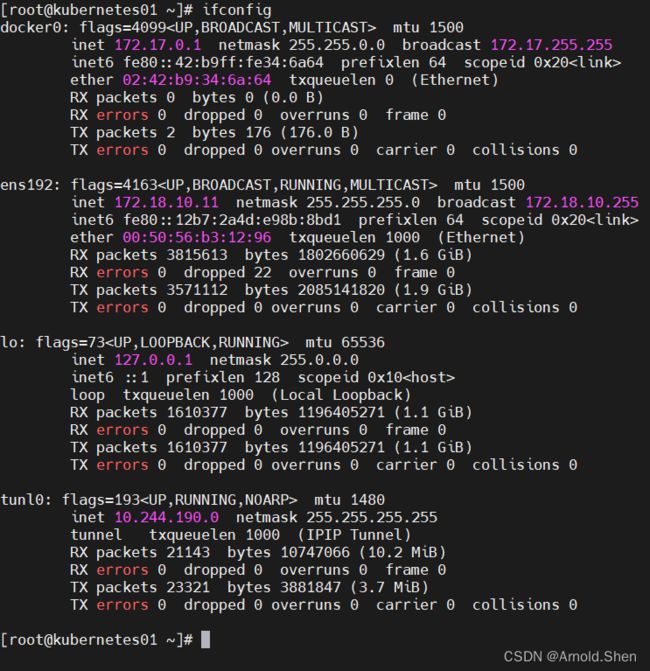

Check the interface information on Kubernetes01.

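Stop keepalived on Kubernetes02 (systemctl stop keepalived); the VIP floats over to Kubernetes01.

The VIP has moved to Kubernetes01.

Start keepalived on Kubernetes02 again (systemctl start keepalived); because Kubernetes02 has the higher priority, the VIP automatically moves back.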

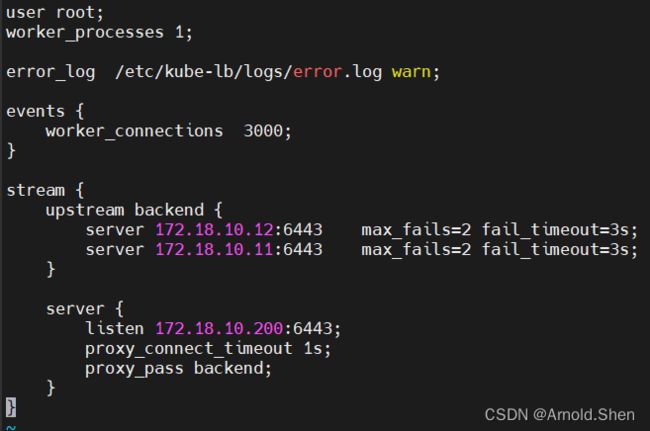
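During the failover test it helps to check which node holds the VIP without reading the full ip addr output. A bash sketch using the VIP and interface from the keepalived.conf above; has_vip is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Succeed if IPv4 address VIP is configured on interface DEV.
has_vip() {
  local vip=${1:-172.18.10.200} dev=${2:-ens192}
  # With -o each address is one line; field 4 is "address/prefix".
  ip -4 -o addr show dev "$dev" |
    awk -v vip="$vip" '{split($4, a, "/"); if (a[1] == vip) found = 1} END {exit !found}'
}
```

Run has_vip && echo holder on both masters before and after stopping keepalived on Kubernetes02; exactly one of them should print holder each time.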

cd /etc/kube-lb/

cd conf

vi kube-lb.conf

Do this on both machines.

service keepalived restart

4.1.2 Configure haproxy

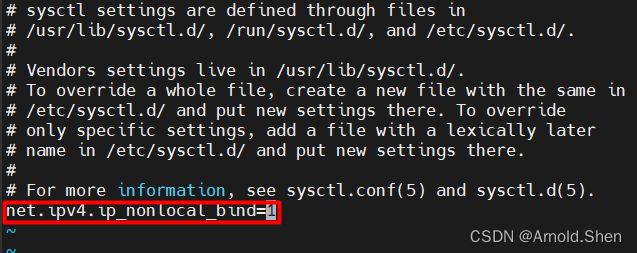

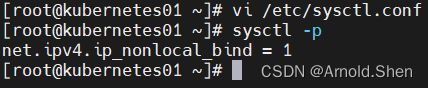

Edit /etc/sysctl.conf so that haproxy can bind to the VIP even on the node that does not currently hold it:

vim /etc/sysctl.conf

---

net.ipv4.ip_nonlocal_bind=1

---

sysctl -p

scp /etc/sysctl.conf root@Kubernetes02:/etc/    (then run sysctl -p on Kubernetes02 as well)

cd /etc/haproxy/

mv haproxy.cfg haproxy.cfg.bak

cat > /etc/haproxy/haproxy.cfg << EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 172.18.10.200:6443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-masters

backend k8s-masters

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server Kubernetes01 172.18.10.11:6443 check

server Kubernetes02 172.18.10.12:6443 check

EOF

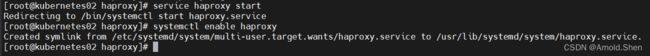

service haproxy start

systemctl enable haproxy

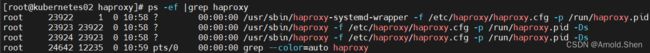

ps -ef |grep haproxy

scp haproxy.cfg root@Kubernetes01:/etc/haproxy/

On Kubernetes01:

service haproxy start

systemctl enable haproxy

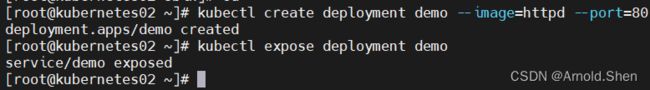
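With haproxy running on both masters, the frontend on the VIP should accept connections whichever node holds it. A bash sketch of a TCP reachability check using bash's /dev/tcp redirection and coreutils timeout; port_open is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Succeed if HOST:PORT accepts a TCP connection within TIMEOUT seconds (default 3).
port_open() {
  local host=$1 port=$2
  timeout "${3:-3}" bash -c ": < /dev/tcp/$host/$port" 2>/dev/null
}
```

For example, port_open 172.18.10.200 6443 && echo reachable; the monitor frontend can be probed with curl -fsS http://172.18.10.200:33305/monitor, which haproxy answers on the monitor-uri configured above.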

5. Testing with pods

kubectl create deployment demo --image=httpd --port=80

kubectl expose deployment demo

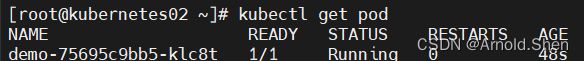

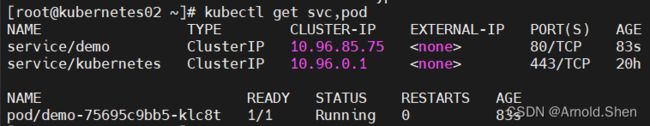

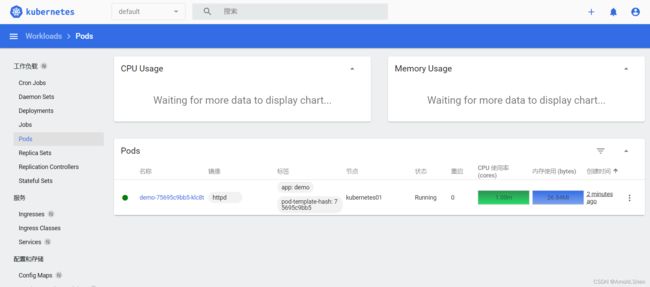

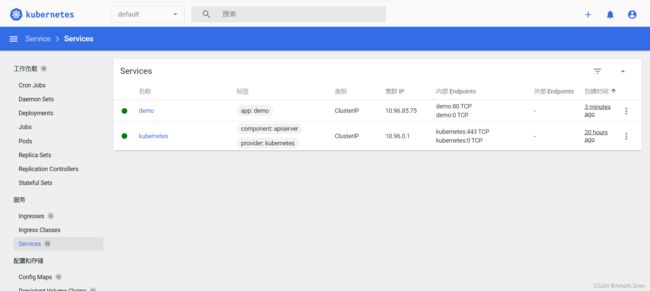

kubectl get pod

kubectl get svc,pod

kubectl get pod -n kube-system

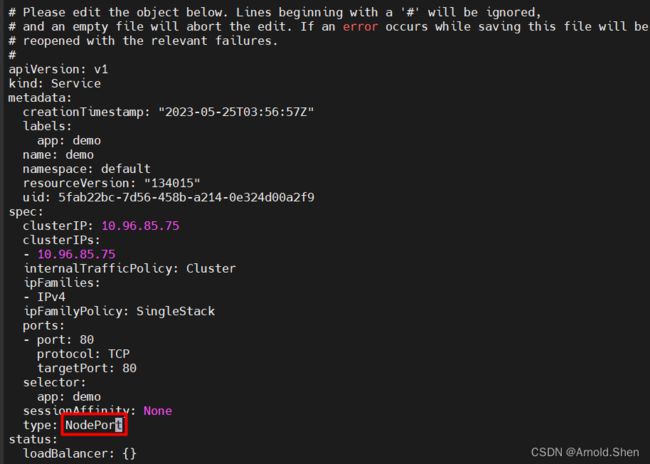

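kubectl edit svc demo    (change spec.type from ClusterIP to NodePort)

Then open the assigned NodePort in a browser; here it was:

http://172.18.10.11:49152/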

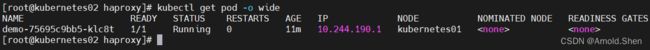
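kubectl edit opens an interactive editor. For scripting, the same change can be made with kubectl patch and the assigned port read back with a JSONPath query. A bash sketch using the demo Service and Kubernetes01's address from this article; expose_nodeport is a hypothetical helper, and the port it prints comes from NODE_PORT_RANGE (30000-50000) in the hosts file:

```shell
#!/usr/bin/env bash
# Switch a Service to NodePort and print the URL to open.
expose_nodeport() {
  local svc=${1:-demo} node_ip=${2:-172.18.10.11} port
  kubectl patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' >/dev/null || return 1
  port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "http://${node_ip}:${port}/"
}
```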

kubectl get pod -o wide

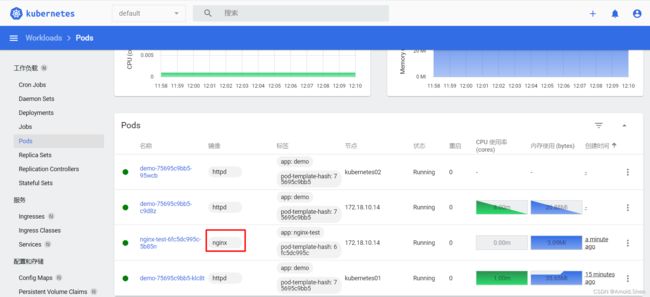

kubectl create deployment nginx-test --image=nginx --port=80

kubectl expose deployment nginx-test
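kubectl expose creates nginx-test as a ClusterIP Service, so a quick check from a cluster node is to wait for the rollout and then request the ClusterIP (with kube-proxy in ipvs mode, ClusterIPs are reachable from the nodes). A bash sketch; check_clusterip is hypothetical and assumes the Deployment and Service share a name, as they do after kubectl expose deployment:

```shell
#!/usr/bin/env bash
# Wait for the Deployment, then fetch "/" on the Service's ClusterIP (port 80).
check_clusterip() {
  local svc=${1:-nginx-test} ip
  kubectl rollout status "deployment/$svc" --timeout=120s >/dev/null || return 1
  ip=$(kubectl get svc "$svc" -o jsonpath='{.spec.clusterIP}') || return 1
  curl -fsS -o /dev/null "http://${ip}/"
}
```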