Flutter Learning, Day 13: Baidu Speech-to-Text in Flutter 2.0 with Android/Flutter Hybrid Development (2021 Edition, Step by Step)

Implementing Baidu speech-to-text in Flutter

- 1. Create a new Flutter project for the View layer.
- 2. Create an Android module.

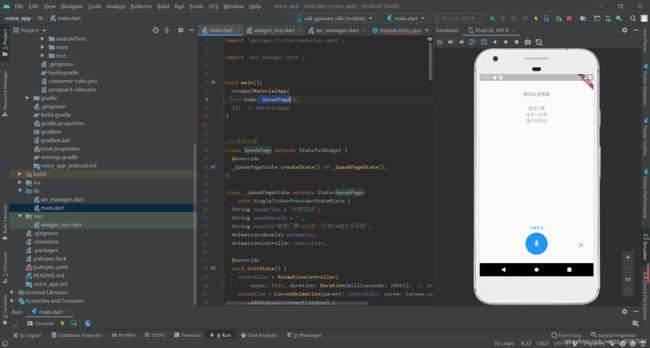

- 2.1 Open the android folder of the Flutter project.
- 2.2 Create a new module.
- 3. Configure the Baidu speech-to-text SDK.
- 3.1 Download the SDK.
- 3.2 Configure the SDK in asr_plugin.

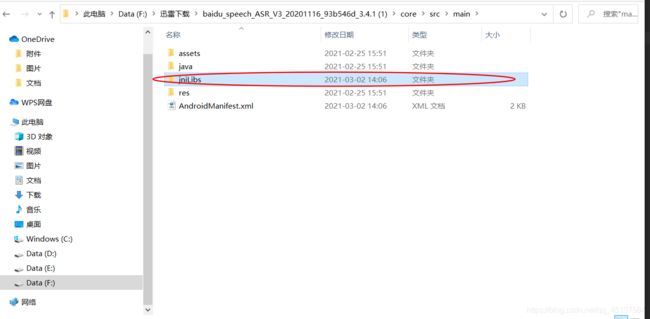

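- 3.2.1 Find the core folder.
- 3.2.2 Copy the files shown in the figure into asr_plugin's lib folder.
- 3.2.3 Paste jniLibs into asr_plugin's src/main folder.
- 3.2.4 Configure permissions in AndroidManifest.
- 3.2.5 Load the asr_plugin library in build.gradle of the Flutter project's android folder.
- 4. Build the bridge between Flutter and Android.
- 4.1 Configure the MethodChannel on the Flutter side.
- 4.2 Configure the MethodChannel on the Android side.
- 4.3 Configure packaging in the Flutter project's android folder.
- 5. Register the plugin to enable communication.
- 6. Finally, write a Dart page to show the result.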

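Although the goal is Baidu speech-to-text in Flutter, the recognition itself runs on the Android side; Flutter and Android then exchange data (as illustrated below) to provide the feature to Flutter. Baidu speech recognition also works on iOS, but my computer runs Windows and cannot build Flutter apps for iOS, so this tutorial covers only the Android implementation.

As always, let's look at the result first:

Note that Baidu usually appends a full stop (。) to the end of recognized Chinese text.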

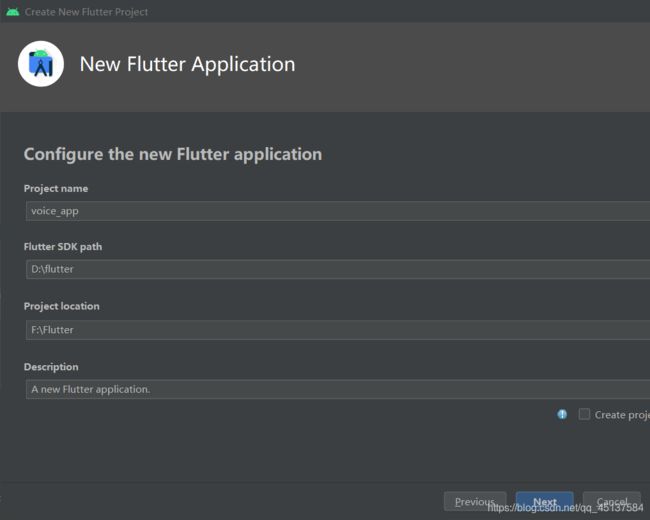

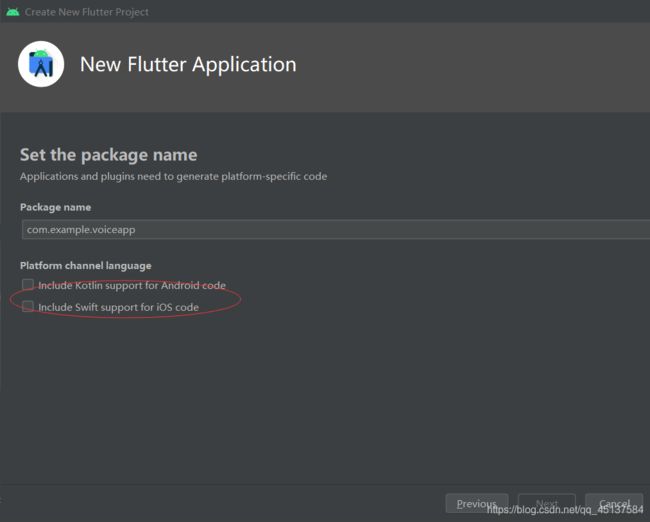
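If you don't want that trailing full stop in your UI, you can strip it before the text is returned to Flutter. A minimal sketch, assuming the only unwanted trailing characters are the full-width full stop (。), ASCII periods, or whitespace; `RecognitionText` and `stripTrailingPeriod` are hypothetical names, not part of the Baidu SDK:

```java
/**
 * Hypothetical helper (not part of the Baidu SDK) that removes the
 * sentence-final punctuation the recognizer tends to append.
 */
final class RecognitionText {
    private RecognitionText() {}

    /** Strips trailing full-width full stops (U+3002), ASCII periods and whitespace. */
    static String stripTrailingPeriod(String text) {
        if (text == null) {
            return "";
        }
        int end = text.length();
        // Walk backwards while the last character is unwanted punctuation or whitespace.
        while (end > 0) {
            char c = text.charAt(end - 1);
            if (c == '\u3002' || c == '.' || Character.isWhitespace(c)) {
                end--;
            } else {
                break;
            }
        }
        return text.substring(0, end);
    }
}
```

In the plugin it could be applied in onAsrFinalResult, for example `resultStateful.success(RecognitionText.stripTrailingPeriod(results[0]))`; doing it on the Android side leaves the Dart code unchanged.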

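1. Create a new Flutter project for the View layer

2. Create an Android module

2.1 Open the android folder of the Flutter project

Locate the android folder inside your Flutter project, find MainActivity, and open the folder in Android Studio. Ignore any red error highlighting for now; the first build may take a while.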

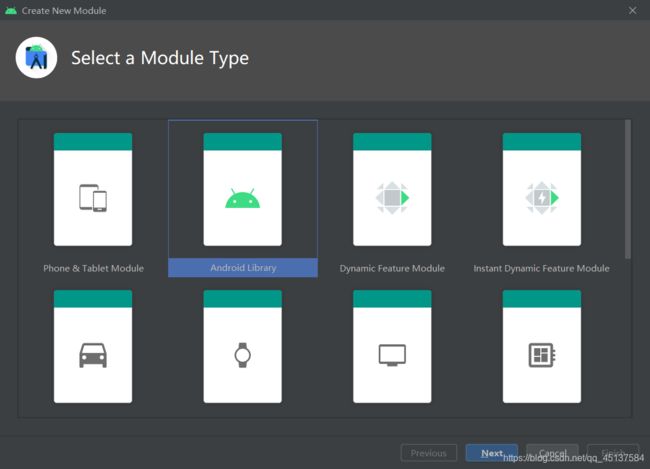

2.2 Create a new module

Make sure to select Android Library.

Name it whatever you like; I named mine asr_plugin.

The module has been created.

3. Configure the Baidu speech-to-text SDK

3.1 Download the SDK

Baidu SDK download page: https://ai.baidu.com/sdk

3.2 Configure the SDK in asr_plugin

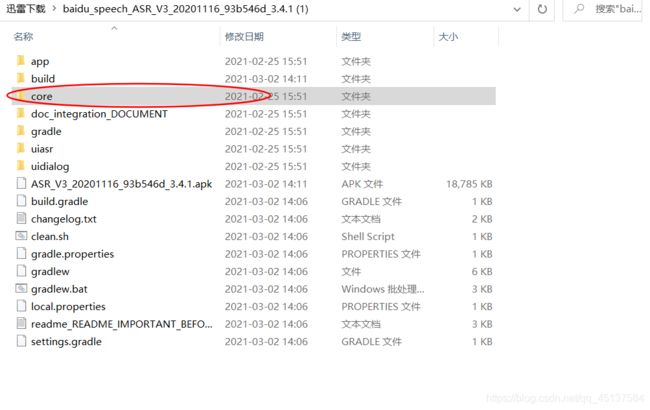

3.2.1 Find the core folder

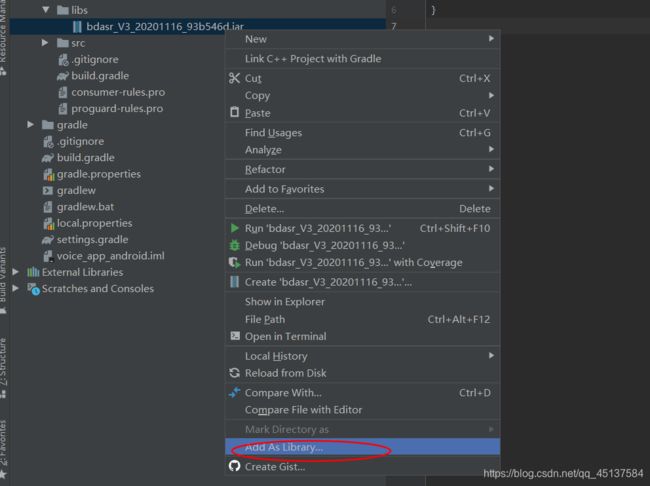

3.2.2 Copy the files shown in the figure into asr_plugin's lib folder

3.2.3 Paste jniLibs into asr_plugin's src/main folder

3.2.4 Configure permissions in AndroidManifest

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.asr_plugin">

    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

    <application>
        <!-- Replace these values with the credentials of your own Baidu speech app -->
        <meta-data
            android:name="com.baidu.speech.APP_ID"
            android:value="15519387" />
        <meta-data
            android:name="com.baidu.speech.API_KEY"
            android:value="Ywyh8ErHPQXBSapdQZqVBtdl" />
        <meta-data
            android:name="com.baidu.speech.SECRET_KEY"
            android:value="DFPpDWnOGrNGzxfX07D5GFLryh3d3Nne" />
    </application>
</manifest>
```

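I recommend replacing APP_ID, API_KEY and SECRET_KEY with those of a Baidu speech-recognition app you create yourself. Your project's package name differs from mine, and a package-name mismatch can make recognition fail, so when you create the app, set its package name to your Flutter app's package name. If an error occurs, look up its error code here: https://ai.baidu.com/ai-doc/SPEECH/qk38lxh1q.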

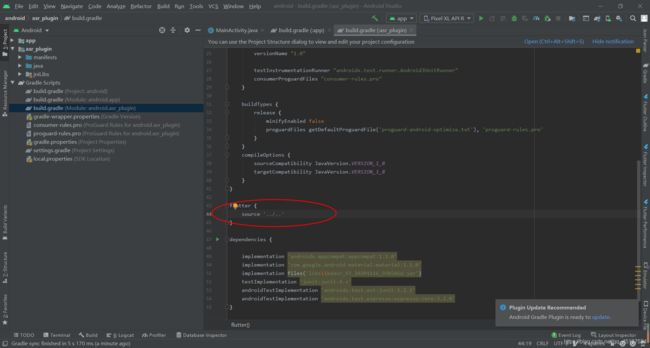

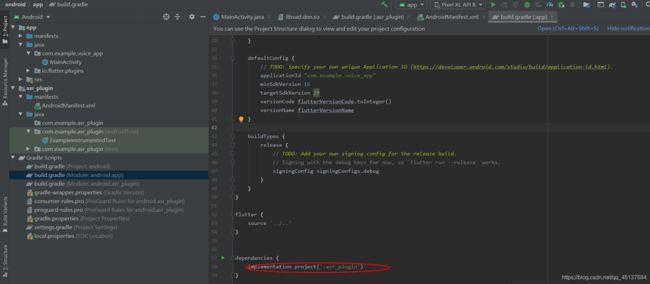
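Only some of these permissions need a runtime request on Android 6.0 (API 23) and later: RECORD_AUDIO and WRITE_EXTERNAL_STORAGE are dangerous permissions, while INTERNET and ACCESS_NETWORK_STATE are normal permissions granted at install time. The AsrPlugin class later checks each permission and requests only the missing ones. Note that its initPermission() also asks for READ_PHONE_STATE, which this manifest does not declare; Android denies runtime requests for permissions missing from the manifest, so add a matching `<uses-permission>` if your SDK version needs it. The plain-Java sketch below models only the filtering step; `PermissionPlanner` is a hypothetical name, and its `isGranted` predicate stands in for `ContextCompat.checkSelfPermission(...) == PackageManager.PERMISSION_GRANTED`:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical model of the "which permissions are still missing?" step. */
final class PermissionPlanner {
    private PermissionPlanner() {}

    /**
     * Returns the permissions that still need a runtime request, in the given order.
     * isGranted is a stand-in for the Android permission check (an assumption of this sketch).
     */
    static List<String> missingPermissions(String[] required, Predicate<String> isGranted) {
        List<String> toRequest = new ArrayList<>();
        for (String perm : required) {
            if (!isGranted.test(perm)) {
                toRequest.add(perm);
            }
        }
        return toRequest;
    }
}
```

If the returned list is empty there is nothing to request, which mirrors the `isEmpty()` check before `ActivityCompat.requestPermissions` in the plugin.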

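3.2.5 Load the asr_plugin library in build.gradle of the Flutter project's android folder

This step adds asr_plugin to the Flutter app so that the two sides can exchange data later.

One more step is needed: remember to add it in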

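4. Build the bridge between Flutter and Android

4.1 Configure the MethodChannel on the Flutter side

Create an asr_manager.dart file that communicates through a MethodChannel. Flutter provides three kinds of platform channels (BasicMessageChannel, MethodChannel and EventChannel); this plugin uses method calls, i.e. MethodChannel.

It exposes three methods: start recording, stop recording, and cancel recording.

The code: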

```dart
import 'package:flutter/services.dart';

class AsrManager {
  // The channel name must match the one used on the Android side.
  static const MethodChannel _channel = MethodChannel('asr_plugin');

  /// Start recording; completes with the recognized text.
  static Future<String?> start({Map<String, dynamic>? params}) {
    return _channel.invokeMethod<String>('start', params ?? <String, dynamic>{});
  }

  /// Stop recording.
  static Future<String?> stop() {
    return _channel.invokeMethod<String>('stop');
  }

  /// Cancel recording.
  static Future<String?> cancel() {
    return _channel.invokeMethod<String>('cancel');
  }
}
```

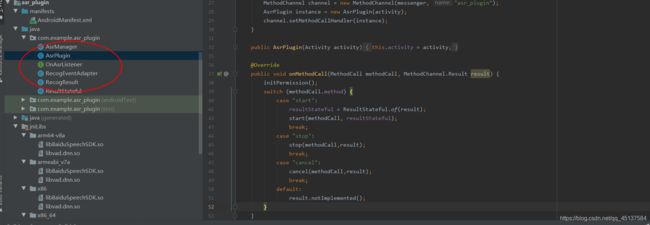
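On the Dart side, `invokeMethod('start' | 'stop' | 'cancel')` sends the method name and arguments over the channel named `asr_plugin`, and the Android handler registered under the same name dispatches on the method name; if no handler is registered, Flutter reports a MissingPluginException on the Dart side. The plain-Java model below illustrates that routing only. `ChannelModel` is hypothetical and is not the Flutter API; it replies synchronously with placeholder strings, whereas the real `start` reply arrives later from the recognizer callbacks.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiFunction;

/** Hypothetical, synchronous model of method-call routing over named channels. */
final class ChannelModel {
    /** Sentinel standing in for result.notImplemented(). */
    static final Object NOT_IMPLEMENTED = new Object();

    private final Map<String, BiFunction<String, Object, Object>> handlers = new HashMap<>();

    /** Registers one handler per channel name (like setMethodCallHandler). */
    void setMethodCallHandler(String channel, BiFunction<String, Object, Object> handler) {
        handlers.put(channel, handler);
    }

    /** Routes a call to the handler registered under the same channel name. */
    Object invokeMethod(String channel, String method, Object arguments) {
        BiFunction<String, Object, Object> handler = handlers.get(channel);
        if (handler == null) {
            // Flutter would surface this as a MissingPluginException in Dart.
            throw new IllegalStateException("No handler registered for channel " + channel);
        }
        return handler.apply(method, arguments);
    }

    /** Handler shaped like AsrPlugin.onMethodCall: switch on the method name (placeholder replies). */
    static Object asrHandler(String method, Object arguments) {
        switch (method) {
            case "start":
                return "listening";
            case "stop":
                return "stopped";
            case "cancel":
                return "cancelled";
            default:
                return NOT_IMPLEMENTED;
        }
    }
}
```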

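4.2 Configure the MethodChannel on the Android side

Apart from AsrPlugin, all of these files can be found in the Baidu SDK you downloaded, but some of them need to be modified.

You can also download them from my Baidu Netdisk link below; they should already be adapted:

Link: https://pan.baidu.com/s/1ODBeSEBfpSu3-GFOZgCvgw

Extraction code: gnkq

AsrPlugin uses Flutter classes, so the Android module first has to be configured with the Flutter environment.

Add the following code to asr_plugin's build.gradle: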

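```groovy
// Read the Flutter SDK location from local.properties
def localProperties = new Properties()
def localPropertiesFile = rootProject.file('local.properties')
if (localPropertiesFile.exists()) {
    localPropertiesFile.withReader('UTF-8') { reader ->
        localProperties.load(reader)
    }
}

def flutterRoot = localProperties.getProperty('flutter.sdk')
if (flutterRoot == null) {
    throw new GradleException("Flutter SDK not found. Define location with flutter.sdk in the local.properties file.")
}

// Apply Flutter's Gradle script so this module can compile against Flutter classes
apply from: "$flutterRoot/packages/flutter_tools/gradle/flutter.gradle"

flutter {
    // Flutter project root, relative to this module (android/asr_plugin)
    source '../..'
}
```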

Once this is configured, the Android module can use Flutter's classes, such as MethodChannel.
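The Gradle snippet above reads `flutter.sdk` from local.properties (a file the Flutter tool generates) and applies flutter.gradle from that SDK. The same lookup in plain Java, as a sketch of what the script does rather than code you need in the project; `FlutterSdkLocator` is a hypothetical name:

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical re-implementation of the Gradle lookup, for illustration only. */
final class FlutterSdkLocator {
    private FlutterSdkLocator() {}

    /** Returns the path of flutter.gradle, or null when flutter.sdk is not defined. */
    static String flutterGradlePath(Reader localProperties) {
        Properties props = new Properties();
        try {
            props.load(localProperties); // same parser Gradle's Properties uses
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String flutterRoot = props.getProperty("flutter.sdk");
        if (flutterRoot == null) {
            return null;
        }
        return flutterRoot + "/packages/flutter_tools/gradle/flutter.gradle";
    }
}
```

`java.util.Properties` treats a backslash as an escape character, which is why Windows paths in local.properties are typically written with doubled backslashes (for example `flutter.sdk=C:\\src\\flutter`).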

Without this environment the Android side cannot communicate with Flutter. With it in place, the following class handles the start, stop and cancel calls coming from Flutter:

```java
package com.example.asr_plugin;

import android.Manifest;
import android.app.Activity;
import android.content.pm.PackageManager;
import android.util.Log;

import androidx.annotation.NonNull;
import androidx.annotation.Nullable;
import androidx.core.app.ActivityCompat;
import androidx.core.content.ContextCompat;

import java.util.ArrayList;
import java.util.List;
import java.util.Map;

import io.flutter.plugin.common.BinaryMessenger;
import io.flutter.plugin.common.MethodCall;
import io.flutter.plugin.common.MethodChannel;

// AsrManager, OnAsrListener, RecogResult and ResultStateful are the helper classes
// from the Baidu SDK demo / the download link above (same package).
public class AsrPlugin implements MethodChannel.MethodCallHandler {
    private static final String TAG = "AsrPlugin";
    private static final int PERMISSION_REQUEST_CODE = 123;

    private final Activity activity;
    private ResultStateful resultStateful;
    private AsrManager asrManager;

    /** Registers the handler on the "asr_plugin" channel; the name must match the Dart side. */
    public static void registerWith(Activity activity, BinaryMessenger messenger) {
        MethodChannel channel = new MethodChannel(messenger, "asr_plugin");
        channel.setMethodCallHandler(new AsrPlugin(activity));
    }

    public AsrPlugin(Activity activity) {
        this.activity = activity;
    }

    @Override
    public void onMethodCall(@NonNull MethodCall methodCall, @NonNull MethodChannel.Result result) {
        initPermission();
        switch (methodCall.method) {
            case "start":
                // Keep the reply; it is answered later in onAsrFinalResult / onAsrFinishError.
                resultStateful = ResultStateful.of(result);
                start(methodCall, resultStateful);
                break;
            case "stop":
                stop(result);
                break;
            case "cancel":
                cancel(result);
                break;
            default:
                result.notImplemented();
        }
    }

    private void start(MethodCall call, ResultStateful result) {
        if (activity == null) {
            Log.e(TAG, "Ignored start, current activity is null.");
            result.error("Ignored start, current activity is null.", null, null);
            return;
        }
        AsrManager manager = getAsrManager();
        if (manager != null) {
            manager.start(call.arguments instanceof Map ? (Map) call.arguments : null);
        } else {
            Log.e(TAG, "Ignored start, current getAsrManager is null.");
            result.error("Ignored start, current getAsrManager is null.", null, null);
        }
    }

    private void stop(MethodChannel.Result result) {
        if (asrManager != null) {
            asrManager.stop();
        }
        // Reply so that the Future returned by AsrManager.stop() on the Dart side completes.
        result.success(null);
    }

    private void cancel(MethodChannel.Result result) {
        if (asrManager != null) {
            asrManager.cancel();
        }
        result.success(null);
    }

    @Nullable
    private AsrManager getAsrManager() {
        if (asrManager == null && activity != null && !activity.isFinishing()) {
            asrManager = new AsrManager(activity, onAsrListener);
        }
        return asrManager;
    }

    /** Android 6.0+ requires dangerous permissions to be requested at runtime. */
    private void initPermission() {
        if (activity == null) {
            return;
        }
        String[] permissions = {
                Manifest.permission.RECORD_AUDIO,
                Manifest.permission.ACCESS_NETWORK_STATE,
                Manifest.permission.INTERNET,
                Manifest.permission.READ_PHONE_STATE,
                Manifest.permission.WRITE_EXTERNAL_STORAGE
        };
        List<String> toApplyList = new ArrayList<>();
        for (String perm : permissions) {
            if (ContextCompat.checkSelfPermission(activity, perm) != PackageManager.PERMISSION_GRANTED) {
                toApplyList.add(perm); // not granted yet
            }
        }
        if (!toApplyList.isEmpty()) {
            ActivityCompat.requestPermissions(activity, toApplyList.toArray(new String[0]), PERMISSION_REQUEST_CODE);
        }
    }

    private final OnAsrListener onAsrListener = new OnAsrListener() {
        @Override public void onAsrReady() { }
        @Override public void onAsrBegin() { }
        @Override public void onAsrEnd() { }
        @Override public void onAsrPartialResult(String[] results, RecogResult recogResult) { }
        @Override public void onAsrOnlineNluResult(String nluResult) { }

        @Override
        public void onAsrFinalResult(String[] results, RecogResult recogResult) {
            // Return the best candidate to Flutter.
            if (resultStateful != null && results != null && results.length > 0) {
                resultStateful.success(results[0]);
            }
        }

        @Override public void onAsrFinish(RecogResult recogResult) { }

        @Override
        public void onAsrFinishError(int errorCode, int subErrorCode, String descMessage, RecogResult recogResult) {
            if (resultStateful != null) {
                // Pass the error code along so it can be looked up in Baidu's error-code docs.
                resultStateful.error(String.valueOf(errorCode), descMessage, null);
            }
        }

        @Override public void onAsrLongFinish() { }
        @Override public void onAsrVolume(int volumePercent, int volume) { }
        @Override public void onAsrAudio(byte[] data, int offset, int length) { }
        @Override public void onAsrExit() { }
        @Override public void onOfflineLoaded() { }
        @Override public void onOfflineUnLoaded() { }
    };
}
```
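The plugin relies on ResultStateful, which comes with the downloaded files and is not shown here. A `MethodChannel.Result` is meant to be answered exactly once, yet recognition callbacks such as onAsrFinalResult and onAsrFinishError may fire late or more than once per session, so a wrapper that ignores every reply after the first is useful. The sketch below shows that idea, assuming ResultStateful behaves this way (the real class may differ); `ChannelResult` and `OnceResult` are hypothetical names, with `ChannelResult` standing in for Flutter's `MethodChannel.Result`:

```java
/** Hypothetical stand-in for io.flutter.plugin.common.MethodChannel.Result. */
interface ChannelResult {
    void success(Object value);

    void error(String code, String message, Object details);
}

/** Sketch of a reply-at-most-once wrapper in the spirit of ResultStateful. */
final class OnceResult implements ChannelResult {
    private final ChannelResult delegate;
    private boolean replied;

    private OnceResult(ChannelResult delegate) {
        this.delegate = delegate;
    }

    static OnceResult of(ChannelResult delegate) {
        return new OnceResult(delegate);
    }

    @Override
    public synchronized void success(Object value) {
        if (replied) {
            return; // drop duplicate or late replies
        }
        replied = true;
        delegate.success(value);
    }

    @Override
    public synchronized void error(String code, String message, Object details) {
        if (replied) {
            return;
        }
        replied = true;
        delegate.error(code, message, details);
    }
}
```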

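4.3 Configure packaging in the Flutter project's android folder

This mainly prevents conflicts between the app and asr_plugin from breaking the build. Add the following to the app module's build.gradle (android/app/build.gradle):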

```groovy
android {
    defaultConfig {
        ndk {
            // Package only the ABIs Flutter supports (Flutter ships no armeabi .so files).
            // x86/x86_64 are included for emulator compatibility; a release build could use e.g. abiFilters "armeabi-v7a".
            abiFilters "armeabi-v7a", "arm64-v8a", "x86_64", "x86"
        }
    }

    packagingOptions {
        // Both app and asr_plugin depend on libflutter.so and libapp.so; pickFirst avoids merge conflicts.
        // See https://github.com/card-io/card.io-Android-SDK/issues/186#issuecomment-427552552
        pickFirst 'lib/x86_64/libflutter.so'
        pickFirst 'lib/x86_64/libapp.so'
        pickFirst 'lib/x86/libflutter.so'
        pickFirst 'lib/x86/libapp.so'
        pickFirst 'lib/arm64-v8a/libflutter.so'
        pickFirst 'lib/arm64-v8a/libapp.so'
        pickFirst 'lib/armeabi-v7a/libflutter.so'
        pickFirst 'lib/armeabi-v7a/libapp.so'
    }
}
```
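Why pickFirst is needed: both app and asr_plugin end up packaging libflutter.so and libapp.so for each ABI, and when two inputs contain the same path the Android Gradle plugin stops with an error such as "More than one file was found with OS independent path ...". pickFirst tells it to keep the first copy instead. The plain-Java model below illustrates both settings: it drops ABIs outside abiFilters and fails on duplicate paths unless they are listed in pickFirst. `ApkMergeModel` is hypothetical and simplifies the real packaging task; for example, "first" here simply means insertion order.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical model of native-library merging; not the Android Gradle plugin's code. */
final class ApkMergeModel {
    private ApkMergeModel() {}

    /**
     * Merges "lib/<abi>/<file>.so" paths contributed by several modules.
     * Returns path -> name of the module whose copy is kept.
     */
    static Map<String, String> merge(Map<String, List<String>> moduleLibs,
                                     Set<String> abiFilters,
                                     Set<String> pickFirst) {
        Map<String, String> merged = new LinkedHashMap<>();
        for (Map.Entry<String, List<String>> module : moduleLibs.entrySet()) {
            for (String path : module.getValue()) {
                String abi = path.split("/")[1]; // assumes the "lib/<abi>/..." layout
                if (!abiFilters.contains(abi)) {
                    continue; // ABI not packaged
                }
                if (merged.containsKey(path)) {
                    if (!pickFirst.contains(path)) {
                        throw new IllegalStateException(
                                "More than one file was found with OS independent path '" + path + "'");
                    }
                    continue; // pickFirst: keep the copy that was added first
                }
                merged.put(path, module.getKey());
            }
        }
        return merged;
    }
}
```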

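5. Register the plugin to enable communication

In the Flutter project's android folder, register AsrPlugin, the communication class from the Android module created above, so that data can flow between the two sides.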

```java
// Keep the package declaration that Flutter generated for your app here.

import android.os.Bundle;

import androidx.annotation.NonNull;

import com.example.asr_plugin.AsrPlugin;

import io.flutter.embedding.android.FlutterActivity;
import io.flutter.embedding.engine.FlutterEngine;
import io.flutter.plugins.GeneratedPluginRegistrant;

public class MainActivity extends FlutterActivity {
    @Override
    public void configureFlutterEngine(@NonNull FlutterEngine flutterEngine) {
        GeneratedPluginRegistrant.registerWith(flutterEngine);
        // With Flutter SDK >= v1.17.0, register the custom plugin like this
        AsrPlugin.registerWith(this, flutterEngine.getDartExecutor().getBinaryMessenger());
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
    }
}
```

6. Finally, write a Dart page to show the result

```dart
import 'package:flutter/material.dart';

import 'asr_manager.dart';

void main() {
  runApp(MaterialApp(
    home: SpeakPage(),
  ));
}

/// Speech recognition page
class SpeakPage extends StatefulWidget {
  @override
  _SpeakPageState createState() => _SpeakPageState();
}

class _SpeakPageState extends State<SpeakPage>
    with SingleTickerProviderStateMixin {
  String speakTips = 'Press and hold to speak';
  String speakResult = '';
  // Sample phrases: "Forbidden City tickets", "Beijing one-day tour", "Disneyland"
  String result = '故宫门票\n北京一日游\n迪士尼乐园';
  late Animation<double> animation;
  late AnimationController controller;

  @override
  void initState() {
    super.initState();
    controller = AnimationController(
        vsync: this, duration: const Duration(milliseconds: 1000));
    animation = CurvedAnimation(parent: controller, curve: Curves.easeIn)
      ..addStatusListener((status) {
        // Ping-pong the mic animation while recognizing.
        if (status == AnimationStatus.completed) {
          controller.reverse();
        } else if (status == AnimationStatus.dismissed) {
          controller.forward();
        }
      });
  }

  @override
  void dispose() {
    controller.dispose();
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      body: Container(
        padding: const EdgeInsets.all(30),
        child: Center(
          child: Column(
            mainAxisAlignment: MainAxisAlignment.spaceBetween,
            children: <Widget>[_topItem(), _bottomItem()],
          ),
        ),
      ),
    );
  }

  void _speakStart() {
    controller.forward();
    setState(() {
      speakTips = '- Recognizing -';
    });
    AsrManager.start().then((text) {
      // The page may have been closed before recognition finished.
      if (!mounted) return;
      if (text != null && text.isNotEmpty) {
        setState(() {
          speakResult = text;
          result = speakResult;
        });
        // Navigator.pop(context);
        // Navigator.push(context, MaterialPageRoute(
        //     builder: (context) => SearchPage(keyword: speakResult)));
        print('Recognized: $text');
      }
    }).catchError((e) {
      print('Recognition failed: $e');
    });
  }

  void _speakStop() {
    setState(() {
      speakTips = 'Press and hold to speak';
    });
    controller.reset();
    controller.stop();
    AsrManager.stop();
  }

  Widget _topItem() {
    return Column(
      children: <Widget>[
        const Padding(
            padding: EdgeInsets.fromLTRB(0, 30, 0, 30),
            child: Text('You can say:',
                style: TextStyle(fontSize: 16, color: Colors.black54))),
        Text(result,
            textAlign: TextAlign.center,
            style: const TextStyle(fontSize: 15, color: Colors.grey)),
        Padding(
          padding: const EdgeInsets.all(20),
          child: Text(speakResult, style: const TextStyle(color: Colors.blue)),
        )
      ],
    );
  }

  Widget _bottomItem() {
    return FractionallySizedBox(
      widthFactor: 1,
      child: Stack(
        children: <Widget>[
          GestureDetector(
            // Press to start recognition; release (or cancel) to stop.
            onTapDown: (e) => _speakStart(),
            onTapUp: (e) => _speakStop(),
            onTapCancel: () => _speakStop(),
            child: Center(
              child: Column(
                children: <Widget>[
                  Padding(
                    padding: const EdgeInsets.all(10),
                    child: Text(speakTips,
                        style: const TextStyle(color: Colors.blue, fontSize: 12)),
                  ),
                  Stack(
                    children: <Widget>[
                      Container(
                        // Reserve space so the parent layout doesn't resize during the animation.
                        height: MIC_SIZE,
                        width: MIC_SIZE,
                      ),
                      Center(child: AnimatedMic(animation: animation))
                    ],
                  )
                ],
              ),
            ),
          ),
          Positioned(
            right: 0,
            bottom: 20,
            child: GestureDetector(
              onTap: () {
                Navigator.pop(context);
              },
              child: const Icon(Icons.close, size: 30, color: Colors.grey),
            ),
          )
        ],
      ),
    );
  }
}

const double MIC_SIZE = 80;

/// Microphone button whose opacity and size follow [animation].
class AnimatedMic extends AnimatedWidget {
  static final _opacityTween = Tween<double>(begin: 1, end: 0.5);
  static final _sizeTween = Tween<double>(begin: MIC_SIZE, end: MIC_SIZE - 20);

  const AnimatedMic({Key? key, required Animation<double> animation})
      : super(key: key, listenable: animation);

  @override
  Widget build(BuildContext context) {
    final animation = listenable as Animation<double>;
    return Opacity(
      opacity: _opacityTween.evaluate(animation),
      child: Container(
        height: _sizeTween.evaluate(animation),
        width: _sizeTween.evaluate(animation),
        decoration: BoxDecoration(
          color: Colors.blue,
          borderRadius: BorderRadius.circular(MIC_SIZE / 2),
        ),
        child: const Icon(Icons.mic, color: Colors.white, size: 30),
      ),
    );
  }
}
```
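The page and the plugin cooperate through a pending reply: on press, `_speakStart()` calls `AsrManager.start()`, whose Future stays pending; on release, `_speakStop()` calls `AsrManager.stop()`, the recognizer finishes, and AsrPlugin answers the saved result from onAsrFinalResult (or onAsrFinishError), which completes the Dart Future. A plain-Java sketch of that pattern using `CompletableFuture`; `RecognizerModel` and its methods are hypothetical and only mirror the callback names:

```java
import java.util.concurrent.CompletableFuture;

/** Hypothetical model of the reply held between start() and the final recognizer callback. */
final class RecognizerModel {
    private CompletableFuture<String> pending;

    /** Like onTapDown -> AsrManager.start(): returns a future that completes later. */
    synchronized CompletableFuture<String> start() {
        if (pending != null && !pending.isDone()) {
            pending.cancel(false); // a new session supersedes an unfinished one
        }
        pending = new CompletableFuture<>();
        return pending;
    }

    /** Like onAsrFinalResult: completes the pending future with the first candidate. */
    synchronized void onFinalResult(String[] results) {
        if (pending != null && results != null && results.length > 0) {
            pending.complete(results[0]); // no effect if already completed
        }
    }

    /** Like onAsrFinishError: fails the pending future (ignored if already completed). */
    synchronized void onError(String description) {
        if (pending != null) {
            pending.completeExceptionally(new IllegalStateException(description));
        }
    }
}
```

Because `complete` and `completeExceptionally` do nothing once the future is done, a late error after a successful result cannot overwrite it, which is the same guarantee the plugin needs from its saved reply.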

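There are quite a few steps, and I may have overlooked some things, so if you run into problems, feel free to ask. This implementation mainly comes from the teacher of the Flutter video course I studied; his blog: https://blog.csdn.net/fengyuzhengfan.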