2.关于Transformer

关于Transformer

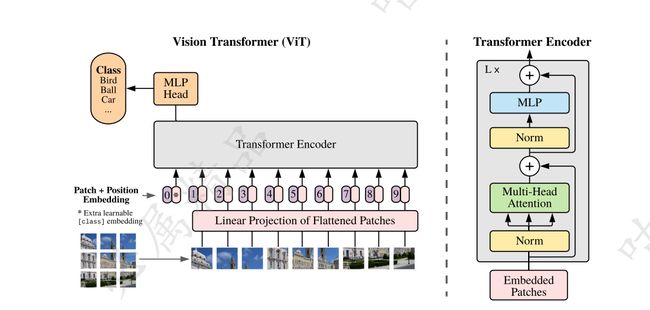

模型架构

举例输入图像为3x224x224

Embedded Patches

将一张图的多个区域进行卷积,将每个区域转换成多维度向量(多少卷积核就有多少维向量)

self.patch_embeddings = Conv2d(in_channels=in_channels, # 颜色通道 3

out_channels=config.hidden_size, # 卷积核个数,也就是输出通道数 768

kernel_size=patch_size, # 卷积核大小 16

stride=patch_size) # 步长 16

# 197个向量,每个向量768维度,196 = 14*14 剩下一个是cls_token (1,1,768)

self.position_embeddings = nn.Parameter(torch.zeros(1, n_patches+1, config.hidden_size))

# (1,1,768) 用来收集每个向量的信息

self.cls_token = nn.Parameter(torch.zeros(1, 1, config.hidden_size)) # 填充为0

Norm

类似于nlp,不使用batch norm,因为每个维度相同位置表示的信息不一样,所以对于transformer来说,只使用layer norm

# 类似于nlp,不使用batch norm,因为每个维度相同位置表示的信息不一样,所以对于transformer来说,只使用layer norm

self.encoder_norm = LayerNorm(config.hidden_size, eps=1e-6)

Multi-head Attention

这里是采用12头,输入数据位(16,197,768) 经过多头转换后(16,12,197,64)分别处理,最后在进行汇总

# 初始化多头机制

self.num_attention_heads = config.transformer["num_heads"]

self.attention_head_size = int(config.hidden_size / self.num_attention_heads) # 每一头处理一个向量中的一个数据 768/12 = 64

self.all_head_size = self.num_attention_heads * self.attention_head_size

# 初始化 attention 的q,k,v

self.query = Linear(config.hidden_size, self.all_head_size)

self.key = Linear(config.hidden_size, self.all_head_size)

self.value = Linear(config.hidden_size, self.all_head_size)

多头计算

def forward(self, hidden_states):

mixed_query_layer = self.query(hidden_states)#Linear(in_features=768, out_features=768, bias=True)

mixed_key_layer = self.key(hidden_states)

mixed_value_layer = self.value(hidden_states)

query_layer = self.transpose_for_scores(mixed_query_layer)

key_layer = self.transpose_for_scores(mixed_key_layer)

value_layer = self.transpose_for_scores(mixed_value_layer)

# 计算得分

attention_scores = torch.matmul(query_layer, key_layer.transpose(-1, -2))

attention_scores = attention_scores / math.sqrt(self.attention_head_size)

attention_probs = self.softmax(attention_scores)

weights = attention_probs if self.vis else None

attention_probs = self.attn_dropout(attention_probs)

# 将q计算出来的权重,对value进行加权,不改变value的维度

context_layer = torch.matmul(attention_probs, value_layer)

context_layer = context_layer.permute(0, 2, 1, 3).contiguous()

new_context_layer_shape = context_layer.size()[:-2] + (self.all_head_size,)

context_layer = context_layer.view(*new_context_layer_shape) # 重新升维成(16,197,768)

attention_output = self.out(context_layer)

attention_output = self.proj_dropout(attention_output)

return attention_output, weights

MPL

前面多维向量计算的结果进行汇总,一般会有两层的MPL

def forward(self, x):

# 全连接

x = self.fc1(x)

x = self.act_fn(x)

x = self.dropout(x)

# 全连接

x = self.fc2(x)

x = self.dropout(x)

return x

残差连接

class Block(nn.Module):

def __init__(self, config, vis):

super(Block, self).__init__()

self.hidden_size = config.hidden_size

self.attention_norm = LayerNorm(config.hidden_size, eps=1e-6)

self.ffn_norm = LayerNorm(config.hidden_size, eps=1e-6)

self.ffn = Mlp(config)

self.attn = Attention(config, vis)

def forward(self, x):

h = x # 保存原始数据,后续做残差连接

x = self.attention_norm(x) # 标准化

x, weights = self.attn(x) # 注意力机制

x = x + h # 做了一个残差连接

h = x

x = self.ffn_norm(x)

x = self.ffn(x)

x = x + h

return x, weights