Adding and removing Kubernetes nodes with RKE

1 Removing a worker node

# List the node names

kubectl get nodes

# Mark the node as unschedulable

kubectl cordon worker8

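# A DaemonSet ensures that all (or some) nodes in the cluster run a copy of a Pod, which is why drain needs --ignore-daemonsets

# Drain the node: evict its Pods so that they are rescheduled onto other nodes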

kubectl drain worker8 --ignore-daemonsets

# Delete the node

kubectl delete node worker8

The actual commands and their output were as follows:

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

etcd1 Ready etcd 25d v1.19.3

etcd2 Ready etcd 25d v1.19.3

etcd3 Ready etcd 25d v1.19.3

master1 Ready controlplane 25d v1.19.3

master2 Ready controlplane 25d v1.19.3

master3 Ready controlplane 25d v1.19.3

worker1 Ready worker 25d v1.19.3

worker2 Ready worker 25d v1.19.3

worker3 Ready worker 25d v1.19.3

worker4 Ready worker 25d v1.19.3

worker5 Ready worker 25d v1.19.3

worker6 Ready worker 25d v1.19.3

worker7 Ready worker 25d v1.19.3

worker8 Ready worker 25d v1.19.3

worker9 Ready worker 25d v1.19.3

[root@master1 ~]# kubectl cordon worker8

node/worker8 cordoned

[root@master1 ~]# kubectl drain worker8 --ignore-daemonsets

node/worker8 already cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/canal-cgfh7

evicting pod middleware/nacos-0

pod/nacos-0 evicted

node/worker8 evicted

[root@master1 ~]# kubectl delete node worker8

node "worker8" deleted

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

etcd1 Ready etcd 25d v1.19.3

etcd2 Ready etcd 25d v1.19.3

etcd3 Ready etcd 25d v1.19.3

master1 Ready controlplane 25d v1.19.3

master2 Ready controlplane 25d v1.19.3

master3 Ready controlplane 25d v1.19.3

worker1 Ready worker 25d v1.19.3

worker2 Ready worker 25d v1.19.3

worker3 Ready worker 25d v1.19.3

worker4 Ready worker 25d v1.19.3

worker5 Ready worker 25d v1.19.3

worker6 Ready worker 25d v1.19.3

worker7 Ready worker 25d v1.19.3

worker9 Ready worker 25d v1.19.3

To remove the node permanently, you also need to delete its entry from cluster.yml.

# Switch to the rancher user

su - rancher

# Remove the node definition

vi cluster.yml

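# Redeploy the cluster

rke up --update-only --config cluster.yml

Only after cluster.yml has been modified and the cluster redeployed does docker ps show that the Rancher-related containers are gone.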
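The kubectl part of this section can be collected into a small helper. The following is only a sketch under stated assumptions: the function names run and remove_worker and the DRY_RUN switch are made up for illustration and are not part of kubectl or RKE. Editing cluster.yml and running rke up afterwards remain manual steps.

```shell
#!/usr/bin/env bash
# Sketch: cordon, drain and delete one worker node, in the order used above.
# DRY_RUN is a hypothetical switch: when it is 1, commands are printed, not run.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

remove_worker() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: remove_worker <node-name>" >&2
    return 1
  fi
  # Stop new Pods from being scheduled onto the node.
  run kubectl cordon "$node" || return 1
  # Evict the Pods; DaemonSet-managed Pods are skipped.
  run kubectl drain "$node" --ignore-daemonsets || return 1
  # Remove the node object from the cluster.
  run kubectl delete node "$node" || return 1
}
```

Running DRY_RUN=1 remove_worker worker8 prints the three kubectl commands without touching the cluster, which is a cheap way to check the node name before doing it for real.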

2 Adding a worker node

su - rancher

# Apply the updated node configuration

rke up --update-only --config cluster.yml

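# How to raise the log level; in practice it did not help much

RKE_LOG_LEVEL=DEBUG rke up --update-only --config cluster.yml

If the original worker8 node is being restored, first restart Docker on worker8 with service docker restart, then go back to the master node and run rke up --update-only --config cluster.yml; only then does it succeed.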
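The restore order described above (restart Docker on the node, then run rke up from the master) could be scripted roughly as follows. This is a sketch, not a tested procedure: rejoin_worker, run, SSH_USER, CLUSTER_CONFIG and DRY_RUN are hypothetical names, and it assumes the SSH user is allowed to restart Docker through sudo on the node.

```shell
#!/usr/bin/env bash
# Sketch: restart Docker on a returning node, then re-run RKE from the master.
# SSH_USER, CLUSTER_CONFIG and DRY_RUN are hypothetical knobs for illustration.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

rejoin_worker() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: rejoin_worker <node-name>" >&2
    return 1
  fi
  # Step 1: restart Docker on the node itself (assumes sudo rights there).
  run ssh "${SSH_USER:-rancher}@${node}" "sudo service docker restart" || return 1
  # Step 2: back on the master, let RKE reconcile the cluster.
  run rke up --update-only --config "${CLUSTER_CONFIG:-cluster.yml}"
}
```

DRY_RUN=1 rejoin_worker worker8 shows the two commands in the order they would run.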

2.1 worker8:10250 cannot be reached

Run on the master node; worker8 can be reached over SSH:

[rancher@master1 ~]$ ssh -i ~/.ssh/id_rsa rancher@worker8

Last login: Sat Aug 3 09:09:06 2024

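The error below appears because rke up --update-only --config cluster.yml was run immediately after worker8 had been removed, which does not work; service docker restart has to be run on worker8 first.

FATA[0019] [[network] Host [master3] is not able to connect to the following ports: [worker8:10250]. Please check network policies and firewall rules]

# Testing the connection by hand shows no problem (note that this only exercises SSH on port 22, not port 10250). Run on master1: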

su - rancher

[rancher@master1 ~]$ ssh -i ~/.ssh/id_rsa rancher@worker8

Last login: Sun Aug 4 07:40:49 2024 from master1

# Check the firewall state, on worker8

[root@worker8 ~]# firewall-cmd --state

not running

# Check whether the port is being listened on

[root@worker8 ~]# netstat -tuln | grep :10250

tcp6 0 0 :::10250 :::* LISTEN

# On the healthy worker9 node, the listener on the port is kubelet

[root@worker9 ~]# lsof -i:10250

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kubelet 25464 root 27u IPv6 15603796 0t0 TCP *:10250 (LISTEN)

kubelet 25464 root 31u IPv6 55613041 0t0 TCP worker9:10250->worker2:58563 (ESTABLISHED)

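# Inspecting the faulty worker8 suggests that the container below is what went wrong

# The extra container exists because the node was re-added to the cluster without restarting Docker on worker8 first, which causes the problem

# Remove the container: docker rm -f 88217223a4d6

# Then restart Docker: service docker restart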

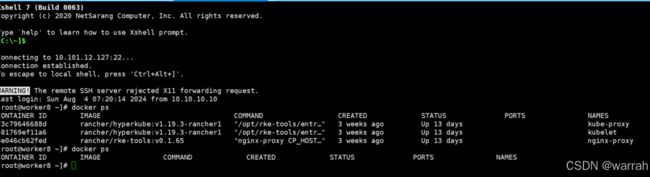

[root@worker8 kubernetes]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

88217223a4d6 rancher/rke-tools:v0.1.65 "/docker-entrypoint.…" 19 seconds ago Up 18 seconds 80/tcp, 0.0.0.0:10250->1337/tcp rke-worker-port-listener
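To spot a leftover container like the one above without reading the docker ps table by eye, the port column can be filtered. The helper below is a sketch; containers_on_port is a hypothetical name, and the only docker feature it relies on is the --format option of docker ps with the .Names and .Ports fields.

```shell
#!/usr/bin/env bash
# Sketch: print the names of containers that publish a given host port.
# Input (stdin): lines in the form "<name>|<ports>", as produced by
#   docker ps --format '{{.Names}}|{{.Ports}}'

containers_on_port() {
  port="$1"
  # A published port appears in the ports column as "<host-ip>:<port>-><target>".
  awk -F '|' -v port="$port" 'index($2, ":" port "->") > 0 { print $1 }'
}
```

On worker8 this would be used as docker ps --format '{{.Names}}|{{.Ports}}' | containers_on_port 10250. With the docker ps output shown above it prints rke-worker-port-listener, which is the container the notes recommend removing before restarting Docker.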

2.2 "worker1" not found

After the disks had been wiped, restoring the worker1, worker4 and worker7 nodes produced the following errors:

ERRO[0184] Host worker1 failed to report Ready status with error: [worker] Error getting node worker1: "worker1" not found

INFO[0184] [worker] Now checking status of node worker4, try #1

ERRO[0209] Host worker4 failed to report Ready status with error: [worker] Error getting node worker4: "worker4" not found

INFO[0209] [worker] Now checking status of node worker7, try #1

ERRO[0234] Host worker7 failed to report Ready status with error: [worker] Error getting node worker7: "worker7" not found

# Check the network policies; nothing wrong there

[root@master1 ~]# kubectl get networkpolicies -A

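No resources found

The workaround was a different approach: rename the hosts (one hostnamectl command on each of the three nodes) and update /etc/hosts accordingly.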

hostnamectl set-hostname worker01

hostnamectl set-hostname worker04

hostnamectl set-hostname worker07

vi /etc/hosts
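The /etc/hosts edit can also be done non-interactively. The function below is a minimal sketch; rename_in_hosts is a hypothetical helper name, and it only rewrites host names that match exactly, so worker1 does not accidentally change worker10. Comment lines are left untouched.

```shell
#!/usr/bin/env bash
# Sketch: replace one host name with another in a hosts-style file.
# Usage: rename_in_hosts <old-name> <new-name> [file]   (file defaults to /etc/hosts)

rename_in_hosts() {
  old="$1"
  new="$2"
  file="${3:-/etc/hosts}"
  tmp="$(mktemp)" || return 1
  # Field 1 is the IP address; fields 2..NF are host names and aliases.
  if awk -v old="$old" -v new="$new" '
       /^[[:space:]]*#/ { print; next }
       { for (i = 2; i <= NF; i++) if ($i == old) $i = new; print }
     ' "$file" > "$tmp"; then
    # Write back through the existing file so its permissions are kept.
    cat "$tmp" > "$file"
    status=$?
  else
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}
```

For example, rename_in_hosts worker1 worker01 would be run as root on every machine whose /etc/hosts lists the old name; trying it on a copy of the file first is safer.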

Then run rke up --update-only --config cluster.yml again:

INFO[0015] [reconcile] Check etcd hosts to be added

INFO[0015] [hosts] Cordoning host [worker1]

WARN[0040] [hosts] Can't find node by name [worker1]

INFO[0040] [dialer] Setup tunnel for host [worker1]

INFO[0043] [dialer] Setup tunnel for host [worker1]

INFO[0046] [dialer] Setup tunnel for host [worker1]

WARN[0049] [reconcile] Couldn't clean up worker node [worker1]: Not able to reach the host: Can't retrieve Docker Info: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.24/info": Failed to dial ssh using address [worker1:22]: dial tcp: lookup worker1 on 223.5.5.5:53: no such host

INFO[0049] [hosts] Cordoning host [worker4]

WARN[0074] [hosts] Can't find node by name [worker4]

INFO[0074] [dialer] Setup tunnel for host [worker4]

INFO[0078] [dialer] Setup tunnel for host [worker4]

INFO[0081] [dialer] Setup tunnel for host [worker4]

WARN[0084] [reconcile] Couldn't clean up worker node [worker4]: Not able to reach the host: Can't retrieve Docker Info: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.24/info": Failed to dial ssh using address [worker4:22]: dial tcp: lookup worker4 on 223.5.5.5:53: no such host

INFO[0084] [hosts] Cordoning host [worker7]

WARN[0109] [hosts] Can't find node by name [worker7]

INFO[0109] [dialer] Setup tunnel for host [worker7]

INFO[0112] [dialer] Setup tunnel for host [worker7]

INFO[0116] [dialer] Setup tunnel for host [worker7]

WARN[0119] [reconcile] Couldn't clean up worker node [worker7]: Not able to reach the host: Can't retrieve Docker Info: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.24/info": Failed to dial ssh using address [worker7:22]: dial tcp: lookup worker7 on 223.5.5.5:53: no such host

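The warnings above come from RKE trying to clean up the old worker1, worker4 and worker7 nodes, whose names no longer resolve; the renamed worker01, worker04 and worker07 nodes were nevertheless installed successfully.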