Deploying an ELK logging system with Docker + Kafka

1. Deploy elasticsearch

docker pull elasticsearch:7.6.2

mkdir -p /data/elk/es/config

vi /data/elk/es/config/elasticsearch.yml

------------------------write the following---------------------------

http.port: 9200

network.host: 0.0.0.0

cluster.name: "my-el"

http.cors.enabled: true

http.cors.allow-origin: "*"

------------------------end---------------------------

docker run -itd --name es elasticsearch:7.6.2

docker cp es:/usr/share/elasticsearch/data /data/elk/es

docker cp es:/usr/share/elasticsearch/logs /data/elk/es

docker rm -f es

chown -R 1000:1000 /data/elk/es

docker run -itd -p 9200:9200 -p 9300:9300 --name es -e ES_JAVA_OPTS="-Xms512m -Xmx512m" -e "discovery.type=single-node" --restart=always -v /data/elk/es/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/elk/es/data:/usr/share/elasticsearch/data -v /data/elk/es/logs:/usr/share/elasticsearch/logs elasticsearch:7.6.2

2. elasticsearch-head visualization tool (optional)

docker pull mobz/elasticsearch-head:5

docker run -d --restart=always -p 9100:9100 --name elasticsearch-head mobz/elasticsearch-head:5

mkdir -p /data/elk/elasticsearch-head

cd /data/elk/elasticsearch-head

docker cp elasticsearch-head:/usr/src/app/_site/vendor.js ./

sed -i '/contentType:/s/application\/x-www-form-urlencoded/application\/json;charset=UTF-8/' vendor.js

sed -i '/var inspectData = s.contentType/s/application\/x-www-form-urlencoded/application\/json;charset=UTF-8/' vendor.js

docker rm -f elasticsearch-head

docker run -d --restart=always -p 9100:9100 -v /data/elk/elasticsearch-head/vendor.js:/usr/src/app/_site/vendor.js --name elasticsearch-head mobz/elasticsearch-head:5

3. Deploy kibana

docker pull kibana:7.6.2

docker inspect --format '{{ .NetworkSettings.IPAddress }}' es

mkdir -p /data/elk/kibana

vi /data/elk/kibana/kibana.yml

server.name: kibana

server.host: "0"

elasticsearch.hosts: ["http://<elasticsearch-container-ip>:9200"]

xpack.monitoring.ui.container.elasticsearch.enabled: true

i18n.locale: "zh-CN"

kibana.index: ".kibana"

docker run -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 --name kibana -p 5601:5601 -v /data/elk/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml kibana:7.6.2

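4. Deploy kafka (Kafka is optional. It addresses log loss under heavy I/O: logs are pushed to Kafka first and Logstash reads them asynchronously, which eases the load on the server.)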

docker pull wurstmeister/zookeeper

docker run -d --name zookeeper -p 2181:2181 -v /etc/localtime:/etc/localtime wurstmeister/zookeeper

docker pull wurstmeister/kafka

Note: inside a container, localhost refers to the container itself, so replace <host-ip> below with the Docker host's IP address.

docker run -d --name kafka -p 9092:9092 \
-e KAFKA_BROKER_ID=0 \
-e KAFKA_ZOOKEEPER_CONNECT=<host-ip>:2181 \
-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://<host-ip>:9092 \
-e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
-v /etc/localtime:/etc/localtime \
wurstmeister/kafka

docker exec -it kafka bash

cd /opt/kafka/bin/

# <host-ip> is the Docker host's IP (ZooKeeper is not reachable as localhost from inside the kafka container)

# Create the topic
./kafka-topics.sh --create --zookeeper <host-ip>:2181 --replication-factor 1 --partitions 1 --topic elk-log

# List topics
./kafka-topics.sh --list --zookeeper <host-ip>:2181

# Delete the topic
./kafka-topics.sh --delete --zookeeper <host-ip>:2181 --topic elk-log

# Send test messages
./kafka-console-producer.sh --broker-list localhost:9092 --topic elk-log

# Read the messages back
./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic elk-log

5. Deploy logstash

docker pull logstash:7.6.2

mkdir -p /data/elk/logstash

vi /data/elk/logstash/logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://<elasticsearch-container-ip>:9200" ]

xpack.monitoring.elasticsearch.username: elastic

xpack.monitoring.elasticsearch.password: changeme

path.config: /data/docker/logstash/conf.d/*.conf

path.logs: /var/log/logstash

mkdir -p /data/elk/logstash/conf.d

vi /data/elk/logstash/conf.d/syslog.conf

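input {
  kafka {
    # localhost inside the logstash container is the container itself; use an address it can reach
    bootstrap_servers => "<host-ip>:9092"
    # Must match the topic the application writes to (<spring.application.name>-log)
    topics => ["system-provder-log"]
    codec => "json"
    auto_offset_reset => "earliest"
    decorate_events => true
    type => "system_log"
  }
}

filter {
  # Shift @timestamp by 8 hours (UTC+8) and store the result in "timestamp"
  ruby {
    code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  }
  grok {
    match => {"message"=> "%{TIMESTAMP_ISO8601:timestamp}"}
  }
  # Reduce "timestamp" to yyyy.MM.dd so it can be used in the index name
  mutate {
    convert => ["timestamp", "string"]
    gsub => [
      "timestamp", "T([\S\s]*?)Z", "",
      "timestamp", "-", "."
    ]
  }
}

output {
  stdout {
    codec => rubydebug {metadata => true}
  }
  if [type] == "system_log" {
    elasticsearch {
      hosts => ["http://<elasticsearch-container-ip>:9200"]
      index => "system_log-%{timestamp}"
    }
  }
}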
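To make the filter above easier to follow, here is a minimal Java sketch of how the index name is derived from an event's @timestamp. It is only an illustration, not part of Logstash: the class and method names (IndexNameSketch, indexFor) are hypothetical, and it assumes the grok pattern does not match, so "timestamp" holds only the value set by the ruby filter.

```java
import java.time.Instant;
import java.time.temporal.ChronoUnit;

// Hypothetical helper that mirrors the filter steps; it is not a Logstash API.
class IndexNameSketch {

    static String indexFor(Instant eventTime) {
        // ruby filter: shift @timestamp forward by 8 hours (UTC to UTC+8)
        Instant shifted = eventTime.plus(8, ChronoUnit.HOURS);
        // mutate convert: the value becomes an ISO-8601 string such as 2020-04-01T02:30:00Z
        String text = shifted.toString();
        // first gsub: drop everything from "T" through "Z", leaving only the date
        text = text.replaceAll("T([\\S\\s]*?)Z", "");
        // second gsub: replace "-" with "." to get yyyy.MM.dd
        text = text.replace("-", ".");
        // elasticsearch output: index => "system_log-%{timestamp}"
        return "system_log-" + text;
    }

    public static void main(String[] args) {
        // 18:30 UTC on March 31 is already April 1 in UTC+8
        System.out.println(indexFor(Instant.parse("2020-03-31T18:30:00Z")));
        // prints "system_log-2020.04.01"
    }
}
```

The effect is one index per day, with the day boundary falling at midnight UTC+8 rather than midnight UTC.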

docker run -d --name logstash -p 5044:5044 --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 -v /data/elk/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml -v /data/elk/logstash/conf.d/:/data/docker/logstash/conf.d/ logstash:7.6.2

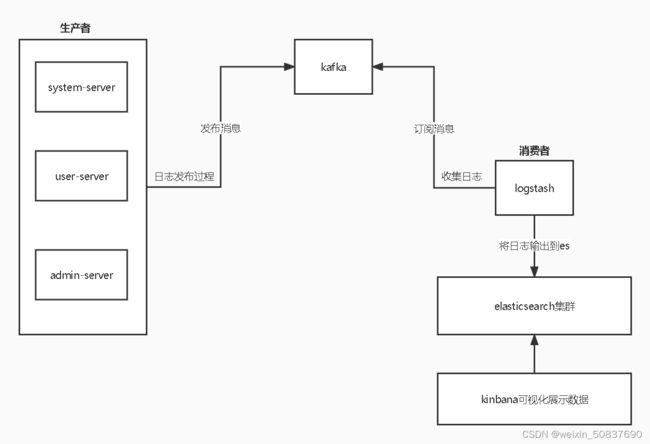

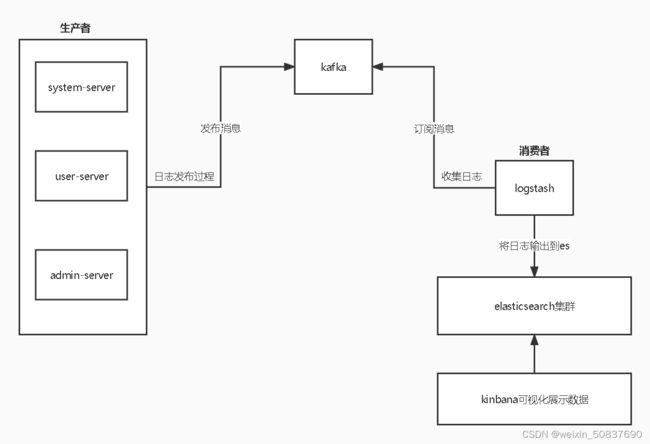

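Overall flow after integrating Kafka

Integrating Spring Cloud services with the ELK logging system (it is best to do this in a shared common module that business modules simply import; do not pull in other logging frameworks, to avoid conflicts!)

1. Add the Maven dependencies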

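<dependency>
    <groupId>com.github.danielwegener</groupId>
    <artifactId>logback-kafka-appender</artifactId>
    <version>0.2.0-RC2</version>
</dependency>
<dependency>
    <groupId>ch.qos.logback</groupId>
    <artifactId>logback-classic</artifactId>
</dependency>
<dependency>
    <groupId>ch.qos.logback</groupId>
    <artifactId>logback-core</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>6.6</version>
</dependency>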

2. Create LogIpConfig.java, which is used later to print the host IP address in each log entry

package com.zxj.elk;

import ch.qos.logback.classic.pattern.ClassicConverter;
import ch.qos.logback.classic.spi.ILoggingEvent;
import lombok.extern.slf4j.Slf4j;

import java.net.InetAddress;
import java.net.UnknownHostException;

@Slf4j
public class LogIpConfig extends ClassicConverter {

    // Resolved once at class load and reused for every log event
    private static String webIP;

    static {
        try {
            webIP = InetAddress.getLocalHost().getHostAddress();
        } catch (UnknownHostException e) {
            log.error("Failed to resolve the host IP for logging", e);
            webIP = null;
        }
    }

    // Called by logback whenever the %ip conversion word is rendered
    @Override
    public String convert(ILoggingEvent event) {
        return webIP;
    }
}
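On some hosts and containers, InetAddress.getLocalHost() can resolve to a loopback address such as 127.0.0.1, depending on how the host name is mapped. If that happens, one possible alternative is to scan the network interfaces instead. The following is only a sketch under that assumption; the class and method names (HostIpSketch, firstNonLoopbackIpv4) are hypothetical and are not part of the original setup.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.Collections;
import java.util.Enumeration;

// Hypothetical alternative lookup; LogIpConfig above does not use it.
class HostIpSketch {

    /** Returns the first non-loopback IPv4 address found, or null if there is none. */
    static String firstNonLoopbackIpv4() {
        try {
            Enumeration<NetworkInterface> nics = NetworkInterface.getNetworkInterfaces();
            if (nics == null) {
                return null; // no interfaces reported on this machine
            }
            for (NetworkInterface nic : Collections.list(nics)) {
                // Skip interfaces that are down or loopback-only
                if (!nic.isUp() || nic.isLoopback()) {
                    continue;
                }
                for (InetAddress addr : Collections.list(nic.getInetAddresses())) {
                    if (addr instanceof Inet4Address && !addr.isLoopbackAddress()) {
                        return addr.getHostAddress();
                    }
                }
            }
        } catch (SocketException e) {
            // Interface enumeration failed; fall through and report "unknown"
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(firstNonLoopbackIpv4());
    }
}
```

On a machine with several interfaces (for example Docker bridges), the first match is not necessarily the address you want, so treat the result as a best guess.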

3. Create the logback-spring.xml file (publishes logs to Kafka)

<configuration debug="false">
    <conversionRule conversionWord="ip" converterClass="com.zxj.elk.LogIpConfig"/>
    <springProperty scope="context" name="springAppName" source="spring.application.name"/>
    <conversionRule conversionWord="clr"
                    converterClass="org.springframework.boot.logging.logback.ColorConverter"/>
    <conversionRule conversionWord="wex"
                    converterClass="org.springframework.boot.logging.logback.WhitespaceThrowableProxyConverter"/>
    <conversionRule conversionWord="wEx"
                    converterClass="org.springframework.boot.logging.logback.ExtendedWhitespaceThrowableProxyConverter"/>
    <property name="CONSOLE_LOG_PATTERN"
              value="${CONSOLE_LOG_PATTERN:-%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}}"/>
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>
    <appender name="kafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>GMT+8</timeZone>
                </timestamp>
                <pattern>
                    <pattern>
                        {
                        "ip":"%ip",
                        "severity": "%level",
                        "service": "${springAppName:-}",
                        "trace": "%X{X-B3-TraceId:-}",
                        "span": "%X{X-B3-SpanId:-}",
                        "parent": "%X{X-B3-ParentSpanId:-}",
                        "exportable": "%X{X-Span-Export:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger{40}",
                        "rest": "%message",
                        "stack_trace": "%exception{30}"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <topic>${springAppName:-}-log</topic>
        <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.NoKeyKeyingStrategy"/>
        <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy"/>
        <producerConfig>bootstrap.servers=localhost:9092</producerConfig>
        <producerConfig>acks=0</producerConfig>
        <producerConfig>linger.ms=1000</producerConfig>
        <producerConfig>max.block.ms=0</producerConfig>
        <producerConfig>client.id=0</producerConfig>
        <appender-ref ref="CONSOLE"/>
    </appender>
    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="kafkaAppender"/>
    </root>
</configuration>

Open elasticsearch-head (ip:9100) and check whether the index has been created. If it is listed, the setup works.
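If elasticsearch-head is not installed (it is optional), the same check can be made against Elasticsearch's _cat/indices API. The sketch below uses the JDK 11+ HTTP client and assumes Elasticsearch is reachable at localhost:9200 and that the indices follow the system_log-* naming from the Logstash output; the class and method names (IndexCheckSketch, catIndicesUri) are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical check; host, port and index pattern are assumptions to adjust.
class IndexCheckSketch {

    /** Builds the _cat/indices URL for the given index pattern (?v adds column headers). */
    static URI catIndicesUri(String host, int port, String pattern) {
        return URI.create("http://" + host + ":" + port + "/_cat/indices/" + pattern + "?v");
    }

    public static void main(String[] args) throws InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                .build();
        HttpRequest request = HttpRequest.newBuilder(catIndicesUri("localhost", 9200, "system_log-*"))
                .GET()
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            // One line per matching index is printed after the header row
            System.out.println(response.statusCode());
            System.out.println(response.body());
        } catch (IOException e) {
            System.out.println("Elasticsearch is not reachable: " + e.getMessage());
        }
    }
}
```

An empty body below the header row means no matching index exists yet, which usually points at the Kafka topic name or the Logstash connection settings.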