Paper Study 11: Boundary-Guided Camouflaged Object Detection

Code source

GitHub - thograce/BGNet: Boundary-Guided Camouflaged Object Detection

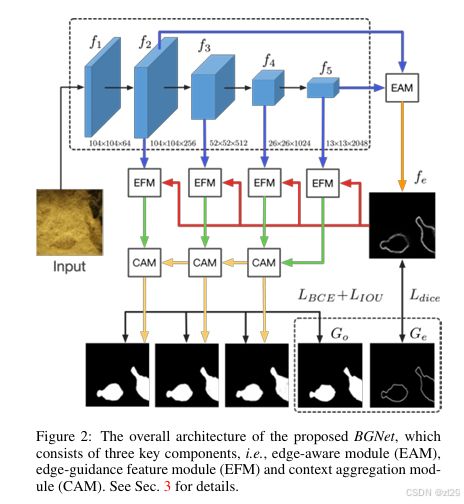

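Module purpose

BGNet uses additional object-related edge semantics to guide feature learning for the COD (camouflaged object detection) task, forcing the model to produce features that highlight object structure. This mechanism improves the precise localization of object boundaries and, in turn, camouflaged object detection performance.

Module structure

BGNet is built on a Res2Net-50 backbone: the encoder extracts multi-level features, and the decoder produces the segmentation map through the EAM, EFM and CAM modules.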

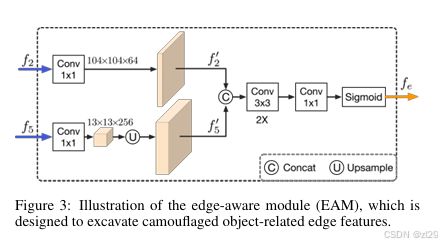
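
To make the multi-level features concrete, the sketch below tabulates the shape of each encoder output. It assumes the usual ResNet-style layout in which the four stages have strides 4, 8, 16 and 32, and a 416×416 input. Both the input size and the helper name `encoder_shapes` are assumptions for illustration. The channel counts (256, 512, 1024, 2048) are the ones passed to `EFM` in the code later in this post.

```python
# Illustrative shape bookkeeping only; no deep-learning library required.
# Assumption: stage strides 4/8/16/32 (ResNet-style) and a 416x416 input.
STAGES = [  # (name used in the code, channels, stride)
    ("x1", 256, 4),
    ("x2", 512, 8),
    ("x3", 1024, 16),
    ("x4", 2048, 32),
]


def encoder_shapes(height, width):
    """Return {name: (channels, h, w)} for each encoder stage."""
    return {name: (ch, height // stride, width // stride)
            for name, ch, stride in STAGES}


shapes = encoder_shapes(416, 416)
for name, shape in shapes.items():
    print(name, shape)
```

These strides are consistent with `Net.forward`, where the three segmentation outputs are upsampled by factors of 16, 8 and 4 to return to the input resolution.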

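Edge-Aware Module (EAM)

- Function: captures object-related edge semantics by combining low-level (f2) and high-level (f5) features.

- Structure: f2 is reduced to 64 channels and f5 to 256 channels by 1×1 convolutions; the upsampled f5 is concatenated with f2 and passed through two 3×3 convolutions and one 1×1 convolution, followed by a Sigmoid, to produce the edge map.

- How it works: the edge map is supervised with boundary ground truth, so the network learns accurate boundary information, which suits the blurry boundaries of camouflaged objects.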
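
The EAM steps listed above can be traced as simple channel and size bookkeeping. This is a minimal sketch in plain Python, not the module itself: the function name `eam_trace` and the 416×416 input (which gives a 104×104 f2 and a 13×13 f5) are assumptions made for illustration.

```python
# Channel/size bookkeeping for the EAM path (illustrative; pure Python).
# Assumption: a 416x416 input, so f2 is 104x104 and f5 is 13x13.

def eam_trace(f2=(256, 104, 104), f5=(2048, 13, 13)):
    """Trace (channels, h, w) through the EAM steps described above."""
    steps = []
    low = (64, f2[1], f2[2])                    # 1x1 conv: 256 -> 64
    steps.append(("reduce f2", low))
    high = (256, f5[1], f5[2])                  # 1x1 conv: 2048 -> 256
    steps.append(("reduce f5", high))
    high = (256, f2[1], f2[2])                  # bilinear upsample to f2's size
    steps.append(("upsample f5", high))
    fused = (low[0] + high[0], f2[1], f2[2])    # channel concatenation
    steps.append(("concat", fused))
    steps.append(("3x3 conv", (256, f2[1], f2[2])))
    steps.append(("3x3 conv", (256, f2[1], f2[2])))
    steps.append(("1x1 conv", (1, f2[1], f2[2])))  # single-channel edge logits
    return steps


for name, shape in eam_trace():
    print(f"{name:12s} -> {shape}")
```

In the code later in this post, the `EAM` class returns the raw single-channel logits and the Sigmoid is applied afterwards in `Net.forward`.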

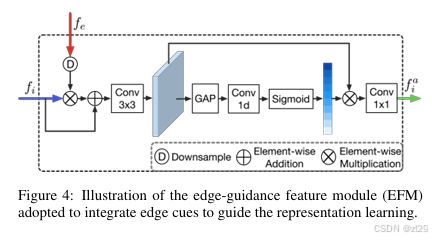

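Edge-guidance Feature Module (EFM)

- Function: injects edge cues into the features f2 to f5 to strengthen the structural representation of objects.

- Structure: at each feature level, the feature is multiplied element-wise by the edge map and the original feature is added back through a skip connection; a 3×3 convolution and local channel attention (LCA) follow. The LCA kernel size is computed from the number of channels.

- How it works: LCA reweights channels dynamically to emphasize the important features, which improves boundary localization for camouflaged objects.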
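
To show how the LCA kernel size depends on the channel count, here is a small sketch that mirrors the rule in `EFM.__init__` from the code later in this post. The function name `lca_kernel_size` is hypothetical, and `math.log2` is swapped in for `log(channel, 2)` to avoid floating-point error on exact powers of two.

```python
import math


def lca_kernel_size(channels):
    """Odd 1-D kernel size derived from the channel count.

    Mirrors the rule in EFM.__init__: t = int(|(log2(C) + 1) / 2|),
    then move up to the next odd number if t is even.
    math.log2 is an assumption of this sketch (the post uses log(channel, 2)).
    """
    t = int(abs((math.log2(channels) + 1) / 2))
    return t if t % 2 else t + 1


for c in (256, 512, 1024, 2048):
    print(c, lca_kernel_size(c))
```

For the four channel counts used by BGNet, this gives a kernel size of 5 for 256, 512 and 1024 channels and 7 for 2048 channels, so deeper, wider features let each channel weight depend on slightly more neighbouring channels.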

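Context Aggregation Module (CAM)

- Function: aggregates the multi-level fused features and captures multi-scale context.

- Structure: works top-down; the fused feature is split into four groups processed by 3×3 convolutions with dilation rates 1, 2, 3 and 4 to capture contextual semantics, combined with a residual connection and 1×1 and 3×3 convolutions.

- How it works: the multi-scale context enriches the feature representation so that the network can handle camouflaged objects in complex scenes.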
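
The effect of the four dilation rates can be worked out with the standard dilated-convolution formulas. The sketch below is plain arithmetic, and the helper names `effective_kernel` and `same_padding` are hypothetical. It does not claim to show how the repository's `ConvBNR` helper sets its padding.

```python
def effective_kernel(kernel_size, dilation):
    """Span covered by a dilated kernel: d * (k - 1) + 1."""
    return dilation * (kernel_size - 1) + 1


def same_padding(kernel_size, dilation):
    """Padding that keeps the spatial size at stride 1 (odd kernels)."""
    return dilation * (kernel_size - 1) // 2


for d in (1, 2, 3, 4):
    span = effective_kernel(3, d)
    print(f"dilation={d}: covers {span}x{span}, padding={same_padding(3, d)}")
```

A 3×3 kernel with dilation 1, 2, 3 and 4 therefore covers 3×3, 5×5, 7×7 and 9×9 windows, which appears to be what the layer names `conv3_1`, `dconv5_1`, `dconv7_1` and `dconv9_1` in the code refer to.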

Code

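import torch
import torch.nn as nn
import torch.nn.functional as F
from math import log

# Note: Conv1x1, ConvBNR and res2net50_v1b_26w_4s are helpers defined in the
# BGNet repository; they are not reproduced in this post.


class EAM(nn.Module):
    """Edge-aware module: fuses low-level x1 and high-level x4 into an edge map."""

    def __init__(self):
        super(EAM, self).__init__()
        self.reduce1 = Conv1x1(256, 64)
        self.reduce4 = Conv1x1(2048, 256)
        self.block = nn.Sequential(
            ConvBNR(256 + 64, 256, 3),
            ConvBNR(256, 256, 3),
            nn.Conv2d(256, 1, 1))

    def forward(self, x4, x1):
        size = x1.size()[2:]
        x1 = self.reduce1(x1)
        x4 = self.reduce4(x4)
        # Upsample the high-level feature to the low-level resolution.
        x4 = F.interpolate(x4, size, mode='bilinear', align_corners=False)
        out = torch.cat((x4, x1), dim=1)
        out = self.block(out)
        # Raw logits; the sigmoid is applied in Net.forward.
        return out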

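class EFM(nn.Module):
    """Edge-guidance feature module: edge-weighted features plus local channel attention."""

    def __init__(self, channel):
        super(EFM, self).__init__()
        # Kernel size of the 1-D channel-attention conv, derived from the channel count.
        t = int(abs((log(channel, 2) + 1) / 2))
        k = t if t % 2 else t + 1  # force an odd kernel size
        self.conv2d = ConvBNR(channel, channel, 3)
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv1d = nn.Conv1d(1, 1, kernel_size=k, padding=(k - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, c, att):
        # Resize the edge attention map to the feature resolution if needed.
        if c.size() != att.size():
            att = F.interpolate(att, c.size()[2:], mode='bilinear', align_corners=False)
        # Edge-weighted feature plus skip connection.
        x = c * att + c
        x = self.conv2d(x)
        # Local channel attention: global pooling, then a 1-D conv across channels.
        wei = self.avg_pool(x)
        wei = self.conv1d(wei.squeeze(-1).transpose(-1, -2)).transpose(-1, -2).unsqueeze(-1)
        wei = self.sigmoid(wei)
        x = x * wei
        return x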

class CAM(nn.Module):
    """Context aggregation module: fuses a low-level and a high-level feature."""

    def __init__(self, hchannel, channel):
        super(CAM, self).__init__()
        self.conv1_1 = Conv1x1(hchannel + channel, channel)
        # Four branches on channel // 4 each, with dilation 1, 2, 3 and 4.
        self.conv3_1 = ConvBNR(channel // 4, channel // 4, 3)
        self.dconv5_1 = ConvBNR(channel // 4, channel // 4, 3, dilation=2)
        self.dconv7_1 = ConvBNR(channel // 4, channel // 4, 3, dilation=3)
        self.dconv9_1 = ConvBNR(channel // 4, channel // 4, 3, dilation=4)
        self.conv1_2 = Conv1x1(channel, channel)
        self.conv3_3 = ConvBNR(channel, channel, 3)

    def forward(self, lf, hf):
        # Upsample the high-level feature to the low-level resolution if needed.
        if lf.size()[2:] != hf.size()[2:]:
            hf = F.interpolate(hf, size=lf.size()[2:], mode='bilinear', align_corners=False)
        x = torch.cat((lf, hf), dim=1)
        x = self.conv1_1(x)
        # Split into four channel groups; each branch also sees its neighbours.
        xc = torch.chunk(x, 4, dim=1)
        x0 = self.conv3_1(xc[0] + xc[1])
        x1 = self.dconv5_1(xc[1] + x0 + xc[2])
        x2 = self.dconv7_1(xc[2] + x1 + xc[3])
        x3 = self.dconv9_1(xc[3] + x2)
        xx = self.conv1_2(torch.cat((x0, x1, x2, x3), dim=1))
        # Residual connection followed by a 3x3 conv.
        x = self.conv3_3(x + xx)
        return x

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.resnet = res2net50_v1b_26w_4s(pretrained=True)
        # if self.training:
        # self.initialize_weights()

        self.eam = EAM()

        self.efm1 = EFM(256)
        self.efm2 = EFM(512)
        self.efm3 = EFM(1024)
        self.efm4 = EFM(2048)

        self.reduce1 = Conv1x1(256, 64)
        self.reduce2 = Conv1x1(512, 128)
        self.reduce3 = Conv1x1(1024, 256)
        self.reduce4 = Conv1x1(2048, 256)

        self.cam1 = CAM(128, 64)
        self.cam2 = CAM(256, 128)
        self.cam3 = CAM(256, 256)

        self.predictor1 = nn.Conv2d(64, 1, 1)
        self.predictor2 = nn.Conv2d(128, 1, 1)
        self.predictor3 = nn.Conv2d(256, 1, 1)

    # def initialize_weights(self):
    # model_state = torch.load('./models/resnet50-19c8e357.pth')
    # self.resnet.load_state_dict(model_state, strict=False)

    def forward(self, x):
        x1, x2, x3, x4 = self.resnet(x)

        # Edge map from the lowest and highest encoder levels.
        edge = self.eam(x4, x1)
        edge_att = torch.sigmoid(edge)

        # Inject the edge cue into every encoder level.
        x1a = self.efm1(x1, edge_att)
        x2a = self.efm2(x2, edge_att)
        x3a = self.efm3(x3, edge_att)
        x4a = self.efm4(x4, edge_att)

        x1r = self.reduce1(x1a)
        x2r = self.reduce2(x2a)
        x3r = self.reduce3(x3a)
        x4r = self.reduce4(x4a)

        # Top-down context aggregation.
        x34 = self.cam3(x3r, x4r)
        x234 = self.cam2(x2r, x34)
        x1234 = self.cam1(x1r, x234)

        # Predictions at three scales, upsampled to the input resolution.
        o3 = self.predictor3(x34)
        o3 = F.interpolate(o3, scale_factor=16, mode='bilinear', align_corners=False)
        o2 = self.predictor2(x234)
        o2 = F.interpolate(o2, scale_factor=8, mode='bilinear', align_corners=False)
        o1 = self.predictor1(x1234)
        o1 = F.interpolate(o1, scale_factor=4, mode='bilinear', align_corners=False)
        oe = F.interpolate(edge_att, scale_factor=4, mode='bilinear', align_corners=False)

        return o3, o2, o1, oe

Summary

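BGNet is a unified whole made up of the EAM (edge-aware module), the EFM (edge-guidance feature module) and the CAM (context aggregation module). The components are chained in series, so each depends on the one before it, and they complement one another. The first two modules (EAM and EFM) extract boundary cues and use them to guide feature learning, while CAM aggregates the resulting multi-level features to further strengthen the key feature representations. According to the experiments reported in the paper, CAM and the boundary-cue module EAM contribute more to the performance gain than EFM.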