解析大模型归一化:提升训练稳定性和性能的关键技术

引言

在深度学习领域,特别是在处理大型神经网络模型时,归一化(Normalization)是一项至关重要的技术。它可以提高模型的训练稳定性和性能,在加速收敛方面发挥了重要作用。本文将深入探讨大模型归一化的原理、常见方法及其应用场景,并结合实际案例和代码示例进行说明。

一、归一化的作用与理论基础

归一化的主要目的是为了提高模型的训练稳定性和性能。具体来说,归一化有以下几个关键作用:

-

提高训练稳定性: 在神经网络训练过程中,由于数据的分布差异较大,可能会导致梯度爆炸或梯度消失等问题。归一化可以将输入数据的分布调整到一个稳定的范围内,使得模型在训练过程中更加稳定。理论上,归一化有助于保持激活函数的输出在一个合理的范围内,从而避免梯度过大或过小的问题。

-

加速模型收敛: 归一化可以使得模型在训练过程中更快地收敛到最优解,从而提高训练效率。通过减少梯度更新的波动,模型能够更平稳地接近全局最小值。数学上,归一化相当于对每个层的输入进行了标准化处理,使得损失函数的曲面更加平滑,有利于优化算法找到更好的解。

-

提升模型性能: 通过归一化处理,可以使得模型在测试集上的性能得到提升。归一化有助于减少模型对输入数据的敏感性,从而使模型更加鲁棒和泛化能力强。实验表明,经过归一化的模型通常在各种任务中表现出更好的泛化能力。

二、常见的归一化方法及其工作原理与代码实现

不同的归一化方法适用于不同类型的数据和任务。以下是几种常见的归一化方法及其详细的工作原理和代码实现:

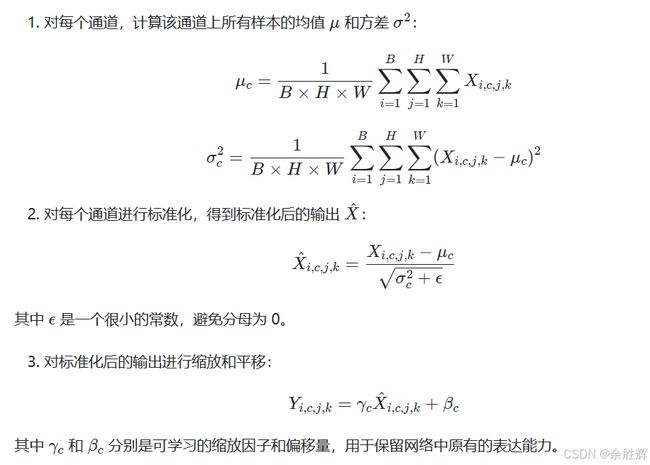

BatchNorm(批量归一化)

- 适用场景:主要用于图像数据。

- 原理:BatchNorm通过对每个批次的数据进行归一化处理,使得每个批次的数据分布保持一致。具体来说,BatchNorm计算每个批次内每个特征的均值和方差,并使用这些统计量对特征进行标准化。此外,BatchNorm还引入了两个可学习参数(缩放和平移),以恢复模型的表达能力。

- 局限性:对于序列数据(如文本数据),BatchNorm的效果并不理想,因为序列数据的长度通常是不一致的,且一个批次内的序列长度也可能不同。

import torch

import torch.nn as nn

# 定义一个简单的卷积神经网络

class CNNModel(nn.Module):

def __init__(self, num_classes=10):

super(CNNModel, self).__init__()

self.conv1 = nn.Conv2d(1, 32, kernel_size=3)

self.bn1 = nn.BatchNorm2d(32) # BatchNorm层

self.relu = nn.ReLU()

self.fc = nn.Linear(32 * 26 * 26, num_classes)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x) # 应用BatchNorm

x = self.relu(x)

x = x.view(x.size(0), -1)

x = self.fc(x)

return x

# 创建模型实例

model = CNNModel()LayerNorm(层归一化)

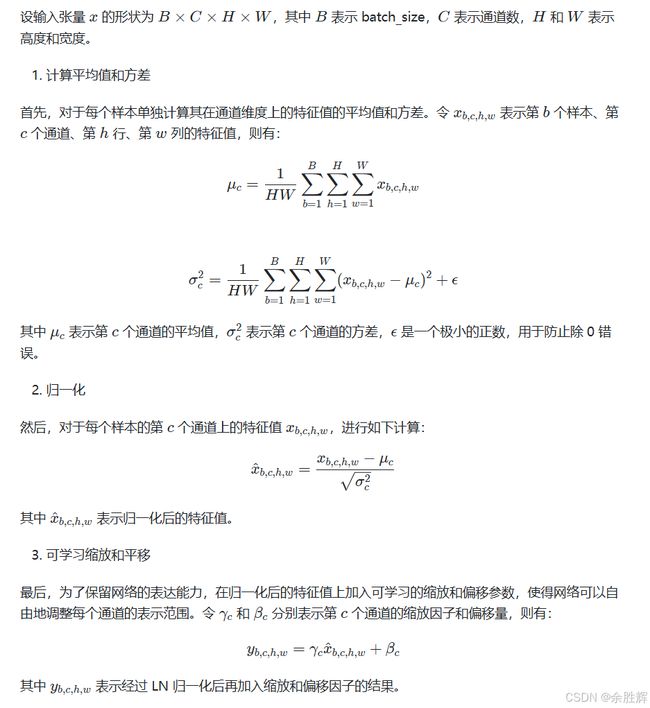

- 适用场景:主要用于处理序列数据,如自然语言处理(NLP)领域中的Transformer模型。

- 原理:LayerNorm针对每个样本的每个层进行归一化处理,使得每个层的输出分布保持一致。具体来说,LayerNorm计算每个样本每个层的均值和方差,并使用这些统计量对层的输出进行标准化。LayerNorm不需要依赖于批次信息,因此更适合处理长度不一致的序列数据。

- 优势:相比BatchNorm,LayerNorm更适合处理长度不一致的序列数据,因为它对每个样本独立进行归一化。

import torch

import torch.nn as nn

# 定义一个简单的Transformer编码器层

class TransformerEncoderLayer(nn.Module):

def __init__(self, d_model, nhead):

super(TransformerEncoderLayer, self).__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead)

self.norm1 = nn.LayerNorm(d_model) # LayerNorm层

self.linear1 = nn.Linear(d_model, 256)

self.norm2 = nn.LayerNorm(d_model) # LayerNorm层

self.dropout = nn.Dropout(0.1)

self.linear2 = nn.Linear(256, d_model)

def forward(self, src):

src2 = self.self_attn(src, src, src)[0]

src = src + self.dropout(src2)

src = self.norm1(src) # 应用LayerNorm

src2 = self.linear2(self.dropout(torch.relu(self.linear1(src))))

src = src + self.dropout(src2)

src = self.norm2(src) # 应用LayerNorm

return src

# 创建编码器层实例

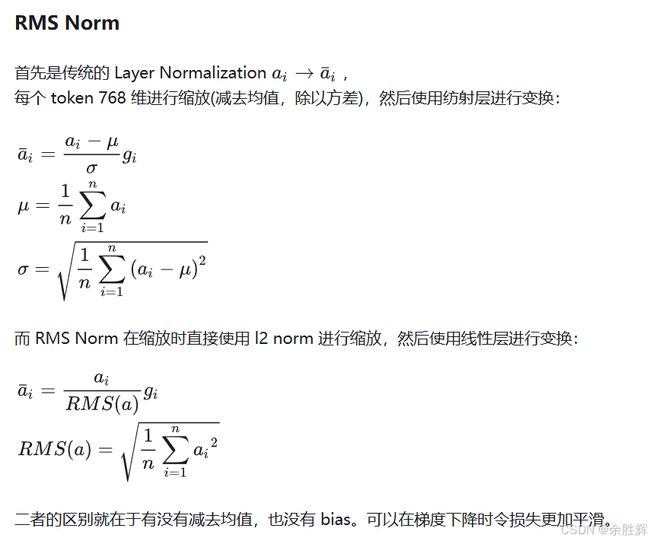

encoder_layer = TransformerEncoderLayer(d_model=512, nhead=8)RMSNorm(均方根归一化)

- 原理:RMSNorm是LayerNorm的一种变体,主要区别在于归一化的方法不同。RMSNorm利用均方根(Root Mean Square, RMS)进行归一化,相比LayerNorm去除了平移部分,只保留了缩放部分。具体来说,RMSNorm计算每个样本每个层的均方根,并使用该值对层的输出进行标准化。

- 优势:RMSNorm的训练速度更快,且效果与LayerNorm相当或有所提升。实验表明,RMSNorm在某些任务中表现出更好的性能。

import torch

import torch.nn as nn

class RMSNorm(nn.Module):

def __init__(self, normalized_shape, eps=1e-8):

super(RMSNorm, self).__init__()

self.eps = eps

self.weight = nn.Parameter(torch.ones(normalized_shape))

def forward(self, x):

rms = torch.sqrt(torch.mean(x ** 2, dim=-1, keepdim=True) + self.eps)

return self.weight * (x / rms)

# 使用RMSNorm替换LayerNorm

class TransformerEncoderLayerWithRMSNorm(nn.Module):

def __init__(self, d_model, nhead):

super(TransformerEncoderLayerWithRMSNorm, self).__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead)

self.norm1 = RMSNorm(d_model) # RMSNorm层

self.linear1 = nn.Linear(d_model, 256)

self.norm2 = RMSNorm(d_model) # RMSNorm层

self.dropout = nn.Dropout(0.1)

self.linear2 = nn.Linear(256, d_model)

def forward(self, src):

src2 = self.self_attn(src, src, src)[0]

src = src + self.dropout(src2)

src = self.norm1(src) # 应用RMSNorm

src2 = self.linear2(self.dropout(torch.relu(self.linear1(src))))

src = src + self.dropout(src2)

src = self.norm2(src) # 应用RMSNorm

return src

# 创建编码器层实例

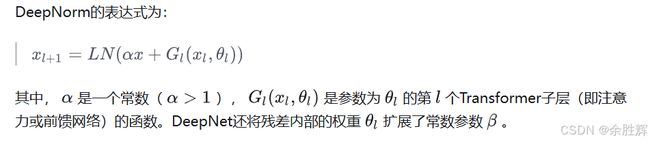

encoder_layer_rms = TransformerEncoderLayerWithRMSNorm(d_model=512, nhead=8)DeepNorm (由微软提出)

- 原理:DeepNorm主要对Transformer结构中的残差链接进行修正,缓解模型参数爆炸式更新的问题,把模型参数更新限制在一个常数域范围内,使得模型训练过程更加稳定。具体来说,DeepNorm通过对残差连接的权重进行特殊设计,确保模型参数更新不会过大或过小。

- 优势:兼具PreLN(预归一化)的训练稳定性和PostLN(后归一化)的效果性能。实验表明,DeepNorm在大规模Transformer模型中表现尤为出色。

import torch

import torch.nn as nn

def deep_norm_weight_init(layer, alpha):

if isinstance(layer, nn.Linear):

std = math.sqrt(2.0 / (layer.weight.shape[0] + layer.weight.shape[1]))

std = std * (alpha ** (-1 / (2 * len(layer.weight.shape) - 2)))

nn.init.normal_(layer.weight, mean=0.0, std=std)

# 定义一个带有DeepNorm初始化的Transformer编码器层

class TransformerEncoderLayerWithDeepNorm(nn.Module):

def __init__(self, d_model, nhead, alpha=0.81):

super(TransformerEncoderLayerWithDeepNorm, self).__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead)

self.norm1 = nn.LayerNorm(d_model) # LayerNorm层

self.linear1 = nn.Linear(d_model, 256)

self.norm2 = nn.LayerNorm(d_model) # LayerNorm层

self.dropout = nn.Dropout(0.1)

self.linear2 = nn.Linear(256, d_model)

self.apply(lambda m: deep_norm_weight_init(m, alpha))

def forward(self, src):

src2 = self.self_attn(src, src, src)[0]

src = src + self.dropout(src2)

src = self.norm1(src) # 应用LayerNorm

src2 = self.linear2(self.dropout(torch.relu(self.linear1(src))))

src = src + self.dropout(src2)

src = self.norm2(src) # 应用LayerNorm

return src

# 创建编码器层实例

encoder_layer_deepnorm = TransformerEncoderLayerWithDeepNorm(d_model=512, nhead=8, alpha=0.81)三、归一化的位置及其影响

在神经网络中,归一化的位置通常有两种选择:PreNorm(预归一化)和PostNorm(后归一化)。不同位置的选择会对模型的训练稳定性和性能产生显著影响。

- PreNorm:在层的计算之前进行归一化处理。PreNorm有助于提升训练稳定性,但可能会对性能产生一定影响。理论上,PreNorm使得每一层的输入分布更加稳定,从而减少了梯度消失或爆炸的风险。然而,由于归一化发生在激活函数之前,可能会影响模型的表达能力。

-

class PreNormLayer(nn.Module): def __init__(self, norm_layer, layer_fn): super(PreNormLayer, self).__init__() self.norm = norm_layer self.layer = layer_fn def forward(self, x): return self.layer(self.norm(x)) - PostNorm:在层的计算之后进行归一化处理。PostNorm通常具有较好的性能表现,但在某些情况下可能会导致训练不稳定。理论上,PostNorm使得每一层的输出分布更加稳定,从而有利于后续层的学习。然而,由于归一化发生在激活函数之后,可能会放大某些异常值的影响。

-

class PostNormLayer(nn.Module): def __init__(self, norm_layer, layer_fn): super(PostNormLayer, self).__init__() self.norm = norm_layer self.layer = layer_fn def forward(self, x): return self.norm(self.layer(x))在实际应用中,可以根据具体任务和模型结构选择合适的归一化方法和位置。例如,在Transformer模型中,通常会结合使用PreNorm和PostNorm来平衡训练稳定性和性能。实验表明,这种组合方式能够在多种任务中取得最佳效果。

四、归一化的应用实例及实验结果

以NLP领域中的Transformer模型为例,该模型在处理文本数据时采用了LayerNorm进行归一化处理。LayerNorm针对每个样本的每个层进行归一化处理,使得每个层的输出分布保持一致,从而提高模型的训练稳定性和性能。

此外,一些改进的Transformer模型(如LLama2)还采用了RMSNorm进行归一化处理,以进一步提高模型的训练速度和效果。以下是一个具体的实验案例,展示了不同归一化方法在Transformer模型中的表现:

import torch

from torch.utils.data import DataLoader, Dataset

from transformers import BertTokenizer, BertForSequenceClassification

# 加载预训练的BERT模型和分词器

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertForSequenceClassification.from_pretrained('bert-base-uncased')

# 替换模型中的归一化层

for name, module in model.named_modules():

if isinstance(module, nn.LayerNorm):

model._modules[name] = RMSNorm(module.normalized_shape, eps=module.eps)

# 准备数据集和数据加载器

class TextDataset(Dataset):

def __init__(self, texts, labels, tokenizer, max_len):

self.texts = texts

self.labels = labels

self.tokenizer = tokenizer

self.max_len = max_len

def __len__(self):

return len(self.texts)

def __getitem__(self, idx):

text = self.texts[idx]

label = self.labels[idx]

encoding = self.tokenizer.encode_plus(

text,

add_special_tokens=True,

max_length=self.max_len,

return_token_type_ids=False,

padding='max_length',

return_attention_mask=True,

return_tensors='pt',

truncation=True

)

return {

'input_ids': encoding['input_ids'].flatten(),

'attention_mask': encoding['attention_mask'].flatten(),

'labels': torch.tensor(label, dtype=torch.long)

}

# 训练和评估模型

def train_and_evaluate(model, dataloader, optimizer, criterion, device):

model.train()

total_loss = 0

for batch in dataloader:

input_ids = batch['input_ids'].to(device)

attention_mask = batch['attention_mask'].to(device)

labels = batch['labels'].to(device)

optimizer.zero_grad()

outputs = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels)

loss = outputs.loss

loss.backward()

optimizer.step()

total_loss += loss.item()

avg_loss = total_loss / len(dataloader)

print(f'Average Loss: {avg_loss:.4f}')

# 示例数据集

texts = ["This is a sample sentence.", "Another example sentence."]

labels = [0, 1]

dataset = TextDataset(texts, labels, tokenizer, max_len=128)

dataloader = DataLoader(dataset, batch_size=2, shuffle=True)

# 定义优化器和损失函数

optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)

criterion = nn.CrossEntropyLoss()

# 训练模型

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

train_and_evaluate(model, dataloader, optimizer, criterion, device)五、未来研究方向

- 自适应归一化:如何根据数据的动态特性自动调整归一化策略,以更好地适应不同任务的需求。

- 多模态归一化:如何在多模态数据(如图像和文本的联合处理)中应用归一化技术,以提升模型的整体性能。

- 轻量化归一化:如何在保持性能的前提下,减少归一化操作的计算开销,以适用于资源受限的环境。

总结

大模型归一化是深度学习领域中的重要技术。通过选择合适的归一化方法和位置,可以显著提高模型的训练稳定性和性能。无论是BatchNorm、LayerNorm、RMSNorm还是DeepNorm,每种方法都有其独特的优势和适用场景。理解并合理应用这些归一化技术,将有助于构建更高效、更稳定的深度学习模型。

参考资料

- Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

- Layer Normalization

- RMSNorm: Towards Faster Normalized Activation Functions

- DeepNorm: Scaling Transformer to 1,000 Layers