Apache + LVS High Availability + keepalived (Master/Backup and Dual-Master Models)

keepalived lab environment:

httpd-2.2.15-39.el6.centos.x86_64

keepalived-1.2.13-1.el6.x86_64.rpm

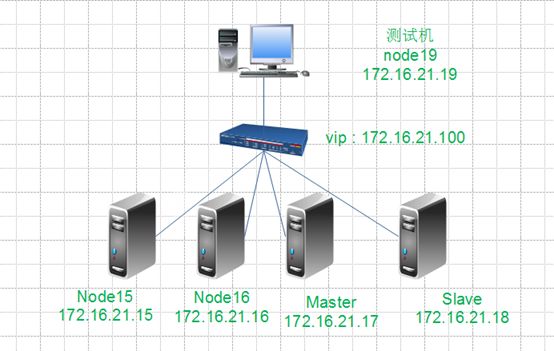

Lab topology:

LVS + Keepalived: a highly available front-end load balancer

node15:

1. Install httpd

[root@node15 ~]# yum install -y httpd

2. Configure httpd

[root@node15 ~]# vim /var/www/html/index.html

<h1>RS1.stu21.com</h1>

3. Start httpd

[root@node15 ~]# service httpd start

4. Test

5. Enable httpd at boot

[root@node15 ~]# chkconfig httpd on

[root@node15 ~]# chkconfig --list httpd

httpd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

6. Configure node15

[root@node15 ~]# mkdir src

[root@node15 ~]# cd src/

[root@node15 src]# vim realserver.sh

#!/bin/bash

#

# Script to start LVS DR real server.

# description: LVS DR real server

#

. /etc/rc.d/init.d/functions

VIP=172.16.21.100 # change this to your VIP

host=`/bin/hostname`

case "$1" in

start)

# Start LVS-DR real server on this machine.

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

;;

stop)

# Stop LVS-DR real server loopback device(s).

/sbin/ifconfig lo:0 down

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

;;

status)

# Status of LVS-DR real server.

islothere=`/sbin/ifconfig lo:0 | grep $VIP`

isrothere=`netstat -rn | grep "$VIP" | grep -w "lo"`

if [ -z "$islothere" -o -z "$isrothere" ]; then

# Either the route or the lo:0 device

# not found.

echo "LVS-DR real server Stopped."

else

echo "LVS-DR real server Running."

fi

;;

*)

# Invalid entry.

echo "$0: Usage: $0 {start|status|stop}"

exit 1

;;

esac

[root@node15 src]# chmod +x realserver.sh

[root@node15 src]# ll

total 4

-rwxr-xr-x 1 root root 1602 Jan 16 11:01 realserver.sh

[root@node15 src]# ./realserver.sh start

[root@node15 src]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:01:0D:11

inet addr:172.16.21.15 Bcast:172.16.255.255 Mask:255.255.0.0

inet6 addr: fe80::20c:29ff:fe01:d11/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8587 errors:0 dropped:0 overruns:0 frame:0

TX packets:1659 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:788734 (770.2 KiB) TX bytes:245057 (239.3 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:684 (684.0 b) TX bytes:684 (684.0 b)

lo:0 Link encap:Local Loopback

inet addr:172.16.21.100 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

[root@node15 src]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.16.21.100 0.0.0.0 255.255.255.255 UH 0 0 0 lo

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

0.0.0.0 172.16.0.1 0.0.0.0 UG 0 0 0 eth0

[root@node15 src]# cat /proc/sys/net/ipv4/conf/lo/arp_ignore

1

[root@node15 src]# cat /proc/sys/net/ipv4/conf/lo/arp_announce

2

[root@node15 src]# cat /proc/sys/net/ipv4/conf/all/arp_ignore

1

[root@node15 src]# cat /proc/sys/net/ipv4/conf/all/arp_announce

2
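The four values checked above are what keep a real server from answering ARP requests for the VIP bound to lo:0: arp_ignore=1 makes the kernel reply only when the requested address is configured on the interface that received the request, and arp_announce=2 makes it pick the best local source address for outgoing ARP instead of advertising the VIP. The following is a minimal sketch that checks all four values in one pass. The function name `check_arp_params` and its optional directory argument are assumptions added for illustration; they are not part of the original setup.

```shell
# Hypothetical helper: verify the ARP settings that realserver.sh writes.
# The optional argument is the directory holding the per-interface settings;
# it defaults to the real /proc path.
check_arp_params() {
    conf_root="${1:-/proc/sys/net/ipv4/conf}"
    rc=0
    for iface in lo all; do
        for pair in arp_ignore:1 arp_announce:2; do
            name="${pair%%:*}"    # parameter name, e.g. arp_ignore
            want="${pair##*:}"    # expected value, e.g. 1
            got="$(cat "$conf_root/$iface/$name" 2>/dev/null)"
            if [ "$got" = "$want" ]; then
                echo "OK   $iface/$name = $got"
            else
                echo "FAIL $iface/$name = ${got:-unset} (expected $want)"
                rc=1
            fi
        done
    done
    return $rc
}
```

Running `check_arp_params` with no argument on a real server after `./realserver.sh start` should report four OK lines; a non-zero exit status means at least one value differs.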

That completes the basic configuration of node15. Next we configure node16.

node16:

1. Install httpd

[root@node16 ~]# yum -y install httpd

2. Configure httpd

[root@node16 ~]# vim /var/www/html/index.html

<h1>RS2.stu21.com</h1>

3. Start httpd

[root@node16 ~]# service httpd start

4. Test

5. Enable httpd at boot

[root@node16 ~]# chkconfig httpd on

[root@node16 ~]# chkconfig --list httpd

httpd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

6. Configure node16

[root@node16 ~]# mkdir src

[root@node16 ~]# cd src/

[root@node16 src]# vim realserver.sh

#!/bin/bash

#

# Script to start LVS DR real server.

# description: LVS DR real server

#

. /etc/rc.d/init.d/functions

VIP=172.16.21.100

host=`/bin/hostname`

case "$1" in

start)

# Start LVS-DR real server on this machine.

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

;;

stop)

# Stop LVS-DR real server loopback device(s).

/sbin/ifconfig lo:0 down

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

;;

status)

# Status of LVS-DR real server.

islothere=`/sbin/ifconfig lo:0 | grep $VIP`

isrothere=`netstat -rn | grep "$VIP" | grep -w "lo"`

if [ -z "$islothere" -o -z "$isrothere" ]; then

# Either the route or the lo:0 device

# not found.

echo "LVS-DR real server Stopped."

else

echo "LVS-DR real server Running."

fi

;;

*)

# Invalid entry.

echo "$0: Usage: $0 {start|status|stop}"

exit 1

;;

esac

[root@node16 src]# chmod +x realserver.sh

[root@node16 src]# ./realserver.sh start

[root@node16 src]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:C4:F0:75

inet addr:172.16.21.16 Bcast:172.16.255.255 Mask:255.255.0.0

inet6 addr: fe80::20c:29ff:fec4:f075/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:195309 errors:0 dropped:0 overruns:0 frame:0

TX packets:11581 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:185306532 (176.7 MiB) TX bytes:1258818 (1.2 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:24 errors:0 dropped:0 overruns:0 frame:0

TX packets:24 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2056 (2.0 KiB) TX bytes:2056 (2.0 KiB)

lo:0 Link encap:Local Loopback

inet addr:172.16.21.100 Mask:255.255.255.255

UP LOOPBACK RUNNING MTU:65536 Metric:1

[root@node16 src]# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.16.21.100 0.0.0.0 255.255.255.255 UH 0 0 0 lo

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.16.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

0.0.0.0 172.16.0.1 0.0.0.0 UG 0 0 0 eth0

That completes the basic configuration of node16. Next we configure the master and the slave.

master and slave:

1. Install keepalived and ipvsadm

master (node17), then slave (node18):

[root@node17 ~]# yum install -y ipvsadm

[root@node17 ~]# yum -y install keepalived

[root@node18 ~]# yum install -y ipvsadm

[root@node18 ~]# yum -y install keepalived

2. Edit the configuration file

master (node17):

[root@node17 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER # initial role

interface eth0

virtual_router_id 51

priority 101 # priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

}

#virtual_server#10.10.10.2 1358 {

# delay_loop 6

# lb_algo rr

# lb_kind NAT

# persistence_timeout 50

# protocol TCP

# sorry_server 192.168.200.200 1358

# real_server 192.168.200.2 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.3 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

#virtual_server#10.10.10.3 1358 {

# delay_loop 3

# lb_algo rr

# lb_kind NAT

# nat_mask 255.255.255.0

# persistence_timeout 50

# protocol TCP

# real_server 192.168.200.4 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.5 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

3. Copy the configuration file to the slave

[root@node17 ~]# scp /etc/keepalived/keepalived.conf [email protected]:/etc/keepalived/

4. Adjust the slave's configuration file

[root@node18 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # initial role: changed to BACKUP

interface eth0

virtual_router_id 51

priority 100 # priority: lowered for the slave

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

}

5. Start keepalived on the master and the slave

[root@node17 ~]# service keepalived start

Starting keepalived:

[root@node18 ~]# service keepalived start

Starting keepalived: [ OK ]

6. Check the LVS state

[root@node17 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 172.16.21.15:80 Route 1 0 0

-> 172.16.21.16:80 Route 1 0 0

[root@node18 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 172.16.21.15:80 Route 1 0 0

-> 172.16.21.16:80 Route 1 0 0
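When comparing the two directors, or when watching a real server drop out during a failure test, it can be easier to reduce the `ipvsadm -L -n` listing to just the real-server addresses. The sketch below does that by reading the listing from standard input; `list_real_servers` is a hypothetical name chosen for illustration.

```shell
# Sketch: list the real servers currently in the IPVS table, reading
# "ipvsadm -L -n" style output from stdin.
list_real_servers() {
    # Real-server rows start with "->" followed by an ADDRESS:PORT field;
    # the column header row ("-> RemoteAddress:Port ...") is skipped.
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2 }'
}
```

On a director it would be used as `ipvsadm -L -n | list_real_servers`. Because it only reads stdin, it can also be run against saved output.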

7. Test
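One way to run this test from a client machine is to request the VIP several times and count which page comes back. The following is a minimal sketch, assuming `curl` is installed on the client; `poll_vip` and its default arguments are hypothetical and simply reuse the VIP from this lab.

```shell
# Sketch: request the VIP several times and count how often each page body
# is returned. Arguments: VIP (default 172.16.21.100) and request count
# (default 10).
poll_vip() {
    vip="${1:-172.16.21.100}"
    count="${2:-10}"
    i=0
    while [ "$i" -lt "$count" ]; do
        curl -s --max-time 2 "http://$vip/"
        echo    # make sure each response ends with a newline
        i=$((i + 1))
    done | awk 'NF { seen[$0]++ } END { for (body in seen) print seen[body], body }' | sort -k2
}
```

With the rr scheduler and equal weights, the counts for the RS1 and RS2 pages should come out roughly equal; after a real server fails its health check, only the other page should appear.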

8. Simulate failures

(1). Stop httpd on node15

[root@node15 ~]# service httpd stop

Stopping httpd:

(2). Check the LVS table (master)

[root@node17 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 172.16.21.16:80 Route 1 0 0

You have mail in /var/spool/mail/root

(3). Test

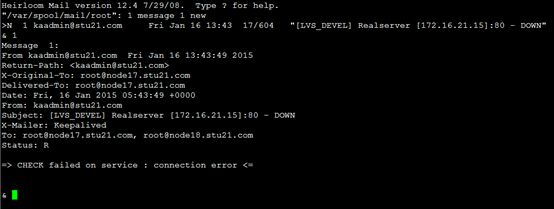

(4). Check the mail

[root@node17 ~]# mail

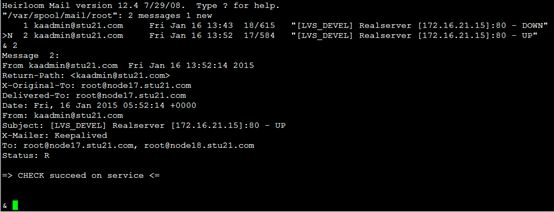

(5). Start httpd on node15 again

(6). Check the LVS table again (master)

[root@node17 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 172.16.21.15:80 Route 1 0 0

-> 172.16.21.16:80 Route 1 0 0

You have new mail in /var/spool/mail/root

(7). Check the mail again

[root@node17 ~]# mail

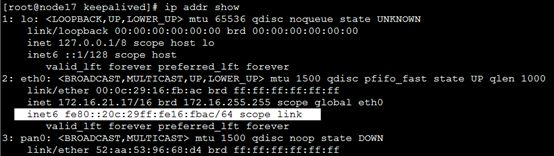

(8). Stop keepalived on the master

[root@node17 ~]# service keepalived stop

Stopping keepalived: [ OK ]

[root@node17 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

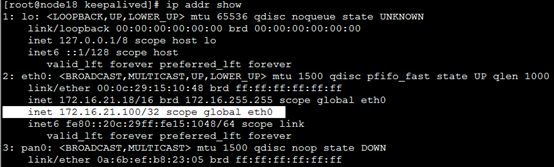

(9). Check the state of the slave

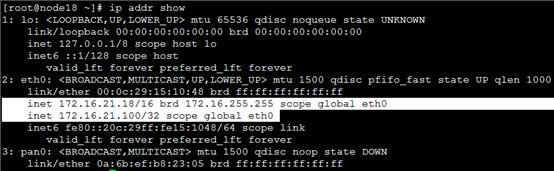

[root@node18 ~]# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:15:10:48 brd ff:ff:ff:ff:ff:ff

inet 172.16.21.18/16 brd 172.16.255.255 scope global eth0

inet 172.16.21.100/32 scope global eth0

inet6 fe80::20c:29ff:fe15:1048/64 scope link

valid_lft forever preferred_lft forever

3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN

link/ether 0a:6b:ef:b8:23:05 brd ff:ff:ff:ff:ff:ff

You have new mail in /var/spool/mail/root

[root@node18 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 172.16.21.15:80 Route 1 0 0

-> 172.16.21.16:80 Route 1 0 0
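The `ip addr show` output above is how we tell which director currently holds the VIP. A small sketch that turns this into a yes/no check is shown below; it reads `ip addr show` style output from standard input, and the function name `has_vip` is an assumption made for illustration.

```shell
# Sketch: succeed if the given VIP appears as an "inet" address in
# "ip addr show" style output read from stdin. The address part is compared
# exactly, so 172.16.21.10 does not match 172.16.21.100.
has_vip() {
    vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}
```

On either director, `ip addr show eth0 | has_vip 172.16.21.100 && echo "this node holds the VIP"` reports whether that node is the active one.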

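(10). Test again

Note: the demonstration above gives us a highly available LVS front end, that is, a highly available load balancer, with health checks on the back-end real servers and an email to the administrator when a real server goes down. A few questions remain open:

- What happens when all real servers are down? Do users simply get an error, or should we serve a maintenance page?

- How can keepalived be switched over for maintenance?

- How can a warning email be sent to a designated administrator when keepalived fails?

9. What happens when all real servers are down?

Problem: if every real server in the cluster goes down, clients get an error page, which is unfriendly. We should serve a maintenance page telling users that the servers are under maintenance and when the site will be back. There are two options. One is to keep a spare real server that serves the maintenance page when all the others are down, but that wastes a machine. The other, more practical and more common, is to serve the maintenance page from the load balancers themselves. Let's walk through it.

(1). Install httpd on the master (node17) and the slave (node18)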

[root@node17 ~]# yum -y install httpd

[root@node18 ~]# yum -y install httpd

(2). Create the maintenance page

[root@node17 ~]# vim /var/www/html/index.html

Website is currently under maintenance, please come back later!

[root@node18 ~]# vim /var/www/html/index.html

Website is currently under maintenance, please come back later!

(3). Start httpd and test

[root@node17 ~]# service httpd start

Starting httpd: [ OK ]

[root@node18 ~]# service httpd start

Starting httpd: [ OK ]

(4). Edit the configuration file

master (node17):

[root@node17 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state MASTER # initial role

interface eth0

virtual_router_id 51

priority 101 # priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # added line: sorry_server

}

slave (node18):

[root@node18 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

state BACKUP # initial role: changed to BACKUP

interface eth0

virtual_router_id 51

priority 100 # priority: lowered for the slave

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # added line: sorry_server

}

(5). Stop httpd on all real servers and restart keepalived on the master and the slave

[root@node15 ~]# service httpd stop

Stopping httpd: [ OK ]

[root@node16 ~]# service httpd stop

Stopping httpd: [ OK ]

[root@node17 ~]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

[root@node18 ~]# service keepalived restart

Stopping keepalived: [ OK ]

Starting keepalived: [ OK ]

(6). Check the LVS table

[root@node17 ~]# ipvsadm -L -n

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.16.21.100:80 rr

-> 127.0.0.1:80 Local 1 0 0

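(7). Test

Note: the sorry_server test succeeded. Let's move on.

10. How can keepalived be switched over for maintenance?

Problem: failover tests are usually done by stopping keepalived or taking the network interface down. Is there a way to put a node into maintenance without doing either? There is: newer versions of keepalived support tracking scripts through vrrp_script; see man keepalived.conf for details. The following shows how to set it up.

(1). Define the script

vrrp_script chk_schedown {

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0"

interval 1 # check interval in seconds

weight -5 # lower the priority by 5 when the check fails

fall 2 # consecutive failures before the check counts as failed

rise 1 # consecutive successes before the check counts as passed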

}

(2). Track the script (inside vrrp_instance)

track_script {

chk_schedown # track the chk_schedown script

}
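Why does a weight of -5 cause a failover in this lab? The master runs at priority 101 and the slave at 100, so once the check fails on the master its priority drops below the slave's and the slave wins the VRRP election. The sketch below only illustrates that arithmetic, assuming keepalived adds a negative weight to the priority while the script is failing; positive weights behave differently and are not modelled. The function `effective_priority` is hypothetical, since keepalived does this internally.

```shell
# Sketch of the priority arithmetic only.
# Arguments: base priority, script weight, and 1 if the script is failing.
effective_priority() {
    base="$1"
    weight="$2"
    failing="$3"
    if [ "$weight" -lt 0 ] && [ "$failing" -eq 1 ]; then
        echo $((base + weight))    # negative weight lowers the priority
    else
        echo "$base"
    fi
}

effective_priority 101 -5 0    # prints 101: master stays above the slave (100)
effective_priority 101 -5 1    # prints 96: now below the slave, so the VIP moves
```

With interval 1 and fall 2, the check has to fail twice in a row, so the drop takes effect roughly two seconds after the down file appears.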

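(3). Edit the configuration file

master (node17): only two additions to the existing configuration are needed, the vrrp_script block and the track_script block (highlighted in green in the original post)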

[root@node17 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

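vrrp_script chk_schedown { # define the VRRP tracking script

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0" # if the down file exists, enter maintenance mode

interval 1 # check interval in seconds

weight -5 # lower the priority

fall 2 # number of failures

rise 1 # number of successes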

}

vrrp_instance VI_1 {

state MASTER # initial role

interface eth0

virtual_router_id 51

priority 101 # priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

track_script { # track the script

chk_schedown

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # added line: sorry_server

}

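slave (node18): the same vrrp_script and track_script blocks are added to the existing configuration (highlighted in green in the original post)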

[root@node18 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # administrator email address

}

notification_email_from [email protected] # sender address

smtp_server 127.0.0.1 # mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_schedown {

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0"

interval 1

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP # initial role: changed to BACKUP

interface eth0

virtual_router_id 51

priority 100 # priority: lowered for the slave

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address (VIP)

}

track_script {

chk_schedown

}

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # added line: sorry_server

}

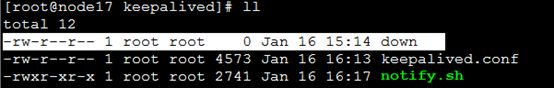

(4). Test

[root@node17 ~]# cd /etc/keepalived/

[root@node17 keepalived]# touch down

[root@node17 keepalived]# ll

total 8

-rw-r--r-- 1 root root 0 Jan 16 15:14 down

-rw-r--r-- 1 root root 4330 Jan 16 15:10 keepalived.conf

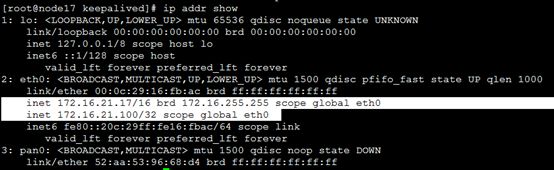

[root@node17 keepalived]# ip addr show # check the VIP

[root@node18 ~]# ip addr show # the VIP has moved to the slave

Test: now remove or rename the down file under /etc/keepalived/

[root@node17 keepalived]# mv down down.bak

[root@node17 keepalived]# ll

total 8

-rw-r--r-- 1 root root 0 Jan 16 15:14 down.bak

-rw-r--r-- 1 root root 4330 Jan 16 15:10 keepalived.conf

[root@node17 keepalived]# ip addr show # the VIP is back on the master
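The touch and mv steps above can be wrapped in a small helper so that entering and leaving maintenance mode is a single command. This is only a sketch: the function name `maint` and its optional directory argument are assumptions, and the flag file name `down` matches the path tested by the chk_schedown script.

```shell
# Hypothetical helper: enter or leave maintenance mode by creating or
# removing the flag file that chk_schedown tests for.
maint() {
    action="$1"
    flag_dir="${2:-/etc/keepalived}"
    case "$action" in
        on)     touch "$flag_dir/down" ;;
        off)    rm -f "$flag_dir/down" ;;
        status) if [ -e "$flag_dir/down" ]; then echo "maintenance"; else echo "normal"; fi ;;
        *)      echo "Usage: maint {on|off|status} [flag_dir]" >&2; return 1 ;;
    esac
}
```

On the master, `maint on` should move the VIP to the slave within a couple of seconds, and `maint off` should bring it back, as in the manual test above.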

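That completes the demonstration of maintenance-mode switching with a custom check script. The last remaining problem is email notification on keepalived master/backup transitions.

11. How can a warning email be sent to a designated administrator when keepalived fails (or on a master/backup transition)?

(1). An advanced example of a keepalived notify script

The script below accepts the following options:

- -s, --service SERVICE,...: service script name(s); the services are started, restarted, or stopped automatically on a state transition;

- -a, --address VIP: the VIP of the virtual router concerned;

- -m, --mode {mm|mb}: the virtual router model, where mm means master/master and mb means master/backup; this describes how the VIP operates with respect to a single service;

- -n, --notify {master|backup|fault}: the notification type, that is, the role the VRRP instance is transitioning to;

- -h, --help: print usage help for the script;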

------------------------------------------------------------------------------------------------------------------------

#!/bin/bash

# Author: freeloda

# description: An example of notify script

# Usage: notify.sh -m|--mode {mm|mb} -s|--service SERVICE1,... -a|--address VIP -n|--notify {master|backup|fault} -h|--help

contact='[email protected]' # administrator email address

helpflag=0

serviceflag=0

modeflag=0

addressflag=0

notifyflag=0

Usage() {

echo "Usage: notify.sh [-m|--mode {mm|mb}] [-s|--service SERVICE1,...] <-a|--address VIP> <-n|--notify {master|backup|fault}>"

echo "Usage: notify.sh -h|--help"

}

ParseOptions() {

local I=1;

if [ $# -gt 0 ]; then

while [ $I -le $# ]; do

case $1 in

-s|--service)

[ $# -lt 2 ] && return 3

serviceflag=1

services=(`echo $2|awk -F"," '{for(i=1;i<=NF;i++) print $i}'`)

shift 2 ;;

-h|--help)

helpflag=1

return 0

shift

;;

-a|--address)

[ $# -lt 2 ] && return 3

addressflag=1

vip=$2

shift 2

;;

-m|--mode)

[ $# -lt 2 ] && return 3

mode=$2

shift 2

;;

-n|--notify)

[ $# -lt 2 ] && return 3

notifyflag=1

notify=$2

shift 2

;;

*)

echo "Wrong options..."

Usage

return 7

;;

esac

done

return 0

fi

}

#workspace=$(dirname $0)

RestartService() {

if [ ${#@} -gt 0 ]; then

for I in $@; do

if [ -x /etc/rc.d/init.d/$I ]; then

/etc/rc.d/init.d/$I restart

else

echo "$I is not a valid service..."

fi

done

fi

}

StopService() {

if [ ${#@} -gt 0 ]; then

for I in $@; do

if [ -x /etc/rc.d/init.d/$I ]; then

/etc/rc.d/init.d/$I stop

else

echo "$I is not a valid service..."

fi

done

fi

}

Notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`, vrrp transition, `hostname` changed to be $1."

echo $mailbody | mail -s "$mailsubject" $contact

}

# Main Function

ParseOptions "$@"

[ $? -ne 0 ] && Usage && exit 5

[ $helpflag -eq 1 ] && Usage && exit 0

if [ $addressflag -ne 1 -o $notifyflag -ne 1 ]; then

Usage

exit 2

fi

mode=${mode:-mb}

case $notify in

'master')

if [ $serviceflag -eq 1 ]; then

RestartService ${services[*]}

fi

Notify master

;;

'backup')

if [ $serviceflag -eq 1 ]; then

if [ "$mode" == 'mb' ]; then

StopService ${services[*]}

else

RestartService ${services[*]}

fi

fi

Notify backup

;;

'fault')

Notify fault

;;

*)

Usage

exit 4

;;

esac
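The -s option takes a comma-separated list, which the script splits with awk. As an alternative sketch, the same split can be done with bash alone; `split_services` is a hypothetical helper and is not part of notify.sh.

```shell
#!/bin/bash
# Split a comma-separated service list into a bash array without awk.
# split_services is a hypothetical helper, not part of notify.sh.
split_services() {
    local IFS=,
    # Fills the global array "services", one element per list entry.
    read -r -a services <<< "$1"
}

split_services "httpd,nginx,mysqld"
echo "count: ${#services[@]}"
for s in "${services[@]}"; do
    echo "service: $s"
done
```

Limiting the IFS change to the function with `local` keeps the rest of the script unaffected.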

(2). In the keepalived.conf configuration file, the script is called as follows:

- notify_master "/etc/keepalived/notify.sh -n master -a 172.16.21.100"

- notify_backup "/etc/keepalived/notify.sh -n backup -a 172.16.21.100"

- notify_fault "/etc/keepalived/notify.sh -n fault -a 172.16.21.100"
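Because the three lines differ only in the target state and the VIP, they can be generated rather than typed by hand, which helps once a second VIP is involved. This is a small sketch; `notify_lines` is a hypothetical helper, and the script path is the one used in this article.

```shell
#!/bin/bash
# Print the three notify_* lines for one VIP.
# notify_lines is a hypothetical helper; paste its output into vrrp_instance.
notify_lines() {
    local vip=$1 state
    for state in master backup fault; do
        printf 'notify_%s "/etc/keepalived/notify.sh -n %s -a %s"\n' "$state" "$state" "$vip"
    done
}

notify_lines 172.16.21.100
```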

(3). Modify the configuration files

master (node17): only the three notify_ lines marked below need to be added to the existing configuration

[root@node17 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # the administrator's mailbox

}

notification_email_from [email protected] # the sender address

smtp_server 127.0.0.1 # the mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

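vrrp_script chk_schedown { # define the VRRP check script

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0" # if the down file exists, enter maintenance mode

interval 1 # check interval in seconds

weight -5 # lower the priority by 5 when the check fails

fall 2 # consecutive failures before the check counts as failed

rise 1 # consecutive successes before the check counts as passed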

}

vrrp_instance VI_1 {

state MASTER # initial state

interface eth0

virtual_router_id 51

priority 101 # priority

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address

}

track_script { # scripts to track

chk_schedown

}

# add the following three lines

notify_master "/etc/keepalived/notify.sh -n master -a 172.16.21.100"

notify_backup "/etc/keepalived/notify.sh -n backup -a 172.16.21.100"

notify_fault "/etc/keepalived/notify.sh -n fault -a 172.16.21.100"

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # add a sorry_server line

}

#virtual_server#10.10.10.2 1358 {

# delay_loop 6

# lb_algo rr

# lb_kind NAT

# persistence_timeout 50

# protocol TCP

# sorry_server 192.168.200.200 1358

# real_server 192.168.200.2 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.3 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

#virtual_server#10.10.10.3 1358 {

# delay_loop 3

# lb_algo rr

# lb_kind NAT

# nat_mask 255.255.255.0

# persistence_timeout 50

# protocol TCP

# real_server 192.168.200.4 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.5 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

slave (node18): only the lines marked below need to be added to the existing configuration

[root@node18 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected] # the administrator's mailbox

}

notification_email_from [email protected] # the sender address

smtp_server 127.0.0.1 # the mail server

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_schedown {

script "[ -e /etc/keepalived/down ] && exit 1 || exit 0"

interval 1

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP # initial state, changed to BACKUP

interface eth0

virtual_router_id 51

priority 100 # priority, lower than the master

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.100 # virtual IP address

}

track_script {

chk_schedown

}

# add the following three lines

notify_master "/etc/keepalived/notify.sh -n master -a 172.16.21.100"

notify_backup "/etc/keepalived/notify.sh -n backup -a 172.16.21.100"

notify_fault "/etc/keepalived/notify.sh -n fault -a 172.16.21.100"

}

virtual_server 172.16.21.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # add a sorry_server line

}

#virtual_server#10.10.10.2 1358 {

# delay_loop 6

# lb_algo rr

# lb_kind NAT

# persistence_timeout 50

# protocol TCP

# sorry_server 192.168.200.200 1358

# real_server 192.168.200.2 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.3 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334c

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

#virtual_server#10.10.10.3 1358 {

# delay_loop 3

# lb_algo rr

# lb_kind NAT

# nat_mask 255.255.255.0

# persistence_timeout 50

# protocol TCP

# real_server 192.168.200.4 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

# real_server 192.168.200.5 1358 {

# weight 1

# HTTP_GET {

# url {

# path /testurl/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl2/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# url {

# path /testurl3/test.jsp

# digest 640205b7b0fc66c1ea91c463fac6334d

# }

# connect_timeout 3

# nb_get_retry 3

# delay_before_retry 3

# }

# }

#}

(4). Add the script

[root@node18 keepalived]# pwd

/etc/keepalived

[root@node18 keepalived]# vim notify.sh

[root@node18 keepalived]# cat notify.sh

#!/bin/bash

# Author: freeloda

# description: An example of notify script

# Usage: notify.sh -m|--mode {mm|mb} -s|--service SERVICE1,... -a|--address VIP -n|--notify {master|backup|fault} -h|--help

contact=' [email protected]'


helpflag=0

serviceflag=0

modeflag=0

addressflag=0

notifyflag=0

Usage() {

echo "Usage: notify.sh [-m|--mode {mm|mb}] [-s|--service SERVICE1,...] <-a|--address VIP> <-n|--notify {master|backup|fault}>"

echo "Usage: notify.sh -h|--help"

}

ParseOptions() {

local I=1;

if [ $# -gt 0 ]; then

while [ $I -le $# ]; do

case $1 in

-s|--service)

[ $# -lt 2 ] && return 3

serviceflag=1

services=(`echo $2|awk -F"," '{for(i=1;i<=NF;i++) print $i}'`)

shift 2 ;;

-h|--help)

helpflag=1

return 0

shift

;;

-a|--address)

[ $# -lt 2 ] && return 3

addressflag=1

vip=$2

shift 2

;;

-m|--mode)

[ $# -lt 2 ] && return 3

mode=$2

shift 2

;;

-n|--notify)

[ $# -lt 2 ] && return 3

notifyflag=1

notify=$2

shift 2

;;

*)

echo "Wrong options..."

Usage

return 7

;;

esac

done

return 0

fi

}

#workspace=$(dirname $0)

RestartService() {

if [ ${#@} -gt 0 ]; then

for I in $@; do

if [ -x /etc/rc.d/init.d/$I ]; then

/etc/rc.d/init.d/$I restart

else

echo "$I is not a valid service..."

fi

done

fi

}

StopService() {

if [ ${#@} -gt 0 ]; then

for I in $@; do

if [ -x /etc/rc.d/init.d/$I ]; then

/etc/rc.d/init.d/$I stop

else

echo "$I is not a valid service..."

fi

done

fi

}

Notify() {

mailsubject="`hostname` to be $1: $vip floating"

mailbody="`date '+%F %H:%M:%S'`, vrrp transition, `hostname` changed to be $1."

echo $mailbody | mail -s "$mailsubject" $contact

}

# Main Function

ParseOptions "$@"

[ $? -ne 0 ] && Usage && exit 5

[ $helpflag -eq 1 ] && Usage && exit 0

if [ $addressflag -ne 1 -o $notifyflag -ne 1 ]; then

Usage

exit 2

fi

mode=${mode:-mb}

case $notify in

'master')

if [ $serviceflag -eq 1 ]; then

RestartService ${services[*]}

fi

Notify master

;;

'backup')

if [ $serviceflag -eq 1 ]; then

if [ "$mode" == 'mb' ]; then

StopService ${services[*]}

else

RestartService ${services[*]}

fi

fi

Notify backup

;;

'fault')

Notify fault

;;

*)

Usage

exit 4

;;

esac

[root@node18 keepalived]# chmod +x notify.sh

(6). Copy the script from the slave (node18) to the master (node17)

[root@node18 keepalived]# scp -rp notify.sh node17:/etc/keepalived/

notify.sh

(7). Test the script

[root@node18 keepalived]# ./notify.sh -h

Usage: notify.sh [-m|--mode {mm|mb}] [-s|--service SERVICE1,...] <-a|--address VIP> <-n|--notify {master|backup|fault}>

Usage: notify.sh -h|--help

[root@node18 keepalived]# ./notify.sh --help

Usage: notify.sh [-m|--mode {mm|mb}] [-s|--service SERVICE1,...] <-a|--address VIP> <-n|--notify {master|backup|fault}>

Usage: notify.sh -h|--help

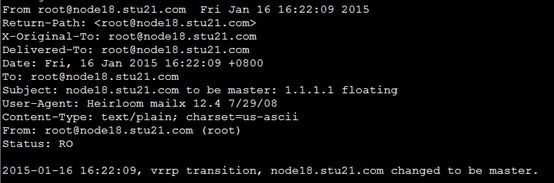

[root@node18 keepalived]# ./notify.sh -m mb -a 1.1.1.1 -n master

(9). Simulate a failure. Remember to restart the keepalived service before simulating the failure.

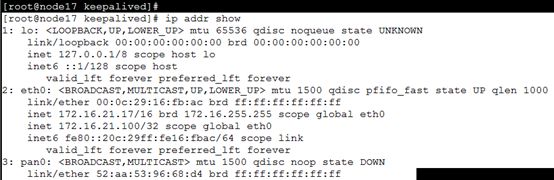

[root@node17 keepalived]# ip addr show # check the VIP

[root@node17 keepalived]# touch down # enter maintenance mode

[root@node18 keepalived]# ip addr show # the VIP has moved to the slave
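Reading the full `ip addr show` output by eye gets tedious during repeated failover tests. A minimal sketch of an automated check follows; `has_vip` is a hypothetical helper, and the sample text only imitates the one-line format of `ip -o -4 addr show` with assumed addresses and prefix lengths.

```shell
#!/bin/bash
# Report whether a VIP appears in "ip -o -4 addr show" style text on stdin.
# has_vip is a hypothetical helper written for this illustration.
has_vip() {
    # -F matches the address literally; the trailing slash stops
    # 172.16.21.10 from matching 172.16.21.100.
    grep -qF "inet $1/"
}

# Illustrative sample; the node address and prefix lengths are assumptions.
sample='2: eth0    inet 172.16.21.18/16 brd 172.16.255.255 scope global eth0
2: eth0    inet 172.16.21.100/32 scope global eth0'

if printf '%s\n' "$sample" | has_vip 172.16.21.100; then
    echo "VIP is on this node"
else
    echo "VIP is elsewhere"
fi

# On a real node: ip -o -4 addr show | has_vip 172.16.21.100
```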

Check the mail on the node that holds the VIP:

[root@node18 keepalived]# mail

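A warning email is sent whenever the master and backup switch roles. That completes all of the demonstrations.

Question 1:

One more small question: if both keepalived nodes are configured as MASTER in /etc/keepalived/keepalived.conf and both have the same priority, which node ends up holding the VIP?

Answer: the node with the higher IP address wins. When both nodes are on the same subnet, as they are here, this comes down to which node has the larger last octet.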
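The tie-break can be sketched as a numeric comparison of the two addresses. Both function names below are hypothetical, and the addresses 172.16.21.17 and 172.16.21.18 for node17 and node18 are assumptions, since the article does not list them in this section.

```shell
#!/bin/bash
# Convert a dotted IPv4 address to an integer so two addresses can be compared.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# With equal priorities, the higher primary address wins the election.
tie_winner() {
    if [ "$(ip_to_int "$1")" -gt "$(ip_to_int "$2")" ]; then
        echo "$1"
    else
        echo "$2"
    fi
}

# Assumed addresses for node17 and node18.
tie_winner 172.16.21.17 172.16.21.18
```

Under these assumed addresses the comparison picks 172.16.21.18, so node18 would hold the VIP.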

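Question 2:

Set up a dual-master model with two VIPs. It still needs only two servers (node17 and node18): each node is the master for one VIP and the backup for the other.

Just add a vrrp_instance VI_2 block and a matching virtual_server block to each of the two configuration files.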

node17:

---------------------------------------------------------------------------------------------------------------

vrrp_instance VI_2 {

state BACKUP # initial state, BACKUP for this instance

#state MASTER # used for testing

interface eth0

virtual_router_id 52

priority 99 # priority, lower than node18 for this instance

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.101 # virtual IP address

}

track_script {

chk_schedown

}

# add the following three lines

notify_master "/etc/keepalived/notify.sh -n master -a 172.16.21.101"

notify_backup "/etc/keepalived/notify.sh -n backup -a 172.16.21.101"

notify_fault "/etc/keepalived/notify.sh -n fault -a 172.16.21.101"

}

----------------------------------------------------------------------------------------------------------------------

virtual_server 172.16.21.101 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # add a sorry_server line

}

--------------------------------------------------------------------------------------------------------------------

node18:

vrrp_instance VI_2 {

#state BACKUP # used for testing

state MASTER # initial state, MASTER for this instance

interface eth0

virtual_router_id 52

priority 101 # priority, higher than node17 for this instance

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.21.101 # virtual IP address

}

track_script {

chk_schedown

}

# add the following three lines

notify_master "/etc/keepalived/notify.sh -n master -a 172.16.21.101"

notify_backup "/etc/keepalived/notify.sh -n backup -a 172.16.21.101"

notify_fault "/etc/keepalived/notify.sh -n fault -a 172.16.21.101"

}

virtual_server 172.16.21.101 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

#persistence_timeout 50

protocol TCP

real_server 172.16.21.15 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

real_server 172.16.21.16 80 { # real server

weight 1

HTTP_GET { # health check

url {

path /

#digest ff20ad2481f97b1754ef3e12ecd3a9cc

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 1

}

}

sorry_server 127.0.0.1 80 # add a sorry_server line

}


----------------------------------------------------------------------------------------------------------------------

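Question 2 is not finished yet. In the earlier LVS-DR setup, node15 and node16 were configured with only one VIP, 172.16.21.100, so the dual-master keepalived pair (node17, node18) cannot yet provide high availability for RS1 (node15) and RS2 (node16) through the second VIP.

So we now update the /root/src/realserver.sh script on node15 and node16 (the script was created earlier in this article).

node15: only the lines that reference VIP1 and lo:1 need to be added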

[root@node15 src]# pwd

/root/src

[root@node15 src]# ls

realserver.sh

[root@node15 src]# vim realserver.sh

#!/bin/bash

#

# Script to start LVS DR real server.

# description: LVS DR real server

#

. /etc/rc.d/init.d/functions

VIP=172.16.21.100

VIP1=172.16.21.101 # the additional VIP used by the dual-master model

host=`/bin/hostname`

case "$1" in

start)

# Start LVS-DR real server on this machine.

/sbin/ifconfig lo down

/sbin/ifconfig lo up

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

/sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/ifconfig lo:1 $VIP1 broadcast $VIP1 netmask 255.255.255.255 up

/sbin/route add -host $VIP dev lo:0

/sbin/route add -host $VIP1 dev lo:1

;;

stop)

# Stop LVS-DR real server loopback device(s).

/sbin/ifconfig lo:0 down

/sbin/ifconfig lo:1 down

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

;;

status)

# Status of LVS-DR real server.

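islothere=`/sbin/ifconfig lo:0 | grep $VIP`

islothere1=`/sbin/ifconfig lo:1 | grep $VIP1`

# The route table may list the interface as lo rather than lo:0, so match on lo.

isrothere=`netstat -rn | grep "lo" | grep $VIP`

isrothere1=`netstat -rn | grep "lo" | grep $VIP1`

if [ ! "$islothere" -o ! "$islothere1" -o ! "$isrothere" -o ! "$isrothere1" ];then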

# Either the route or the lo:0 device

# not found.

echo "LVS-DR real server Stopped."

else

echo "LVS-DR real server Running."

fi

;;

*)

# Invalid entry.

echo "$0: Usage: $0 {start|status|stop}"

exit 1

;;

esac

node16:

Just copy the /root/src/realserver.sh script from node15 to node16:

[root@node15 src]# scp realserver.sh node16:/root/src/

realserver.sh 100% 1881 1.8KB/s 00:00

Next, test it:

VIP=172.16.21.100 is highly available

VIP=172.16.21.101 is highly available
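One way to run this test from a client is to request the index page through each VIP several times and watch the responses. The sketch below assumes curl is installed on the client; `probe_vips` is a hypothetical helper, not something the article provides.

```shell
#!/bin/bash
# Request the index page through each VIP a given number of times.
# probe_vips is a hypothetical helper; it assumes curl is available.
probe_vips() {
    local rounds=$1 vip i
    shift
    for vip in "$@"; do
        for (( i = 1; i <= rounds; i++ )); do
            printf '%s #%d: ' "$vip" "$i"
            curl -s --connect-timeout 3 "http://$vip/" || echo "no response"
        done
    done
}

# Example, run from a client on the same network:
# probe_vips 4 172.16.21.100 172.16.21.101
```

Running it again after creating the down file on one director should show both VIPs still answering, which is the behaviour the dual-master setup is meant to provide.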