Globalization Step-by-Step: Unicode Enabled

Overview and Description

The complex programming methods required for working with mixed-byte encodings, the involved process of creating new code pages every time another language requires computer support, and the importance of mixing and sharing information in a variety of languages across different systems were some of the factors motivating the creators of the Unicode encoding standard. Unicode originated through collaboration between Xerox and Apple. An ad hoc committee of several companies then formed, and others, including IBM and Microsoft, rapidly joined. In 1991, this group founded the Unicode Consortium, whose membership now includes several leading Information Technology (IT) companies. (For more information on Unicode, visit the Unicode Consortium's site at http://www.Unicode.org.)

Unicode is an especially good fit for the age of the Internet, since the worldwide nature of the Internet demands solutions that work in any language. The World Wide Web Consortium (W3C) has recognized this fact, and new Internet specifications are expected to use Unicode for text. Many other products and standards now require or allow use of Unicode; for example, XML, HTML, Microsoft JScript, Java, Perl, Microsoft C#, and Microsoft Visual Basic 7 (VB.NET). Today, Unicode is the de facto character encoding standard accepted by all major computer companies, while ISO 10646 is the corresponding worldwide de jure standard approved by all ISO member countries. The two standards include identical character repertoires and binary representations.

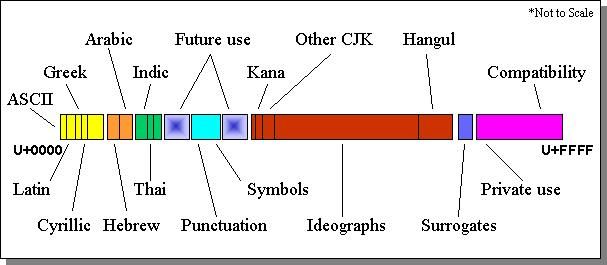

Unicode encompasses virtually all characters used widely in computers today. It is capable of addressing more than 1.1 million code points. The standard has provisions for 8-bit, 16-bit, and 32-bit encoding forms. The 16-bit encoding form is the default, and the code space of more than a million code points is divided into 17 "planes," each addressing 65,536 code points. The characters in Plane 0, commonly called the "Basic Multilingual Plane" (BMP), represent most of the world's written scripts, characters used in publishing, mathematical and technical symbols, geometric shapes, basic dingbats (including all level-100 Zapf Dingbats), and punctuation marks. In addition to the support for characters in modern languages and for the symbols and shapes just mentioned, Unicode also offers coverage for other characters, such as less commonly used Chinese, Japanese, and Korean (CJK) ideographs, Arabic presentation forms, and musical symbols. Many of these additional characters are encoded beyond Plane 0 and are reached in the 16-bit encoding form through an extension mechanism called "surrogate pairs." With Unicode 3.2, over 95,000 code points have already been assigned characters; the rest have been set aside for future use. Unicode also provides Private Use Areas of over 131,000 locations available to applications for user-defined characters, which typically are rare ideographs representing names of people or places.

The figure below shows the Unicode encoding layout for the BMP (Plane 0) in abstract form.

Figure 1: Unicode encoding layout for the BMP (Plane 0)

Unicode rules, however, are strict about code-point assignment: each code point has a distinct representation. There are also many cases in which Unicode deliberately does not provide code points. Variants of existing characters are not given separate code points, because doing so would encode the same underlying character twice. Examples are font variants (such as bold and italic) and glyph variants, which are simply different ways of representing the same characters.

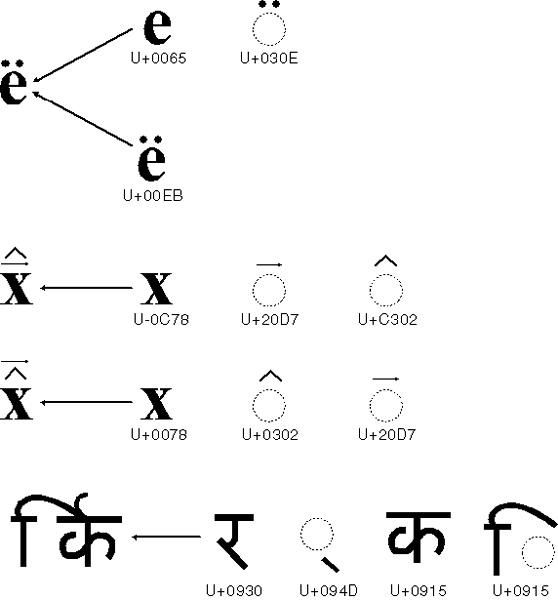

For the most part, Unicode defines characters uniquely, but some characters can be combined to form others, such as accented characters. The most common accented characters, which are used in French, German, and many other European languages, exist in their precomposed forms and are assigned code points. These same characters can be expressed by combining a base character with one or more nonspacing diacritic marks. For example, "a" followed by a nonspacing accent mark is displayed as "à." Nonspacing accent marks make it possible to have a large set of accented characters without assigning them all distinct code points. This is useful for representing accented characters in written languages that are less widely used, such as some African languages. It's also useful for creating a variety of mathematical symbols. The precomposed characters are encoded in the Unicode Standard primarily for compatibility with other encodings. The Unicode Standard contains strict rules for determining the equivalence of precomposed characters to combining character sequences. The Win32 API function FoldStringW maps multiple combining characters into precomposed forms. Also, MultiByteToWideChar can be used with either the MB_PRECOMPOSED or the MB_COMPOSITE flags for mapping characters to their precomposed or composite forms.

For all its advantages, the Unicode Standard is far from a panacea for internationalization. The code-point positions of Unicode elements do not imply a sort order, and Unicode does not encode font information. These rules are defined by the operating system: Win32-based applications, for example, need to obtain sorting and font information from the operating system.

In addition, basing your software on the Unicode Standard is only one step in the internationalization process. You still need to write code that adapts to cultural preferences or language rules. (For more information on other globalization considerations, see "Locale Model", "Input, Display, and Output", and "Multilanguage User Interface [MUI].")

As a further caveat, not all Unicode-based text processing is a matter of simple character-by-character parsing. Complex text-based operations such as hyphenation, line breaking, and glyph formation need to take into account the context in which they are being used (the relation to surrounding characters, for instance). The complexity of these operations hinges on language rules and has nothing to do with Unicode as an encoding standard. Instead, the software implementation should define a higher-level protocol for handling these operations.

In contrast, there are unusual characters that have very specific semantic rules attached to them; these characters are detailed in The Unicode Standard. Some characters always allow a line break (for example, most spaces), whereas others never do (for instance, nonspacing or nonbreaking characters). Still other characters, including many used in Arabic and Hebrew, are defined as having strong or weak text directionality. The Unicode Standard defines an algorithm for determining the display order of bidirectional text, and it also defines several "directional formatting codes" as overrides for cases not handled by the implicit bidirectional ordering rules to help create comprehensible bidirectional text. These formatting codes allow characters to be stored in logical order but displayed appropriately depending on their directionality. Neutral characters, such as punctuation marks, assume the directionality of the strong or weak characters nearby. Formatting codes can be used to delineate embedded text or to specify the directionality of characters. (For more information on displaying bidirectional Unicode-based text, see The Unicode Standard book.)

Figure 2: Precomposed and composite characters

You have now seen some of the capabilities that Unicode offers. The sections that follow delve deeper into Unicode's functions to provide helpful information as you work with the Unicode Standard and its encodings. For instance, what is the function of byte-order marks (BOMs)? What are surrogate pairs, and how do they enable you to go from encoding 65,000 characters to over 1 million additional characters? These and other questions will be explored in the following sections.

Transformations of Unicode Code Points

There are different techniques to represent each one of the Unicode code points in binary format. Each of the following techniques uses a different mapping to represent unique Unicode characters. The Unicode encodings are:

- UTF-8: To meet the requirements of byte-oriented and ASCII-based systems, UTF-8 has been defined by the Unicode Standard. Each character is represented in UTF-8 as a sequence of up to 4 bytes, where the first byte indicates the number of bytes to follow in a multibyte sequence, allowing for efficient string parsing. UTF-8 is commonly used in transmission via Internet protocols and in Web content.

- UTF-16: This is the 16-bit encoding form of the Unicode Standard where characters are assigned a unique 16-bit value, with the exception of characters encoded by surrogate pairs, which consist of a pair of 16-bit values. The Unicode 16-bit encoding form is identical to the International Organization for Standardization/International Electrotechnical Commission (ISO/IEC) transformation format UTF-16. In UTF-16, any characters that are mapped up to the number 65,535 are encoded as a single 16-bit value; characters mapped above the number 65,535 are encoded as pairs of 16-bit values. (For more information on surrogate pairs, see "Surrogate Pairs" later in this chapter.) UTF-16 little-endian is the encoding standard at Microsoft (and in the Windows operating system).

- UTF-32: Each character is represented as a single 32-bit integer.

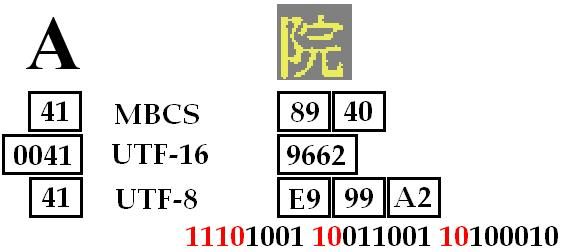

The figure below shows two characters encoded in both code pages and Unicode, using UTF-16 and UTF-8.

Figure 3: The character "A" and a kanji character encoded in code pages and in Unicode with both UTF-16 and UTF-8.

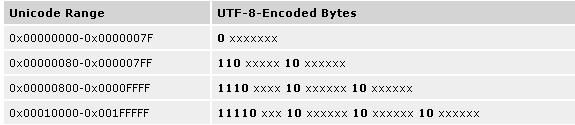

Since UTF-8 is so commonly used in Web content, it's helpful to know how Unicode code points get mapped into this encoding without introducing the hassle of MBCS characters. Table 1 shows the relationship between Unicode code points and a UTF-8-encoded character. The starting byte of a chain of bytes in a UTF-8 encoded character tells how many bytes are used to encode that character. All the following bytes start with the mark "10" and the xxx's denote the binary representation of the encoding within the given range.

Table 1: Relationship between Unicode code points and a UTF-8-encoded character. In UTF-8, the first byte indicates the number of bytes to follow in a multibyte-encoded sequence.
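The bit layouts that Table 1 describes can be sketched in portable C. This is a minimal illustration rather than a production encoder; the function name utf8_encode is hypothetical and not part of any Win32 or C run-time API.

```c
#include <stddef.h>
#include <stdint.h>

/* Encode one Unicode code point as UTF-8 (hypothetical helper).
 * Writes 1 to 4 bytes into out and returns the byte count, or 0 if cp is
 * a surrogate code point or lies beyond U+10FFFF. */
size_t utf8_encode(uint32_t cp, unsigned char out[4])
{
    if (cp <= 0x7F) {                        /* 0xxxxxxx */
        out[0] = (unsigned char)cp;
        return 1;
    }
    if (cp <= 0x7FF) {                       /* 110xxxxx 10xxxxxx */
        out[0] = (unsigned char)(0xC0 | (cp >> 6));
        out[1] = (unsigned char)(0x80 | (cp & 0x3F));
        return 2;
    }
    if (cp >= 0xD800 && cp <= 0xDFFF)        /* surrogates are not characters */
        return 0;
    if (cp <= 0xFFFF) {                      /* 1110xxxx 10xxxxxx 10xxxxxx */
        out[0] = (unsigned char)(0xE0 | (cp >> 12));
        out[1] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[2] = (unsigned char)(0x80 | (cp & 0x3F));
        return 3;
    }
    if (cp <= 0x10FFFF) {                    /* 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx */
        out[0] = (unsigned char)(0xF0 | (cp >> 18));
        out[1] = (unsigned char)(0x80 | ((cp >> 12) & 0x3F));
        out[2] = (unsigned char)(0x80 | ((cp >> 6) & 0x3F));
        out[3] = (unsigned char)(0x80 | (cp & 0x3F));
        return 4;
    }
    return 0;                                /* outside the Unicode code space */
}
```

Because ASCII characters keep their single-byte values, a routine like this leaves plain ASCII text unchanged, which is why UTF-8 suits byte-oriented systems.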

Byte-Order Marks

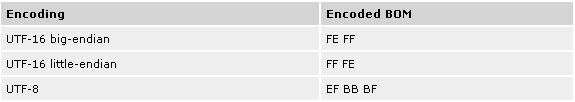

Another concept to be familiar with as you work with Unicode is that of byte-order marks. A BOM is used to indicate how a processor places serialized text into a sequence of bytes. If the least significant byte is placed in the initial position, this is referred to as "little-endian," whereas if the most significant byte is placed in the initial position, the method is known as "big-endian." A BOM can also be used as a signature that identifies the encoding of a text file. Notepad, for example, adds the BOM that matches the chosen encoding to the beginning of each file it saves. This signature allows Notepad to reopen the file later with the right encoding. Table 2 shows byte-order marks for various encodings. The UTF-8 BOM identifies the encoding format rather than the byte order of the document, since UTF-8 represents each character as a sequence of bytes whose order is fixed.

Table 2: Binary representation of the byte-order mark (U+FEFF) for specific encodings.
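As an illustration of how a BOM can act as an encoding signature, the following sketch inspects the first bytes of a buffer. The enumeration and the function name detect_bom are hypothetical; the byte sequences are the standard serializations of U+FEFF in each encoding.

```c
#include <stddef.h>

/* Encodings that a leading byte-order mark can identify (hypothetical names). */
typedef enum {
    ENC_UNKNOWN,
    ENC_UTF8,
    ENC_UTF16_LE,
    ENC_UTF16_BE,
    ENC_UTF32_LE,
    ENC_UTF32_BE
} bom_encoding;

/* Inspect the first bytes of a buffer for a serialized U+FEFF. */
bom_encoding detect_bom(const unsigned char *buf, size_t len)
{
    /* UTF-32 must be tested before UTF-16: FF FE 00 00 begins with FF FE. */
    if (len >= 4 && buf[0] == 0xFF && buf[1] == 0xFE && buf[2] == 0x00 && buf[3] == 0x00)
        return ENC_UTF32_LE;
    if (len >= 4 && buf[0] == 0x00 && buf[1] == 0x00 && buf[2] == 0xFE && buf[3] == 0xFF)
        return ENC_UTF32_BE;
    if (len >= 3 && buf[0] == 0xEF && buf[1] == 0xBB && buf[2] == 0xBF)
        return ENC_UTF8;
    if (len >= 2 && buf[0] == 0xFF && buf[1] == 0xFE)
        return ENC_UTF16_LE;
    if (len >= 2 && buf[0] == 0xFE && buf[1] == 0xFF)
        return ENC_UTF16_BE;
    return ENC_UNKNOWN;   /* no BOM; the encoding must be determined another way */
}
```

One caveat of this sketch: a UTF-16 little-endian file whose first character after the BOM is U+0000 would be reported as UTF-32, so real code may need additional heuristics.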

Surrogate Pairs

With the Unicode 16-bit encoding system, over 65,000 characters can be encoded (2^16 = 65,536). However, the total number of characters that need to be encoded has exceeded that limit (mainly to accommodate the CJK extension of characters). To make room for new characters, developers of the Unicode Standard introduced the notion of surrogate pairs. With surrogate pairs, a Unicode code point from the range U+D800 to U+DBFF (called "high surrogate") is combined with another Unicode code point from the range U+DC00 to U+DFFF (called "low surrogate") to generate a whole new character, allowing the encoding of over 1 million additional characters. Unlike MBCS lead bytes and trail bytes, high and low surrogates occupy reserved ranges and have no interpretation of their own outside a surrogate pair, which avoids one of the major challenges of lead-byte and trail-byte processing of MBCS text.
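The arithmetic behind surrogate pairs is compact: 1,024 high surrogates times 1,024 low surrogates yield 1,048,576 additional code points, starting at U+10000. The sketch below shows the mapping in both directions; the function names are hypothetical, and the decoder assumes its inputs were already validated as a high and a low surrogate.

```c
#include <stdint.h>

/* Split a supplementary code point (U+10000..U+10FFFF) into a surrogate pair.
 * Returns 1 on success, 0 if cp does not need (or cannot use) a pair. */
int utf16_encode_pair(uint32_t cp, uint16_t *high, uint16_t *low)
{
    if (cp < 0x10000 || cp > 0x10FFFF)
        return 0;
    cp -= 0x10000;                               /* 20 significant bits remain */
    *high = (uint16_t)(0xD800 + (cp >> 10));     /* upper 10 bits */
    *low  = (uint16_t)(0xDC00 + (cp & 0x3FF));   /* lower 10 bits */
    return 1;
}

/* Combine a high and a low surrogate back into a code point.
 * The caller is assumed to have checked both ranges beforehand. */
uint32_t utf16_decode_pair(uint16_t high, uint16_t low)
{
    return 0x10000u + (((uint32_t)(high - 0xD800) << 10) | (uint32_t)(low - 0xDC00));
}
```

For example, the musical symbol at U+1D11E maps to the pair D834 DD1E under this scheme.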

For the first time, in Unicode 3.1, characters are encoded beyond the original 16-bit code space, the BMP (Plane 0). These new characters, encoded at code positions of U+10000 or higher, are synchronized with the international standard ISO/IEC 10646-2. In addition to two Private Use Areas, plane 15 (U+F0000 - U+FFFFD) and plane 16 (U+100000 - U+10FFFD), Unicode 3.1 and 10646-2 define three new supplementary planes:

- Supplementary Multilingual Plane (SMP), with code positions from U+10000 through U+1FFFF

- Supplementary Ideographic Plane (SIP), with code positions from U+20000 through U+2FFFF

- Supplementary Special-purpose Plane (SSP), with code positions from U+E0000 through U+EFFFF

The SMP, or Plane 1, contains several historic scripts and several sets of symbols: Old Italic, Gothic, Deseret, Byzantine Musical Symbols, (Western) Musical Symbols, and Mathematical Alphanumeric Symbols. Together these comprise 1,594 newly encoded characters. The SIP, or Plane 2, contains a very large collection of additional unified Han ideographs known as "CJK Extension B," comprising 42,711 characters, as well as 542 additional CJK Compatibility ideographs. The SSP, or Plane 14, contains a set of 97 tag characters.
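Because each plane spans 65,536 code points, the plane of a code point is simply its value shifted right by 16 bits. The following sketch, with hypothetical function names, maps a code point to the planes named in this section.

```c
#include <stdint.h>

/* Plane number (0 to 16) of a code point, or -1 if it is out of range. */
int unicode_plane(uint32_t cp)
{
    return cp <= 0x10FFFF ? (int)(cp >> 16) : -1;
}

/* Abbreviation for the planes named in this section (hypothetical helper). */
const char *unicode_plane_name(uint32_t cp)
{
    switch (unicode_plane(cp)) {
    case 0:  return "BMP";
    case 1:  return "SMP";
    case 2:  return "SIP";
    case 14: return "SSP";
    case 15:
    case 16: return "Private Use";
    case -1: return "invalid";
    default: return "other";   /* planes this section does not name */
    }
}
```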

Creating Win32 Unicode Applications

You've now learned more about the benefits and capabilities that Unicode offers, in addition to looking more closely at its functionality. You might also be wondering about the extent to which Windows supports Unicode's features. Microsoft Windows NT 3.1 was the first major operating system to support Unicode, and since then Microsoft Windows NT 4, Microsoft Windows 2000, and Microsoft Windows XP have extended this support, with Unicode being their native encoding. In fact, when you run a non-Unicode application on them, the operating system converts the application's text internally to Unicode before any processing is done. The operating system then converts the text back to the expected code-page encoding before passing the information back to the application.

In addition, Windows XP supports a majority of the Unicode code points with fonts, keyboard drivers, and other system files necessary to the input and display of content in all supported languages. Once again, the fundamental representation of text in Windows NT-based operating systems is UTF-16, and the WCHAR data type is a UTF-16 code unit. Windows does provide interfaces for other encodings in order to be backward-compatible, but converts such text to UTF-16 internally. The system also provides interfaces to convert between UTF-16 and UTF-8 and to inquire about the basic properties of a UTF-16 code point (for example, whether it is a letter, a digit, or a punctuation mark). Since Microsoft Windows 95, Microsoft Windows 98, and Windows Me are not Unicode-based, they provide only a small subset of the Unicode support available in the Windows NT-based versions of Windows. Thus by working with Unicode and Windows NT-based operating systems, you are yet one step closer toward the goal of creating world-ready applications. The remaining sections will show you practical techniques and examples for creating Win32 Unicode applications, as well as tips for using encoding for Web pages, in the .NET Framework, and in console or text-mode programming.

Porting existing code page-based applications to Unicode is easier than you might think. In fact, Unicode was implemented in such a way as to make writing Unicode applications almost transparent to developers. Unicode also needed to be implemented in such a way as to ensure that non-Unicode applications remain functional whenever running in a pure Unicode platform. To accommodate these needs, the implementation of Unicode required changes in two major areas:

- Creation of a data-type variable (WCHAR) to handle 16-bit characters

- Creation of a set of APIs that accept string parameters with 16-bit character encoding

WCHAR, a 16-Bit Data Type

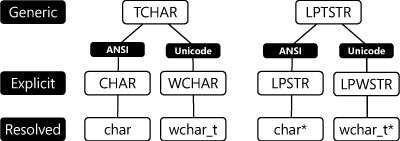

Most string operations for Unicode can be coded with the same logic used for handling the Windows character set. The difference is that the basic unit of operation is a 16-bit quantity instead of an 8-bit one. The header files provide a number of type definitions that make it easy to create sources that can be compiled for Unicode or the Windows character set.

For 8-bit (ANSI) and double-byte characters:

typedef char CHAR; // 8-bit character

typedef char *LPSTR; // pointer to 8-bit string

For Unicode (wide) characters:

typedef unsigned short WCHAR; // 16-bit character

typedef WCHAR *LPWSTR; // pointer to 16-bit string

The figure below shows the method by which the Win32 header files define three sets of types:

- One set of generic type definitions (TCHAR, LPTSTR), which depend on the state of the UNICODE manifest constant.

- Two sets of explicit type definitions (one set for those that are based on code pages or ANSI and one set for Unicode).

With generic declarations, it is possible to maintain a single set of source files and compile them for either Unicode or ANSI support.

Figure 4: WCHAR, a new data type.
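The idea of a generic type that resolves at compile time can be sketched in portable C. The names XCHAR, XTEXT, and xstrlen below are hypothetical stand-ins chosen to avoid clashing with the real Win32 definitions (TCHAR and the TEXT macro described later in this chapter), and wchar_t stands in for WCHAR.

```c
#include <stddef.h>
#include <string.h>
#include <wchar.h>

/* Hypothetical generic character type: wide when UNICODE is defined,
 * narrow otherwise. One source file serves both builds. */
#ifdef UNICODE
typedef wchar_t XCHAR;
#define XTEXT(s) L##s        /* paste the L prefix onto the literal */
#define xstrlen  wcslen
#else
typedef char XCHAR;
#define XTEXT(s) s
#define xstrlen  strlen
#endif

/* Code written against the generic names compiles unchanged either way. */
size_t greeting_length(void)
{
    const XCHAR *greeting = XTEXT("Hello");
    return xstrlen(greeting);   /* length in characters, not bytes */
}
```

Compiling the same file with and without -DUNICODE changes only the width of XCHAR; the character count returned by greeting_length stays the same.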

W Function Prototypes for Win32 APIs

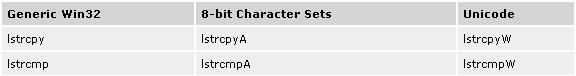

All Win32 APIs that take a text argument, either as an input or output variable, have been provided with a generic function prototype and two definitions: a version that is based on code pages or ANSI (called "A") to handle code page-based text arguments, and a wide version (called "W") to handle Unicode. The generic function prototype consists of the standard API function name implemented as a macro. The generic prototype gets resolved into one of the explicit function prototypes ("A" or "W"), depending on whether the compile-time manifest constant UNICODE is defined in a #define statement. The letter "W" or "A" is added at the end of the API function name in each explicit function prototype.

// windows.h

#ifdef UNICODE

#define SetWindowText SetWindowTextW

#else

#define SetWindowText SetWindowTextA

#endif // UNICODE

With this mechanism, an application can use the generic API function to work transparently with Unicode, depending on the #define UNICODE manifest constant. It can also make mixed calls by using the explicit function name with "W" or "A."

One function of particular importance in this dual compile design is RegisterClass (and RegisterClassEx). A window class is implemented by a window procedure. A window procedure can be registered with either the RegisterClassA or RegisterClassW function. By using the function's "A" version, the program tells the system that the window procedure of the created class expects messages with text or parameters that are based on code pages; other objects associated with the window are created using a Windows code page as the encoding of the text. By registering the window class with a call to the wide-character version of the function, the program can request that the system pass text parameters of messages as Unicode. The IsWindowUnicode function allows programs to query the nature of each window.

On Windows NT 4, Windows 2000, and Windows XP, "A" routines are wrappers that convert text that is based on code pages or ANSI to Unicode, using the system-locale code page, and that then call the corresponding "W" routine. On Windows 95, Windows 98, and Windows Me, "A" routines are native, and most "W" routines are not implemented. If a "W" routine is called but is not implemented, the ERROR_CALL_NOT_IMPLEMENTED error code is returned. (For more information on how to write Unicode-based applications for non-Unicode platforms, see "Microsoft Layer for Unicode (MSLU).")
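The wrapper pattern described above can be imitated in portable C. In this sketch the functions TextLengthA and TextLengthW and the generic macro TextLength are hypothetical, and the standard mbstowcs function (which uses the current C locale) stands in for the code-page conversion that Windows performs with the system-locale code page.

```c
#include <stddef.h>
#include <stdlib.h>
#include <wchar.h>

/* Hypothetical "W" routine: does the real work on wide-character text. */
size_t TextLengthW(const wchar_t *text)
{
    return wcslen(text);
}

/* Hypothetical "A" routine: a thin wrapper that widens its argument and
 * forwards to the "W" routine. Input longer than 255 characters is
 * truncated in this sketch. */
size_t TextLengthA(const char *text)
{
    wchar_t wide[256];
    size_t n = mbstowcs(wide, text, 255);
    if (n == (size_t)-1)
        return 0;              /* invalid multibyte sequence for this locale */
    wide[n] = L'\0';           /* mbstowcs does not terminate a full buffer */
    return TextLengthW(wide);
}

/* The generic name resolves to one explicit version at compile time. */
#ifdef UNICODE
#define TextLength TextLengthW
#else
#define TextLength TextLengthA
#endif
```

Keeping all logic in the "W" routine means there is a single implementation to test; the "A" routine only pays the cost of a conversion.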

Unicode Text Macro

Visual C++ lets you prefix a literal with an "L" to indicate it is a Unicode string, as shown here:

LPWSTR str = L"This is a Unicode string";

In the source file, the string is expressed in the code page that the editor or compiler understands. When compiled, the characters are converted to Unicode. The Win32 SDK resource compiler also supports the "L" prefix notation, even though it can interpret Unicode source files directly. WINDOWS.H defines a macro called TEXT() that marks string literals as Unicode when the UNICODE compile flag is set. The macro uses the token-pasting operator (##) to attach the "L" prefix to the literal.

#ifdef UNICODE

#define TEXT(string) L##string

#else

#define TEXT(string) string

#endif // UNICODE

So, the generic version of a string of characters should become:

LPTSTR str = TEXT("This is a generic string");

C Run-Time Extensions

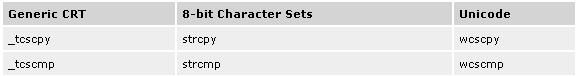

The Unicode data type is compatible with the wide-character data type wchar_t in ANSI C, thus allowing access to the wide-character string functions. Most of the C run-time (CRT) libraries contain wide-character versions of the strxxx string functions. The wide-character versions of the functions all start with wcs.

Table 3: Examples of C run-time library routines used for string manipulation.
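As a small illustration of the wcs naming pattern, the sketch below uses wcslen, wcscpy, and wcscmp, the wide-character counterparts of strlen, strcpy, and strcmp. The helper names copy_wide and same_text are hypothetical.

```c
#include <stddef.h>
#include <wchar.h>

/* Copy src into dst when it fits. cap is the capacity of dst in characters,
 * including the terminator. Returns 1 on success, 0 if src is too long. */
int copy_wide(wchar_t *dst, size_t cap, const wchar_t *src)
{
    if (wcslen(src) >= cap)     /* wcslen counts characters, like strlen */
        return 0;
    wcscpy(dst, src);           /* wide counterpart of strcpy */
    return 1;
}

/* Ordinal comparison of two wide strings, like strcmp(a, b) == 0. */
int same_text(const wchar_t *a, const wchar_t *b)
{
    return wcscmp(a, b) == 0;
}
```

Note that capacities and lengths here are expressed in characters; converting them to bytes requires multiplying by sizeof(wchar_t).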

The C run-time library also provides such functions as mbtowc and wctomb, which can translate the C character set to and from Unicode. The more general set of functions of the Win32 API can perform the same functions as the C run-time libraries including conversions between Unicode, Windows character sets, and MS-DOS code pages. In Windows programming, it is highly recommended that you use the Win32 APIs instead of the CRT libraries in order to take advantage of locale-aware functionality provided by the system, as described in Use Locale Model.

Table 4: Equivalent Win32 API functions for the C run-time library routines.

Conversion Functions Between Code Page and Unicode

Since a large number of applications are still code page-based, and since you might want to support Unicode internally, there are a lot of occasions where a conversion between code-page encodings and Unicode is necessary. The pair of Win32 APIs, MultiByteToWideChar and WideCharToMultiByte, allow you to convert code-page encoding to Unicode and Unicode data to code-page encoding, respectively. Each of these APIs takes as an argument the value of the code page to be used for that conversion. You can, therefore, either specify the value of a given code page (example: 1256 for Arabic) or use predefined flags such as:

- CP_ACP: for the currently selected system Windows code page

- CP_OEMCP: for the currently selected system OEM code page

- CP_UTF8: for conversions between UTF-16 and UTF-8

(For more information, see the Microsoft Developer Network [MSDN] documentation at http://msdn2.microsoft.com.)

By using MultiByteToWideChar and WideCharToMultiByte consecutively, using the same code-page information, you do what is called a "round trip." If the code-page number that is used in this encoding conversion is the same as the code-page number that was used in encoding the original string, the round trip should allow you to retrieve the initial character string.
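To make the round-trip idea concrete without depending on Windows, the following sketch substitutes a hand-written conversion for MultiByteToWideChar and WideCharToMultiByte. It uses ISO 8859-1 (Latin-1) as the code page, chosen only because each of its byte values equals the corresponding Unicode code point; the function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Latin-1 to UTF-16: every byte value is also the character's code point. */
size_t latin1_to_utf16(const unsigned char *src, size_t n, uint16_t *dst)
{
    for (size_t i = 0; i < n; i++)
        dst[i] = src[i];
    return n;
}

/* UTF-16 to Latin-1: code units above U+00FF have no Latin-1 equivalent
 * and are replaced by '?'. Returns the number of characters lost. */
size_t utf16_to_latin1(const uint16_t *src, size_t n, unsigned char *dst)
{
    size_t lost = 0;
    for (size_t i = 0; i < n; i++) {
        if (src[i] <= 0xFF) {
            dst[i] = (unsigned char)src[i];
        } else {
            dst[i] = '?';
            lost++;
        }
    }
    return lost;
}
```

Text that started in the code page survives the round trip intact, whereas Unicode text containing characters outside the code page (an Arabic letter, for instance) comes back with substitution characters. The same asymmetry applies to the Win32 conversion functions.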

Compiling Unicode Applications in Visual C++

By using the generic data types and function prototypes, you have the liberty of creating a non-Unicode application or compiling your software as Unicode. To compile an application as Unicode in Visual C/C++, go to Project/Settings/C/C++/General, and include UNICODE and _UNICODE in Preprocessor Definitions. The UNICODE flag is the preprocessor definition for all Win32 APIs and data types, and _UNICODE is the preprocessor definition for C run-time functions.

Migration to Unicode

Creating a new program based on Unicode is fairly easy. Unicode has a few features that require special handling, but you can isolate these in your code. Converting an existing program that uses code-page encoding to one that uses Unicode or generic declarations is also straightforward. Here are the steps to follow:

- Modify your code to use generic data types. Determine which variables declared as char or char* are text, and not pointers to buffers or binary byte arrays. Change these types to TCHAR and TCHAR*, as defined in the Win32 file WINDOWS.H, or to _TCHAR as defined in the Visual C++ file TCHAR.H. Replace instances of LPSTR and LPCH with LPTSTR and LPTCH. Make sure to check all local variables and return types. Using generic data types is a good transition strategy because you can compile both ANSI and Unicode versions of your program without sacrificing the readability of the code. Don't use generic data types, however, for data that will always be Unicode or always stays in a given code page. For example, one of the string parameters to MultiByteToWideChar and WideCharToMultiByte should always be a code page-based data type, and the other should always be a Unicode data type.

- Modify your code to use generic function prototypes. For example, use the C run-time call _tcslen instead of strlen, and use the Win32 API SetWindowText instead of SetWindowTextA. This rule applies to all APIs and C functions that handle text arguments.

- Surround any character or string literal with the TEXT macro. The TEXT macro conditionally places an "L" in front of a character literal or a string literal definition. Be careful with escape sequences. For example, the Win32 resource compiler interprets L\" as an escape sequence specifying a 16-bit Unicode double-quote character, not as the beginning of a Unicode string.

- Create generic versions of your data structures. Type definitions for string or character fields in structures should resolve correctly based on the UNICODE compile-time flag. If you write your own string-handling and character-handling functions, or functions that take strings as parameters, create Unicode versions of them and define generic prototypes for them.

- Change your build process. When you want to build a Unicode version of your application, both the Win32 compile-time flag -DUNICODE and the C run-time compile-time flag -D_UNICODE must be defined.

- Adjust pointer arithmetic. Subtracting char* values yields an answer in terms of bytes; subtracting wchar_t* values yields an answer in terms of 16-bit chunks. When determining the number of bytes (for example, when allocating memory for a string), multiply the length of the string in symbols by sizeof(TCHAR). When determining the number of characters from the number of bytes, divide by sizeof(TCHAR). You can also create macros for these two operations, if you prefer. C makes sure that the ++ and -- operators increment and decrement by the size of the data type. Or even better, use Win32 APIs CharNext and CharPrev.

- Check for any code that assumes a character is always 1 byte long. Code that assumes a character's value is always less than 256 (for example, code that uses a character value as an index into a table of size 256) must be changed. Make sure your definition of the terminating null character is 16 bits long.

- Add code to support special Unicode characters. These include Unicode characters in the compatibility zone, characters in the Private Use Area, combining characters, and characters with directionality. Other special characters include the noncharacter U+FFFF, which can be used as a placeholder, and the byte-order mark U+FEFF, which can serve as a flag that indicates a file is stored in Unicode. The byte-order mark also indicates whether a text stream is little-endian or big-endian: when read with the wrong byte order, it appears as the noncharacter U+FFFE. In plaintext, the line separator U+2028 marks an unconditional end of line. Inserting a paragraph separator, U+2029, between paragraphs makes it easier to lay out text at different line widths.

- Debug your port by enabling your compiler's type-checking. Do this with and without the UNICODE flag defined. Some warnings that you might be able to ignore in the code page-based world will cause problems with Unicode. If your original code compiles cleanly with type-checking turned on, it will be easier to port. The warnings will help you make sure that you are not passing the wrong data type to code that expects wide-character data types.

- Use the Win32 National Language Support API (NLS API) or equivalent C run-time calls to get character typing and sorting information. Don't try to write your own logic for handling locale-specific type checking; your application will end up carrying very large tables!
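The pointer-arithmetic step in the list above is a common source of buffer-size bugs, so a short sketch may help. Here the type unit16 is a hypothetical stand-in for WCHAR (a 16-bit code unit), and the two macros are the kind of helpers the step suggests creating.

```c
#include <stddef.h>
#include <stdint.h>

typedef uint16_t unit16;   /* hypothetical stand-in for the 16-bit WCHAR */

/* Convert between a count of 16-bit units and a count of bytes. */
#define CHARS_TO_BYTES(n) ((n) * sizeof(unit16))
#define BYTES_TO_CHARS(n) ((n) / sizeof(unit16))

/* Subtracting typed pointers yields a distance in 16-bit units, not bytes. */
size_t unit_distance(const unit16 *first, const unit16 *last)
{
    return (size_t)(last - first);
}

/* Bytes needed to store a string of len units plus its terminator. */
size_t buffer_bytes_for(size_t len)
{
    return CHARS_TO_BYTES(len + 1);
}
```

Passing a unit count where a byte count is expected (or the reverse) allocates half or double the intended memory, which is why routing every conversion through one pair of macros is a reasonable habit.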

In the following example, a string is loaded from the resources and is used in two scenarios:

- As a body to a message box

- To be drawn at run time in a given window

For the purpose of simplification, this example will ignore where and how irrelevant variables have been defined. Suppose you want to migrate the following code page-based code to Unicode:

char g_szTemp[MAX_STR]; // Definition of a char data type

// Loading IDS_SAMPLE from the resources in our char variable

LoadString(g_hInst, IDS_SAMPLE, g_szTemp, MAX_STR);

// Using the loaded string as the body of the message box

MessageBox(NULL, g_szTemp, "This is an ANSI message box!", MB_OK);

// Using the loaded string in a call to ExtTextOut for drawing at

// run time

ExtTextOut(hDC, 10, 10, ETO_CLIPPED, NULL, g_szTemp,

strlen(g_szTemp), NULL);

Migrating this code to Unicode is as easy as following the generic coding conventions and properly replacing the data type, Win32 APIs, and C run-time API definitions. The changes are the TCHAR data type, the TEXT macro around the string literal, and the _tcslen call.

#include <tchar.h>

// Include the generic-text header file, which declares _tcslen

TCHAR g_szTemp[MAX_STR]; // Definition of the data type as a

// generic variable

// Calling the generic LoadString and not W or A versions explicitly

LoadString(g_hInst, IDS_SAMPLE, g_szTemp, MAX_STR);

// Using the appropriate text macro for the title of our message box

MessageBox(NULL, g_szTemp, TEXT("This is a Unicode message box."),

MB_OK);

// Using the generic run-time version of strlen

ExtTextOut(hDC, 10, 10, ETO_CLIPPED , NULL, g_szTemp,

_tcslen(g_szTemp), NULL);

After implementing these simple steps, all that is left to do in order to create a Unicode application is to compile your code as Unicode by defining the compiling flags UNICODE and _UNICODE.

Options to Migrate to Unicode

Depending on your needs and your target operating systems, there are several options for migrating from an application that is based on code pages to one that is based on Unicode. Some of these options do have certain caveats, however.

- Create two binaries: a default compile for Windows 95, Windows 98, and Windows Me, and a Unicode compile for Windows NT, Windows 2000, and Windows XP.

Disadvantage: Maintaining two versions of your software is messy and goes against the principle of a single, worldwide binary.

- Always register as a non-Unicode application, converting to and from Unicode as needed.

Disadvantage: Since Windows does not support the creation of custom code pages, you will not be able to use scripts that are supported only through Unicode (such as those in the Indic family of languages, Armenian, and Georgian). Also, this option makes multilingual computing impossible since, when it comes to displaying, your application is always limited to the system's code page.

- Create a pure Unicode application.

Disadvantage: This works only on Windows NT, Windows 2000, and Windows XP, since only limited Unicode support is provided on legacy platforms. This is the preferred approach if you are only targeting Unicode platforms.

- Use Microsoft Layer for Unicode (MSLU). In this easy approach, you merely create a pure Unicode application, and then link the Unicows.lib file provided by the Platform SDK to your project. You will also need to ship the Unicows.dll file along with your deliverables. When a non-Unicode platform is detected at run time, MSLU essentially wraps all explicit "W" version calls made in your code and routes them to the "A" versions. This approach is by far the best solution for migrating to Unicode and for ensuring backward-compatibility. (For more information, see "MSLU".)

Best Practices

When writing Unicode code, there are many points to consider, such as when to use UTF-16, when to use UTF-8, what to use for compression, and so forth. The following are recommended practices that will help ensure you choose the best method based on the circumstance at hand.

- Choose UTF-16 as the fundamental representation of text in your application. UTF-8 should be used for application interoperability only (for example, for content sent to be displayed in browsers that do not support Unicode, or over networks and servers that do not support Unicode).

- Avoid character-by-character processing and use the existing WCHAR system interfaces and resources wherever possible. The interaction between characters in some languages requires expert knowledge of those languages. Microsoft has developed and tested the system interfaces with most of the languages represented by Unicode; unless you are a multilingual expert, it will be difficult to reproduce this support.

- If your application must run on Windows 95, Windows 98, or Windows Me, keep UTF-16 as your fundamental text representation and use MSLU on these operating systems.

- If you must support non-Unicode text, keep data internally in UTF-16 and convert to other encodings via a gateway. Use system interfaces such as MultiByteToWideChar to convert when necessary.

- Ensure your application supports Unicode characters that require two UTF-16 code units (surrogate pairs). This should be automatic if you use existing system interfaces, but will require careful development and testing when you do not. Avoid the trap of likening surrogate pairs to the older East Asian double-byte encodings (DBCS). Instead, centralize the needed string operations in a few subroutines. These subroutines should take surrogate pairs into consideration, but should also handle combining characters and other characters that require special handling. A well-written application can confine surrogate processing to just a few such routines.

- Don't use UTF-8 for compression; it actually expands the size of the data for most languages. If you need a real compression algorithm for Unicode, refer to the Unicode Consortium technical standard "Unicode Technical Standard #6: A Standard Compression Scheme for Unicode" available on their site, http://www.unicode.org.

- Don't choose UTF-32 merely to avoid surrogate processing. Data size will double and the processing benefits are elusive. If you follow the earlier advice on surrogate processing, UTF-16 should be adequate.

- Test your Unicode support with a mix of unrelated languages such as Arabic, Hindi, and Korean. For a well-written Unicode application, the system-locale setting should be irrelevant-test to verify this is the case.
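As an example of the kind of centralized routine recommended above, the sketch below counts code points in a UTF-16 buffer while treating a well-formed surrogate pair as one character. The function name is hypothetical, and the routine deliberately ignores combining characters, which a complete implementation would also need to consider.

```c
#include <stddef.h>
#include <stdint.h>

/* Count code points in a UTF-16 buffer of n code units. A high surrogate
 * followed by a low surrogate counts once; an unpaired surrogate is
 * counted as a single (ill-formed) unit rather than skipped. */
size_t utf16_count_code_points(const uint16_t *s, size_t n)
{
    size_t count = 0;
    for (size_t i = 0; i < n; i++) {
        int is_high = s[i] >= 0xD800 && s[i] <= 0xDBFF;
        if (is_high && i + 1 < n && s[i + 1] >= 0xDC00 && s[i + 1] <= 0xDFFF)
            i++;               /* consume the low surrogate with its partner */
        count++;
    }
    return count;
}
```

Keeping this logic in one place means the rest of the application can work in code units and call the routine only where a true character count matters, such as cursor movement or length limits shown to users.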

You've now seen techniques and code samples for creating Win32 Unicode applications. Unicode is also extremely useful for dealing with Web content in the global workplace and market. Knowing how to handle encoding in Web pages will help bridge the gap between the plethora of languages that are in use today within Web content.
