Hadoop Pseudo-Distributed Installation

Installation environment

- Hadoop version: 1.1.2

- Virtual machine: VirtualBox 4.3.8.0

- Server: Ubuntu Server 13.10 x64

- Java: OpenJDK 7

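Installation steps

Environment setup

Install the system

Install the virtual machine

Install the operating system

Switch the system software sources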

Switch the software sources to the oschina mirror (the default sources are too slow).

<!-- lang: shell -->
    sudo cp /etc/apt/sources.list /etc/apt/sources.list.backup
    sudo vi /etc/apt/sources.list

Paste in the following entries:

    deb http://mirrors.oschina.net/ubuntu/ saucy main restricted universe multiverse
    deb http://mirrors.oschina.net/ubuntu/ saucy-backports main restricted universe multiverse
    deb http://mirrors.oschina.net/ubuntu/ saucy-proposed main restricted universe multiverse
    deb http://mirrors.oschina.net/ubuntu/ saucy-security main restricted universe multiverse
    deb http://mirrors.oschina.net/ubuntu/ saucy-updates main restricted universe multiverse
    deb-src http://mirrors.oschina.net/ubuntu/ saucy main restricted universe multiverse
    deb-src http://mirrors.oschina.net/ubuntu/ saucy-backports main restricted universe multiverse
    deb-src http://mirrors.oschina.net/ubuntu/ saucy-proposed main restricted universe multiverse
    deb-src http://mirrors.oschina.net/ubuntu/ saucy-security main restricted universe multiverse
    deb-src http://mirrors.oschina.net/ubuntu/ saucy-updates main restricted universe multiverse

Save and exit.
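The ten entries above differ only in the entry type (`deb` or `deb-src`) and the suite suffix, so the list can also be generated instead of pasted. The following is a minimal sketch; `gen_sources` is a hypothetical helper name, and its defaults simply repeat the mirror URL and release codename used in this article.

```shell
# Print deb and deb-src entries for every suite of one Ubuntu release.
# gen_sources is an illustrative helper; $1 is the mirror URL, $2 the codename.
gen_sources() {
    mirror="${1:-http://mirrors.oschina.net/ubuntu/}"
    codename="${2:-saucy}"
    for kind in deb deb-src; do
        # The empty suffix yields the base suite (e.g. "saucy").
        for suffix in "" -backports -proposed -security -updates; do
            printf '%s %s %s%s main restricted universe multiverse\n' \
                "$kind" "$mirror" "$codename" "$suffix"
        done
    done
}

# Print the generated list to stdout for review.
gen_sources
```

Redirecting the output to a scratch file and reviewing it before copying it over `/etc/apt/sources.list` keeps the backup step from the commands above meaningful.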

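Finally, update the package index:

<!-- lang: shell -->
    sudo apt-get update

Install the JDK

<!-- lang: shell -->
    sudo apt-get install openjdk-7-jdk

Once the installation finishes, run `java -version` to confirm that it succeeded. The installation directory is /usr/lib/jvm/java-7-openjdk-amd64.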
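If the JDK ends up somewhere other than the directory named above, the location can be derived from the `java` binary itself. The helper below is a minimal sketch: `java_home_from_bin` is a hypothetical name, and it assumes the resolved binary path ends in `/jre/bin/java` or `/bin/java`, which is a common layout for OpenJDK packages but is not guaranteed.

```shell
# Derive a JAVA_HOME candidate from the fully resolved path of the java binary.
# java_home_from_bin is an illustrative helper, not part of any JDK tooling.
java_home_from_bin() {
    # Strip a trailing /jre/bin/java first, then a plain /bin/java.
    printf '%s\n' "$1" | sed -e 's|/jre/bin/java$||' -e 's|/bin/java$||'
}

# Example using the path layout assumed in this article.
java_home_from_bin /usr/lib/jvm/java-7-openjdk-amd64/jre/bin/java
```

On a live system the argument would typically come from `readlink -f "$(command -v java)"`, which follows the symlink chain behind `/usr/bin/java`.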

Install the SSH service

Install the SSH server

<!-- lang: shell -->
    sudo apt-get install ssh openssh-server

Configure passwordless login to localhost

<!-- lang: shell -->
    ssh-keygen -t rsa -P ""
    cp ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys

Test the login

<!-- lang: shell -->
    ssh localhost
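Note that the `cp` command above overwrites any existing `authorized_keys` file. Where other keys must be preserved, the key can be appended instead. The following is a sketch under that assumption; `install_pubkey` is a hypothetical helper, and the 700/600 permissions are the conventional settings that sshd accepts when its `StrictModes` option is enabled.

```shell
# Append a public key to authorized_keys only if it is not already present,
# then tighten permissions on the directory and the file.
# install_pubkey is an illustrative helper: $1 = public key file, $2 = ssh dir.
install_pubkey() {
    pubkey_file="$1"
    ssh_dir="${2:-$HOME/.ssh}"
    mkdir -p "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # -q quiet, -x whole-line match, -F fixed string, -e marks the pattern.
    if ! grep -qxF -e "$(cat "$pubkey_file")" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Demonstration in a scratch directory with a placeholder key.
demo_dir="$(mktemp -d)"
printf 'ssh-rsa AAAAexample demo@localhost\n' > "$demo_dir/id_rsa.pub"
install_pubkey "$demo_dir/id_rsa.pub" "$demo_dir/.ssh"
install_pubkey "$demo_dir/id_rsa.pub" "$demo_dir/.ssh"   # second call adds nothing
```

For the real account the call would be `install_pubkey ~/.ssh/id_rsa.pub`, after which `ssh localhost` should log in without prompting for a password.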

Create a user

Create a new group and user for running Hadoop.

Create the Hadoop group

<!-- lang: shell -->
    sudo addgroup hadoop4group

Create the Hadoop user

<!-- lang: shell -->
    sudo adduser --ingroup hadoop4group hadoop4user

Grant the Hadoop user sudo privileges by opening the /etc/sudoers file

<!-- lang: shell -->
    sudo vi /etc/sudoers

Below the line `root ALL=(ALL:ALL) ALL`, add the following entry:

<!-- lang: shell -->
    hadoop4user ALL=(ALL:ALL) ALL

Hadoop installation

Extract Hadoop under /usr/local

<!-- lang: shell -->
    sudo cp hadoop-1.1.2.tar.gz /usr/local/
    cd /usr/local
    sudo tar -zxf hadoop-1.1.2.tar.gz
    sudo mv hadoop-1.1.2 hadoop

Set the ownership of the hadoop directory (`chown` expects `user:group`)

<!-- lang: shell -->
    sudo chown -R hadoop4user:hadoop4group hadoop

Configure conf/hadoop-env.sh

Add the JDK path to hadoop-env.sh:

<!-- lang: shell -->
    sudo vi hadoop/conf/hadoop-env.sh
    # add the following line:
    export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

Open the conf/core-site.xml file

<!-- lang: shell -->
    sudo vi hadoop/conf/core-site.xml

Change it to the following:

<!-- lang: xml -->
    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://192.168.1.121:9000</value>
      </property>
    </configuration>

Open the conf/mapred-site.xml file

<!-- lang: shell -->
    sudo vi hadoop/conf/mapred-site.xml

Change it to the following:

<!-- lang: xml -->
    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>192.168.1.121:9001</value>
      </property>
    </configuration>

Open the conf/hdfs-site.xml file

<!-- lang: shell -->
    sudo vi hadoop/conf/hdfs-site.xml

Change it to the following:

<!-- lang: xml -->
    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.permissions</name>
        <value>false</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/hadoop4user/hadoop</value>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/home/hadoop4user/hadoop/data</value>
      </property>
      <property>
        <name>dfs.name.dir</name>
        <value>/home/hadoop4user/hadoop/name</value>
      </property>
    </configuration>

Note: create the directories referenced in the configuration above and set their permissions to 755.
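The directory step in the note can be scripted. The sketch below creates the three paths named in hdfs-site.xml and sets each to mode 755; `make_hadoop_dirs` is a hypothetical helper, and its default base path is simply the `hadoop.tmp.dir` value from the configuration above.

```shell
# Create the Hadoop base, data, and name directories with mode 755.
# make_hadoop_dirs is an illustrative helper; $1 overrides the base directory.
make_hadoop_dirs() {
    base="${1:-/home/hadoop4user/hadoop}"
    for dir in "$base" "$base/data" "$base/name"; do
        mkdir -p "$dir"
        # Set the mode explicitly so the result does not depend on the umask.
        chmod 755 "$dir"
    done
}

# Demonstration against a scratch directory rather than the real home.
demo_base="$(mktemp -d)/hadoop"
make_hadoop_dirs "$demo_base"
ls -ld "$demo_base" "$demo_base/data" "$demo_base/name"
```

Running it as the Hadoop user (hadoop4user in this article) keeps the directories owned by the account that starts the daemons.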

Running Hadoop

Change into the Hadoop directory and format the HDFS file system

<!-- lang: shell -->
    cd /usr/local/hadoop/
    bin/hadoop namenode -format

Start Hadoop

<!-- lang: shell -->
    bin/start-all.sh

Use the jps tool to check that the Hadoop daemons are running

<!-- lang: shell -->
    $ jps
    4590 TaskTracker
    4368 JobTracker
    4270 SecondaryNameNode
    4642 Jps
    4028 DataNode
    3801 NameNode

You can also use the netstat command to check whether Hadoop is running normally

<!-- lang: shell -->
    $ sudo netstat -plten | grep java
    tcp  0  0 0.0.0.0:50070    0.0.0.0:*  LISTEN  1001  9236  2471/java
    tcp  0  0 0.0.0.0:50010    0.0.0.0:*  LISTEN  1001  9998  2628/java
    tcp  0  0 0.0.0.0:48159    0.0.0.0:*  LISTEN  1001  8496  2628/java
    tcp  0  0 0.0.0.0:53121    0.0.0.0:*  LISTEN  1001  9228  2857/java
    tcp  0  0 127.0.0.1:54310  0.0.0.0:*  LISTEN  1001  8143  2471/java
    tcp  0  0 127.0.0.1:54311  0.0.0.0:*  LISTEN  1001  9230  2857/java
    tcp  0  0 0.0.0.0:59305    0.0.0.0:*  LISTEN  1001  8141  2471/java
    tcp  0  0 0.0.0.0:50060    0.0.0.0:*  LISTEN  1001  9857  3005/java
    tcp  0  0 0.0.0.0:49900    0.0.0.0:*  LISTEN  1001  9037  2785/java
    tcp  0  0 0.0.0.0:50030    0.0.0.0:*  LISTEN  1001  9773  2857/java

You can go to the logs directory to see how the startup went

<!-- lang: shell -->
    tail -f conf/..log

Check in a browser whether startup succeeded

http://192.168.1.121:50030/

http://192.168.1.121:50070/
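The jps check in this section can be turned into a small script that reports which of the five expected daemons are absent. This is a sketch only: `missing_daemons` is a hypothetical helper that reads jps-style output ("PID Name" per line) from standard input, and the daemon list is the one shown in the jps output above.

```shell
# Read jps output on stdin and print the name of each expected daemon
# that does not appear in it. missing_daemons is an illustrative helper.
missing_daemons() {
    jps_output="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # Compare the second field exactly, so "SecondaryNameNode"
        # is never mistaken for "NameNode".
        printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$daemon"
    done
}

# Demonstration with a sample in which only two daemons are running.
printf '4270 SecondaryNameNode\n4028 DataNode\n' | missing_daemons
```

On the Hadoop machine the call would be `jps | missing_daemons`; empty output means all five daemons were found.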

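Remote Hadoop development from Eclipse on Windows

The Eclipse plugin used here was found through a Google search.

To install the plugin, copy the downloaded jar into Eclipse's plugins directory.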

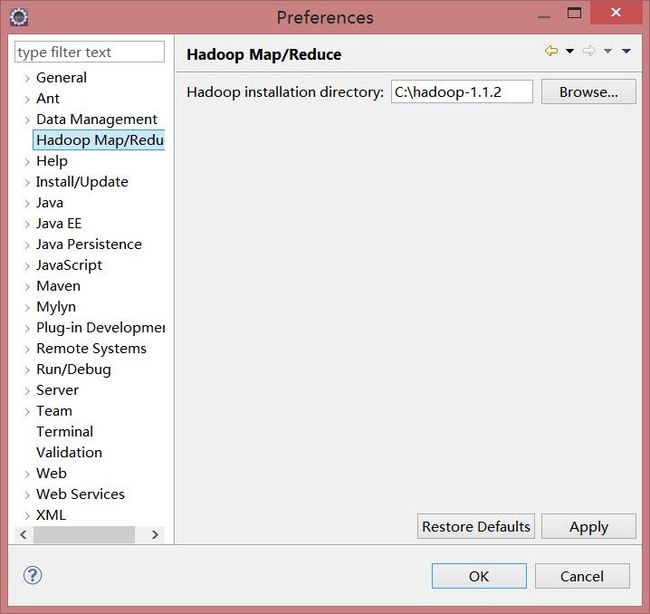

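Configure the Hadoop installation directory

This directory is simply the location where the Hadoop distribution you downloaded is stored.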

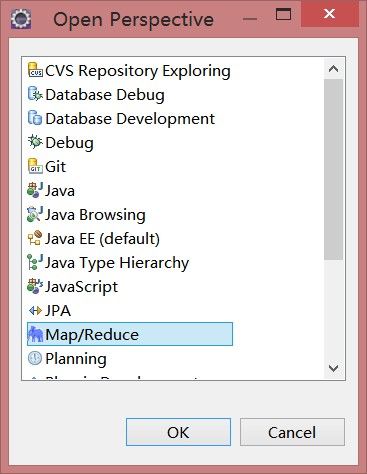

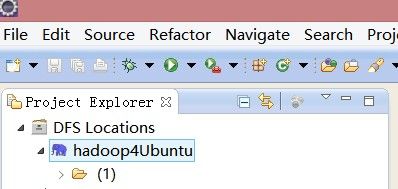

- Switch to the "Map/Reduce" perspective

From the "Window" menu, choose "Open Perspective -> Other". In the dialog that opens, select "Map/Reduce" to switch to the "Map/Reduce" perspective.

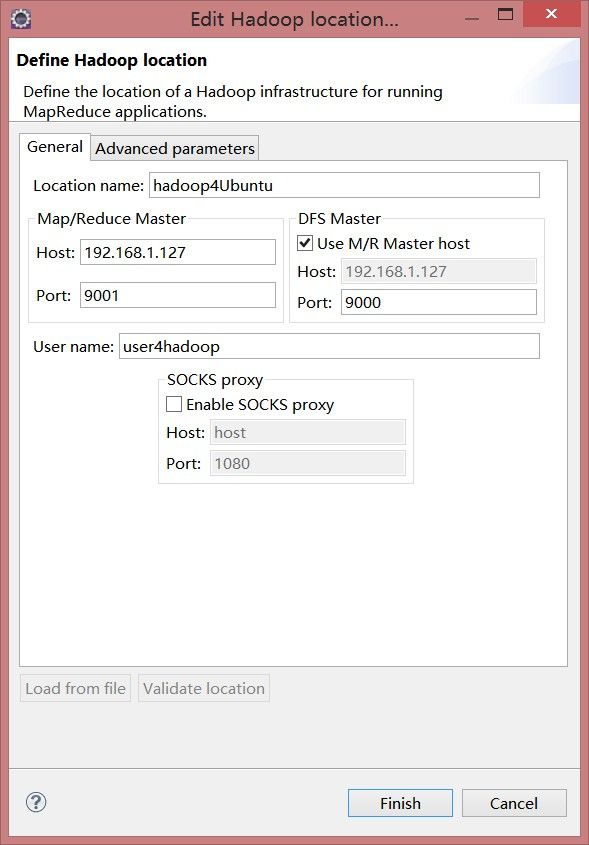

- Configure the Map/Reduce Locations in Eclipse

Click to create a new Hadoop location

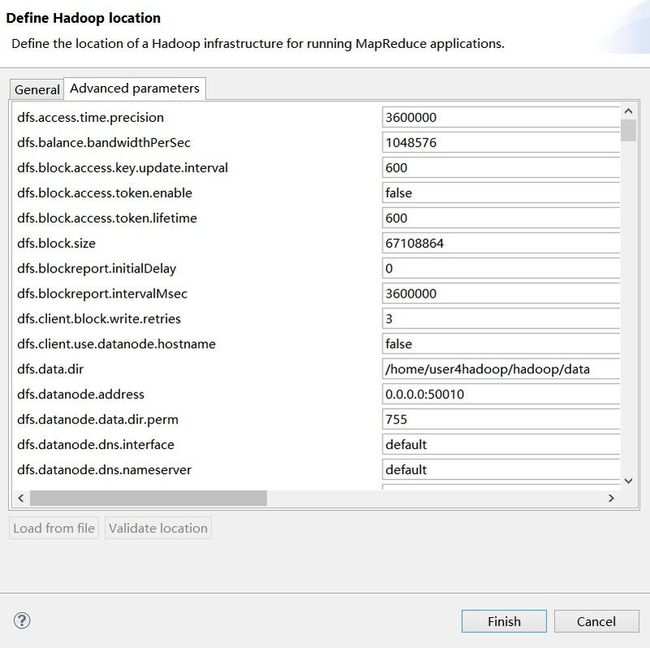

When configuring the Hadoop location, you can refer to the plugin's XML configuration file under .metadata\.plugins\org.apache.hadoop.eclipse\locations in the Eclipse workspace directory:

<?xml version="1.0" encoding="UTF-8" standalone="no"?><configuration>

<property><!--Loaded from Unknown--><name>fs.s3n.impl</name><value>org.apache.hadoop.fs.s3native.NativeS3FileSystem</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.counters.max</name><value>120</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.cache.levels</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>dfs.client.use.datanode.hostname</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>hadoop.tmp.dir</name><value>/home/user4hadoop/hadoop</value></property>

<property><!--Loaded from Unknown--><name>hadoop.native.lib</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>map.sort.class</name><value>org.apache.hadoop.util.QuickSort</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.decommission.nodes.per.interval</name><value>5</value></property>

<property><!--Loaded from Unknown--><name>dfs.https.need.client.auth</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>ipc.client.idlethreshold</name><value>4000</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.data.dir.perm</name><value>755</value></property>

<property><!--Loaded from Unknown--><name>mapred.system.dir</name><value>/home/user4hadoop/hadoop/tmp/mapred/system</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.persist.jobstatus.hours</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.address</name><value>0.0.0.0:50010</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.logging.level</name><value>info</value></property>

<property><!--Loaded from Unknown--><name>dfs.block.access.token.enable</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>io.skip.checksum.errors</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>fs.default.name</name><value>hdfs://192.168.1.127:9000/</value></property>

<property><!--Loaded from Unknown--><name>mapred.cluster.reduce.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>mapred.child.tmp</name><value>./tmp</value></property>

<property><!--Loaded from Unknown--><name>fs.har.impl.disable.cache</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>dfs.safemode.threshold.pct</name><value>0.999f</value></property>

<property><!--Loaded from Unknown--><name>mapred.skip.reduce.max.skip.groups</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.handler.count</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>dfs.blockreport.initialDelay</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.heartbeats.in.second</name><value>100</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.dns.nameserver</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>io.sort.factor</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.timeout</name><value>600000</value></property>

<property><!--Loaded from Unknown--><name>mapred.max.tracker.failures</name><value>4</value></property>

<property><!--Loaded from Unknown--><name>hadoop.rpc.socket.factory.class.default</name><value>org.apache.hadoop.net.StandardSocketFactory</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.jobhistory.lru.cache.size</name><value>5</value></property>

<property><!--Loaded from Unknown--><name>fs.hdfs.impl</name><value>org.apache.hadoop.hdfs.DistributedFileSystem</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.jobtracker.port</name><value>9001</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.stale.datanode.interval</name><value>30000</value></property>

<property><!--Loaded from Unknown--><name>dfs.block.access.key.update.interval</name><value>600</value></property>

<property><!--Loaded from Unknown--><name>mapred.skip.map.auto.incr.proc.count</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.complete.cancel.delegation.tokens</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>io.mapfile.bloom.size</name><value>1048576</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.reduce.shuffle.connect.timeout</name><value>180000</value></property>

<property><!--Loaded from Unknown--><name>dfs.safemode.extension</name><value>30000</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.blacklist.fault-timeout-window</name><value>180</value></property>

<property><!--Loaded from Unknown--><name>tasktracker.http.threads</name><value>40</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.shuffle.merge.percent</name><value>0.66</value></property>

<property><!--Loaded from Unknown--><name>fs.ftp.impl</name><value>org.apache.hadoop.fs.ftp.FTPFileSystem</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.kerberos.internal.spnego.principal</name><value>${dfs.web.authentication.kerberos.principal}</value></property>

<property><!--Loaded from Unknown--><name>mapred.output.compress</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>io.bytes.per.checksum</name><value>512</value></property>

<property><!--Loaded from Unknown--><name>mapred.combine.recordsBeforeProgress</name><value>10000</value></property>

<property><!--Loaded from Unknown--><name>mapred.healthChecker.script.timeout</name><value>600000</value></property>

<property><!--Loaded from Unknown--><name>topology.node.switch.mapping.impl</name><value>org.apache.hadoop.net.ScriptBasedMapping</value></property>

<property><!--Loaded from Unknown--><name>dfs.https.server.keystore.resource</name><value>ssl-server.xml</value></property>

<property><!--Loaded from Unknown--><name>mapred.reduce.slowstart.completed.maps</name><value>0.05</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.safemode.min.datanodes</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.reduce.max.attempts</name><value>4</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.ifile.readahead.bytes</name><value>4194304</value></property>

<property><!--Loaded from Unknown--><name>fs.ramfs.impl</name><value>org.apache.hadoop.fs.InMemoryFileSystem</value></property>

<property><!--Loaded from Unknown--><name>dfs.block.access.token.lifetime</name><value>600</value></property>

<property><!--Loaded from Unknown--><name>dfs.name.edits.dir</name><value>/home/user4hadoop/hadoop/name</value></property>

<property><!--Loaded from Unknown--><name>mapred.skip.map.max.skip.records</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.cluster.map.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.group.mapping</name><value>org.apache.hadoop.security.ShellBasedUnixGroupsMapping</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.persist.jobstatus.dir</name><value>/jobtracker/jobsInfo</value></property>

<property><!--Loaded from Unknown--><name>dfs.block.size</name><value>67108864</value></property>

<property><!--Loaded from Unknown--><name>fs.s3.buffer.dir</name><value>${hadoop.tmp.dir}/s3</value></property>

<property><!--Loaded from Unknown--><name>job.end.retry.attempts</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>fs.file.impl</name><value>org.apache.hadoop.fs.LocalFileSystem</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.max.xcievers</name><value>4096</value></property>

<property><!--Loaded from Unknown--><name>mapred.local.dir.minspacestart</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.output.compression.type</name><value>RECORD</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.ipc.address</name><value>0.0.0.0:50020</value></property>

<property><!--Loaded from Unknown--><name>dfs.permissions</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>topology.script.number.args</name><value>100</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.counters.groups.max</name><value>50</value></property>

<property><!--Loaded from Unknown--><name>io.mapfile.bloom.error.rate</name><value>0.005</value></property>

<property><!--Loaded from Unknown--><name>mapred.cluster.max.reduce.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>mapred.max.tracker.blacklists</name><value>4</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.profile.maps</name><value>0-2</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.https.address</name><value>0.0.0.0:50475</value></property>

<property><!--Loaded from Unknown--><name>mapred.userlog.retain.hours</name><value>24</value></property>

<property><!--Loaded from Unknown--><name>dfs.secondary.http.address</name><value>0.0.0.0:50090</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.replication.work.multiplier.per.iteration</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>dfs.replication.max</name><value>512</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.persist.jobstatus.active</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.authorization</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>local.cache.size</name><value>10737418240</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.jobtracker.host</name><value>192.168.1.127</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.delegation.token.renew-interval</name><value>86400000</value></property>

<property><!--Loaded from Unknown--><name>mapred.min.split.size</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.map.tasks</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>mapred.child.java.opts</name><value>-Xmx200m</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.user.name</name><value>user4hadoop</value></property>

<property><!--Loaded from Unknown--><name>dfs.https.client.keystore.resource</name><value>ssl-client.xml</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.queue.name</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>dfs.https.address</name><value>0.0.0.0:50470</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.retiredjobs.cache.size</name><value>1000</value></property>

<property><!--Loaded from Unknown--><name>dfs.balance.bandwidthPerSec</name><value>1048576</value></property>

<property><!--Loaded from Unknown--><name>ipc.server.listen.queue.size</name><value>128</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.invalidate.work.pct.per.iteration</name><value>0.32f</value></property>

<property><!--Loaded from Unknown--><name>job.end.retry.interval</name><value>30000</value></property>

<property><!--Loaded from Unknown--><name>mapred.inmem.merge.threshold</name><value>1000</value></property>

<property><!--Loaded from Unknown--><name>mapred.skip.attempts.to.start.skipping</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.tasktracker.outofband.heartbeat.damper</name><value>1000000</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.use-weak-http-crypto</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>fs.checkpoint.dir</name><value>/home/user4hadoop/hadoop/dfs/namesecondary</value></property>

<property><!--Loaded from Unknown--><name>mapred.reduce.tasks</name><value>1</value></property>

<property><!--Loaded from Unknown--><name>mapred.merge.recordsBeforeProgress</name><value>10000</value></property>

<property><!--Loaded from Unknown--><name>mapred.userlog.limit.kb</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.reduce.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>dfs.max.objects</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>webinterface.private.actions</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.token.service.use_ip</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>io.sort.spill.percent</name><value>0.80</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.shuffle.input.buffer.percent</name><value>0.70</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.socks.proxy.port</name><value>1080</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.dns.nameserver</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>mapred.map.tasks.speculative.execution</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.type</name><value>simple</value></property>

<property><!--Loaded from Unknown--><name>hadoop.util.hash.type</name><value>murmur</value></property>

<property><!--Loaded from Unknown--><name>dfs.blockreport.intervalMsec</name><value>3600000</value></property>

<property><!--Loaded from Unknown--><name>mapred.map.max.attempts</name><value>4</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.acl-view-job</name><value> </value></property>

<property><!--Loaded from Unknown--><name>mapreduce.ifile.readahead</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>dfs.client.block.write.retries</name><value>3</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.handler.count</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.reduce.shuffle.read.timeout</name><value>180000</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.expiry.interval</name><value>600000</value></property>

<property><!--Loaded from Unknown--><name>dfs.secondary.namenode.kerberos.internal.spnego.principal</name><value>${dfs.web.authentication.kerberos.principal}</value></property>

<property><!--Loaded from Unknown--><name>dfs.https.enable</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.maxtasks.per.job</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.job.history.block.size</name><value>3145728</value></property>

<property><!--Loaded from Unknown--><name>keep.failed.task.files</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.use.datanode.hostname</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.failed.volumes.tolerated</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.profile.reduces</name><value>0-2</value></property>

<property><!--Loaded from Unknown--><name>ipc.client.tcpnodelay</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapred.output.compression.codec</name><value>org.apache.hadoop.io.compress.DefaultCodec</value></property>

<property><!--Loaded from Unknown--><name>io.map.index.skip</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.token.validity</name><value>36000</value></property>

<property><!--Loaded from Unknown--><name>ipc.server.tcpnodelay</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.blacklist.fault-bucket-width</name><value>15</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.delegation.key.update-interval</name><value>86400000</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.map.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>dfs.default.chunk.view.size</name><value>32768</value></property>

<property><!--Loaded from Unknown--><name>hadoop.logfile.size</name><value>10000000</value></property>

<property><!--Loaded from Unknown--><name>mapred.reduce.tasks.speculative.execution</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.tasktracker.outofband.heartbeat</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.reduce.input.limit</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.du.reserved</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.authentication</name><value>simple</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.socks.proxy.host</name><value>host</value></property>

<property><!--Loaded from Unknown--><name>fs.checkpoint.period</name><value>3600</value></property>

<property><!--Loaded from Unknown--><name>dfs.web.ugi</name><value>webuser,webgroup</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.reuse.jvm.num.tasks</name><value>1</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.completeuserjobs.maximum</name><value>100</value></property>

<property><!--Loaded from Unknown--><name>dfs.df.interval</name><value>60000</value></property>

<property><!--Loaded from Unknown--><name>dfs.data.dir</name><value>/home/user4hadoop/hadoop/data</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.tracker.task-controller</name><value>org.apache.hadoop.mapred.DefaultTaskController</value></property>

<property><!--Loaded from Unknown--><name>fs.s3.maxRetries</name><value>4</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.dns.interface</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>mapred.cluster.max.map.memory.mb</name><value>-1</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.reduce.shuffle.maxfetchfailures</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.acl-modify-job</name><value> </value></property>

<property><!--Loaded from Unknown--><name>dfs.permissions.supergroup</name><value>supergroup</value></property>

<property><!--Loaded from Unknown--><name>mapred.local.dir</name><value>/home/user4hadoop/hadoop/tmp/mapred/local</value></property>

<property><!--Loaded from Unknown--><name>fs.hftp.impl</name><value>org.apache.hadoop.hdfs.HftpFileSystem</value></property>

<property><!--Loaded from Unknown--><name>fs.trash.interval</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>fs.s3.sleepTimeSeconds</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>dfs.replication.min</name><value>1</value></property>

<property><!--Loaded from Unknown--><name>mapred.submit.replication</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>fs.har.impl</name><value>org.apache.hadoop.fs.HarFileSystem</value></property>

<property><!--Loaded from Unknown--><name>mapred.map.output.compression.codec</name><value>org.apache.hadoop.io.compress.DefaultCodec</value></property>

<property><!--Loaded from Unknown--><name>hadoop.relaxed.worker.version.check</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.dns.interface</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.decommission.interval</name><value>30</value></property>

<property><!--Loaded from Unknown--><name>dfs.http.address</name><value>0.0.0.0:50070</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.namenode.port</name><value>9000</value></property>

<property><!--Loaded from Unknown--><name>dfs.heartbeat.interval</name><value>3</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker</name><value>192.168.1.127:9001</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.signature.secret.file</name><value>${user.home}/hadoop-http-auth-signature-secret</value></property>

<property><!--Loaded from Unknown--><name>io.seqfile.sorter.recordlimit</name><value>1000000</value></property>

<property><!--Loaded from Unknown--><name>dfs.name.dir</name><value>/home/user4hadoop/hadoop/name</value></property>

<property><!--Loaded from Unknown--><name>mapred.line.input.format.linespermap</name><value>1</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.taskScheduler</name><value>org.apache.hadoop.mapred.JobQueueTaskScheduler</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.http.address</name><value>0.0.0.0:50075</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.masters.colocate</name><value>yes</value></property>

<property><!--Loaded from Unknown--><name>fs.webhdfs.impl</name><value>org.apache.hadoop.hdfs.web.WebHdfsFileSystem</value></property>

<property><!--Loaded from Unknown--><name>mapred.local.dir.minspacekill</name><value>0</value></property>

<property><!--Loaded from Unknown--><name>dfs.replication.interval</name><value>3</value></property>

<property><!--Loaded from Unknown--><name>io.sort.record.percent</name><value>0.05</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.kerberos.principal</name><value>HTTP/localhost@LOCALHOST</value></property>

<property><!--Loaded from Unknown--><name>fs.kfs.impl</name><value>org.apache.hadoop.fs.kfs.KosmosFileSystem</value></property>

<property><!--Loaded from Unknown--><name>mapred.temp.dir</name><value>${hadoop.tmp.dir}/mapred/temp</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.reduce.tasks.maximum</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>dfs.replication</name><value>3</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.socks.proxy.enable</name><value>no</value></property>

<property><!--Loaded from Unknown--><name>fs.checkpoint.edits.dir</name><value>/home/user4hadoop/hadoop/dfs/namesecondary</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.tasks.sleeptime-before-sigkill</name><value>5000</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.location.name</name><value>hadoop4Ubuntu</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.reduce.input.buffer.percent</name><value>0.0</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.indexcache.mb</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.split.metainfo.maxsize</name><value>10000000</value></property>

<property><!--Loaded from Unknown--><name>mapred.skip.reduce.auto.incr.proc.count</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>hadoop.logfile.count</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>io.seqfile.compress.blocksize</name><value>1000000</value></property>

<property><!--Loaded from Unknown--><name>fs.s3.block.size</name><value>67108864</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.taskmemorymanager.monitoring-interval</name><value>5000</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.simple.anonymous.allowed</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>mapred.queue.default.state</name><value>RUNNING</value></property>

<property><!--Loaded from Unknown--><name>mapred.acls.enabled</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.jobtracker.staging.root.dir</name><value>/home/user4hadoop/hadoop/tmp/mapred/staging</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.check.stale.datanode</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>mapred.queue.names</name><value>default</value></property>

<property><!--Loaded from Unknown--><name>dfs.access.time.precision</name><value>3600000</value></property>

<property><!--Loaded from Unknown--><name>fs.hsftp.impl</name><value>org.apache.hadoop.hdfs.HsftpFileSystem</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.tracker.http.address</name><value>0.0.0.0:50060</value></property>

<property><!--Loaded from Unknown--><name>mapred.disk.healthChecker.interval</name><value>60000</value></property>

<property><!--Loaded from Unknown--><name>mapred.reduce.parallel.copies</name><value>5</value></property>

<property><!--Loaded from Unknown--><name>io.seqfile.lazydecompress</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>eclipse.plug-in.namenode.host</name><value>192.168.1.127</value></property>

<property><!--Loaded from Unknown--><name>io.sort.mb</name><value>100</value></property>

<property><!--Loaded from Unknown--><name>ipc.client.connection.maxidletime</name><value>10000</value></property>

<property><!--Loaded from Unknown--><name>mapred.compress.map.output</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>hadoop.security.uid.cache.secs</name><value>14400</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.tracker.report.address</name><value>127.0.0.1:0</value></property>

<property><!--Loaded from Unknown--><name>mapred.healthChecker.interval</name><value>60000</value></property>

<property><!--Loaded from Unknown--><name>ipc.client.kill.max</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>ipc.client.connect.max.retries</name><value>10</value></property>

<property><!--Loaded from Unknown--><name>fs.s3.impl</name><value>org.apache.hadoop.fs.s3.S3FileSystem</value></property>

<property><!--Loaded from Unknown--><name>hadoop.socks.server</name><value>host:1080</value></property>

<property><!--Loaded from Unknown--><name>mapred.user.jobconf.limit</name><value>5242880</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.counters.group.name.max</name><value>128</value></property>

<property><!--Loaded from Unknown--><name>mapred.job.tracker.http.address</name><value>0.0.0.0:50030</value></property>

<property><!--Loaded from Unknown--><name>io.file.buffer.size</name><value>4096</value></property>

<property><!--Loaded from Unknown--><name>mapred.jobtracker.restart.recover</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>io.serializations</name><value>org.apache.hadoop.io.serializer.WritableSerialization</value></property>

<property><!--Loaded from Unknown--><name>dfs.datanode.handler.count</name><value>3</value></property>

<property><!--Loaded from Unknown--><name>mapred.task.profile</name><value>false</value></property>

<property><!--Loaded from Unknown--><name>dfs.replication.considerLoad</name><value>true</value></property>

<property><!--Loaded from Unknown--><name>jobclient.output.filter</name><value>FAILED</value></property>

<property><!--Loaded from Unknown--><name>dfs.namenode.delegation.token.max-lifetime</name><value>604800000</value></property>

<property><!--Loaded from Unknown--><name>hadoop.http.authentication.kerberos.keytab</name><value>${user.home}/hadoop.keytab</value></property>

<property><!--Loaded from Unknown--><name>mapred.tasktracker.map.tasks.maximum</name><value>2</value></property>

<property><!--Loaded from Unknown--><name>mapreduce.job.counters.counter.name.max</name><value>64</value></property>

<property><!--Loaded from Unknown--><name>io.compression.codecs</name><value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec</value></property>

<property><!--Loaded from Unknown--><name>fs.checkpoint.size</name><value>67108864</value></property>

</configuration>

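With this in place, Eclipse should be able to connect to Hadoop remotely.

You can check whether the connection succeeded:

Try uploading a file. If it reports an error such as:

org.apache.hadoop.security.AccessControlException: Permission denied: user=administrator, access=EXECUTE, inode="job_201111031322_0003":heipark:supergroup:rwx-.

check whether conf/hdfs-site.xml contains the following setting:

the dfs.permissions property set to false (the default is true). See the Hadoop installation and configuration steps above.

Note: in the configuration files under conf, do not use localhost; use the machine's LAN IP address instead.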
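The localhost rule matters because a daemon configured with a loopback address is generally not reachable from another machine, such as the Windows host running Eclipse. A quick check can be sketched as follows. Both `get_prop` and `uses_loopback` are hypothetical helpers, and the `sed` expression is a rough text match rather than a real XML parser (dots in the property name act as regex wildcards), so treat it as a convenience check only.

```shell
# Print the <value> of one property from a Hadoop XML config file.
# get_prop is an illustrative helper: $1 = config file, $2 = property name.
get_prop() {
    # Join the file into one line so <name> and <value> may sit on separate lines.
    tr -d '\n' < "$1" |
        sed -n 's|.*<name>'"$2"'</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p'
}

# Succeed (exit status 0) if the property value refers to a loopback address.
uses_loopback() {
    case "$(get_prop "$1" "$2")" in
        *localhost*|*127.0.0.1*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage sketch (paths follow the install location used in this article):
#   uses_loopback /usr/local/hadoop/conf/core-site.xml fs.default.name &&
#       echo "fs.default.name points at a loopback address"
#   uses_loopback /usr/local/hadoop/conf/mapred-site.xml mapred.job.tracker &&
#       echo "mapred.job.tracker points at a loopback address"
```

A property that is missing from the file yields empty output from `get_prop`, which `uses_loopback` treats as "not loopback".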