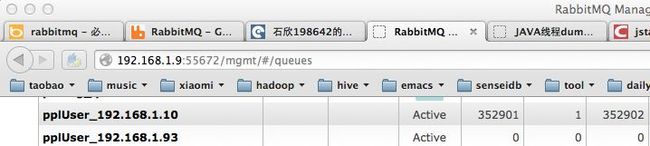

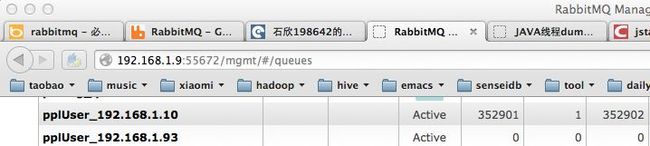

今天发现rabbitMQ消息堆积

发现有三十多万的消息堆积在10的queue里没有被消费

记录一下查看问题的步骤:

1 jps

找出程序的PID

2 jstack ${PID}

查看线程dump,发现rabbitMQ的consumer worker线程block住了:

"Thread-33" prio=10 tid=0x00002aaac8013000 nid=0x3264 waiting for monitor entry [0x00000000437e4000]

java.lang.Thread.State: BLOCKED (on object monitor)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3525)

- waiting to lock <0x000000072039ce18> (a java.lang.Object)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3505)

at com.xiaomi.miliao.mt.fulltextindex.UserIndexUpdater.updatePlUserIndex(UserIndexUpdater.java:229)

- locked <0x0000000711ab59c8> (a java.lang.Object)

at com.xiaomi.miliao.mt.fulltextindex.SearcherDelegate.updatePlUserIndex(SearcherDelegate.java:522)

at com.xiaomi.miliao.mt.fulltextindex.MessageQueueDelegate$PlUserIndexMessageHandler.handleMessage(MessageQueueDelegate.java:342)

at com.xiaomi.miliao.rabbitmq.ConsumerWorker.run(ConsumerWorker.java:107)

at java.lang.Thread.run(Thread.java:662)

这个线程的状态是wating for monitor entry,但是它在等待这个锁(0x000000072039ce18)已经被下面的这个线程占有了,而且下面的这个线程一直在run,没有返回。根据它的栈信息,查看java.io.FileDescriptor.sync方法,怀疑是系统IO很繁忙,一直没有返回。

"Thread-24" prio=10 tid=0x00002aaac8007000 nid=0x3257 runnable [0x00000000431d8000]

java.lang.Thread.State: RUNNABLE

at java.io.FileDescriptor.sync(Native Method)

at org.apache.lucene.store.FSDirectory.sync(FSDirectory.java:321)

at org.apache.lucene.index.IndexWriter.startCommit(IndexWriter.java:4801)

at org.apache.lucene.index.IndexWriter.prepareCommit(IndexWriter.java:3461)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3534)

- locked <0x000000072039ce18> (a java.lang.Object)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3505)

at com.xiaomi.miliao.mt.fulltextindex.UserIndexUpdater.updatePlUserIndex(UserIndexUpdater.java:229)

- locked <0x0000000711d6b558> (a java.lang.Object)

at com.xiaomi.miliao.mt.fulltextindex.SearcherDelegate.updatePlUserIndex(SearcherDelegate.java:522)

at com.xiaomi.miliao.mt.fulltextindex.MessageQueueDelegate$PlUserIndexMessageHandler.handleMessage(MessageQueueDelegate.java:342)

at com.xiaomi.miliao.rabbitmq.ConsumerWorker.run(ConsumerWorker.java:107)

at java.lang.Thread.run(Thread.java:662)

3 ssh到指定服务器,查看cpu负载

top -d 5 五秒刷新一次,然后按1,查看所有的cpu的情况,没有发现异常,但是发现我的服务确实很占cpu啊,给力!lucene确实需要优化,赶紧切换到sensei吧

[root@MT1-10 logs]# top -d 5

top - 20:13:51 up 439 days, 16:15, 7 users, load average: 4.56, 4.82, 4.84

Tasks: 244 total, 1 running, 243 sleeping, 0 stopped, 0 zombie

Cpu0 : 17.6%us, 1.6%sy, 0.0%ni, 73.6%id, 7.2%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu1 : 6.6%us, 1.4%sy, 0.0%ni, 6.0%id, 85.8%wa, 0.0%hi, 0.2%si, 0.0%st

Cpu2 : 5.6%us, 0.8%sy, 0.0%ni, 84.1%id, 9.6%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu3 : 4.4%us, 0.4%sy, 0.0%ni, 95.2%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu4 : 3.0%us, 0.6%sy, 0.0%ni, 93.8%id, 2.6%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu5 : 2.2%us, 0.6%sy, 0.0%ni, 94.4%id, 2.8%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu6 : 2.2%us, 0.2%sy, 0.0%ni, 95.6%id, 2.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu7 : 15.0%us, 2.4%sy, 0.0%ni, 70.1%id, 12.2%wa, 0.0%hi, 0.4%si, 0.0%st

Cpu8 : 5.4%us, 0.6%sy, 0.0%ni, 91.6%id, 2.2%wa, 0.0%hi, 0.2%si, 0.0%st

Cpu9 : 5.2%us, 1.2%sy, 0.0%ni, 66.9%id, 26.7%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu10 : 2.6%us, 0.6%sy, 0.0%ni, 92.4%id, 4.4%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu11 : 6.2%us, 0.8%sy, 0.0%ni, 93.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu12 : 2.4%us, 0.4%sy, 0.0%ni, 95.4%id, 1.8%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu13 : 11.8%us, 1.2%sy, 0.0%ni, 84.7%id, 2.4%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu14 : 2.2%us, 0.2%sy, 0.0%ni, 97.6%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu15 : 35.8%us, 5.2%sy, 0.0%ni, 41.8%id, 12.8%wa, 0.2%hi, 4.2%si, 0.0%st

Mem: 32956180k total, 32849076k used, 107104k free, 547628k buffers

Swap: 6144820k total, 228k used, 6144592k free, 18353516k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

12681 root 18 0 5168m 2.4g 13m S 129.3 7.7 1675:27 java

4 使用iostat -d -x 1 100,查看系统io的使用情况(-d 是查看disk, -c是查看cpu), -x是查看更多信息,1是1秒刷新一次,100是查看一百次

[root@MT1-10 logs]# iostat -d -x 1 1

Linux 2.6.18-194.el5 (MT1-10) 10/09/2012

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

cciss/c0d0 0.00 776.00 3.00 259.00 24.00 11360.00 43.45 51.73 352.21 3.82 100.10

cciss/c0d0p1

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p2

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p3

0.00 23.00 0.00 3.00 0.00 208.00 69.33 0.18 61.00 61.00 18.30

cciss/c0d0p4

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p5

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p6

0.00 753.00 3.00 256.00 24.00 11152.00 43.15 51.55 355.59 3.86 100.10

具体名咯解析可查看

http://wenku.baidu.com/view/5991f2781711cc7931b7168c.html

发现cciss/c0d0p6的%util达到了100%,太繁忙了。

平均IO请求的队列长度avgqu-sz是51.55

平均使用的扇区数量是43.15

平均每个IO的等待时间的355.59毫秒

说明这快磁盘已经出现性能瓶颈了!

使用iopp可查看每个进程的IO情况!

记录一下查看问题的步骤:

1 jps

找出程序的PID

2 jstack ${PID}

查看线程dump,发现rabbitMQ的consumer worker线程block住了:

"Thread-33" prio=10 tid=0x00002aaac8013000 nid=0x3264 waiting for monitor entry [0x00000000437e4000]

java.lang.Thread.State: BLOCKED (on object monitor)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3525)

- waiting to lock <0x000000072039ce18> (a java.lang.Object)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3505)

at com.xiaomi.miliao.mt.fulltextindex.UserIndexUpdater.updatePlUserIndex(UserIndexUpdater.java:229)

- locked <0x0000000711ab59c8> (a java.lang.Object)

at com.xiaomi.miliao.mt.fulltextindex.SearcherDelegate.updatePlUserIndex(SearcherDelegate.java:522)

at com.xiaomi.miliao.mt.fulltextindex.MessageQueueDelegate$PlUserIndexMessageHandler.handleMessage(MessageQueueDelegate.java:342)

at com.xiaomi.miliao.rabbitmq.ConsumerWorker.run(ConsumerWorker.java:107)

at java.lang.Thread.run(Thread.java:662)

这个线程的状态是wating for monitor entry,但是它在等待这个锁(0x000000072039ce18)已经被下面的这个线程占有了,而且下面的这个线程一直在run,没有返回。根据它的栈信息,查看java.io.FileDescriptor.sync方法,怀疑是系统IO很繁忙,一直没有返回。

"Thread-24" prio=10 tid=0x00002aaac8007000 nid=0x3257 runnable [0x00000000431d8000]

java.lang.Thread.State: RUNNABLE

at java.io.FileDescriptor.sync(Native Method)

at org.apache.lucene.store.FSDirectory.sync(FSDirectory.java:321)

at org.apache.lucene.index.IndexWriter.startCommit(IndexWriter.java:4801)

at org.apache.lucene.index.IndexWriter.prepareCommit(IndexWriter.java:3461)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3534)

- locked <0x000000072039ce18> (a java.lang.Object)

at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3505)

at com.xiaomi.miliao.mt.fulltextindex.UserIndexUpdater.updatePlUserIndex(UserIndexUpdater.java:229)

- locked <0x0000000711d6b558> (a java.lang.Object)

at com.xiaomi.miliao.mt.fulltextindex.SearcherDelegate.updatePlUserIndex(SearcherDelegate.java:522)

at com.xiaomi.miliao.mt.fulltextindex.MessageQueueDelegate$PlUserIndexMessageHandler.handleMessage(MessageQueueDelegate.java:342)

at com.xiaomi.miliao.rabbitmq.ConsumerWorker.run(ConsumerWorker.java:107)

at java.lang.Thread.run(Thread.java:662)

3 ssh到指定服务器,查看cpu负载

top -d 5 五秒刷新一次,然后按1,查看所有的cpu的情况,没有发现异常,但是发现我的服务确实很占cpu啊,给力!lucene确实需要优化,赶紧切换到sensei吧

[root@MT1-10 logs]# top -d 5

top - 20:13:51 up 439 days, 16:15, 7 users, load average: 4.56, 4.82, 4.84

Tasks: 244 total, 1 running, 243 sleeping, 0 stopped, 0 zombie

Cpu0 : 17.6%us, 1.6%sy, 0.0%ni, 73.6%id, 7.2%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu1 : 6.6%us, 1.4%sy, 0.0%ni, 6.0%id, 85.8%wa, 0.0%hi, 0.2%si, 0.0%st

Cpu2 : 5.6%us, 0.8%sy, 0.0%ni, 84.1%id, 9.6%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu3 : 4.4%us, 0.4%sy, 0.0%ni, 95.2%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu4 : 3.0%us, 0.6%sy, 0.0%ni, 93.8%id, 2.6%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu5 : 2.2%us, 0.6%sy, 0.0%ni, 94.4%id, 2.8%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu6 : 2.2%us, 0.2%sy, 0.0%ni, 95.6%id, 2.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu7 : 15.0%us, 2.4%sy, 0.0%ni, 70.1%id, 12.2%wa, 0.0%hi, 0.4%si, 0.0%st

Cpu8 : 5.4%us, 0.6%sy, 0.0%ni, 91.6%id, 2.2%wa, 0.0%hi, 0.2%si, 0.0%st

Cpu9 : 5.2%us, 1.2%sy, 0.0%ni, 66.9%id, 26.7%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu10 : 2.6%us, 0.6%sy, 0.0%ni, 92.4%id, 4.4%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu11 : 6.2%us, 0.8%sy, 0.0%ni, 93.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu12 : 2.4%us, 0.4%sy, 0.0%ni, 95.4%id, 1.8%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu13 : 11.8%us, 1.2%sy, 0.0%ni, 84.7%id, 2.4%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu14 : 2.2%us, 0.2%sy, 0.0%ni, 97.6%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Cpu15 : 35.8%us, 5.2%sy, 0.0%ni, 41.8%id, 12.8%wa, 0.2%hi, 4.2%si, 0.0%st

Mem: 32956180k total, 32849076k used, 107104k free, 547628k buffers

Swap: 6144820k total, 228k used, 6144592k free, 18353516k cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

12681 root 18 0 5168m 2.4g 13m S 129.3 7.7 1675:27 java

4 使用iostat -d -x 1 100,查看系统io的使用情况(-d 是查看disk, -c是查看cpu), -x是查看更多信息,1是1秒刷新一次,100是查看一百次

[root@MT1-10 logs]# iostat -d -x 1 1

Linux 2.6.18-194.el5 (MT1-10) 10/09/2012

Device: rrqm/s wrqm/s r/s w/s rsec/s wsec/s avgrq-sz avgqu-sz await svctm %util

cciss/c0d0 0.00 776.00 3.00 259.00 24.00 11360.00 43.45 51.73 352.21 3.82 100.10

cciss/c0d0p1

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p2

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p3

0.00 23.00 0.00 3.00 0.00 208.00 69.33 0.18 61.00 61.00 18.30

cciss/c0d0p4

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p5

0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

cciss/c0d0p6

0.00 753.00 3.00 256.00 24.00 11152.00 43.15 51.55 355.59 3.86 100.10

具体名咯解析可查看

http://wenku.baidu.com/view/5991f2781711cc7931b7168c.html

发现cciss/c0d0p6的%util达到了100%,太繁忙了。

平均IO请求的队列长度avgqu-sz是51.55

平均使用的扇区数量是43.15

平均每个IO的等待时间的355.59毫秒

说明这快磁盘已经出现性能瓶颈了!

使用iopp可查看每个进程的IO情况!