1. The essential difference between multiple processes and multiple threads is that while each process has a complete set of its own variables, threads share the same data. Sharing data is risky, but shared variables also make communication between threads more efficient and easier to program than interprocess communication. Moreover, on some operating systems, threads are more “lightweight” than processes: it takes less overhead to create and destroy individual threads than to launch new processes.

2. sleep is a static method of the Thread class that temporarily stops the activity of the current thread for the given number of milliseconds. The sleep method can throw an InterruptedException.

3. Here is a simple procedure for running a task in a separate thread:

a) Place the code for the task into the run method of a class that implements the Runnable interface.

b) Construct an object of your class.

c) Construct a Thread object from the Runnable object you constructed.

d) Start the thread by calling its start method.
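The steps above can be sketched as follows. The class name CounterTask and its summing task are illustrative, not from the text; the join call is added only so that the caller waits for the thread and can safely read the result.

```java
// Minimal sketch of steps a) through d); CounterTask is a hypothetical example class.
class CounterTask implements Runnable {
    private int total;

    // a) The code for the task lives in run().
    @Override
    public void run() {
        for (int i = 1; i <= 100; i++) {
            total += i;
        }
    }

    int getTotal() {
        return total;
    }

    // Runs the task in a separate thread and waits for it to finish.
    static int runInThread() {
        CounterTask task = new CounterTask(); // b) construct the Runnable
        Thread t = new Thread(task);          // c) construct a Thread from it
        t.start();                            // d) start the thread
        try {
            t.join();                         // wait so that total is complete and visible
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return task.getTotal();
    }

    public static void main(String[] args) {
        System.out.println(runInThread()); // prints 5050
    }
}
```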

4. Typically, interruption is used to request that a thread terminate. Accordingly, a typical run method exits when an InterruptedException occurs.

5. You can also define a thread by forming a subclass of the Thread class: construct an object of the subclass and call its start method. This approach is no longer recommended, because you should decouple the task that is to be run in parallel from the mechanism of running it. Moreover, if you have many tasks, it is too expensive to create a separate thread for each one of them; use a thread pool instead.

6. The interrupt method can be used to request termination of a thread. When the interrupt method is called on a thread, the interrupted status of the thread is set. This is a boolean flag that is present in every thread. Each thread should occasionally check whether it has been interrupted. To find out whether the interrupted status was set, first call the static Thread.currentThread method to get the current thread, and then call the isInterrupted method:

```java
public void run()
{
   try
   {
      . . .
      while (!Thread.currentThread().isInterrupted() && moreWorkToDo()) // moreWorkToDo(): placeholder for your own test
      {
         doMoreWork(); // placeholder for the actual work; may call sleep or wait
      }
   }
   catch (InterruptedException e)
   {
      // thread was interrupted during sleep or wait
   }
   finally
   {
      // cleanup, if required
   }
   // exiting the run method terminates the thread
}
```
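The pattern above can be tried out with a small, self-contained sketch. Here the “work” is a short sleep, the worker is written as a lambda (Java SE 8 or later), and the class name InterruptDemo and the AtomicBoolean flag are illustrative additions used only to report that the finally block ran.

```java
import java.util.concurrent.atomic.AtomicBoolean;

class InterruptDemo {
    // Starts a worker, requests termination with interrupt(), and reports
    // whether the worker ran its cleanup and terminated.
    static boolean interruptWorker() {
        AtomicBoolean cleanedUp = new AtomicBoolean(false);
        Thread worker = new Thread(() -> {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.sleep(10); // stands in for "do more work"
                }
            } catch (InterruptedException e) {
                // thread was interrupted during sleep
            } finally {
                cleanedUp.set(true); // cleanup, if required
            }
            // exiting run terminates the thread
        });
        worker.start();
        worker.interrupt(); // sets the interrupted status (or interrupts a sleep in progress)
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return cleanedUp.get() && !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(interruptWorker()); // prints true
    }
}
```

Either exit path works: if the interrupt arrives before the loop test, the loop is skipped; if it arrives during sleep, the InterruptedException ends the loop.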

7. The isInterrupted check is neither necessary nor useful if you call the sleep method (or another interruptible method) after every work iteration. If you call the sleep method when the interrupted status is set, it doesn’t sleep. Instead, it clears the status (!) and throws an InterruptedException.

8. There are two very similar methods, interrupted and isInterrupted. The interrupted method is a static method that checks whether the current thread has been interrupted. Furthermore, calling the interrupted method clears the interrupted status of the thread. On the other hand, the isInterrupted method is an instance method that you can use to check whether any thread has been interrupted. Calling it does not change the interrupted status.
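A small sketch of the difference, run on the current thread so the result is deterministic (the class name is illustrative):

```java
class InterruptedVsIsInterrupted {
    static String demo() {
        Thread current = Thread.currentThread();
        current.interrupt();                 // set the interrupted status
        boolean a = current.isInterrupted(); // true; the status is unchanged
        boolean b = current.isInterrupted(); // still true
        boolean c = Thread.interrupted();    // true, and the status is now cleared
        boolean d = Thread.interrupted();    // false, because c cleared it
        return a + " " + b + " " + c + " " + d;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "true true true false"
    }
}
```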

9. If you can’t think of anything good to do in the catch clause for the sleep method (or another interruptible method), you still have two reasonable choices:

a) In the catch clause, call Thread.currentThread().interrupt() to set the interrupted status. Then the caller can test it.

b) Tag your method with throws InterruptedException and drop the try block. Then the caller (or, ultimately, the run method) can catch it.
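Both choices can be sketched side by side; the helper names pauseRestoringStatus and pausePropagating are hypothetical.

```java
class InterruptPolicies {
    // Choice a): swallow the exception but restore the interrupted status for the caller.
    static void pauseRestoringStatus(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // sleep cleared the status; set it again
        }
    }

    // Choice b): declare the exception and let the caller (ultimately run) catch it.
    static void pausePropagating(long millis) throws InterruptedException {
        Thread.sleep(millis);
    }

    // With the status already set, sleep throws at once and choice a) restores the status.
    static boolean statusSurvivesChoiceA() {
        Thread.currentThread().interrupt();
        pauseRestoringStatus(1_000);
        return Thread.interrupted(); // true; this call also clears the status again
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(statusSurvivesChoiceA()); // prints true
        pausePropagating(10); // the caller decides how to handle an interrupt
    }
}
```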

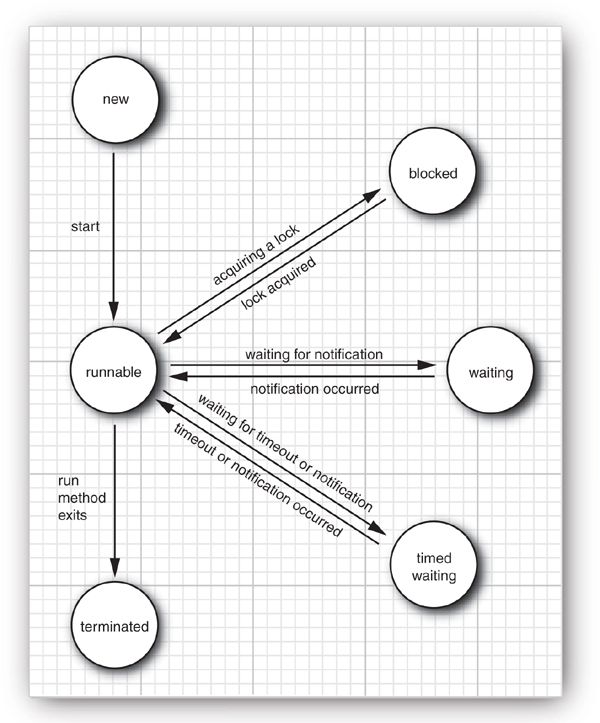

10. Threads can be in one of six states:

a) New

b) Runnable

c) Blocked

d) Waiting

e) Timed waiting

f) Terminated

To determine the current state of a thread, simply call the getState method.
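A minimal sketch that observes two of these states with getState (lambda syntax assumes Java SE 8 or later; the class name is illustrative):

```java
class StateDemo {
    static String states() {
        Thread t = new Thread(() -> { });    // a task that returns immediately
        Thread.State before = t.getState();  // NEW: constructed but not started
        t.start();
        try {
            t.join();                        // wait until run has exited
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        Thread.State after = t.getState();   // TERMINATED
        return before + " -> " + after;
    }

    public static void main(String[] args) {
        System.out.println(states()); // prints "NEW -> TERMINATED"
    }
}
```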

11. When you create a thread with the new operator, the thread is not yet running. This means that it is in the new state.

12. Once you invoke the start method, the thread is in the runnable state. A runnable thread may or may not actually be running. It is up to the operating system to give the thread time to run. (The Java specification does not call this a separate state, though. A running thread is still in the runnable state.)

13. All modern desktop and server operating systems use preemptive scheduling, which gives each runnable thread a slice of time to perform its task. When that slice of time is exhausted, the operating system preempts the thread and gives another thread an opportunity to work. However, small devices such as cell phones may use cooperative scheduling. On such a device, a thread loses control only when it calls the yield method or when it is blocked or waiting.

14. When the thread tries to acquire an intrinsic object lock (but not a Lock in the java.util.concurrent library) that is currently held by another thread, it becomes blocked. The thread becomes unblocked when all other threads have relinquished the lock and the thread scheduler has allowed this thread to hold it.

Commented by Sean: If a thread is blocked in Lock.lock, its state is WAITING, not BLOCKED.

15. When the thread waits for another thread to notify the scheduler of a condition, it enters the waiting state. This happens by calling the Object.wait or Thread.join method, or by waiting for a Lock or Condition in the java.util.concurrent library. In practice, the difference between the blocked and waiting state is not significant.

16. Several methods have a timeout parameter. Calling them causes the thread to enter the timed waiting state. This state persists either until the timeout expires or the appropriate notification has been received. Methods with timeout include Thread.sleep and the timed versions of Object.wait, Thread.join, Lock.tryLock, and Condition.await.

17. A thread is terminated for one of two reasons:

a) It dies a natural death because the run method exits normally.

b) It dies abruptly because an uncaught exception terminates the run method.

18. When a thread is blocked or waiting (or when it terminates), another thread is scheduled to run. When a thread is reactivated (for example, because its timeout has expired or it has acquired the lock it was waiting for), the scheduler checks to see if it has a higher priority than the currently running threads. If so, it preempts one of the current threads and picks a new thread to run.

19. Every thread has a priority. By default, a thread inherits the priority of the thread that constructed it. You can increase or decrease the priority of any thread with the setPriority method. You can set the priority to any value between MIN_PRIORITY (defined as 1 in the Thread class) and MAX_PRIORITY (defined as 10). NORM_PRIORITY is defined as 5. Whenever the thread scheduler has a chance to pick a new thread, it prefers threads with higher priority. However, thread priorities are highly system-dependent. When the virtual machine relies on the thread implementation of the host platform, the Java thread priorities are mapped to the priority levels of the host platform, which may have more or fewer thread priority levels. You should certainly never structure your programs so that their correct functioning depends on priority levels.

20. The static yield method of Thread causes the currently executing thread to yield. If there are other runnable threads with a priority at least as high as the priority of this thread, they will be scheduled next.

21. A daemon is simply a thread that has no other role in life than to serve others. When only daemon threads remain, the virtual machine exits. A daemon thread should never access a persistent resource such as a file or database, since it can terminate at any time, even in the middle of an operation. You can turn a thread into a daemon thread by calling setDaemon(true); this method must be called before the thread is started.
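A minimal sketch of a background daemon thread. The heartbeat task is hypothetical; the sketch also shows that calling setDaemon on a thread that is already running throws an IllegalThreadStateException.

```java
class DaemonDemo {
    // Returns true if the thread became a daemon and a late setDaemon call was rejected.
    static boolean startDaemon() {
        Thread heartbeat = new Thread(() -> {
            while (true) {
                try {
                    Thread.sleep(1_000);   // stands in for periodic background work
                } catch (InterruptedException e) {
                    return;                // exit quietly when interrupted
                }
            }
        });
        heartbeat.setDaemon(true);         // must happen before start()
        heartbeat.start();
        boolean rejectedLateChange = false;
        try {
            heartbeat.setDaemon(false);    // too late: the thread is already alive
        } catch (IllegalThreadStateException e) {
            rejectedLateChange = true;
        }
        heartbeat.interrupt();             // end the demo thread early
        return heartbeat.isDaemon() && rejectedLateChange;
    }

    public static void main(String[] args) {
        System.out.println(startDaemon()); // prints true
    }
}
```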

22. There is no catch clause to which the unchecked exception thrown from the run method of a thread can be propagated. Instead, just before the thread dies, the exception is passed to a handler for uncaught exceptions. The handler must belong to a class that implements the Thread.UncaughtExceptionHandler interface which has a single method:

```java
void uncaughtException(Thread t, Throwable e)
```

You can install a handler into any thread with the setUncaughtExceptionHandler method. You can also install a default handler for all threads with the static method setDefaultUncaughtExceptionHandler of the Thread class.
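A minimal sketch of a per-thread handler, written as a lambda (Java SE 8 or later). The thread name worker-1 and the recorded message format are illustrative.

```java
import java.util.concurrent.atomic.AtomicReference;

class HandlerDemo {
    // Runs a thread whose run method throws, and returns what its handler recorded.
    static String captureUncaught() {
        AtomicReference<String> seen = new AtomicReference<>();
        Thread worker = new Thread(() -> {
            throw new IllegalStateException("boom"); // unchecked; escapes run
        }, "worker-1");
        // Handler for this thread only; Thread.setDefaultUncaughtExceptionHandler covers all threads.
        worker.setUncaughtExceptionHandler((t, e) -> seen.set(t.getName() + ": " + e.getMessage()));
        worker.start();
        try {
            worker.join(); // the handler runs before the thread terminates
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen.get();
    }

    public static void main(String[] args) {
        System.out.println(captureUncaught()); // prints "worker-1: boom"
    }
}
```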

23. If you don’t install a default handler, the default handler is null. However, if you don’t install a handler for an individual thread, the handler is the thread’s ThreadGroup object. The ThreadGroup class implements the Thread.UncaughtExceptionHandler interface. Its uncaughtException method takes the following action:

a) If the thread group has a parent, then the uncaughtException method of the parent group is called.

b) Otherwise, if the Thread.getDefaultUncaughtExceptionHandler method returns a non-null handler, it is called.

c) Otherwise, if the Throwable is an instance of ThreadDeath (which is generated by the deprecated stop method), nothing happens.

d) Otherwise, the name of the thread and the stack trace of the Throwable are printed on System.err.

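24. A problem occurs when two threads simultaneously try to update a field with a non-atomic operation such as accounts[to] += amount; which loads the value, adds to it, and stores the result. If both threads load the old value before either one stores, one of the updates is lost.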

25. To peek at the virtual machine bytecodes that execute each statement in a class, run the command:

```shell
javap -c -v ClassName
```

26. The basic outline for protecting a code block with a ReentrantLock is:

```java
myLock.lock(); // a ReentrantLock object
try
{
   // critical section
}
finally
{
   myLock.unlock(); // make sure the lock is unlocked even if an exception is thrown
}
```

This construct guarantees that only one thread at a time can enter the critical section. As soon as one thread locks the lock object, no other thread can get past the lock statement. When other threads call lock, they are deactivated until the first thread unlocks the lock object.
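The outline above can be made concrete with a small counter; the class LockedCounter and its two-thread test are illustrative, not from the text.

```java
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class LockedCounter {
    private final Lock lock = new ReentrantLock();
    private int value;

    void increment() {
        lock.lock();
        try {
            value++;       // critical section: read, add, write without interference
        } finally {
            lock.unlock(); // released even if the critical section throws
        }
    }

    int get() {
        lock.lock();
        try {
            return value;
        } finally {
            lock.unlock();
        }
    }

    // Two threads each increment n times; with the lock, no update is lost.
    static int countWithTwoThreads(int n) {
        LockedCounter c = new LockedCounter();
        Runnable r = () -> {
            for (int i = 0; i < n; i++) c.increment();
        };
        Thread a = new Thread(r);
        Thread b = new Thread(r);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return c.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithTwoThreads(100_000)); // prints 200000
    }
}
```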

27. The lock is called reentrant because a thread can repeatedly acquire a lock that it already owns. The lock has a hold count that keeps track of the nested calls to the lock method. The thread has to call unlock for every call to lock in order to relinquish the lock. Because of this feature, code protected by a lock can call another method that uses the same lock.
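A minimal sketch of reentrancy using ReentrantLock.getHoldCount; the method names outer and inner are illustrative.

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyDemo {
    private final ReentrantLock lock = new ReentrantLock();

    // Takes the lock, then calls a method that takes the same lock again.
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock();                    // no deadlock: this thread already owns the lock
        try {
            return lock.getHoldCount(); // 2 while the calls are nested
        } finally {
            lock.unlock();              // hold count drops back to 1
        }
    }

    // True once every lock() call has been matched by an unlock() call.
    boolean isFullyReleased() {
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancyDemo d = new ReentrancyDemo();
        System.out.println(d.outer());           // prints 2
        System.out.println(d.isFullyReleased()); // prints true
    }
}
```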

28. A lock object can have one or more associated condition objects. You obtain a condition object with the newCondition method. It is customary to give each condition object a name that evokes the condition that it represents.

29. After calling await method of Condition object, the current thread is deactivated and gives up the lock. There is an essential difference between a thread that is waiting to acquire a lock and a thread that has called await. Once a thread calls the await method, it enters a wait set for that condition. The thread is not made runnable when the lock is available. Instead, it stays deactivated until another thread has called the signalAll method on the same condition. When the threads are removed from the wait set, they are again runnable and the scheduler will eventually activate them again. At that time, they will attempt to reenter the object. As soon as the lock is available, one of them will acquire the lock and continue where it left off, returning from the call to await. At this time, the thread should test the condition again. There is no guarantee that the condition is now fulfilled.

30. In general, a call to await should be inside a loop of the form:

```java
while (!okToProceed()) // okToProceed(): placeholder for your own test of the condition
   condition.await();
```

31. A thread can only call await, signalAll, or signal on a condition if it owns the lock of the condition.
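Items 28 to 31 can be combined in a small bank sketch. ConditionBank, its int balances, and the demo scenario are illustrative assumptions; the condition is named sufficientFunds after what the waiting thread needs.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class ConditionBank {
    private final int[] accounts;
    private final Lock bankLock = new ReentrantLock();
    private final Condition sufficientFunds = bankLock.newCondition();

    ConditionBank(int... balances) {
        accounts = balances.clone();
    }

    void transfer(int from, int to, int amount) throws InterruptedException {
        bankLock.lock();
        try {
            while (accounts[from] < amount) { // re-test after every wake-up
                sufficientFunds.await();      // gives up bankLock while waiting
            }
            accounts[from] -= amount;
            accounts[to] += amount;
            sufficientFunds.signalAll();      // balances changed: let waiters re-test
        } finally {
            bankLock.unlock();
        }
    }

    int getBalance(int account) {
        bankLock.lock();
        try {
            return accounts[account];
        } finally {
            bankLock.unlock();
        }
    }

    // Account 0 starts empty, so the waiter's transfer can only finish after the main transfer.
    static int[] demo() {
        ConditionBank bank = new ConditionBank(0, 100);
        Thread waiter = new Thread(() -> {
            try {
                bank.transfer(0, 1, 50);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        try {
            bank.transfer(1, 0, 100);
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] { bank.getBalance(0), bank.getBalance(1) };
    }
}
```

Whichever thread runs first, both accounts end at 50.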

32. Key points about locks and conditions:

a) A lock protects sections of code, allowing only one thread to execute the code at a time.

b) A lock manages threads that are trying to enter a protected code segment.

c) A lock can have one or more associated condition objects.

d) Each condition object manages threads that have entered a protected code section but that cannot proceed.

33. The Lock and Condition interfaces give programmers a high degree of control over locking. However, in most situations, you don’t need that control—you can use a mechanism that is built into the Java language. Every object in Java has an intrinsic lock. If a method is declared with the synchronized keyword, the object’s lock protects the entire method. That is, to call the method, a thread must acquire the intrinsic object lock.

34. The intrinsic object lock has a single associated condition. The wait method adds a thread to the wait set, and the notifyAll/notify methods unblock waiting threads.

35. It is also legal to declare static methods as synchronized. If such a method is called, it acquires the intrinsic lock of the associated class object.
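The same illustrative bank can be sketched with the intrinsic lock: synchronized methods replace the explicit lock, and wait/notifyAll replace await/signalAll on the single implicit condition. SyncBank is a hypothetical name.

```java
class SyncBank {
    private final int[] accounts;

    SyncBank(int... balances) {
        accounts = balances.clone();
    }

    // The intrinsic lock of this SyncBank object protects the whole method.
    synchronized void transfer(int from, int to, int amount) throws InterruptedException {
        while (accounts[from] < amount) {
            wait();       // releases the intrinsic lock and joins its wait set
        }
        accounts[from] -= amount;
        accounts[to] += amount;
        notifyAll();      // wake all threads in the wait set so they re-test
    }

    synchronized int getBalance(int account) {
        return accounts[account];
    }

    static int[] demo() {
        SyncBank bank = new SyncBank(0, 100);
        Thread waiter = new Thread(() -> {
            try {
                bank.transfer(0, 1, 50); // waits until account 0 has funds
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        waiter.start();
        try {
            bank.transfer(1, 0, 100);
            waiter.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] { bank.getBalance(0), bank.getBalance(1) };
    }
}
```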

36. The intrinsic locks and conditions have some limitations:

a) You cannot interrupt a thread that is trying to acquire a lock. (By contrast, a thread waiting for an explicit Lock can be interrupted if it called Lock.lockInterruptibly.)

b) You cannot specify a timeout when trying to acquire a lock.

c) Having a single condition per lock can be inefficient.

37. Whether to use Lock and Condition objects or synchronized methods:

a) It is best to use neither Lock/Condition nor the synchronized keyword. In many situations, you can use one of the mechanisms of the java.util.concurrent package that do all the locking for you (e.g., a BlockingQueue).

b) If the synchronized keyword works for your situation, by all means, use it. You’ll write less code and have less room for error.

c) Use Lock/Condition if you really need the additional power that these constructs give you.

38. When a thread enters a block of the form:

```java
synchronized (obj) // this is the syntax for a synchronized block
{
   // critical section
}
```

it acquires the lock for obj.

39. A monitor has these properties:

a) A monitor is a class with only private fields.

b) Each object of that class has an associated lock.

c) All methods are locked by that lock. Since all fields are private, this arrangement ensures that no thread can access the fields while another thread manipulates them.

d) The lock can have any number of associated conditions.

A Java object differs from a monitor in three important ways, compromising thread safety:

a) Fields are not required to be private.

b) Methods are not required to be synchronized.

c) The intrinsic lock is available to clients.

40. Computers with multiple processors can temporarily hold memory values in registers or local memory caches. As a consequence, threads running in different processors may see different values for the same memory location! Compilers can reorder instructions for maximum throughput. Compilers won’t choose an ordering that changes the meaning of the code, but they make the assumption that memory values are only changed when there are explicit instructions in the code. However, a memory value can be changed by another thread! If you use locks to protect code that can be accessed by multiple threads, you won’t have these problems. Compilers are required to respect locks by flushing local caches as necessary and not inappropriately reordering instructions. The details are explained in the Java Memory Model and Thread Specification (see www.jcp.org/en/jsr/detail?id=133). A more accessible overview article is available at http://www-106.ibm.com/developerworks/java/library/j-jtp02244.html.

41. The volatile keyword offers a lock-free mechanism for synchronizing access to an instance field. If you declare a field as volatile, then the compiler and the virtual machine take into account that the field may be concurrently updated by another thread. Volatile variables do not provide any atomicity.
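A typical use of volatile is a stop flag that one thread sets and another reads. This is a minimal sketch with illustrative names; without volatile, the worker might never see the update (for example, if the read is hoisted out of the loop), although that outcome is not guaranteed either way.

```java
class StoppableSpinner {
    private volatile boolean done; // writes are visible to other threads

    void requestStop() {
        done = true;               // plain assignment: safe on a volatile field
    }

    // Starts a busy worker, asks it to stop, and reports whether it actually stopped.
    static boolean stopsAfterRequest() {
        StoppableSpinner spinner = new StoppableSpinner();
        Thread worker = new Thread(() -> {
            while (!spinner.done) {
                Thread.yield();    // placeholder for real work
            }
        });
        worker.start();
        try {
            Thread.sleep(50);
            spinner.requestStop();
            worker.join(5_000);    // bounded wait
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```

Note that a compound update such as done = !done would still not be atomic, even on a volatile field.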

42. There is one other situation in which it is safe to access a shared field—when it is declared final.

43. You can declare shared variables as volatile provided you perform no operations other than assignment. There are a number of classes in the java.util.concurrent.atomic package that use efficient machine-level instructions to guarantee atomicity of other operations without using locks. For example, the AtomicInteger class has methods incrementAndGet and decrementAndGet that atomically increment or decrement an integer. You can safely use an AtomicInteger as a shared counter without synchronization.
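A minimal sketch of an AtomicInteger used as a shared counter without locks (class name illustrative):

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
    // Two threads each increment n times; incrementAndGet never loses an update.
    static int count(int n) {
        AtomicInteger counter = new AtomicInteger();
        Runnable r = () -> {
            for (int i = 0; i < n; i++) counter.incrementAndGet();
        };
        Thread a = new Thread(r);
        Thread b = new Thread(r);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(count(100_000)); // prints 200000
    }
}
```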

44. When the program hangs (for example, because of a deadlock), press Ctrl+\ (Ctrl+Break on Windows). You will get a thread dump that lists all threads. Each thread has a stack trace, telling you where it is currently blocked.

45. You can avoid sharing by giving each thread its own instance, using the ThreadLocal helper class. The first time you call get in a given thread, the initialValue method (which you may override) is called. From then on, the get method returns the instance belonging to the current thread.
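A minimal sketch using SimpleDateFormat, a class that is not thread-safe, as the per-thread instance; the date pattern and class name are illustrative.

```java
import java.text.SimpleDateFormat;

class ThreadLocalDemo {
    // Each thread lazily gets its own SimpleDateFormat.
    static final ThreadLocal<SimpleDateFormat> dateFormat = new ThreadLocal<SimpleDateFormat>() {
        @Override
        protected SimpleDateFormat initialValue() { // called on a thread's first get()
            return new SimpleDateFormat("yyyy-MM-dd");
        }
    };

    // True if this thread keeps getting the same instance and another thread gets a different one.
    static boolean distinctPerThread() {
        SimpleDateFormat mine = dateFormat.get();
        SimpleDateFormat[] theirs = new SimpleDateFormat[1];
        Thread other = new Thread(() -> theirs[0] = dateFormat.get());
        other.start();
        try {
            other.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return mine == dateFormat.get() && theirs[0] != null && mine != theirs[0];
    }
}
```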

46. For random numbers, Java SE 7 provides a convenience class, ThreadLocalRandom. Simply make a call:

```java
int random = ThreadLocalRandom.current().nextInt(upperBound);
```

The call ThreadLocalRandom.current() returns an instance of ThreadLocalRandom (a subclass of Random) that is unique to the current thread.

47. The tryLock method of the Lock interface (which ReentrantLock implements) tries to acquire a lock and returns true if it was successful. Otherwise, it immediately returns false, and the thread can go off and do something else. You can call tryLock with a timeout parameter. If you call tryLock with a timeout, an InterruptedException is thrown if the thread is interrupted while it is waiting. The lockInterruptibly method has the same meaning as tryLock with an infinite timeout.
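A minimal sketch of tryLock with and without a timeout. A helper thread holds the lock while the main thread tries to acquire it; the CountDownLatch objects only sequence the demo and are not part of the technique.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {
    static String demo() {
        ReentrantLock lock = new ReentrantLock();
        CountDownLatch held = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        Thread holder = new Thread(() -> {
            lock.lock();
            try {
                held.countDown();   // tell the main thread the lock is taken
                release.await();    // keep holding it until told to release
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                lock.unlock();
            }
        });
        holder.start();
        try {
            held.await();
            boolean first = lock.tryLock();                          // false: returns immediately
            boolean timed = lock.tryLock(50, TimeUnit.MILLISECONDS); // false after about 50 ms
            release.countDown();
            holder.join();
            boolean after = lock.tryLock();                          // true: the lock is free now
            if (after) lock.unlock();
            return first + " " + timed + " " + after;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "false false true"
    }
}
```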

48. The lock method cannot be interrupted. If a thread is interrupted while it is waiting to acquire a lock, the interrupted thread continues to be blocked until the lock is available. If a deadlock occurs, then the lock method can never terminate.

49. When you wait on a condition, you can also supply a timeout. The await method returns if another thread has activated this thread by calling signalAll or signal, or if the timeout has elapsed, or if the thread was interrupted. The await methods throw an InterruptedException if the waiting thread is interrupted. If you would rather continue waiting after an interrupt, use the awaitUninterruptibly method instead.

50. The java.util.concurrent.locks package defines two lock classes, the ReentrantLock that we already discussed and the ReentrantReadWriteLock. The latter is useful when there are many threads that read from a data structure and fewer threads that modify it. In that situation, it makes sense to allow shared access for the readers. Of course, a writer must still have exclusive access.

51. Here are the steps that are necessary to use a read/write lock:

a) Construct a ReentrantReadWriteLock object.

b) Extract the read and write locks with the readLock and writeLock methods of the ReentrantReadWriteLock object.

c) Use the read lock in all accessors.

d) Use the write lock in all mutators.
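The four steps above, applied to a hypothetical price table backed by a HashMap:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class PriceTable {
    private final ReentrantReadWriteLock rwl = new ReentrantReadWriteLock(); // a) construct
    private final Lock readLock = rwl.readLock();                            // b) extract both locks
    private final Lock writeLock = rwl.writeLock();
    private final Map<String, Integer> prices = new HashMap<>();

    Integer get(String item) {           // c) accessor: shared read lock
        readLock.lock();
        try {
            return prices.get(item);
        } finally {
            readLock.unlock();
        }
    }

    void put(String item, int price) {   // d) mutator: exclusive write lock
        writeLock.lock();
        try {
            prices.put(item, price);
        } finally {
            writeLock.unlock();
        }
    }
}
```

Many threads can hold the read lock at once; the write lock excludes both readers and other writers.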

52. The initial release of Java defined a stop method that simply terminates a thread, and a suspend method that blocks a thread until another thread calls resume. The stop and suspend methods have something in common: Both attempt to control the behavior of a given thread without the thread’s cooperation, and both are now deprecated. When a thread is stopped, it immediately gives up the locks on all objects that it has locked. This can leave objects in an inconsistent state. When a thread wants to stop another thread, it has no way of knowing when the stop method is safe and when it leads to damaged objects. Therefore, the method has been deprecated. You should interrupt a thread when you want it to stop. The interrupted thread can then stop when it is safe to do so. If you suspend a thread that owns a lock, then the lock is unavailable until the thread is resumed. If the thread that will call the resume method tries to acquire the same lock, the program deadlocks: The suspended thread waits to be resumed, and the resuming thread waits for the lock. If you want to safely suspend a thread, introduce a variable suspendRequested and test it in a safe place of your run method, that is, a place where your thread doesn’t lock objects that other threads need. When your thread finds that the suspendRequested variable has been set, it should keep waiting until the variable is cleared again (for example, by awaiting a condition that the resuming thread signals).

53. A stopped thread exits all synchronized methods it has called—technically, by throwing a ThreadDeath exception. As a consequence, the thread relinquishes the intrinsic object locks that it holds.

54. It is much easier and safer to use higher-level structures that have been implemented by concurrency experts.

55. A blocking queue causes a thread to block when you try to add an element when the queue is currently full or to remove an element when the queue is empty. Blocking queues are a useful tool for coordinating the work of multiple threads. Worker threads can periodically deposit intermediate results into a blocking queue. Other worker threads remove the intermediate results and modify them further. The queue automatically balances the workload.

56. The blocking queue methods fall into three categories that differ by the action they perform when the queue is full or empty. If you use the queue as a thread management tool, use the put and take methods. The add, remove, and element operations throw an exception when you try to add to a full queue or get the head of an empty queue. The offer, poll, and peek methods simply return with a failure indicator instead of throwing an exception if they cannot carry out their tasks. The poll and peek methods return null to indicate failure. Therefore, it is illegal to insert null values into these queues.

57. There are also variants of the offer and poll methods with a timeout. The put method blocks if the queue is full, and the take method blocks if the queue is empty. These are the equivalents of offer and poll with infinite timeout.
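A minimal producer/consumer sketch with put and take. Because null cannot be inserted, it uses a sentinel value (-1, an assumption that producers never send negative numbers) to mark the end of the stream.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class ProducerConsumer {
    private static final int DONE = -1; // sentinel marking the end of the stream

    static int sumThroughQueue(int n) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(10); // small capacity
        int[] sum = new int[1];
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) queue.put(i); // blocks while the queue is full
                queue.put(DONE);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        Thread consumer = new Thread(() -> {
            try {
                for (int v = queue.take(); v != DONE; v = queue.take()) { // take blocks while empty
                    sum[0] += v;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join(); // join makes sum[0] safely visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(sumThroughQueue(100)); // prints 5050
    }
}
```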

58. The java.util.concurrent package supplies several variations of blocking queues. By default, the LinkedBlockingQueue has no upper bound on its capacity, but a maximum capacity can be optionally specified. The LinkedBlockingDeque is a double-ended version. The ArrayBlockingQueue is constructed with a given capacity and an optional parameter to require fairness. If fairness is specified, then the longest-waiting threads are given preferential treatment. As always, fairness exacts a significant performance penalty, and you should only use it if your problem specifically requires it. The PriorityBlockingQueue is a priority queue. Elements are removed in order of their priority. The queue has unbounded capacity, but retrieval will block if the queue is empty.

59. A DelayQueue contains objects that implement the Delayed interface:

```java
interface Delayed extends Comparable<Delayed>
{
   long getDelay(TimeUnit unit);
}
```

The getDelay method returns the remaining delay of the object. A zero or negative value indicates that the delay has elapsed. Elements can only be removed from a DelayQueue if their delay has elapsed. You also need to implement the compareTo method. The DelayQueue uses that method to sort the entries.

60. Java SE 7 adds a TransferQueue interface that allows a producer thread to wait until a consumer is ready to take on an item. When a producer calls

```java
q.transfer(item);
```

the call blocks until another thread removes it. The LinkedTransferQueue class implements this interface.

61. If multiple threads concurrently modify a data structure, such as a hash table, it is easy to damage that data structure. You can protect a shared data structure by supplying a lock, but it is usually easier to choose a thread-safe implementation instead.

62. The java.util.concurrent package supplies efficient thread-safe implementations for maps, sorted sets, and queues: ConcurrentHashMap, ConcurrentSkipListMap, ConcurrentSkipListSet, and ConcurrentLinkedQueue. These collections use sophisticated algorithms that minimize contention by allowing concurrent access to different parts of the data structure. The size method of these classes does not necessarily operate in constant time. Determining the current size of one of these collections usually requires traversal. The collections return weakly consistent iterators. That means that the iterators may or may not reflect all modifications that are made after they were constructed, but they will not return a value twice and they will not throw a ConcurrentModificationException.

63. The concurrent hash map can efficiently support a large number of readers and a fixed number of writers. By default (in Java SE 7), it is assumed that there are up to 16 simultaneous writer threads. There can be many more writer threads, but if more than 16 write at the same time, the others are temporarily blocked. You can specify a higher number in the constructor. (Since Java SE 8, ConcurrentHashMap no longer uses this segment-based design, and the concurrency level passed to the constructor is only used as a sizing hint.)

64. The ConcurrentHashMap and ConcurrentSkipListMap classes have useful methods for atomic insertion and removal of associations. The putIfAbsent method atomically adds a new association provided there wasn’t one before. The opposite operation is remove, which atomically removes the key and value if they are present in the map. The replace method atomically replaces the old value with the new one, provided the old value was associated with the given key.
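A minimal sketch of the three atomic operations on a ConcurrentHashMap; the key "hits" and the values are illustrative.

```java
import java.util.concurrent.ConcurrentHashMap;

class AtomicMapOps {
    static String demo() {
        ConcurrentHashMap<String, Integer> map = new ConcurrentHashMap<>();
        Integer first = map.putIfAbsent("hits", 1);   // null: no previous value, so 1 is stored
        Integer second = map.putIfAbsent("hits", 99); // 1: key present, map unchanged
        boolean replaced = map.replace("hits", 1, 2); // true: old value matched
        boolean stale = map.replace("hits", 1, 3);    // false: the value is now 2
        boolean removed = map.remove("hits", 2);      // true: key and value both matched
        return first + " " + second + " " + replaced + " " + stale + " " + removed + " " + map.isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "null 1 true false true true"
    }
}
```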

65. The CopyOnWriteArrayList and CopyOnWriteArraySet are thread-safe collections in which all mutators make a copy of the underlying array. This arrangement is useful if the threads that iterate over the collection greatly outnumber the threads that mutate it. When you construct an iterator, it contains a reference to the current array. If the array is later mutated, the iterator still has the old array, but the collection’s array is replaced. As a consequence, the older iterator has a consistent (but potentially outdated) view that it can access without any synchronization expense.

Commented by Sean: Mutators are mutually exclusive, while accessors are not.
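A minimal sketch of the snapshot behavior: an iterator created before a mutation keeps seeing the old array (class name illustrative).

```java
import java.util.Iterator;
import java.util.concurrent.CopyOnWriteArrayList;

class SnapshotIteration {
    static String demo() {
        CopyOnWriteArrayList<String> list = new CopyOnWriteArrayList<>();
        list.add("a");
        list.add("b");
        Iterator<String> it = list.iterator(); // holds a reference to the current array
        list.add("c");                         // copies the array; the iterator keeps the old one
        StringBuilder seen = new StringBuilder();
        while (it.hasNext()) seen.append(it.next());
        return seen + " " + list.size();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "ab 3"
    }
}
```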

66. Any collection class can be made thread-safe by means of a synchronization wrapper:

```java
List<E> synchArrayList = Collections.synchronizedList(new ArrayList<E>());
Set<E> synchHashSet = Collections.synchronizedSet(new HashSet<E>());
Map<K, V> synchHashMap = Collections.synchronizedMap(new HashMap<K, V>());
```

The methods of the resulting collections are protected by a lock, providing thread-safe access. You should make sure that no thread accesses the data structure through the original unsynchronized methods. You still need to use “client-side” locking if you want to iterate over the collection while another thread has the opportunity to mutate it.
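Client-side locking means synchronizing on the wrapper object itself for the whole iteration. A minimal sketch (class and method names illustrative):

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class ClientSideLocking {
    static int sumSafely(List<Integer> synchList) {
        int sum = 0;
        synchronized (synchList) {   // lock the wrapper so no thread can mutate during iteration
            for (int value : synchList) sum += value;
        }
        return sum;
    }

    static int demo() {
        List<Integer> synchList = Collections.synchronizedList(new ArrayList<Integer>());
        for (int i = 1; i <= 10; i++) synchList.add(i); // each add is individually synchronized
        return sumSafely(synchList);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints 55
    }
}
```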

67. If an array list is frequently mutated, a synchronized ArrayList can outperform a CopyOnWriteArrayList.

68. A Callable is similar to a Runnable, but it returns a value. The Callable interface is a parameterized type, with a single method call:

```java
public interface Callable<V>
{
   V call() throws Exception;
}
```

The type parameter is the type of the returned value.

69. A Future holds the result of an asynchronous computation. The owner of the Future object can obtain the result when it is ready. The Future interface has the following methods:

```java
public interface Future<V>
{
   V get() throws . . .;
   V get(long timeout, TimeUnit unit) throws . . .;
   boolean cancel(boolean mayInterrupt);
   boolean isCancelled();
   boolean isDone();
}
```

A call to the first get method blocks until the computation is finished. The second method throws a TimeoutException if the call timed out before the computation finished. If the thread calling get is interrupted while it waits, both methods throw an InterruptedException. If the computation has already finished, get returns immediately. The isDone method returns false if the computation is still in progress, true if it is finished. You can cancel the computation with the cancel method, which returns false if the task could not be canceled (typically because it has already completed). If the computation has not yet started, it is canceled and will never start. If the computation is currently in progress, it is interrupted if the mayInterrupt parameter is true.

70. The FutureTask wrapper is a convenient mechanism for turning a Callable into both a Future and a Runnable—it implements both interfaces:

```java
Callable<Integer> myComputation = . . .;
FutureTask<Integer> task = new FutureTask<Integer>(myComputation);
Thread t = new Thread(task); // it's a Runnable
t.start();
Integer result = task.get(); // it's a Future
```
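A runnable version of the same pattern, with a hypothetical computation (the sum 1 + 2 + ... + n) standing in for myComputation, and with the checked exceptions of get handled:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

class FutureTaskDemo {
    static int sumWithFutureTask(int n) {
        Callable<Integer> myComputation = () -> {
            int sum = 0;
            for (int i = 1; i <= n; i++) sum += i;
            return sum;
        };
        FutureTask<Integer> task = new FutureTask<>(myComputation);
        new Thread(task).start();          // used as a Runnable
        try {
            return task.get();             // used as a Future: blocks until call() returns
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        } catch (ExecutionException e) {   // call() threw an exception
            throw new IllegalStateException(e.getCause());
        }
    }

    public static void main(String[] args) {
        System.out.println(sumWithFutureTask(10)); // prints 55
    }
}
```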

71. A thread pool contains a number of idle threads that are ready to run. You give a Runnable to the pool, and one of the threads calls the run method. When the run method exits, the thread doesn’t die but stays around to serve the next request.

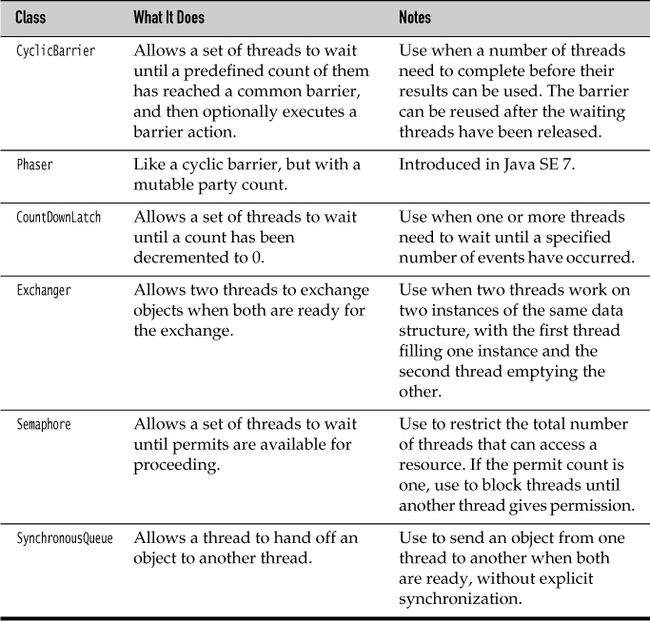

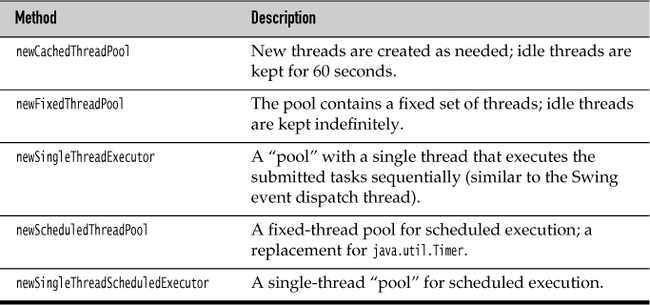

72. The Executors class has a number of static factory methods for constructing thread pools:

The newCachedThreadPool method constructs a thread pool that executes each task immediately, using an existing idle thread when available and creating a new thread otherwise. The newFixedThreadPool method constructs a thread pool with a fixed size. If more tasks are submitted than there are idle threads, the unserved tasks are placed on a queue. They are run when other tasks have completed. The newSingleThreadExecutor is a degenerate pool of size 1 where a single thread executes the submitted tasks, one after another. All three methods return an object that implements the ExecutorService interface; the first two return an instance of the ThreadPoolExecutor class, while newSingleThreadExecutor returns a wrapper around one.

73. You can submit a Runnable or Callable to an ExecutorService with one of the following methods:

```java
Future<?> submit(Runnable task)
Future<T> submit(Runnable task, T result)
Future<T> submit(Callable<T> task)
```

The first submit method returns an odd-looking Future<?>. You can use such an object to call isDone, cancel, or isCancelled, but the get method simply returns null upon completion. The second version of submit also submits a Runnable, and the get method of the Future returns the given result object upon completion. The third version submits a Callable, and the returned Future gets the result of the computation when it is ready.

74. When you are done with a thread pool, call shutdown. This method initiates the shutdown sequence for the pool. An executor that is shut down accepts no new tasks. When all tasks are finished, the threads in the pool die. Alternatively, you can call shutdownNow. The pool then cancels all tasks that have not yet begun and attempts to interrupt the running threads.
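A minimal sketch of the usual lifecycle: create a fixed pool, submit Callable tasks, collect the results from their futures, and shut the pool down. The pool size of 4 and the squaring tasks are arbitrary choices for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class PoolDemo {
    static int sumOfSquares(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<Future<Integer>> results = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final int k = i;
                results.add(pool.submit(() -> k * k)); // submit(Callable<T>)
            }
            int total = 0;
            for (Future<Integer> f : results) total += f.get(); // blocks until each task is done
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown(); // accept no new tasks; threads die once queued tasks finish
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(10)); // prints 385
    }
}
```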

75. The getLargestPoolSize method of ThreadPoolExecutor returns the largest size of the thread pool during the life of this executor even after the executor is shut down.

76. The ScheduledExecutorService interface has methods for scheduled or repeated execution of tasks. It is a generalization of java.util.Timer that allows for thread pooling. The newScheduledThreadPool and newSingleThreadScheduledExecutor methods of the Executors class return objects that implement the ScheduledExecutorService interface. You can schedule a Runnable or Callable to run once, after an initial delay. You can also schedule a Runnable to run periodically:

```java
// Schedule the given task to run once, after the given time has elapsed.
ScheduledFuture<V> schedule(Callable<V> task, long time, TimeUnit unit)
ScheduledFuture<?> schedule(Runnable task, long time, TimeUnit unit)

// Schedules the given task to run periodically, every period units, after the initial delay has elapsed.
ScheduledFuture<?> scheduleAtFixedRate(Runnable task, long initialDelay, long period, TimeUnit unit)

// Schedules the given task to run periodically, with delay units between completion of one
// invocation and the start of the next, after the initial delay has elapsed.
ScheduledFuture<?> scheduleWithFixedDelay(Runnable task, long initialDelay, long delay, TimeUnit unit)
```
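A minimal sketch of scheduleAtFixedRate: a periodic task counts down a latch, and the caller cancels the task after three runs. The 10 ms initial delay and 20 ms period are arbitrary.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class ScheduleDemo {
    static boolean tickThreeTimes() {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        CountDownLatch ticks = new CountDownLatch(3);
        // first run after 10 ms, then every 20 ms
        ScheduledFuture<?> handle =
                scheduler.scheduleAtFixedRate(ticks::countDown, 10, 20, TimeUnit.MILLISECONDS);
        try {
            return ticks.await(5, TimeUnit.SECONDS); // true once three runs have happened
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            handle.cancel(false); // stop the periodic task
            scheduler.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(tickThreeTimes()); // prints true
    }
}
```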

77. The invokeAny method of ExecutorService submits all objects in a collection of Callable objects and returns the result of a completed task. You don’t know which task that is—presumably, it was the one that finished most quickly. Use this method for a search problem in which you are willing to accept any solution.

78. The invokeAll method submits all objects in a collection of Callable objects, blocks until all of them complete, and returns a list of Future objects that represent the solutions to all tasks.
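A minimal sketch of invokeAny and invokeAll over the same three trivial tasks (stand-ins for real searches). Which value invokeAny returns depends on timing, so only the invokeAll total is fixed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class InvokeDemo {
    // Returns {result of invokeAny, sum of all invokeAll results}.
    static int[] demo() {
        ExecutorService pool = Executors.newFixedThreadPool(3);
        List<Callable<Integer>> tasks = new ArrayList<>();
        for (int i = 1; i <= 3; i++) {
            final int k = i;
            tasks.add(() -> k * 10);
        }
        try {
            int any = pool.invokeAny(tasks);   // result of one successfully completed task
            int total = 0;
            for (Future<Integer> f : pool.invokeAll(tasks)) { // returns when all tasks are done
                total += f.get();              // does not block: every future is complete
            }
            return new int[] { any, total };
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return new int[0];
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }
}
```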

79. The ExecutorCompletionService manages a blocking queue of Future objects, containing the results of the submitted tasks as they become available:

```java
ExecutorCompletionService<T> service = new ExecutorCompletionService<>(executor);
for (Callable<T> task : tasks) service.submit(task);
for (int i = 0; i < tasks.size(); i++)
   processFurther(service.take().get());
```

80. The fork-join framework, which appeared in Java SE 7, is designed to support those applications that use one thread per processor core, in order to carry out computationally intensive tasks. Suppose you have a processing task that naturally decomposes into subtasks, like this:

```
if (problemSize < threshold)
   solve problem directly
else
{
   break problem into subproblems
   recursively solve each subproblem
   combine the results
}
```

If you have enough idle processors, those subproblems can be solved in parallel. To put the recursive computation in a form that is usable by the framework, supply a class that extends RecursiveTask<T> (if the computation produces a result of type T) or RecursiveAction (if it doesn’t produce a result). Override the compute method to generate and invoke subtasks, and to combine their results.
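A minimal sketch of the outline above as a RecursiveTask that sums an array. The class SumTask and the threshold of 1,000 elements are illustrative assumptions; a real threshold should be tuned to the workload.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class SumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // hypothetical cutoff
    private final long[] values;
    private final int from, to;                 // half-open range [from, to)

    SumTask(long[] values, int from, int to) {
        this.values = values;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from < THRESHOLD) {            // solve problem directly
            long sum = 0;
            for (int i = from; i < to; i++) sum += values[i];
            return sum;
        }
        int mid = (from + to) >>> 1;            // break problem into subproblems
        SumTask left = new SumTask(values, from, mid);
        SumTask right = new SumTask(values, mid, to);
        invokeAll(left, right);                 // solve each subproblem, possibly in parallel
        return left.join() + right.join();      // combine the results
    }

    static long parallelSum(long[] values) {
        ForkJoinPool pool = new ForkJoinPool(); // by default, one worker per available processor
        try {
            return pool.invoke(new SumTask(values, 0, values.length));
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long[] v = new long[10_000];
        for (int i = 0; i < v.length; i++) v[i] = i + 1;
        System.out.println(parallelSum(v)); // prints 50005000
    }
}
```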