Lucene Analyzer In Depth, Explained Simply

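An Analyzer, or more generally the text analysis process, essentially converts input text into a vector of text features. The features here can be words or phrases. Analysis mainly consists of the following four steps: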

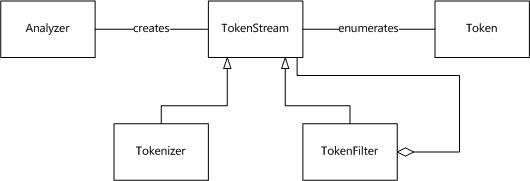

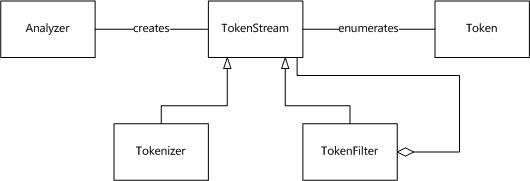

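- Tokenization: splitting the text into words or phrases
- Normalization: converting the text to lowercase
- Stop-word handling: removing common words that carry little meaning
- Stemming: collapsing plural forms, tenses, voices, and similar variations to a common stem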
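To make the four steps concrete, here is a minimal, dependency-free Java sketch that applies them in order to a short string. It is not Lucene code: the class `ToyAnalysisPipeline`, its stop-word list, the regex-based splitting, and especially the `crudeStem` rule (which only strips plural endings) are illustrative assumptions. A real stemmer such as Porter's behaves differently; for instance, it reduces stories to stori rather than story.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Toy, dependency-free sketch of the four analysis steps.
// NOT Lucene code: the class name, stop words and stemming rule are illustrative assumptions.
public class ToyAnalysisPipeline {

    // Hypothetical stop-word list for this demo
    static final Set<String> STOP_WORDS = Set.of("and", "of", "the", "to", "is", "in");

    static List<String> analyze(String text) {
        List<String> out = new ArrayList<>();
        // Step 1: tokenization, splitting on any run of non-alphanumeric characters
        for (String raw : text.split("[^A-Za-z0-9]+")) {
            if (raw.isEmpty()) continue; // a leading separator yields an empty first element
            // Step 2: normalization to lowercase
            String term = raw.toLowerCase(Locale.ROOT);
            // Step 3: stop-word removal
            if (STOP_WORDS.contains(term)) continue;
            // Step 4: stemming (crude plural stripping, NOT the Porter algorithm)
            out.add(crudeStem(term));
        }
        return out;
    }

    // Crude stand-in for a stemmer: "ies" -> "y", trailing "s" dropped (but not "ss")
    static String crudeStem(String w) {
        if (w.endsWith("ies") && w.length() > 4) return w.substring(0, w.length() - 3) + "y";
        if (w.endsWith("s") && !w.endsWith("ss") && w.length() > 3) return w.substring(0, w.length() - 1);
        return w;
    }

    public static void main(String[] args) {
        System.out.println(analyze("The cats and the stories")); // [cat, story]
    }
}
```

Running `main` prints `[cat, story]`: The, and, and the are dropped as stop words only because lowercasing happened first, and the two plurals are then reduced by the toy rule.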

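A Lucene Analyzer has two core components: Tokenizer and TokenFilter. The difference is that the former processes the stream at the character level, while the latter processes it at the token (word) level. The Tokenizer is the first step of an Analyzer, and its constructor takes a Reader. A TokenFilter works like an interceptor: its input can be a TokenStream, a Tokenizer, or even another TokenFilter (both Tokenizer and TokenFilter are subclasses of TokenStream). The overall Lucene Analyzer process is shown in the figure below: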

The terms used in the figure above are explained in the following table:

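| Class | Description |
|---|---|
| Token | A single term occurring in the text, including its position information in the text |
| Analyzer | Converts text into a TokenStream |
| TokenStream | A stream of tokens |
| Tokenizer | Processes the input at the character level and produces tokens |
| TokenFilter | Processes the token stream at the token (word) level; its input can be a TokenStream, a Tokenizer, or another TokenFilter |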
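The table describes a decorator-style design: a Tokenizer is the source of the stream, and each TokenFilter wraps another TokenStream and transforms what it produces. The plain-Java sketch below mirrors that layering under simplified assumptions. `MiniTokenStream`, `MiniWhitespaceTokenizer`, `MiniLowerCaseFilter`, and `MiniStopFilter` are hypothetical names, and a token is just a `String` returned from `next()` (null at the end) rather than Lucene's `Token` object.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Dependency-free mirror of the Tokenizer/TokenFilter layering.
// All class names here are hypothetical; they are NOT Lucene classes.
public class MiniAnalysisChain {

    // Like TokenStream: returns one token per call, null when exhausted
    interface MiniTokenStream {
        String next();
    }

    // Like Tokenizer: the source of the chain, reads characters and groups them into tokens
    static class MiniWhitespaceTokenizer implements MiniTokenStream {
        private final String text;
        private int pos = 0;
        MiniWhitespaceTokenizer(String text) { this.text = text; }
        public String next() {
            while (pos < text.length() && Character.isWhitespace(text.charAt(pos))) pos++;
            if (pos >= text.length()) return null;
            int start = pos;
            while (pos < text.length() && !Character.isWhitespace(text.charAt(pos))) pos++;
            return text.substring(start, pos);
        }
    }

    // Like TokenFilter: wraps another stream and transforms the tokens it yields
    static abstract class MiniTokenFilter implements MiniTokenStream {
        protected final MiniTokenStream input;
        MiniTokenFilter(MiniTokenStream input) { this.input = input; }
    }

    static class MiniLowerCaseFilter extends MiniTokenFilter {
        MiniLowerCaseFilter(MiniTokenStream input) { super(input); }
        public String next() {
            String t = input.next();
            return t == null ? null : t.toLowerCase(Locale.ROOT);
        }
    }

    static class MiniStopFilter extends MiniTokenFilter {
        private final Set<String> stop;
        MiniStopFilter(MiniTokenStream input, Set<String> stop) { super(input); this.stop = stop; }
        public String next() {
            // Skip tokens until a non-stop word (or the end of the stream) appears
            for (String t = input.next(); t != null; t = input.next()) {
                if (!stop.contains(t)) return t;
            }
            return null;
        }
    }

    // Filters nest around the tokenizer, in the same spirit as
    // new StopFilter(new LowerCaseFilter(tokenizer), stopWords)
    static List<String> analyze(String text, Set<String> stopWords) {
        MiniTokenStream ts = new MiniStopFilter(
                new MiniLowerCaseFilter(new MiniWhitespaceTokenizer(text)), stopWords);
        List<String> out = new ArrayList<>();
        for (String t = ts.next(); t != null; t = ts.next()) out.add(t);
        return out;
    }

    public static void main(String[] args) {
        System.out.println(analyze("The Sign of the Four", Set.of("the", "of"))); // [sign, four]
    }
}
```

Because each filter only sees the stream it wraps, the wrapping order matters: lowercasing runs before stop-word removal here, so The is caught by the lowercase stop word the.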

The way Tokenizer and TokenFilter are organized in a Lucene Analyzer is shown in the figure below:

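Lucene ships with dozens of built-in Tokenizers, TokenFilters, and Analyzers. In practice we usually need only a few of them, such as StandardTokenizer (the standard tokenizer), WhitespaceTokenizer (splits on whitespace), LowerCaseFilter (converts tokens to lowercase), PorterStemFilter (extracts stems), and StandardAnalyzer, which combines StandardTokenizer with several TokenFilters. Plenty of material on these is available online, so I won't go into detail here.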
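The practical difference between whitespace-based and standard tokenization shows up as soon as the text contains punctuation. The sketch below contrasts two rough approximations in plain Java. `TokenizerComparison` and its methods are hypothetical, and the letter-or-digit rule is a simplification: the real StandardTokenizer follows a much richer grammar (since Lucene 3.1, the Unicode UAX #29 word-break rules).

```java
import java.util.ArrayList;
import java.util.List;

// Rough, dependency-free approximations of two tokenization strategies.
// These are NOT Lucene's WhitespaceTokenizer/StandardTokenizer; they only contrast the idea.
public class TokenizerComparison {

    // Whitespace-style: split only on whitespace, so punctuation stays attached to words
    static List<String> whitespaceTokens(String text) {
        List<String> out = new ArrayList<>();
        for (String t : text.trim().split("\\s+")) {
            if (!t.isEmpty()) out.add(t);
        }
        return out;
    }

    // Standard-style (simplified): keep runs of letters/digits, treat everything else as a separator
    static List<String> letterOrDigitTokens(String text) {
        List<String> out = new ArrayList<>();
        StringBuilder cur = new StringBuilder();
        for (char c : text.toCharArray()) {
            if (Character.isLetterOrDigit(c)) {
                cur.append(c);
            } else if (cur.length() > 0) {
                out.add(cur.toString());
                cur.setLength(0);
            }
        }
        if (cur.length() > 0) out.add(cur.toString());
        return out;
    }

    public static void main(String[] args) {
        String s = "Holmes, who first appeared in publication in 1887,";
        System.out.println(whitespaceTokens(s));    // [Holmes,, who, first, appeared, in, publication, in, 1887,]
        System.out.println(letterOrDigitTokens(s)); // [Holmes, who, first, appeared, in, publication, in, 1887]
    }
}
```

With whitespace splitting, "Holmes," and "1887," keep their trailing commas and would never match a query for Holmes or 1887, which is one reason StandardTokenizer is the more common default.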

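Sometimes the Analyzers built into Lucene do not meet our needs. The following example shows how to write a custom Analyzer that stems words, normalizes the input to lowercase, and applies a specified stop-word list: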

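```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.PorterStemFilter;
import org.apache.lucene.analysis.StopFilter;
import org.apache.lucene.analysis.Token;
import org.apache.lucene.analysis.TokenFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.standard.StandardTokenizer;

// Written against the Lucene 2.x API: TokenStream.next() and Token.termText()
// no longer exist in Lucene 3.0 and later.
public class PorterStemStopWordAnalyzer extends Analyzer {

    // Custom stop-word list (lowercase, so it must be applied after LowerCaseFilter)
    public static final String[] stopWords = { "and", "of", "the", "to", "is",
            "their", "can", "all", "i", "in" };

    public TokenStream tokenStream(String fieldName, Reader reader) {
        // 1. Split the character stream into tokens
        Tokenizer tokenizer = new StandardTokenizer(reader);
        // 2. Normalize every token to lowercase
        TokenFilter lowerCaseFilter = new LowerCaseFilter(tokenizer);
        // 3. Drop tokens that appear in the stop-word list
        TokenFilter stopFilter = new StopFilter(lowerCaseFilter, stopWords);
        // 4. Reduce each remaining token to its Porter stem
        TokenFilter stemFilter = new PorterStemFilter(stopFilter);
        return stemFilter;
    }

    public static void main(String[] args) throws IOException {
        Analyzer analyzer = new PorterStemStopWordAnalyzer();
        String text = "Holmes, who first appeared in publication in 1887, was featured in four novels and 56 short stories. The first story, A Study in Scarlet, appeared in Beeton's Christmas Annual in 1887 and the second, The Sign of the Four, in Lippincott's Monthly Magazine in 1890. The character grew tremendously in popularity with the beginning of the first series of short stories in Strand Magazine in 1891; further series of short stories and two novels published in serial form appeared between then and 1927. The stories cover a period from around 1880 up to 1914.";
        Reader reader = new StringReader(text);
        TokenStream ts = analyzer.tokenStream(null, reader);
        // Pull tokens one at a time; next() returns null once the stream is exhausted
        Token token = ts.next();
        while (token != null) {
            System.out.println(token.termText());
            token = ts.next();
        }
        ts.close();
    }
}
```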

From the output we can see that:

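- StandardTokenizer splits the text into words at whitespace and punctuation
- LowerCaseFilter converts all input to lowercase
- StopFilter removes the stop words in the text, for example the "and" in "appeared between then and 1927"
- PorterStemFilter reduces words to their stems, for example both story and stories become stori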
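Why do story and stories end up as the same term? The first step of the Porter algorithm already explains it. The sketch below implements only Step 1a (plural suffixes, where ies becomes i) and Step 1c (a final y becomes i when the preceding stem contains a vowel), following Porter's definitions of consonant and vowel. Steps 1b and 2 to 5 are omitted, so `PorterStep1Fragment` is a hypothetical illustration, not Lucene's `PorterStemFilter`. For these two particular words the omitted steps change nothing, so the fragment reaches the same stem stori.

```java
// Fragment of the Porter stemming algorithm: only Step 1a and Step 1c.
// Steps 1b and 2-5 are omitted, so this is NOT a full stemmer and NOT Lucene's PorterStemFilter.
public class PorterStep1Fragment {

    // Porter's definition: a consonant is a letter other than a, e, i, o, u,
    // and other than a 'y' preceded by a consonant.
    static boolean isConsonant(String w, int i) {
        char c = w.charAt(i);
        if ("aeiou".indexOf(c) >= 0) return false;
        if (c == 'y') return i == 0 || !isConsonant(w, i - 1);
        return true;
    }

    static boolean containsVowel(String stem) {
        for (int i = 0; i < stem.length(); i++) {
            if (!isConsonant(stem, i)) return true;
        }
        return false;
    }

    // Step 1a: sses -> ss, ies -> i, ss -> ss, s -> (removed)
    static String step1a(String w) {
        if (w.endsWith("sses") || w.endsWith("ies")) return w.substring(0, w.length() - 2);
        if (w.endsWith("ss")) return w;
        if (w.endsWith("s")) return w.substring(0, w.length() - 1);
        return w;
    }

    // Step 1c: (*v*) y -> i, i.e. replace a final y when the rest of the word contains a vowel
    static String step1c(String w) {
        if (w.endsWith("y") && containsVowel(w.substring(0, w.length() - 1))) {
            return w.substring(0, w.length() - 1) + "i";
        }
        return w;
    }

    static String stemFragment(String w) {
        return step1c(step1a(w));
    }

    public static void main(String[] args) {
        System.out.println(stemFragment("stories")); // stori  (Step 1a: ies -> i)
        System.out.println(stemFragment("story"));   // stori  (Step 1c: "stor" has a vowel, y -> i)
        System.out.println(stemFragment("sky"));     // sky    ("sk" has no vowel, y is kept)
    }
}
```

The stem stori is not an English word, and it does not need to be: stemming only has to map related forms to the same index term, and both the indexed text and the query pass through the same Analyzer.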

In a later article, I will cover how to add phrase detection and synonym detection to this Analyzer.