Automatically deploying an MR job's dependency jars to HDFS with Maven's antrun plugin

Background

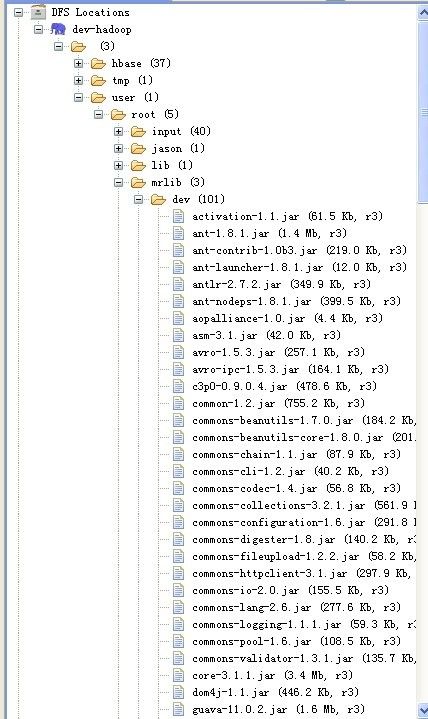

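When an MR job is submitted remotely, all the jars it depends on have to be published to HDFS and also added to the Hadoop job's classpath.

Uploading them by hand every time is too tedious. The project is already built with Maven, so the plan is to have a script upload all the jars to the remote HDFS during Maven's package phase.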

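Writing the Ant script

The script uses Hadoop's Ant plugin (hadoop-ant). Put plainly, it is just a handful of utility tasks that call FsShell. There is no documentation, so you have to read the plugin's source code while writing the script. Heh.

Key point: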

<hdfs cmd="rm" args="@{mapred.lib.dir}/*.jar" conf="@{hadoop.conf.dir}">

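cmd: the command to run; see hadoop fs for the available commands

args: the command's arguments, separated by commas

conf: the directory that contains core-site.xml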
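
As a minimal sketch of how the three attributes combine, the fragment below lists a directory and then uploads a single jar. The target name and `lib/example.jar` are hypothetical, the paths are borrowed from the dev settings in build.properties, and the `hdfs` task is assumed to have been registered already through the `org/apache/hadoop/ant/antlib.xml` taskdef used in build.xml.

```xml
<!-- Hypothetical target; assumes the hadoop-ant taskdef has already been loaded. -->
<target name="hdfs_example">
  <!-- One argument; roughly what "hadoop fs -ls /user/root/mrlib/dev" does. -->
  <hdfs cmd="ls" args="/user/root/mrlib/dev" conf="src/main/conf/dev" />
  <!-- Two arguments separated by a comma: local source first, HDFS destination second. -->
  <hdfs cmd="copyFromLocal" args="lib/example.jar,/user/root/mrlib/dev" conf="src/main/conf/dev" />
</target>
```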

build.xml

<?xml version="1.0" encoding="UTF-8"?>

<!-- ======================================== Project build file ====================================================================== -->

<project>

<import file="build.properties.xml" />

<!--

<path id="dev_classpath">-->

<!--

<pathelement location="${resource.dir}"/>

<pathelement location="${classes.dir}"/>-->

<!--

<fileset dir="${dev.ant.contrib.dir}">

<include name="**/*.jar" />

</fileset>

<fileset dir="${dev.ant.contrib.lib.dir}">

<include name="**/*.jar" />

</fileset>

<fileset dir="${dev.hadoop.home}">

<include name="**/*.jar" />

</fileset>

<fileset dir="${dev.hadoop.home.lib}">

<include name="**/*.jar" />

</fileset>

</path>-->

<target name="init">

<echo message="init copy and generate mapred libs">

</echo>

<taskdef resource="org/apache/maven/artifact/ant/antlib.xml">

</taskdef>

<taskdef resource="net/sf/antcontrib/antcontrib.properties">

</taskdef>

<!--<echo message="set classpath success"/>-->

<taskdef resource="net/sf/antcontrib/antlib.xml">

</taskdef>

<taskdef resource="org/apache/hadoop/ant/antlib.xml">

</taskdef>

<echo message="import external antcontrib and hadoop ant extension task success">

</echo>

<!--

<delete file="${resource.dir}/${mapred.lib.outfile}"/>

<echo message="delete ${resource.dir}/${mapred.lib.outfile} success">

</echo>

<touch file="${resource.dir}/${mapred.lib.outfile}">

</touch>

<echo message="create ${resource.dir}/${mapred.lib.outfile} success">

</echo>-->

</target>

<target name="dev_copy_libs">

<foreach param="local.file" target="dev_copy_lib">

<fileset dir="${lib.dir}" casesensitive="yes">

<exclude name="**/hadoop*.jar" />

<exclude name="**/hbase*.jar" />

<exclude name="**/zookeeper*.jar" />

</fileset>

<fileset dir="target" casesensitive="yes">

<include name="${project.jar}" />

</fileset>

</foreach>

</target>

<target name="dev_copy_lib">

<hdfs cmd="copyFromLocal" args="${local.file},${dev.mapred.lib.dir}" conf="${dev.hadoop.conf.dir}">

</hdfs>

<echo message="copy ${local.file} to remote HDFS directory ${dev.mapred.lib.dir} success">

</echo>

</target>

<macrodef name="macro_upload_mapred_lib" description="upload mapred lib">

<attribute name="hadoop.conf.dir" />

<attribute name="mapred.lib.dir" />

<sequential>

<property name="mapred.lib.dir" value="@{mapred.lib.dir}">

</property>

<echo message="hadoop conf dir: @{hadoop.conf.dir}">

</echo>

<hdfs cmd="rm" args="@{mapred.lib.dir}/*.jar" conf="@{hadoop.conf.dir}">

</hdfs>

<echo message="removed *.jar from remote dir @{mapred.lib.dir}">

</echo>

</sequential>

</macrodef>

<target name="dev_upload_jars" depends="init">

<macro_upload_mapred_lib hadoop.conf.dir="${dev.hadoop.conf.dir}" mapred.lib.dir="${dev.mapred.lib.dir}" />

<echo message="----------------------------------------">

</echo>

<echo message="begin to copy libs to ${dev.mapred.lib.dir}, excluding hadoop*, hbase*, zookeeper*">

</echo>

<antcall target="dev_copy_libs">

</antcall>

<echo message="all files have been copied to ${dev.mapred.lib.dir}">

</echo>

<echo message="----------------------------------------">

</echo>

</target>

</project>
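
The build.xml above defines only the `dev_` targets, while build.properties also carries `test` and `testout` settings and pom.xml invokes `${envcfg.dir}_upload_jars`. One possible way to cover another environment, sketched here as an assumption rather than the original setup, is to add matching `test_` targets inside the `<project>` element. The three target names are hypothetical; everything else reuses tasks, properties and the macro already defined above.

```xml
<!-- Sketch only: test_* targets mirroring the dev_* ones; the target names are hypothetical. -->
<target name="test_copy_libs">
  <!-- Call test_copy_lib once per jar, with the jar's path in ${local.file}. -->
  <foreach param="local.file" target="test_copy_lib">
    <fileset dir="${lib.dir}" casesensitive="yes">
      <exclude name="**/hadoop*.jar" />
      <exclude name="**/hbase*.jar" />
      <exclude name="**/zookeeper*.jar" />
    </fileset>
    <fileset dir="target" casesensitive="yes">
      <include name="${project.jar}" />
    </fileset>
  </foreach>
</target>

<target name="test_copy_lib">
  <!-- Same upload as dev_copy_lib, but driven by the test.* properties. -->
  <hdfs cmd="copyFromLocal" args="${local.file},${test.mapred.lib.dir}" conf="${test.hadoop.conf.dir}" />
</target>

<target name="test_upload_jars" depends="init">
  <!-- Clear the old jars first, then upload the current ones. -->
  <macro_upload_mapred_lib hadoop.conf.dir="${test.hadoop.conf.dir}" mapred.lib.dir="${test.mapred.lib.dir}" />
  <antcall target="test_copy_libs" />
</target>
```

Duplicating the targets keeps the sketch close to the existing file; passing the environment prefix as a parameter would be a tidier alternative, at the cost of restructuring the dev targets as well.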

build.properties.xml

<?xml version="1.0" encoding="UTF-8"?>

<project>

<property file="build.properties"></property>

<property name="classes.dir" value="target/classes"></property>

<property name="lib.dir" value="lib"></property>

<property name="resource.dir" value="src/main/resource"></property>

<property name="mapred.lib.outfile" value="mapred_lib.properties"></property>

<property name="lib.dir" value="lib"></property>

<property name="project.jar" value="${project.name}-${project.version}.jar"/>

<!--

<property name="dev.ant.contrib.dir" value="${dev.ant.contrib.dir}"></property>

<property name="dev.ant.contrib.lib.dir" value="${dev.ant.contrib.dir}/lib"></property>

<property name="dev.hadoop.home" value="${dev.hadoop.home}"></property>

<property name="dev.hadoop.home.lib" value="${dev.hadoop.home}/lib"></property>-->

</project>

build.properties

src.conf.dir=src/main/conf

target.dir=target

#dev

dev.mapred.lib.dir=/user/root/mrlib/dev

dev.hadoop.conf.dir=${src.conf.dir}/dev

#test

test.mapred.lib.dir=/user/mrlib/test

test.hadoop.conf.dir=${src.conf.dir}/test

#testout

testout.mapred.lib.dir=/user/mrlib/testout

testout.hadoop.conf.dir=${src.conf.dir}/testout

Configure pom.xml. The Ant script relies on several third-party Ant tasks, so they are added as dependencies of the antrun plugin.

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-antrun-plugin</artifactId>

<version>1.7</version>

<executions>

<execution>

<id>upload mapred jars</id>

<phase>package</phase>

<configuration>

<target>

<ant antfile="${basedir}/build.xml" inheritRefs="true">

<target name="${envcfg.dir}_upload_jars" />

</ant>

</target>

</configuration>

<goals>

<goal>run</goal>

</goals>

</execution>

</executions>

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase</artifactId>

<version>0.94.1</version>

<exclusions>

<exclusion>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>ant-contrib</groupId>

<artifactId>ant-contrib</artifactId>

<version>1.0b3</version>

<exclusions>

<exclusion>

<groupId>ant</groupId>

<artifactId>ant</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.ant</groupId>

<artifactId>ant-nodeps</artifactId>

<version>1.8.1</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-core</artifactId>

<version>${hadoop.version}-modified</version>

<optional>true</optional>

<exclusions>

<exclusion>

<groupId>hsqldb</groupId>

<artifactId>hsqldb</artifactId>

</exclusion>

<exclusion>

<groupId>net.sf.kosmosfs</groupId>

<artifactId>kfs</artifactId>

</exclusion>

<exclusion>

<groupId>org.eclipse.jdt</groupId>

<artifactId>core</artifactId>

</exclusion>

<exclusion>

<groupId>net.java.dev.jets3t</groupId>

<artifactId>jets3t</artifactId>

</exclusion>

<exclusion>

<groupId>oro</groupId>

<artifactId>oro</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-ant</artifactId>

<version>${hadoop.version}</version>

<optional>true</optional>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.maven</groupId>

<artifactId>maven-artifact-ant</artifactId>

<version>2.0.4</version>

</dependency>

</dependencies>

</plugin>
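
The plugin configuration picks the Ant target from the `envcfg.dir` property, but where that property comes from is not shown. A common way to supply such a value, given here purely as an assumption about the setup, is a set of Maven profiles; the profile ids and the default activation below are hypothetical.

```xml
<!-- Sketch only: profile ids and the default are assumptions, not taken from the original pom. -->
<profiles>
  <profile>
    <id>dev</id>
    <activation>
      <activeByDefault>true</activeByDefault>
    </activation>
    <properties>
      <!-- Resolves the Ant target name to dev_upload_jars. -->
      <envcfg.dir>dev</envcfg.dir>
    </properties>
  </profile>
  <profile>
    <id>test</id>
    <properties>
      <!-- Resolves the Ant target name to test_upload_jars. -->
      <envcfg.dir>test</envcfg.dir>
    </properties>
  </profile>
</profiles>
```

With profiles like these, selecting the test profile on the command line would make the plugin call a target named test_upload_jars, so build.xml has to provide a target of that name for every environment that is used.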

Run:

Run mvn install and you will see all the jars published to HDFS. Hoho.