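The libraries in ./lib/native of the official Hadoop 2.3.0 tarball are built for 32-bit systems only. On a 64-bit OS they cause errors and Hadoop fails to start, so Hadoop has to be recompiled on a 64-bit machine.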
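Before rebuilding, you can check whether the bundled libraries really are 32-bit. The `file` command reports this directly. The sketch below does the same check without extra tools. It reads the ELF class byte at offset 4 of the ELF header, where 1 means 32-bit and 2 means 64-bit. The function name elf_class is illustrative, and $HADOOP_HOME in the usage comment is an assumed install location.

```shell
#!/usr/bin/env bash
# Print "32" or "64" for an ELF file by reading its EI_CLASS byte
# (offset 4 of the ELF header: 1 = 32-bit, 2 = 64-bit).
elf_class() {
  local f="$1" magic cls
  # The first four bytes of every ELF file are 0x7f 'E' 'L' 'F'.
  magic=$(od -An -tx1 -N4 "$f" | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "not-elf"
    return 1
  fi
  # Read the single byte at offset 4 as an unsigned decimal.
  cls=$(od -An -tu1 -j4 -N1 "$f" | tr -d ' \n')
  case "$cls" in
    1) echo "32" ;;
    2) echo "64" ;;
    *) echo "unknown" ;;
  esac
}

# Example (HADOOP_HOME is an assumed install location):
#   for lib in "$HADOOP_HOME"/lib/native/*.so*; do
#     echo "$lib: $(elf_class "$lib")"
#   done
```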

1. Environment

Hadoop 2.3.0

Ubuntu 12.04 (64-bit)

2. Follow these steps to recompile Hadoop

First install the build dependencies:

$ sudo apt-get install g++ autoconf automake libtool make cmake zlib1g-dev pkg-config libssl-dev

a. Install Maven 3

b. Install Oracle Java

c. Install protobuf 2.5.0

d. Install Ant

e. Download the Hadoop source tarball and extract it

f. Enter the Hadoop source directory and run:

$ mvn package -Pdist,native -DskipTests -Dtar
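Before you start the build, it helps to confirm that the tools from steps a to d are actually on PATH. The sketch below assumes bash. The function name missing_tools and the tool list in the usage comment are illustrative; neither is part of the Hadoop build itself.

```shell
#!/usr/bin/env bash
# Print each named tool that is not found on PATH.
# Returns 0 if all tools are present, 1 if any is missing.
missing_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example, using the tools from the steps above:
#   missing_tools g++ make cmake mvn java protoc ant || echo "install the missing tools first"
```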

--------------------------------------------------------------------------------------------------------------

The following steps were used on CentOS 6.5.

a. Install Maven 3

#wget http://apache.fayea.com/apache-mirror/maven/maven-3/3.0.5/binaries/apache-maven-3.0.5-bin.tar.gz

# tar -xzf apache-maven-3.0.5-bin.tar.gz

# mv apache-maven-3.0.5 /usr/local/maven

Edit /etc/profile and add:

MAVEN_HOME=/usr/local/maven

export MAVEN_HOME

export PATH=${PATH}:${MAVEN_HOME}/bin

#source /etc/profile

#mvn -version
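This guide edits /etc/profile by hand here and appends to it with echo ... >> /etc/profile in later steps, so re-running the setup leaves duplicate export lines behind. The sketch below avoids that. It uses a helper with the hypothetical name append_once, which appends a line only when an identical line is not already in the file. It assumes you run it as root when the target is /etc/profile.

```shell
#!/usr/bin/env bash
# Append LINE to FILE only if FILE does not already contain that exact line.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -q quiet, -x match whole line, -F fixed string (no regex), -- end of options
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (as root), mirroring the Maven settings above:
#   append_once /etc/profile 'export MAVEN_HOME=/usr/local/maven'
#   append_once /etc/profile 'export PATH=${PATH}:${MAVEN_HOME}/bin'
```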

b. Install Ant

#yum install ant

#ant

Error: Could not find or load main class org.apache.tools.ant.launch.Launcher

Several search results suggest adding ANT_HOME to /etc/profile. I tried that, but it did not help.

Running ant --execdebug revealed the actual error.

The solution is simple: create the directory that the debug output refers to.

#mkdir /usr/lib/jvm-exports/jdk1.7.0_51
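Here the root cause was a missing directory reported by ant --execdebug. The same "Could not find or load main class org.apache.tools.ant.launch.Launcher" error can have another cause worth ruling out. ANT_HOME may point to a tree whose lib directory lacks ant-launcher.jar, the jar that contains that launcher class. The sketch below uses a helper with the hypothetical name check_ant_launcher, and it checks only for that file.

```shell
#!/usr/bin/env bash
# Check that an Ant home directory (argument, or $ANT_HOME) contains
# lib/ant-launcher.jar, which holds org.apache.tools.ant.launch.Launcher.
check_ant_launcher() {
  local home="${1:-$ANT_HOME}"
  if [ -z "$home" ]; then
    echo "ANT_HOME is not set"
    return 1
  fi
  if [ -f "$home/lib/ant-launcher.jar" ]; then
    echo "ok: $home/lib/ant-launcher.jar"
  else
    echo "missing: $home/lib/ant-launcher.jar"
    return 1
  fi
}

# Example:
#   check_ant_launcher            # uses $ANT_HOME
#   check_ant_launcher /usr/local/ant
```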

Installing Ant through yum gives Ant 1.7.1, which leads to errors when compiling Hadoop, so I installed Ant 1.9.3 from the binary tarball instead:

#yum remove ant

#tar xzf apache-ant-1.9.3-bin.tar.gz

#mv apache-ant-1.9.3 ant

#mv ant /usr/local/

# echo 'export ANT_HOME=/usr/local/ant'>>/etc/profile

# echo 'export PATH=$PATH:$ANT_HOME/bin'>>/etc/profile

# echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$ANT_HOME/lib'>>/etc/profile

# source /etc/profile
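To confirm that the shell now picks up Ant 1.9.3 and not the yum-installed 1.7.1, compare the version reported by ant -version against a minimum. The sketch below assumes GNU sort, which provides sort -V. The helper names are hypothetical. The 1.9.3 threshold is simply the version used in this guide, not a documented minimum.

```shell
#!/usr/bin/env bash
# Extract the version number from `ant -version` output, e.g.
# "Apache Ant(TM) version 1.9.3 compiled on ..." -> "1.9.3".
parse_ant_version() {
  printf '%s\n' "$1" | sed -n 's/.*version \([0-9][0-9.]*\).*/\1/p' | head -n1
}

# Succeed if version $1 >= version $2 (relies on GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Usage:
#   v=$(parse_ant_version "$(ant -version 2>&1)")
#   version_ge "$v" 1.9.3 || echo "ant $v is older than 1.9.3; check PATH and ANT_HOME"
```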

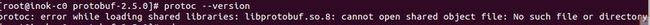

c. Install protobuf

#yum install protobuf

This installs protobuf 2.3.0, which does not work because the build needs protobuf 2.5.0. Check the installed version with:

#protoc --version
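protoc --version prints a line such as "libprotoc 2.5.0". The build expects 2.5.0 exactly, so a newer release is not acceptable either, and the check should test for equality, not a minimum. The sketch below uses helpers with the hypothetical names protoc_version and check_protoc.

```shell
#!/usr/bin/env bash
REQUIRED_PROTOC=2.5.0

# Extract the version from `protoc --version` output such as "libprotoc 2.5.0".
protoc_version() {
  printf '%s\n' "$1" | awk '{print $2}'
}

# Compare the reported version with the required one (exact match).
check_protoc() {
  local got
  got=$(protoc_version "$1")
  if [ "$got" = "$REQUIRED_PROTOC" ]; then
    echo "ok: protoc $got"
  else
    echo "mismatch: found '${got:-none}', need $REQUIRED_PROTOC"
    return 1
  fi
}

# Usage:
#   check_protoc "$(protoc --version 2>/dev/null)"
```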

Install protobuf 2.5.0 from source instead:

#tar xzf protobuf-2.5.0.tar.gz

#cd protobuf-2.5.0

#./configure

#make

#make install (requires root privileges)

However, an error comes up afterwards.

Solution:

# echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib'>>/etc/profile

# source /etc/profile
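After sourcing /etc/profile, you can check that /usr/local/lib is really on LD_LIBRARY_PATH in the current shell. It must appear as a whole colon-separated entry, because a plain substring match would also accept /usr/local/lib64. The sketch below uses a helper with the hypothetical name path_has. A common alternative to LD_LIBRARY_PATH is to list /usr/local/lib in a file under /etc/ld.so.conf.d/ and then run ldconfig, but that is not the approach taken here.

```shell
#!/usr/bin/env bash
# Succeed if directory $2 is an entry in the colon-separated list $1.
path_has() {
  # Wrapping both sides in colons makes the match exact per entry.
  case ":$1:" in
    *":$2:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   path_has "$LD_LIBRARY_PATH" /usr/local/lib || echo "/usr/local/lib is not on LD_LIBRARY_PATH"
```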

d. Install FindBugs

#tar xzf findbugs-2.0.3.tar.gz

#mv findbugs-2.0.3 findbugs

#mv findbugs /usr/local/

#echo 'export FINDBUGS_HOME=/usr/local/findbugs'>>/etc/profile

# echo 'export PATH=$PATH:$FINDBUGS_HOME/bin'>>/etc/profile

# source /etc/profile
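The Maven, Ant and FindBugs steps each export a *_HOME variable and put its bin directory on PATH. Before you compile, a quick check can confirm that each variable is set and points somewhere sensible. The sketch below uses a helper with the hypothetical name check_homes. It treats "has a bin/ subdirectory" as a rough sanity test, which is an assumption of the sketch and not an official requirement.

```shell
#!/usr/bin/env bash
# For each variable name given, check it is set and that $VAR/bin exists.
check_homes() {
  local var dir rc=0
  for var in "$@"; do
    # Only accept plain variable names, since the name is passed to eval.
    case "$var" in
      ''|*[!A-Za-z0-9_]*) echo "invalid variable name: $var"; rc=1; continue ;;
    esac
    eval "dir=\${$var-}"
    if [ -z "$dir" ]; then
      echo "$var is not set"
      rc=1
    elif [ ! -d "$dir/bin" ]; then
      echo "$var=$dir has no bin/ directory"
      rc=1
    fi
  done
  return "$rc"
}

# Usage, after `source /etc/profile`:
#   check_homes MAVEN_HOME ANT_HOME FINDBUGS_HOME
```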

e. Compile Hadoop

#tar -xzf hadoop-2.3.0-src.tar.gz

#cd hadoop-2.3.0-src

#mvn package -Pdist,native -DskipTests -Dtar

Once the build succeeds, copy all files in hadoop-dist/target/hadoop-2.3.0/lib/native to the corresponding lib/native directory of the Hadoop installation on every cluster node.
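On a cluster with more than a few nodes, the copy is easier to script. The sketch below makes several hypothetical assumptions: passwordless SSH to every node, a host list file with one hostname per line, and the same Hadoop install path on every node. The function name push_native_libs is illustrative. Setting DRY_RUN prints the scp commands instead of running them.

```shell
#!/usr/bin/env bash
# Copy the built native libraries to every host listed in a file.
#   $1: host list file (one hostname per line; blank lines and # comments skipped)
#   $2: Hadoop install directory on the nodes (assumed identical everywhere)
#   $3: optional source dir (defaults to the build output path)
push_native_libs() {
  local hosts_file="$1" remote_home="$2"
  local src="${3:-hadoop-dist/target/hadoop-2.3.0/lib/native}"
  local host
  while IFS= read -r host || [ -n "$host" ]; do
    case "$host" in ''|'#'*) continue ;; esac
    # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is non-empty.
    # </dev/null stops scp/ssh from reading the rest of the host list.
    ${DRY_RUN:+echo} scp "$src"/* "$host:$remote_home/lib/native/" </dev/null
  done < "$hosts_file"
}

# Usage, from the hadoop-2.3.0-src directory:
#   DRY_RUN=1 push_native_libs hosts.txt /usr/local/hadoop   # preview
#   push_native_libs hosts.txt /usr/local/hadoop             # copy
```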

References:

http://www.csrdu.org/nauman/2014/01/23/geting-started-with-hadoop-2-2-0-building/

http://www.debugo.com/hadoop2-3-setup/

http://blog.csdn.net/cruise_h/article/details/18709969