A Hadoop installation error: /tmp/hadoop-grid/mapred/system/jobtracker.info could only be replicated to 0 nodes

Symptoms:

--The processes on every node look normal:

[grid@gc logs]$ /usr/java/jdk1.6.0_18/bin/jps

4434 JobTracker

4346 SecondaryNameNode

4194 NameNode

8291 Jps

[grid@rac1 conf]$ /usr/java/jdk1.6.0_18/bin/jps

32423 Jps

29224 DataNode

29348 TaskTracker

[grid@rac2 logs]$ /usr/java/jdk1.6.0_18/bin/jps

26358 DataNode

26457 TaskTracker

1210 Jps
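The jps listings only prove that the expected daemons are running; as the JobTracker error shows, that is not the same as the DataNodes having registered with the NameNode. A minimal Python sketch of this first check, assuming the Hadoop 1.x daemon names seen above and a simple master/slave role split (`parse_jps`, `missing_daemons` and `EXPECTED` are hypothetical helpers, not part of Hadoop):

```python
# Hypothetical helper: compare `jps` output against the daemons each role should run.
# Daemon names are the Hadoop 1.x ones visible in the jps listings above.
EXPECTED = {
    "master": {"NameNode", "SecondaryNameNode", "JobTracker"},
    "slave": {"DataNode", "TaskTracker"},
}


def parse_jps(output):
    """Return the main-class names from `jps` lines such as '4434 JobTracker'."""
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(role, output):
    """Return the expected daemons for `role` that are absent from the jps output."""
    return EXPECTED[role] - parse_jps(output)


master_jps = """4434 JobTracker
4346 SecondaryNameNode
4194 NameNode
8291 Jps"""

print(missing_daemons("master", master_jps))  # set()
```

An empty set only means the processes are up. In this case every node passed this check and HDFS still had no usable DataNode, so the logs are the next place to look.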

--JobTracker log on the master node

[grid@gc logs]$ tail -100f hadoop-grid-jobtracker-gc.localdomain.log

2012-11-23 15:49:26,973 WARN org.apache.hadoop.hdfs.DFSClient: Error Recovery for block null bad datanode[0] nodes == null

2012-11-23 15:49:26,973 WARN org.apache.hadoop.hdfs.DFSClient: Could not get block locations. Source file "/tmp/hadoop-grid/mapred/system/jobtracker.info" - Aborting...

2012-11-23 15:49:26,974 WARN org.apache.hadoop.mapred.JobTracker: Writing to file hdfs://gc.localdomain:9000/tmp/hadoop-grid/mapred/system/jobtracker.info failed!

2012-11-23 15:49:26,975 WARN org.apache.hadoop.mapred.JobTracker: FileSystem is not ready yet!

2012-11-23 15:49:26,977 WARN org.apache.hadoop.mapred.JobTracker: Failed to initialize recovery manager.

org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /tmp/hadoop-grid/mapred/system/jobtracker.info could only be replicated to 0 nodes, instead of 1

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:1271)

at org.apache.hadoop.hdfs.server.namenode.NameNode.addBlock(NameNode.java:422)

at sun.reflect.GeneratedMethodAccessor8.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:508)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:959)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:955)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:396)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:953)

at org.apache.hadoop.ipc.Client.call(Client.java:740)

at org.apache.hadoop.ipc.RPC$Invoker.invoke(RPC.java:220)

at $Proxy4.addBlock(Unknown Source)

at sun.reflect.GeneratedMethodAccessor8.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)

at java.lang.reflect.Method.invoke(Method.java:597)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:82)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:59)

at $Proxy4.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.locateFollowingBlock(DFSClient.java:2937)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.nextBlockOutputStream(DFSClient.java:2819)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream.access$2000(DFSClient.java:2102)

at org.apache.hadoop.hdfs.DFSClient$DFSOutputStream$DataStreamer.run(DFSClient.java:2288)

--Log on slave node 1

[grid@rac1 logs]$ more hadoop-grid-datanode-rac1.localdomain.log

2012-11-23 15:33:28,815 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 7 time(s).

2012-11-23 15:33:29,817 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 8 time(s).

2012-11-23 15:33:30,818 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 9 time(s).

2012-11-23 15:33:30,819 INFO org.apache.hadoop.ipc.RPC: Server at /192.168.2.101:9000 not available yet, Zzzzz...

2012-11-23 15:33:32,820 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 0 time(s).

2012-11-23 15:33:33,821 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 1 time(s).

--Log on slave node 2

[grid@rac2 logs]$ more hadoop-grid-datanode-rac2.localdomain.log

2012-11-23 15:34:19,661 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 9 time(s).

2012-11-23 15:34:19,663 INFO org.apache.hadoop.ipc.RPC: Server at /192.168.2.102:9001 not available yet, Zzzzz...

2012-11-23 15:34:21,665 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 0 time(s).

2012-11-23 15:34:22,666 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 1 time(s).

2012-11-23 15:34:23,667 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 2 time(s).

2012-11-23 15:34:24,674 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 3 time(s).

2012-11-23 15:34:25,683 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 4 time(s).

2012-11-23 15:34:26,685 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 5 time(s).

2012-11-23 15:34:27,690 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 6 time(s).

2012-11-23 15:34:28,703 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.102:9001. Already tried 7 time(s).
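Both slave logs show the DataNode retrying the same address over and over without ever connecting. A minimal Python sketch that tallies which host:port a log keeps retrying, so it can be compared with the master address in fs.default.name; the regex is written against the log lines above, and `retry_targets` is a hypothetical helper:

```python
import re
from collections import Counter

# Matches Hadoop IPC client retry lines such as
# "Retrying connect to server: /192.168.2.101:9000. Already tried 7 time(s)."
# The optional "name/" prefix covers Java's InetSocketAddress "host/ip:port" form.
RETRY_RE = re.compile(r"Retrying connect to server: (?:[\w.\-]*/)?([\w.\-]+):(\d+)\.")


def retry_targets(log_text):
    """Count how many retry lines point at each host:port."""
    counts = Counter()
    for line in log_text.splitlines():
        match = RETRY_RE.search(line)
        if match:
            counts[f"{match.group(1)}:{match.group(2)}"] += 1
    return counts


rac1_log = """2012-11-23 15:33:28,815 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 7 time(s).
2012-11-23 15:33:30,819 INFO org.apache.hadoop.ipc.RPC: Server at /192.168.2.101:9000 not available yet, Zzzzz...
2012-11-23 15:33:32,820 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: /192.168.2.101:9000. Already tried 0 time(s)."""

print(retry_targets(rac1_log))  # Counter({'192.168.2.101:9000': 2})
```

If the address that dominates the count is not the master, the DataNode is dialling the wrong machine.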

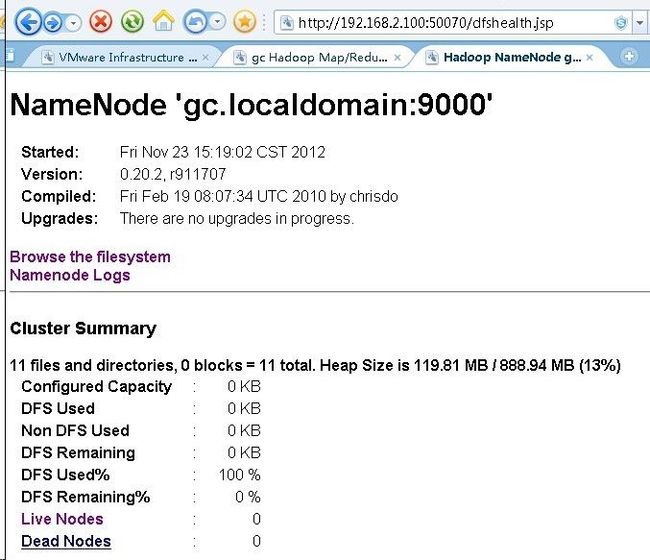

jobtracker监控界面:

Cause:

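The problem was the core-site.xml and mapred-site.xml configuration on the two slave nodes.

I had misunderstood the installation guide and believed that the IP address or hostname in core-site.xml and mapred-site.xml should be each machine's own IP or hostname.

So I had configured core-site.xml and mapred-site.xml on both slave nodes with their own IPs. As a result, the DataNodes kept retrying connections to addresses where nothing answered (see the slave logs above) and never registered with the NameNode, which therefore had no DataNode to write jobtracker.info to: "could only be replicated to 0 nodes, instead of 1".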

All of them should point to the master's IP or hostname instead, as follows:

--core-site.xml and mapred-site.xml, identical on the master and both slave nodes

[grid@gc conf]$ cat core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://gc:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/grid/hadoop/tmp</value>

</property>

</configuration>

[grid@gc conf]$ cat mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>gc:9001</value>

</property>

</configuration>

After restarting Hadoop, everything ran normally.
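Since the fix was simply to make both files on every node name the master, the check is easy to automate. A minimal Python sketch, assuming the files are available as text and that the master is referred to by the same name used in the configs (`gc`); `read_hadoop_conf`, `host_of` and `check_master_refs` are hypothetical helpers, and the comparison is a plain string match, so it will not recognise an IP address and a hostname that refer to the same machine:

```python
import xml.etree.ElementTree as ET

# The master's files from this post; the slaves should carry identical copies.
CORE_SITE = """<?xml version="1.0"?>
<configuration>
  <property><name>fs.default.name</name><value>hdfs://gc:9000</value></property>
  <property><name>hadoop.tmp.dir</name><value>/home/grid/hadoop/tmp</value></property>
</configuration>"""

MAPRED_SITE = """<?xml version="1.0"?>
<configuration>
  <property><name>mapred.job.tracker</name><value>gc:9001</value></property>
</configuration>"""


def read_hadoop_conf(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def host_of(value):
    """Extract the host from values like 'hdfs://gc:9000' or 'gc:9001'."""
    if "://" in value:
        value = value.split("://", 1)[1]
    return value.split("/", 1)[0].rsplit(":", 1)[0]


def check_master_refs(core_xml, mapred_xml, master):
    """List the settings in a node's config that do not name `master` as their host."""
    problems = []
    checks = (
        ("fs.default.name", read_hadoop_conf(core_xml)),
        ("mapred.job.tracker", read_hadoop_conf(mapred_xml)),
    )
    for key, conf in checks:
        value = conf.get(key)
        if value is None:
            problems.append(f"{key} is not set")
        elif host_of(value) != master:
            problems.append(f"{key}={value} does not point at master {master!r}")
    return problems


print(check_master_refs(CORE_SITE, MAPRED_SITE, "gc"))  # []

# Hypothetical misconfigured slave: core-site.xml names the slave itself.
bad_core = CORE_SITE.replace("hdfs://gc:9000", "hdfs://rac1:9000")
print(check_master_refs(bad_core, MAPRED_SITE, "gc"))
# ["fs.default.name=hdfs://rac1:9000 does not point at master 'gc'"]
```

Running it against the files copied from each slave would have flagged the mistake described above before the cluster was started.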

Forum thread about this problem: http://f.dataguru.cn/thread-32858-1-1.html

Summary:

If something goes wrong while installing and configuring Hadoop, check the following first:

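1. Hostname mapping for every node (master, slave1, slave2, ...), e.g. in /etc/hosts;

2. Passwordless SSH trust from the master to each slave: master → slave1, master → slave2;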

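3. The Hadoop configuration files are correct on every server, the installation directory is the same everywhere, and the tmp and data directories are consistent as well;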

4. The firewall is stopped: /etc/init.d/iptables stop
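Items 1 and 4 can be spot-checked from each slave. A minimal Python sketch, assuming the node mapping lives in an /etc/hosts-style file; the IP addresses in the example are made-up documentation placeholders (192.0.2.x), and `parse_hosts`, `missing_hosts` and `port_open` are hypothetical helpers. A failed connection can mean either a firewall block or a daemon that is not listening, so it narrows the problem rather than naming it:

```python
import socket


def parse_hosts(text):
    """Map each hostname or alias in /etc/hosts-style text to its IP (first entry wins)."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)
    return mapping


def missing_hosts(text, required):
    """Return the required node names that have no mapping in the hosts text."""
    mapping = parse_hosts(text)
    return [name for name in required if name not in mapping]


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name not resolvable
        return False


# Hypothetical hosts file on a slave (IP addresses are documentation placeholders).
hosts_text = """127.0.0.1   localhost
192.0.2.10  gc.localdomain gc      # master
192.0.2.11  rac1.localdomain rac1
"""
print(missing_hosts(hosts_text, ["gc", "rac1", "rac2"]))  # ['rac2']

# On each slave, port_open("gc", 9000) (NameNode) and port_open("gc", 9001)
# (JobTracker), the ports from the configs above, should both return True.
```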