分类算法之决策树

一、算法

Key points:

- 决策树是一个分类算法,分类结果是离散值(对应输出结果是连续值的回归算法);

- 有监督的分类算法;

- 是一种贪婪算法,生成的每一步都是局部最优值

- 容易over fitting

- noise影响不大

- 空间划分,通过递归的方法把特征空间划分成不重叠的矩形

例子 (from Machine Learning in Action):

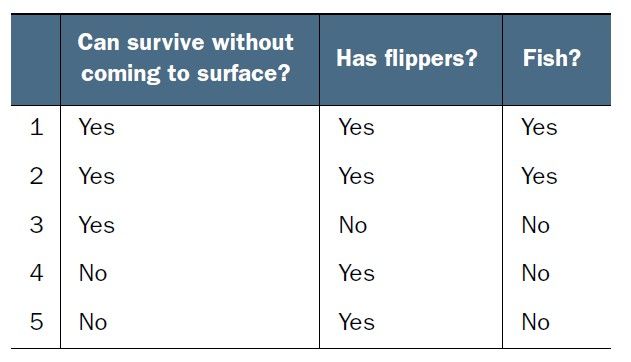

判断是否是Fish,有两个特征Can survive without coming to surface?和 Has flippers?

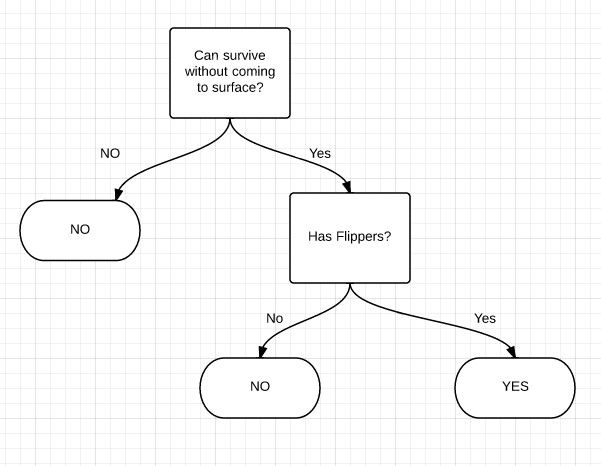

最后实现的决策树为:

生成决策树时要考虑的问题:

- How should the training records be split?

每次split的时候,都要挑选一个最佳的特征。

The method developed for selecting the best split are often based on the degree of impurity of the child node.

例如:集合{'yes':10,'no':0}的不纯洁度最低,集合{'yes':5,'no':5}不纯洁度最高,显然要挑不纯度低 的特征;描述不纯度的方法有以下几种:

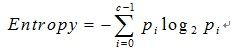

信息熵

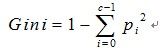

Gini

Gini impurity is the expected error rate if one of the results from a set is randomly applied to one of the items in the set.(集体智慧编程) ???

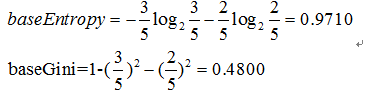

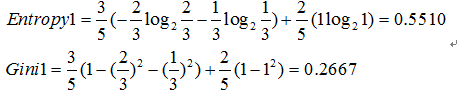

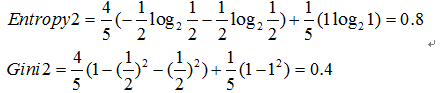

计算出每个子集的不纯度后,再计算split以后总不纯度。与split前的不纯度相比较

信息增益(information gain)就是指信息熵的有效减少量

选择差值最大的

例如:原集合为[yes,yes,no,no,no]

选择“surface”, split后的集合为:[yes,yes,no]和[no,no]

选择"Flippers", split后的集合为:[yes,yes,no,no]和[no]

最后选择差值最大的,即”surface“

- How should the splitting procedure stop?

当所有的records属于同一类,即分类完毕;或者features用完了

或者information gain=0??

当达到以上两种情况时,往往会发生过拟合,见以下

如何处理过拟合的问题:

- Pre-pruning:

- 设定一个阈值,该阈值可以是决策树高度,节点实例个数,信息增益值等,该节点成为叶节点

- 该叶节点持有其数据集中样本最多的类或者其概率分布

- Post-pruning:

-

首先构造完整的决策树,允许决策树过度拟合训练数据

- 对置信度不够的节点的子树用叶节点或树枝来替代

- 该叶节点持有其子树的数据集中样本最多的类或者其概率分布

什么时候需要剪枝:

- 数据噪音,因此有的分类不准

- 训练数据量少,或者不具有代表性

- 过拟合

ID3, C4.5, C5.0, CART

ID3 1986年 Quilan

选择具有最高信息增益的属性作为测试属性

ID3(DataSet, featureList):

- 创建根节点R

- 如果当前DataSet中的数据都属于同一类,则标记R的类别为该类

- 如果当前featureList集合为空,则标记R的类别为当前DataSet中样本最多的类别

- 递归情况:

# 从featureList中选择属性(选择Gain(DataSet,F)最大的属性)

# 根据F的每一个值v,将DataSet划分为不同的子集DS,对每一个Ds:

- 创建节点C

- 如果DS为空,节点C标记为DataSet中样本最多的类别

- 如果DS不为空,节点C=ID3(DS,featureList-F)

- 将节点C添加为R的子节点

C4.5 1993年 by Quilab(对ID3的改进)

- 信息增益率(information gain ratio)

- 连续值属性

离散化处理:将连续型的属性变量进行离散化处理,形成决策树的训练集

- 把需要处理的样本按照连续变量的大小从小到大进行排序

- 假设该属性对应的不同的属性值一共有N个,那么总共有N-1个可能的候选分割阈值点,每个候选的分割阈值点的值为上述排序后的属性值中两两前后元素的中点

- 用信息增益率选择最佳划分

- 缺失值

- 复杂一点的办法是为每个可能值赋一个概率

- 最简单的办法是丢弃这些样本

- 后剪枝(基于错误剪枝EBP-Error Based Pruning)

C5.0 1998年

加入了Boosting算法框架

CART (Classification and Regression Trees)

- 二元划分

- 不纯性度量

- 连续目标:最小平方残差、最小绝对残差

- 剪枝

分类树:

CART_classification(DataSet, featureList, alpha,):

创建根节点R

如果当前DataSet中的数据的类别相同,则标记R的类别标记为该类

如果决策树高度大于alpha,则不再分解,标记R的类别classify(DataSet)

递归情况:

标记R的类别classify(DataSet)

从featureList中选择属性F(选择Gini(DataSet, F)最小的属性划分,连续属性参考C4.5的离散化过程(以Gini最小作为划分标准))

根据F,将DataSet做二元划分DS_L 和 DS_R:

如果DS_L或DS_R为空,则不再分解

如果DS_L和DS_R都不为空,节点

C_L= CART_classification(DS_L, featureList, alpha);

C_R= CART_classification(DS_R featureList, alpha)

将节点C_L和C_R添加为R的左右子节点

回归树

CART_regression(DataSet, featureList, alpha, delta):

创建根节点R

如果当前DataSet中的数据的值都相同,则标记R的值为该值

如果最大的phi值小于设定阈值delta,则标记R的值为DataSet应变量均值

如果其中一个要产生的节点的样本数量小于alpha,则不再分解,标记R的值为DataSet应变量均值

递归情况:

从featureList中选择属性F(选择phi(DataSet, F)最大的属性,连续属性(或使用多个属性的线性组合)参考C4.5的离散化过程 (以phi最大作为划分标准))

根据F,将DataSet做二元划分DS_L 和 DS_R:

如果DS_L或DS_R为空,则标记节点R的值为DataSet应变量均值

如果DS_L和DS_R都不为空,节点

C_L= CART_regression(DS_L, featureList, alpha, delta);

C_R= CART_regression(DS_R featureList, alpha, delta)

将节点C_L和C_R添加为R的左右子节点

分类树与回归树的差别在于空间划分方法一个是线性一个是非线性

二、python实现

如何在python中存储一棵决策树. 如果每个特征只有两种选择,就是一棵二叉树。但往往不局限于二叉树。

- 采用字典数据结构,如上例中的决策树最后可以表示成这样:

{'no surfacing': {0: 'no', 1: {'flippers': {0: 'no', 1: 'yes'}}}}

新建一个结点:{'label':{}}, split以后{'label':{cond1:{},cond2:{},cond3:{}}},如果满足if语句,{}就是一个label,如果不满足,就建结点

- 一个节点作为一个类

class decisionNode(object): def __init__(self,test_cond=-1,value=None,labels=None,trueBranch=None,falseBranch=None): self.test_cond = test_cond #该节点上判断条件 self.value=value #非叶子节点才有 self.labels=labels #叶子节点才有 self.trueBranch=trueBranch #左节点 self.falseBranch=falseBranch #右节点

输入训练样本:

# This Python file uses the following encoding: utf-8

from math import log

def createDataSet():

dataSet = [[1, 1, 'yes'],

[1, 1, 'yes'],

[1, 0, 'no'],

[0, 1, 'no'],

[0, 1, 'no']]

features = ['no surfacing','flippers']

#change to discrete values

return dataSet, features

用递归的方法建造决策树,代码如下:

用字典表示:

def treeGrowth(dataSet,features):

classList=[example[-1] for example in dataSet]

if classList.count(classList[0])==len(classList): #所有E中只有一种class

return classList[0]

if len(dataSet[0])==1: #没有多余的features

return classify(classList)

bestFeat=findBestSplit(dataSet)

bestFeatLabel=features[bestFeat]

mytree={bestFeatLabel:{}}

featValues=[example[bestFeat] for example in dataSet]

uniqueFeatValues=set(featValues)

del(features[bestFeat])

for values in uniqueFeatValues:

subDataSet=splitDataSet(dataSet,bestFeat,values)

mytree[bestFeatLabel][values] =treeGrowth(subDataSet,features)

return mytree

用节点类表示:

def treeGrowth(dataSet,features): classList=[example[-1] for example in dataSet] #stop condition if classList.count(classList[0])==len(classList) : return decisionNode(labels=classList[0]) if features==[] : return decisionNode(labels=classify(classList)) root=decisionNode() bestFeature = findBestSplit(dataSet) bestFeatureLabel=features[bestFeature] #print bestFeatureLabel root.test_cond=bestFeatureLabel featureValues=[example[bestFeature] for example in dataSet] uniqueFeatureValues =set(featureValues) del(features[bestFeature]) for value in uniqueFeatureValues: subDataSet=splitDataSet(dataSet,bestFeature,value) if value==1: trueChild=treeGrowth(subDataSet,features) root.trueBranch=trueChild root.value=value falseChild=treeGrowth(subDataSet,features) root.falseBranch=falseChild return root

找出子集合中的大多数:

def classify(classList):

classCount={}

for vote in classList:

if vote not in classCount.keys():

classCount[vote]=0

classCount[vote]+=1

sortedClassCount = sorted(classCount.iteritems(), key=operator.itemgetter(1), reverse=True)

return sortedClassCount[0][0]

找出最佳特征:

def findBestSplit(dataSet): numFeatures=len(dataSet[0])-1 baseEntropy = calcShannonEnt(dataSet) bestInfoGain = 0.0 bestFeat=-1 for i in range(numFeatures): featValues=[example[i] for example in dataSet] uniqueFeatValues=set(featValues) newEntropy=0.0 for val in uniqueFeatValues: subDataSet=splitDataSet(dataSet,i,val) prob=len(subDataSet)/float(len(dataSet)) newEntropy+=prob*calcShannonEnt(subDataSet) if (baseEntropy- newEntropy)>bestInfoGain: bestInfoGain=baseEntropy- newEntropy bestFeat=i return bestFeat

def splitDataSet(dataSet,feat,values):

retDataSet = []

for featVec in dataSet:

if featVec[feat]==values:

reducedFeatVec=featVec[:feat]

reducedFeatVec.extend(featVec[feat+1:])

retDataSet.append(reducedFeatVec)

return retDataSet

def calcShannonEnt(dataSet):

numEntries = len(dataSet)

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1]

if currentLabel not in labelCounts.keys():

labelCounts[currentLabel]=0

labelCounts[currentLabel]+=1

shannonEnt=0.0

for key in labelCounts:

prob=float(labelCounts[key])/numEntries

if prob!=0:

shannonEnt-=prob*log(prob,2)

return shannonEnt

三、R实现

creatDataSet<-function(){

dataSet<-data.frame(c(1,1,1,0,0),c(1,1,0,1,1),c("yes","yes","no","no","no"))

names(dataSet)<-c("no surfacing","flippers","results")

return (dataSet)

}

setClass("decisionNode",

representation(

test_cond = "ANY",

value = "ANY",

labels = "ANY",

trueBranch = "ANY",

falseBranch = "ANY"

),

prototype(

test_cond = NA,

value = NA,

labels = NA,

trueBranch = NA,

falseBranch = NA

)

)

findBestSplit<-function(dataSet){

classList<-dataSet$result

numFeatures<-length(dataSet)-1

baseEntropy<-calcShannonEnt(dataSet)

bestInfoGain<-0

for(i in range(1:numFeatures)){

newEntropy<-0

for(j in range(unique(dataSet[,i]))){

subDataSet<-splitDataSet(dataSet,i,j)

prob<-length(subDataSet[,1])/length(dataSet[,1])

newEntropy<-newEntropy+prob*calcShannonEnt(subDataSet)

}

if((baseEntropy-newEntropy)>bestInfoGain){

bestInfoGain<-baseEntropy-newEntropy

bestFeature<-i

}

}

return (bestFeature)

}

calcShannonEnt <- function(dataSet){

end<-length(dataSet)

sum<-length(dataSet[,1])

labelCounts<-as.data.frame(table(dataSet[,end]))

shannonEnt<-0

for(i in range(1:length(labelCounts[,1]))){

prob<- labelCounts[i,-1]/sum

if(prob!=0){

shannonEnt<-shannonEnt-prob*log(prob,2)

}

}

return (shannonEnt)

}

splitDataSet<- function(dataSet,feature,value){

subDataSet<-dataSet[which(dataSet[,feature]==value),]

subDataSet[,feature]<-NULL

return (subDataSet)

}

treeGrowth<-function(dataSet){

classList<-dataSet$result

if(length(which(classList==classList[1]))==length(classList)) return (new("decisionNode",labels=classList[1]))

if(length(dataSet)==1) return (new("decisonNode",labels=classify(classList)))

root<-new("decisionNode")

bestFeature<-findBestSplit(dataSet)

bestFeatureLabel<-names(dataSet)[bestFeature]

root@test_cond<-bestFeatureLabel

uniqueValues<-unique(dataSet[bestFeature])

for(i in range(uniqueValues)){

root@value<-i

subDataSet<-splitDataSet(dataSet,bestFeature,i)

if (i==1){

trueChild<-treeGrowth(subDataSet)

root@trueBranch<-trueChild

}else{

falseChild<-treeGrowth(subDataSet)

root@falseBranch<-falseChild

}

}

return (root)

}