LVS + keepalived: implementing a dual-master high-availability load-balancing cluster

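Project description

1. LVS load-balances user requests across the back-end web servers and health-checks them.

2. keepalived makes LVS highly available, so the LVS director is not a single point of failure.

3. In normal operation LK-01 and LK-02 each hold one VIP, which gives a dual-master LVS setup.

4. Users reach the cluster through DNS round-robin across the two VIPs (not demonstrated here).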

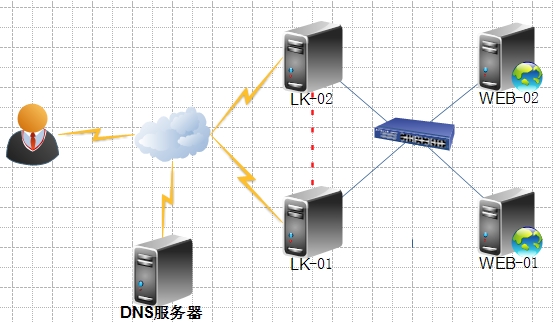

Environment topology

Environment overview

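| Name | IP address | Role |
|---|---|---|
| LK-01 | 172.16.4.100 | Schedules user requests to the back-end web servers; backs up LK-02 |
| LK-02 | 172.16.4.101 | Schedules user requests to the back-end web servers; backs up LK-01 |
| WEB-01 | 172.16.4.102 | Provides the web service |
| WEB-02 | 172.16.4.103 | Provides the web service |
| DNS | 172.16.4.10 | Resolves the cluster's domain name to the VIPs in round-robin order |
| VIP1 | 172.16.4.1 | Entry address for user access to the cluster; may be held by either LK-01 or LK-02 |
| VIP2 | 172.16.4.2 | Entry address for user access to the cluster; may be held by either LK-01 or LK-02 |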

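Environment configuration

Back-end web server configuration

The web servers need very little setup: create a test page and start the web service.

WEB-01 configuration

    [root@WEB-01 ~]# echo "web-01" > /var/www/html/index.html
    [root@WEB-01 ~]# service httpd start

WEB-02 configuration

    [root@WEB-02 ~]# echo "web-02" > /var/www/html/index.html
    [root@WEB-02 ~]# service httpd start

From an LVS node, request each back-end server to confirm that the web service is up

    [root@LK-01 ~]# curl 172.16.4.102
    web-01
    [root@LK-01 ~]# curl 172.16.4.103
    web-02

Each server returns its own test page, so the web service is working.

Configuring the web servers as LVS-DR real servers with a script

Because the cluster is dual-master, each real server needs two loopback alias interfaces, one for each VIP.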

    #!/bin/bash
    #
    # Configure or remove the LVS-DR real-server settings for both VIPs.
    vip1=172.16.4.1
    vip2=172.16.4.2
    interface1="lo:0"
    interface2="lo:1"

    case $1 in
    start)
        # Keep the real server from answering or announcing ARP for the VIPs on lo.
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
        # Bind each VIP to its own loopback alias with a host (/32) netmask.
        ifconfig $interface1 $vip1 broadcast $vip1 netmask 255.255.255.255 up
        ifconfig $interface2 $vip2 broadcast $vip2 netmask 255.255.255.255 up
        route add -host $vip1 dev $interface1
        route add -host $vip2 dev $interface2
        ;;
    stop)
        # Restore the default ARP behaviour and remove the VIP aliases.
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        ifconfig $interface1 down
        ifconfig $interface2 down
        ;;
    status)
        if ifconfig $interface1 | grep $vip1 &> /dev/null; then
            echo "ipvs1 is running."
        else
            echo "ipvs1 is stopped."
        fi
        if ifconfig $interface2 | grep $vip2 &> /dev/null; then
            echo "ipvs2 is running."
        else
            echo "ipvs2 is stopped."
        fi
        ;;
    *)
        echo "Usage: $(basename "$0") {start|stop|status}"
        exit 1
        ;;
    esac
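Before running the script as root, it can help to review exactly which commands the start branch issues. The following is a minimal dry-run sketch, not part of the original script: `print_rs_start_cmds` is a hypothetical helper that only prints the commands for any number of VIPs, assigning them to `lo:0`, `lo:1`, and so on.

```shell
#!/bin/bash
# Dry-run sketch: print (do not execute) the start-branch commands.
# print_rs_start_cmds is a hypothetical helper introduced for illustration.
print_rs_start_cmds() {
    local idx=0 dev vip
    # The ARP settings are global, so they are emitted once.
    for dev in all lo; do
        echo "echo 1 > /proc/sys/net/ipv4/conf/$dev/arp_ignore"
        echo "echo 2 > /proc/sys/net/ipv4/conf/$dev/arp_announce"
    done
    # One loopback alias and one host route per VIP.
    for vip in "$@"; do
        echo "ifconfig lo:$idx $vip broadcast $vip netmask 255.255.255.255 up"
        echo "route add -host $vip dev lo:$idx"
        idx=$((idx + 1))
    done
}

print_rs_start_cmds 172.16.4.1 172.16.4.2
```

Printing rather than executing keeps the sketch safe to run on any machine; the output can be compared line by line with the start branch of the script.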

LVS + keepalived configuration

Installing keepalived

CentOS 6.6 ships keepalived in its repositories, so it can be installed directly with yum.

    [root@LK-01 ~]# yum -y install keepalived
    [root@LK-02 ~]# yum -y install keepalived

LK-01 node configuration

    [root@LK-01 ~]# vim /etc/keepalived/keepalived.conf

    global_defs {
        router_id LVS_DEVEL
    }

    vrrp_script chk_mt_down {
        # The check fails (exit 1) while the down file exists and succeeds (exit 0) otherwise
        script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
        interval 1                  # run the check every second
        weight -5                   # while the check fails, lower the VRRP priority by 5
    }

    vrrp_instance VI_1 {            # VRRP instance for VIP1
        state MASTER                # this node is the master for VIP1
        interface eth0              # physical interface that carries the VIP
        virtual_router_id 51        # virtual router ID, identical on both nodes
        priority 100                # priority
        advert_int 1                # advertisement interval in seconds (heartbeat)
        authentication {            # authentication between master and backup
            auth_type PASS
            auth_pass asdfgh
        }
        virtual_ipaddress {         # VIP managed by this instance
            172.16.4.1/32 brd 172.16.4.1 dev eth0 label eth0:0
        }
        track_script {
            chk_mt_down             # the script above has no effect unless it is referenced here
        }
    }

    vrrp_instance VI_2 {            # this node is the backup of LK-02 for VIP2
        state BACKUP
        interface eth0
        virtual_router_id 52
        priority 99                 # lower than the master's priority
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass qwerty
        }
        virtual_ipaddress {
            172.16.4.2
        }
        track_script {
            chk_mt_down
        }
    }

    virtual_server 172.16.4.1 80 {  # virtual service: VIP and port
        delay_loop 6
        lb_algo rr                  # round-robin scheduling
        lb_kind DR                  # forwarding method: direct routing
        nat_mask 255.255.0.0
        # persistence_timeout 50    # persistent-connection timeout in seconds
        protocol TCP
        real_server 172.16.4.102 80 {   # back-end real server (RS)
            weight 1                    # weight
            HTTP_GET {                  # health-check method
                url {                   # the server is healthy if its index page returns status 200
                    path /index.html
                    status_code 200
                }
                connect_timeout 3       # connection timeout
                nb_get_retry 3          # number of retries
                delay_before_retry 1    # delay between retries
            }
        }
        real_server 172.16.4.103 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
    }

    virtual_server 172.16.4.2 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        nat_mask 255.255.0.0
        # persistence_timeout 50
        protocol TCP
        real_server 172.16.4.102 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
        real_server 172.16.4.103 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
    }
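The interaction between `priority` and the tracked script's `weight -5` is plain arithmetic: while a check with a negative weight fails, keepalived adds that weight to the instance's priority. The sketch below works through the VI_1 case, assuming the peer node runs the same instance with priority 99. `effective_priority` is a hypothetical helper written for this illustration, not a keepalived command.

```shell
#!/bin/bash
# effective_priority BASE WEIGHT CHECK_FAILED
# Hypothetical helper sketching the negative-weight rule: a failing check
# adds the (negative) weight to the base priority; a passing check leaves
# the base priority unchanged.
effective_priority() {
    local base=$1 weight=$2 failed=$3
    if [ "$failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# VI_1: LK-01 is MASTER with priority 100; the peer is assumed to be at 99.
master=$(effective_priority 100 -5 1)   # down file present on LK-01
backup=$(effective_priority 99 -5 0)    # peer check passes
echo "master=$master backup=$backup"
if [ "$master" -lt "$backup" ]; then
    echo "VIP1 moves to the backup node"
fi
```

The weight only triggers a failover because its magnitude (5) is larger than the priority gap between the two nodes (1); a weight of -1 would leave both nodes tied at 99.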

LK-02 node configuration

The parameters used on LK-02 are all annotated in the LK-01 configuration above.

    [root@LK-02 ~]# vim /etc/keepalived/keepalived.conf

    global_defs {
        router_id LVS_DEVEL
    }

    vrrp_script chk_mt_down {
        script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
        interval 1
        weight -5
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 99
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass asdfgh
        }
        virtual_ipaddress {
            172.16.4.1/32 brd 172.16.4.1 dev eth0 label eth0:0
        }
        track_script {
            chk_mt_down
        }
    }

    vrrp_instance VI_2 {
        state MASTER
        interface eth0
        virtual_router_id 52
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass qwerty
        }
        virtual_ipaddress {
            172.16.4.2
        }
        track_script {
            chk_mt_down
        }
    }

    virtual_server 172.16.4.1 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        nat_mask 255.255.0.0
        # persistence_timeout 50
        protocol TCP
        real_server 172.16.4.102 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
        real_server 172.16.4.103 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
    }

    virtual_server 172.16.4.2 80 {
        delay_loop 6
        lb_algo rr
        lb_kind DR
        nat_mask 255.255.0.0
        # persistence_timeout 50
        protocol TCP
        real_server 172.16.4.102 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
        real_server 172.16.4.103 80 {
            weight 1
            HTTP_GET {
                url {
                    path /index.html
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 1
            }
        }
    }
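The `HTTP_GET` health check used in both configurations can be approximated by hand when debugging a real server. The sketch below is only an approximation under stated assumptions: `http_check` is a hypothetical helper, `nb_get_retry 3` is modeled as up to three attempts, and `delay_before_retry 1` as a one-second pause between them; keepalived's own retry accounting may differ.

```shell
#!/bin/bash
# http_check URL [ATTEMPTS] [DELAY]
# Hypothetical helper: succeed if URL answers with HTTP 200 within ATTEMPTS
# tries, using a 3-second connect timeout (mirrors connect_timeout 3).
http_check() {
    local url=$1 attempts=${2:-3} delay=${3:-1} i code
    for i in $(seq 1 "$attempts"); do
        # -w '%{http_code}' prints only the status code; the body is discarded.
        code=$(curl -s -o /dev/null --connect-timeout 3 -w '%{http_code}' "$url")
        if [ "$code" = "200" ]; then
            return 0
        fi
        sleep "$delay"
    done
    return 1
}

# Example usage from an LVS node (requires network access to the real server):
#   http_check http://172.16.4.102/index.html && echo healthy || echo failed
```

A non-zero return corresponds to the situation in which keepalived would remove the real server from the ipvs rules.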

With the configuration in place, start keepalived on both nodes

    [root@LK-01 ~]# service keepalived start
    [root@LK-02 ~]# service keepalived start

Verification

Verifying the VIPs and the cluster rules

On LK-01, VIP1 is up and keepalived has created the ipvs rules automatically

    [root@LK-01 ~]# ip addr show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:22:c5:c2 brd ff:ff:ff:ff:ff:ff
        inet 172.16.4.100/16 brd 172.16.255.255 scope global eth0
        inet 172.16.4.1/32 brd 172.16.4.1 scope global eth0:0
        inet6 fe80::20c:29ff:fe22:c5c2/64 scope link
           valid_lft forever preferred_lft forever
    [root@LK-01 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.16.4.1:80 rr
      -> 172.16.4.102:80              Route   1      0          0
      -> 172.16.4.103:80              Route   1      0          0
    TCP  172.16.4.2:80 rr
      -> 172.16.4.102:80              Route   1      0          0
      -> 172.16.4.103:80              Route   1      0          0

On LK-02, VIP2 is up and the same ipvs rules are present

    [root@LK-02 ~]# ip addr show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:f1:dd:b2 brd ff:ff:ff:ff:ff:ff
        inet 172.16.4.101/16 brd 172.16.255.255 scope global eth0
        inet 172.16.4.2/32 scope global eth0
        inet6 fe80::20c:29ff:fef1:ddb2/64 scope link
           valid_lft forever preferred_lft forever
    [root@LK-02 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.16.4.1:80 rr
      -> 172.16.4.102:80              Route   1      0          0
      -> 172.16.4.103:80              Route   1      0          0
    TCP  172.16.4.2:80 rr
      -> 172.16.4.102:80              Route   1      0          0
      -> 172.16.4.103:80              Route   1      0          0

Verifying load balancing

Requests to either VIP1 or VIP2 are distributed across both web servers

    [root@localhost ~]# curl 172.16.4.1
    web-02
    [root@localhost ~]# curl 172.16.4.1
    web-01
    [root@localhost ~]# curl 172.16.4.1
    web-02
    [root@localhost ~]# curl 172.16.4.1
    web-01
    [root@localhost ~]# curl 172.16.4.2
    web-02
    [root@localhost ~]# curl 172.16.4.2
    web-01
    [root@localhost ~]# curl 172.16.4.2
    web-02
    [root@localhost ~]# curl 172.16.4.2
    web-01
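With more than a handful of requests it is easier to count the responses than to read them. The following is a small sketch, assuming each response body is a single line naming the back-end server, as with the test pages above. `tally_backends` is a hypothetical helper name.

```shell
#!/bin/bash
# tally_backends: read one back-end name per line on stdin and print
# "name count" pairs, sorted by name. Hypothetical helper for illustration.
tally_backends() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Example usage against the cluster (requires network access to the VIP):
#   for i in $(seq 1 10); do curl -s 172.16.4.1; done | tally_backends

# Self-contained demonstration with four alternating responses:
printf 'web-02\nweb-01\nweb-02\nweb-01\n' | tally_backends
```

With the `rr` scheduler and equal weights, the counts for the two servers should differ by at most one.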

Verifying high availability

Take LK-02 out of service by creating the down file. The chk_mt_down check then fails on LK-02, which lowers its VI_2 priority from 100 to 95, below the 99 configured on LK-01.

    [root@LK-02 ~]# touch /etc/keepalived/down

On LK-01, both VIP1 and VIP2 are now up

    [root@LK-01 ~]# ip addr show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:22:c5:c2 brd ff:ff:ff:ff:ff:ff
        inet 172.16.4.100/16 brd 172.16.255.255 scope global eth0
        inet 172.16.4.1/32 brd 172.16.4.1 scope global eth0:0
        inet 172.16.4.2/32 scope global eth0
        inet6 fe80::20c:29ff:fe22:c5c2/64 scope link
           valid_lft forever preferred_lft forever

The cluster is still reachable through both VIPs

    [root@localhost ~]# curl 172.16.4.1
    web-02
    [root@localhost ~]# curl 172.16.4.1
    web-01
    [root@localhost ~]# curl 172.16.4.2
    web-02
    [root@localhost ~]# curl 172.16.4.2
    web-01
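When repeating the failover test, checking which VIPs a node currently holds can be scripted rather than read by eye. The sketch below filters `ip addr show` output for the given addresses. `held_vips` is a hypothetical helper, and the sample text is a shortened copy of the LK-01 address lines after the failover.

```shell
#!/bin/bash
# held_vips VIP...: read `ip addr show` output on stdin and print each VIP
# that appears as an "inet <vip>/<prefix>" address. Hypothetical helper.
held_vips() {
    local input vip
    input=$(cat)
    for vip in "$@"; do
        # Fixed-string match; the trailing "/" keeps 172.16.4.1 from
        # matching 172.16.4.100 or 172.16.4.10.
        if printf '%s\n' "$input" | grep -qF -- "inet $vip/"; then
            echo "$vip"
        fi
    done
}

# Example usage on a director node:
#   ip addr show dev eth0 | held_vips 172.16.4.1 172.16.4.2

# Self-contained demonstration with sample address lines:
sample='    inet 172.16.4.100/16 brd 172.16.255.255 scope global eth0
    inet 172.16.4.1/32 brd 172.16.4.1 scope global eth0:0
    inet 172.16.4.2/32 scope global eth0'
printf '%s\n' "$sample" | held_vips 172.16.4.1 172.16.4.2
```

Running the same command on both directors before and after creating or removing the down file shows each VIP moving between the nodes.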

Verifying the health check

Stop the web service on WEB-02 manually

    [root@WEB-02 ~]# service httpd stop

The cluster rules on both nodes show that WEB-02 has been removed automatically

    [root@LK-01 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.16.4.1:80 rr
      -> 172.16.4.102:80              Route   1      0          1
    TCP  172.16.4.2:80 rr
      -> 172.16.4.102:80              Route   1      0          1
    [root@LK-02 ~]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  172.16.4.1:80 rr
      -> 172.16.4.102:80              Route   1      0          0
    TCP  172.16.4.2:80 rr
      -> 172.16.4.102:80              Route   1      0          0
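To confirm a removal like this without reading the whole table, the `ipvsadm -L -n` output can be reduced to pairs of virtual service and real server. This is a minimal sketch, assuming the usual layout in which real-server lines start with `->` followed by a space. `list_real_servers` is a hypothetical helper.

```shell
#!/bin/bash
# list_real_servers: read `ipvsadm -L -n` output on stdin and print
# "virtual_service real_server" pairs, one per line. Hypothetical helper.
list_real_servers() {
    awk '
        # A TCP or UDP line starts a new virtual service.
        $1 == "TCP" || $1 == "UDP" { vs = $2; next }
        # Real-server lines start with "->" followed by an address;
        # the column-header line is skipped because its second field is not numeric.
        $1 == "->" && $2 ~ /^[0-9]/ { print vs, $2 }
    '
}

# Example usage on a director node (requires root):
#   ipvsadm -L -n | list_real_servers

# Self-contained demonstration with sample output after WEB-02 was stopped:
sample='IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  172.16.4.1:80 rr
  -> 172.16.4.102:80              Route   1      0          1
TCP  172.16.4.2:80 rr
  -> 172.16.4.102:80              Route   1      0          1'
printf '%s\n' "$sample" | list_real_servers
```

After httpd is started again on WEB-02, the same command should list 172.16.4.103:80 under both virtual services once the health check passes.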