Puppet Series, Part 6: Pushing Puppet Updates More Securely and Efficiently

1 Introduction to MCollective

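MCollective is a framework for building server orchestration and parallel job execution systems. First, MCollective is a systems-management solution for programmatically controlling clusters of servers; in this respect it plays a role similar to Func, Fabric and Capistrano.

Second, MCollective's design breaks away from systems built around a central inventory and from tools like SSH: it is no longer just an SSH for-loop. It relies on modern tooling such as publish-subscribe middleware, and on the modern idea of discovering network resources in real time by their metadata rather than by their hostnames. The result is a scalable and fast parallel execution environment.

MCollective is a command-line tool, but it can communicate with thousands of application instances quickly. Wherever the instances are deployed, messages are pushed to them at network speed over a multicast-like push messaging system. MCollective itself has no graphical user interface; you locate the instances you need through discovery queries. Puppet Dashboard provides this kind of functionality.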

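MCollective features:

Interacts with server clusters ranging from small to very large

Uses a broadcast paradigm to distribute requests: every server receives each request at the same time, but only the servers that match the filter attached to the request act on it. There is no central database to keep in sync; the network is the only source of truth

Does away with complex naming schemes that rely on hostnames for identification. Machines are targeted by the rich metadata each one reports about itself, which can come from Puppet, Chef, Facter, Ohai or your own plugins

Invokes remote agents from the command line

Lets you write custom inventory reports

Offers many community agents for managing packages, services and other common components

Lets you write SimpleRPC-style agents and clients, and Web UIs, in Ruby

Is externally pluggable to meet local requirements

Uses the middleware's existing rich authentication and authorization models as the first line of defense

Reuses the middleware for clustering, routing and network isolation to achieve secure and scalable deployments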
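To make the broadcast-and-filter idea in the list above concrete, here is a toy model in shell. It is not how MCollective is implemented: the inventory string, its `identity fact=value ...` format and the `broadcast`/`node_matches` function names are all hypothetical. Every node sees every request and decides locally whether its own facts satisfy all the `fact=value` filters (like `mco -F`); only matching nodes answer. The custom facts mirror the vm1/vm2 examples in section 6.3.2; vm2's operating-system facts are assumed.

```shell
#!/bin/sh
# Toy model of MCollective-style broadcast discovery (hypothetical, not MCollective code).
# Each inventory line: "<identity> <fact>=<value> ...". Values are illustrative.
NODES='vm1.sysu operatingsystem=CentOS operatingsystemrelease=6.5 my_apply1=apache my_apply2=mysql
vm2.sysu operatingsystem=CentOS operatingsystemrelease=6.5 my_apply2=mysql my_apply3=php'

# node_matches "<inventory line>" FILTER...
# Succeeds only if every fact=value filter appears among the node's own facts.
node_matches() {
  facts=" $1 "
  shift
  for filter in "$@"; do
    case $facts in
      *" $filter "*) ;;   # this filter matches; check the next one
      *) return 1 ;;      # one unmatched filter means "ignore the request"
    esac
  done
  return 0
}

# broadcast FILTER...
# "Delivers" the request to every node; only matching nodes reply with their identity.
broadcast() {
  printf '%s\n' "$NODES" | while IFS= read -r line; do
    if node_matches "$line" "$@"; then
      printf '%s\n' "${line%% *}"
    fi
  done
}
```

For example, `broadcast my_apply3=php` prints only `vm2.sysu`, while `broadcast operatingsystem=CentOS operatingsystemrelease=6.5` prints both identities. In the real system the client publishes the request once to the middleware, which fans it out to all subscribed servers.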

For Puppet, MCollective is a powerful management companion: it makes monitoring, running and managing the puppet agent straightforward.

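2 Introduction to the Middleware (RabbitMQ, ActiveMQ)

RabbitMQ is a message-queue service that implements the Advanced Message Queuing Protocol (AMQP). RabbitMQ is built on OTP (Open Telecom Platform) and implemented in the Erlang language and runtime.

ActiveMQ, from Apache, is one of the most popular and capable open-source message buses. It is a JMS provider that fully supports the JMS 1.1 and J2EE 1.4 specifications.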

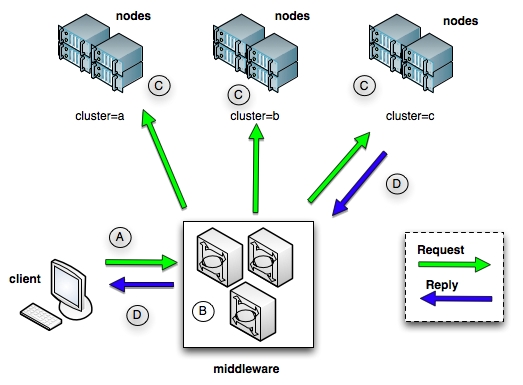

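Note: MCollective was developed and tested against Apache ActiveMQ. However, ActiveMQ's dependence on Java and XML configuration files turned our attention and interest toward RabbitMQ. If performance and scalability are the priority, ActiveMQ is the better choice. The diagram above shows how the pieces work together.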

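3 Installing and Configuring the Middleware

The deployment environment here builds on the one set up earlier in this Puppet series, where:

the puppet master runs:

MCollective client + ActiveMQ/RabbitMQ server + Puppet master

the puppet clients run:

MCollective server + Puppet agent

Note: deploy only one of ActiveMQ or RabbitMQ.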

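3.1 Installing and Configuring ActiveMQ

3.1.1 Installing ActiveMQ

yum install tanukiwrapper activemq activemq-info-provider

3.1.2 Configuring ActiveMQ

The main settings are the port MCollective connects to, and the account, password and permissions it uses.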

vim /etc/activemq/activemq.xml

…

<simpleAuthenticationPlugin>

<users>

<!-- disabled: <authenticationUser username="${activemq.username}" password="${activemq.password}" groups="admins,everyone"/> -->

<!-- account and password MCollective uses to connect -->

<authenticationUser username="mcollective" password="secret" groups="mcollective,admins,everyone"/>

</users>

</simpleAuthenticationPlugin>

…

<!-- permissions; the defaults below can be used as-is -->

<authorizationPlugin>

<map>

<authorizationMap>

<authorizationEntries>

<authorizationEntry queue=">" write="admins" read="admins" admin="admins" />

<authorizationEntry topic=">" write="admins" read="admins" admin="admins" />

<authorizationEntry queue="mcollective.>" write="mcollective" read="mcollective" admin="mcollective" />

<authorizationEntry topic="mcollective.>" write="mcollective" read="mcollective" admin="mcollective" />

<authorizationEntry topic="ActiveMQ.Advisory.>" read="everyone" write="everyone" admin="everyone"/>

</authorizationEntries>

</authorizationMap>

</map>

</authorizationPlugin>

…

<transportConnectors>

<transportConnector name="openwire" uri="tcp://0.0.0.0:61616"/>

<!-- STOMP transport for MCollective, listening on port 61613 -->

<transportConnector name="stomp+nio" uri="stomp://0.0.0.0:61613"/>

</transportConnectors>

…

3.1.3 Starting ActiveMQ

/etc/rc.d/init.d/activemq start

Starting ActiveMQ Broker...

chkconfig activemq on

netstat -nlatp | grep 61613 # check the listening port

tcp 0 0 :::61613 :::* LISTEN 26710/java

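3.2 Installing and Configuring RabbitMQ

3.2.1 Installing RabbitMQ

yum install erlang # RabbitMQ depends on Erlang; this pulls in about 65 Erlang packages

yum install rabbitmq-server

ll /usr/lib/rabbitmq/lib/rabbitmq_server-3.1.5/plugins/ # the STOMP plugin ships with this version; older versions require downloading and installing it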

-rw-r--r--1 root root 242999 Aug 24 17:42 amqp_client-3.1.5.ez

-rw-r--r--1 root root 85847 Aug 24 17:42 rabbitmq_stomp-3.1.5.ez

…

3.2.2 Starting rabbitmq-server

/etc/rc.d/init.d/rabbitmq-server start # start the RabbitMQ service

Starting rabbitmq-server: SUCCESS

rabbitmq-server.

/etc/rc.d/init.d/rabbitmq-server status # check RabbitMQ status

Status of node rabbit@puppetserver ...

[{pid,43198},

{running_applications,[{rabbit,"RabbitMQ","3.1.5"},

{mnesia,"MNESIA CXC 138 12","4.5"},

{os_mon,"CPO CXC 138 46","2.2.7"},

{xmerl,"XML parser","1.2.10"},

{sasl,"SASL CXC 138 11","2.1.10"},

{stdlib,"ERTS CXC 138 10","1.17.5"},

{kernel,"ERTS CXC 138 10","2.14.5"}]},

{os,{unix,linux}},

{erlang_version,"Erlang R14B04 (erts-5.8.5) [source] [64-bit] [rq:1] [async-threads:30] [kernel-poll:true]\n"},

{memory,[{total,27101856},

{connection_procs,2648},

{queue_procs,5296},

{plugins,0},

{other_proc,9182320},

{mnesia,57456},

{mgmt_db,0},

{msg_index,21848},

{other_ets,765504},

{binary,3296},

{code,14419185},

{atom,1354457},

{other_system,1289846}]},

{vm_memory_high_watermark,0.4},

{vm_memory_limit,838362726},

{disk_free_limit,1000000000},

{disk_free,15992676352},

{file_descriptors,[{total_limit,924},

{total_used,3},

{sockets_limit,829},

{sockets_used,1}]},

{processes,[{limit,1048576},{used,122}]},

{run_queue,0},

{uptime,4}]

...done.

netstat -nlp | grep beam # the default (AMQP) listening port is 5672

tcp 0 0 0.0.0.0:44422 0.0.0.0:* LISTEN 43198/beam

tcp 0 0 :::5672 :::* LISTEN 43198/beam
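Note that the output above shows only port 5672 (AMQP). MCollective's activemq and rabbitmq connectors speak STOMP, and RabbitMQ only listens for STOMP (port 61613 by default) once the bundled plugin has been enabled with `rabbitmq-plugins enable rabbitmq_stomp` and the broker restarted. A small helper makes this check scriptable. The name `port_listening` is hypothetical, and it assumes Linux `netstat -nl` output in which the fourth column is the local address and the sixth is the socket state.

```shell
#!/bin/sh
# port_listening PORT
# Reads `netstat -nl`-style lines on stdin and succeeds if any socket is in
# LISTEN state on PORT, whether bound to IPv4 (0.0.0.0:PORT) or IPv6 (:::PORT).
port_listening() {
  awk -v port="$1" '
    $6 == "LISTEN" {
      # the last ":"-separated piece of the local address is the port
      n = split($4, part, ":")
      if (part[n] == port) found = 1
    }
    END { exit !found }
  '
}
```

Usage on the broker host: `netstat -nlt | port_listening 61613 || echo "STOMP port 61613 is not listening"`. The same check applies to ActiveMQ after section 3.1.3.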

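4 Installing and Configuring MCollective

4.1 Installing MCollective

4.1.1 Installing the MCollective Client on the Control Node (master)

yum install mcollective-common mcollective-client # depends on rubygem-stomp

4.1.2 Installing the MCollective Server on the Nodes (clients)

[root@vm1 ~]# yum install mcollective mcollective-common # depends on rubygem-stomp, rubygems and related Ruby packages

4.2 Configuring MCollective

4.2.1 Configuring the MCollective Client on the Control Node (master): vim /etc/mcollective/client.cfg

#topicprefix = /topic/ # this option was removed in MCollective 2.4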

main_collective = mcollective

collectives = mcollective

libdir = /usr/libexec/mcollective

logger_type = console

loglevel = warn

# Plugins

securityprovider = psk

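# Shared key for MCollective communication; it must match the MCollective servers.
# Keep comments on their own lines: MCollective does not appear to strip a trailing "# ..." from a value.

plugin.psk = a36cd839414370e10fd281b8a38a4f48

# Connector: MCollective 2.4 cannot use the generic stomp connector; choose the activemq or rabbitmq connector

connector = activemq

plugin.activemq.pool.size = 1

# middleware address

plugin.activemq.pool.1.host = 192.168.56.1

# middleware listening port

plugin.activemq.pool.1.port = 61613

# middleware account

plugin.activemq.pool.1.user = mcollective

# middleware password

plugin.activemq.pool.1.password = secret

# set this for ActiveMQ 5.8 or newer; the connector then uses STOMP 1.1 with heartbeats

plugin.activemq.heartbeat_interval = 30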

# Facts

factsource = yaml

plugin.yaml = /etc/mcollective/facts.yaml
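The plugin.psk value above is simply a shared random string, and the same string must appear in every client.cfg and server.cfg. One way to generate a fresh one, sketched here with a hypothetical `gen_psk` helper, is to read 16 random bytes and print them as 32 hex characters, the same shape as the key used in this article; `openssl rand -hex 16` does the same if OpenSSL is installed. The psk security provider uses this key to sign messages, not to encrypt them, so the middleware credentials need protecting as well.

```shell
#!/bin/sh
# gen_psk: print 16 bytes from /dev/urandom as 32 lowercase hex characters (no newline).
gen_psk() {
  od -An -N16 -tx1 /dev/urandom | tr -d ' \n'
}
```

Usage: `echo "plugin.psk = $(gen_psk)"`, then paste the same line into client.cfg on the master and server.cfg on every node.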

4.2.2 Configuring the MCollective Server on the Nodes (clients): nearly identical to the client configuration

[root@vm1 ~]# vim /etc/mcollective/server.cfg

#topicprefix = /topic/

main_collective = mcollective

collectives = mcollective

libdir = /usr/libexec/mcollective

logfile = /var/log/mcollective.log

loglevel = info

daemonize = 1

# Plugins

securityprovider = psk

plugin.psk = a36cd839414370e10fd281b8a38a4f48

connector = activemq

#plugin.stomp.host = 192.168.56.1

#plugin.stomp.port = 61613

#plugin.stomp.user = mcollective

#plugin.stomp.password = secret

plugin.activemq.pool.size = 1

plugin.activemq.pool.1.host = 192.168.56.1

plugin.activemq.pool.1.port = 61613

plugin.activemq.pool.1.user = mcollective

plugin.activemq.pool.1.password = secret

# set this for ActiveMQ 5.8 or newer; the connector then uses STOMP 1.1 with heartbeats

plugin.activemq.heartbeat_interval = 30

# Facts

factsource = yaml

plugin.yaml = /etc/mcollective/facts.yaml
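Because client.cfg and server.cfg must agree on the key, connector and middleware settings, comparing the two files catches most connection problems. The sketch below uses hypothetical `cfg_get` and `check_mco_cfg` helpers. It mimics what, to our understanding, MCollective's own parser does: lines starting with `#` are skipped, the last occurrence of a key wins, and a trailing `# comment` after a value is not stripped, so a comment written on the same line as `plugin.psk` would silently change the key.

```shell
#!/bin/sh
# cfg_get FILE KEY
# Prints the value of "KEY = value" from an MCollective-style config file.
# Lines starting with '#' never match; the last occurrence wins; trailing
# whitespace is trimmed, but a trailing "# ..." stays part of the value.
cfg_get() {
  key_re=$(printf '%s' "$2" | sed 's/\./\\./g')   # escape dots in keys like plugin.psk
  sed -n "s/^[[:space:]]*${key_re}[[:space:]]*=[[:space:]]*//p" "$1" |
    tail -n 1 | sed 's/[[:space:]]*$//'
}

# check_mco_cfg CLIENT_CFG SERVER_CFG
# Reports every setting that should be identical on both sides but is not.
check_mco_cfg() {
  status=0
  for key in securityprovider plugin.psk connector \
             plugin.activemq.pool.1.host plugin.activemq.pool.1.port \
             plugin.activemq.pool.1.user plugin.activemq.pool.1.password; do
    c=$(cfg_get "$1" "$key")
    s=$(cfg_get "$2" "$key")
    if [ "$c" != "$s" ]; then
      printf 'MISMATCH %s: client=[%s] server=[%s]\n' "$key" "$c" "$s"
      status=1
    fi
  done
  return "$status"
}
```

Since client.cfg lives on the master and server.cfg on each node, copy one file next to the other first (for example with scp) and then run `check_mco_cfg client.cfg server.cfg`.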

Start the MCollective server:

[root@vm1 ~]# /etc/rc.d/init.d/mcollective start

Starting mcollective: [ OK ]

[root@vm1 ~]# chkconfig mcollective on

5 Testing Communication Between MCollective and the Middleware

[root@rango ~]# mco help

The Marionette Collective version 2.4.1

completion Helper for shell completion systems

facts Reports on usage for a specific fact

find Find hosts using the discovery system matching filter criteria

help Application list and help

inventory General reporting tool for nodes, collectives and subcollectives

ping Ping all nodes

plugin MCollective Plugin Application

puppet Schedule runs, enable, disable and interrogate the Puppet Agent

rpc Generic RPC agent client application

[root@rango ~]# mco ping

vm1.sysu time=71.77 ms

vm3.sysu time=78.11 ms

vm2.sysu time=80.98 ms

vm4.sysu time=84.78 ms

---- ping statistics ----

4 replies max: 84.78 min: 71.77 avg: 78.91


[root@rango ~]# mco find

vm3.sysu

vm1.sysu

vm4.sysu

vm2.sysu

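6 Installing and Testing MCollective Plugins

MCollective can be extended in several ways. The most common is to reuse agent plugins that have already been written: small Ruby libraries that let MCollective run custom commands across the whole cluster.

An agent plugin usually consists of a Ruby library that must be distributed to every node running the MCollective server. In addition, a data definition file (DDL) describes in detail the input arguments the plugin accepts; the DDL file must be present on the MCollective client systems. Finally, an application script that drives the agent plugin from MCollective must also be installed on every MCollective client system.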
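The split described above can be checked on each host. The helper below is a hypothetical sketch: it assumes the libdir used in this article (/usr/libexec/mcollective), with agents under mcollective/agent/ and, to our understanding, client application scripts under mcollective/application/. It only checks the minimum the paragraph calls for: a server needs AGENT.rb, a client needs AGENT.ddl plus the application script.

```shell
#!/bin/sh
# check_agent_files LIBDIR AGENT ROLE
# ROLE=server: the agent's Ruby library must be present.
# ROLE=client: the agent's DDL and the mco application script must be present.
# Prints each missing file; returns 0 only if nothing is missing.
check_agent_files() {
  libdir=$1 agent=$2 role=$3 missing=0
  case $role in
    server) files="agent/$agent.rb" ;;
    client) files="agent/$agent.ddl application/$agent.rb" ;;
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  for f in $files; do
    if [ ! -f "$libdir/mcollective/$f" ]; then
      echo "missing: $libdir/mcollective/$f"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: on a node such as vm1, `check_agent_files /usr/libexec/mcollective puppet server`; on the control node, `check_agent_files /usr/libexec/mcollective puppet client`.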

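Note: more plugins are available at https://github.com/puppetlabs/mcollective-plugins.

6.1 Installing the Puppet Agent Plugin

MCollective does not ship with a ready-to-use Puppet agent plugin, so it must be installed separately. The plugin lets an operator run the Puppet agent on demand, without waiting for the agent's default run interval and without needing other tools to start the run.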

6.1.1 Installing the MCollective Agent Plugin

[root@vm1 ]# yum install mcollective-puppet-agent mcollective-puppet-common

[root@vm1 ]# ll /usr/libexec/mcollective/mcollective/agent/

total 36

-rw-r--r-- 1 root root 1033 May 21 01:34 discovery.rb

-rw-r--r-- 1 root root 8346 May 14 07:28 puppet.ddl

-rw-r--r-- 1 root root 7975 May 14 07:25 puppet.rb

-rw-r--r-- 1 root root 5999 May 21 01:34 rpcutil.ddl

-rw-r--r-- 1 root root 3120 May 21 01:34 rpcutil.rb

[root@rango ]# yum install mcollective-puppet-client mcollective-puppet-common

[root@rango ]# ll /usr/libexec/mcollective/mcollective/agent/

total 28

-rw-r--r-- 1 root root 1033 May 21 01:34 discovery.rb

-rw-r--r-- 1 root root 8346 May 14 07:28 puppet.ddl

-rw-r--r-- 1 root root 5999 May 21 01:34 rpcutil.ddl

-rw-r--r-- 1 root root 3120 May 21 01:34 rpcutil.rb

6.1.2 Loading the Agent Plugin

[root@rango ~]# mco # the client loads new plugins automatically

The Marionette Collective version 2.4.1

usage: /usr/bin/mco command <options>

Known commands:

completion facts find

help inventory ping

plugin puppet rpc

Type '/usr/bin/mco help' for a detailed list of commands and '/usr/bin/mco help command'

to get detailed help for a command

On the node, restart the MCollective server so that it loads the new agent:

[root@vm1 mcollective]# /etc/init.d/mcollective restart

Shutting down mcollective: [ OK ]

Starting mcollective: [ OK ]

6.1.3 Verifying That the Agent Plugin Is Loaded

[root@rango ~]# mco inventory vm1.sysu

warn 2014/03/21 10:28:29: activemq.rb:274:in `connection_headers' Connecting without STOMP 1.1 heartbeats, if you are using ActiveMQ 5.8 or newer consider setting plugin.activemq.heartbeat_interval

Inventory for vm1.sysu:

Server Statistics:

Version: 2.4.1

Start Time: Fri Mar 21 10:17:32 +0800 2014

Config File: /etc/mcollective/server.cfg

Collectives: mcollective

Main Collective: mcollective

Process ID: 22042

Total Messages: 7

Messages Passed Filters: 7

Messages Filtered: 0

Expired Messages: 0

Replies Sent: 6

Total Processor Time: 0.24 seconds

System Time: 1.07 seconds

Agents:

discovery puppet rpcutil

Data Plugins:

agent fstat puppet

resource

Configuration Management Classes:

No classes applied

Facts:

mcollective => 1

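6.1.4 Running Puppet from MCollective

Before running the commands, you can watch the Puppet log on a node, and whether the puppetd service starts and stops, to tell whether a command actually invoked the puppetd process.

[root@rango ~]# mco puppet --noop --verbose status # check the state of the agent daemon on each node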

Discovering hosts using the mc method for 2 second(s) .... 4

* [ =========================================================> ] 4 / 4

vm1.sysu: Currently stopped; last completed run 44 minutes 34 seconds ago

vm3.sysu: Currently stopped; last completed run 44 minutes 45 seconds ago

vm4.sysu: Currently stopped; last completed run 41 minutes 59 seconds ago

vm2.sysu: Currently stopped; last completed run 44 minutes 31 seconds ago

Summary of Applying:

false = 4

Summary of Daemon Running:

stopped = 4

Summary of Enabled:

enabled = 4

Summary of Idling:

false = 4

Summary of Status:

stopped = 4

---- rpc stats ----

Nodes: 4 / 4

Pass / Fail: 4 / 0

Start Time: Mon Jan 06 16:55:22 +0800 2014

Discovery Time: 2003.21ms

Agent Time: 439.50ms

Total Time: 2442.71ms

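Note: when running Puppet through MCollective, the Puppet agent daemon should be stopped on all managed nodes. Each time the mco puppet -v runonce command invokes the Puppet agent, MCollective spawns a new Puppet process, which duplicates the work of any Puppet agent daemon that is already running.

The runonce action starts the agent in the background (the plugin runs puppet agent --onetime --daemonize, as the output in section 6.3.2 shows). So even when MCollective reports success, the Puppet run itself may still fail; check the Puppet reports to determine the outcome of each agent run. The OK returned by MCollective only means that the MCollective server successfully started the puppetd process and received no output from it.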
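Since MCollective's OK says nothing about the Puppet run itself, the result has to be read on the node. A minimal sketch, assuming Puppet 3's default summary file /var/lib/puppet/state/last_run_summary.yaml and its failed counter under the resources section. The helper names are hypothetical, and the awk parsing only handles that simple "section: / key: value" layout, not arbitrary YAML.

```shell
#!/bin/sh
# run_failed_count [SUMMARY_FILE]
# Prints the "failed" counter from the "resources:" section of a Puppet
# last-run summary; fails if the file or the counter is missing.
run_failed_count() {
  awk '
    /^[ \t]*[A-Za-z_]+:[ \t]*$/ {   # a section header such as "resources:"
      section = $1
      sub(/:$/, "", section)
      next
    }
    section == "resources" && $1 == "failed:" { print $2; found = 1; exit }
    END { if (!found) exit 1 }
  ' "${1:-/var/lib/puppet/state/last_run_summary.yaml}"
}

# puppet_run_ok [SUMMARY_FILE]: succeed only if the last run had zero failed resources.
puppet_run_ok() {
  [ "$(run_failed_count "$@")" = "0" ]
}
```

Run `puppet_run_ok && echo ok || echo "last Puppet run had failures"` on a node some time after `mco puppet runonce`, once the background run has finished.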

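6.2 Installing the Facter Plugin (Unstable in Testing)

Caution: in testing, retrieving node facts through the facter plugin was not very stable. An alternative is to have Puppet write each node's facts into /etc/mcollective/facts.yaml with inline_template and set factsource = yaml in /etc/mcollective/server.cfg, so that the MCollective server simply reads the facts from that file. A copy of each node's facts is also generated under /var/lib/puppet/yaml/facts/.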
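The approach above uses a Puppet inline_template to write facts.yaml. A different technique, shown here only as an alternative, is to let Facter write the file itself, for example from a cron job. The sketch assumes Facter 1.7's `-p` (also load Puppet-synced custom facts) and `--yaml` options; `refresh_facts_yaml` and the `FACTS_CMD` override (used only for testing) are hypothetical. The output goes to a temporary file first and is renamed into place, so mcollectived never reads a half-written file.

```shell
#!/bin/sh
# refresh_facts_yaml [TARGET]
# Regenerates MCollective's YAML fact cache from Facter.
# Assumes Facter 1.7: -p also loads Puppet-synced custom facts, --yaml prints YAML.
# FACTS_CMD is a hypothetical override (e.g. for testing); it defaults to Facter.
refresh_facts_yaml() {
  target=${1:-/etc/mcollective/facts.yaml}
  tmp="$target.tmp.$$"
  # Write to a temp file in the same directory, then rename it into place.
  # Refuse to install empty output (e.g. if Facter failed).
  if ${FACTS_CMD:-facter -p --yaml} > "$tmp" && [ -s "$tmp" ]; then
    chmod 0400 "$tmp" && mv -f "$tmp" "$target"   # readable by its owner only
  else
    rm -f "$tmp"
    return 1
  fi
}
```

With factsource = yaml set in server.cfg, calling `refresh_facts_yaml` periodically keeps the cache current; how often to run it is a local choice.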

Install the facter plugin:

[root@vm1 ~]# yum install mcollective-facter-facts

[root@vm1 ~]# ll /usr/libexec/mcollective/mcollective/facts/

total 12

-rw-r--r-- 1 root root 422 Feb 21 2013 facter_facts.ddl

-rw-r--r-- 1 root root 945 Feb 21 2013 facter_facts.rb

-rw-r--r-- 1 root root 1536 Feb 11 01:00 yaml_facts.rb

[root@vm1 ~]# vim /etc/mcollective/server.cfg

...

# Facts

#factsource = yaml

factsource = facter

plugin.yaml = /etc/mcollective/facts.yaml

[root@rango ~]# mco inventory vm1.sysu # check whether vm1 has loaded the facts plugin

Inventory for vm1.sysu:

Server Statistics:

Version: 2.4.1

Start Time: Fri Mar 21 10:45:49 +0800 2014

Config File: /etc/mcollective/server.cfg

Collectives: mcollective

Main Collective: mcollective

Process ID: 22611

Total Messages: 1

Messages Passed Filters: 1

Messages Filtered: 0

Expired Messages: 0

Replies Sent: 0

Total Processor Time: 0.25 seconds

System Time: 0.09 seconds

Agents:

discovery puppet rpcutil

Data Plugins:

agent fstat puppet

resource

Configuration Management Classes:

No classes applied

Facts: # the node's facts now appear (collecting them takes a moment)

architecture => x86_64

augeasversion => 1.0.0

bios_release_date => 12/01/2006

bios_vendor => innotek GmbH

bios_version => VirtualBox

blockdevice_sda_model => VBOX HARDDISK

blockdevice_sda_size => 8589934592

blockdevice_sda_vendor => ATA

blockdevice_sr0_model => CD-ROM

blockdevice_sr0_size => 1073741312

blockdevice_sr0_vendor => VBOX

blockdevice_sr1_model => CD-ROM

blockdevice_sr1_size => 1452388352

blockdevice_sr1_vendor => VBOX

blockdevices => sda,sr0,sr1

boardmanufacturer => Oracle Corporation

boardproductname => VirtualBox

boardserialnumber => 0

domain => fugue.com

facterversion => 1.7.5

filesystems => ext4,iso9660

fqdn => vm1.fugue.com

hardwareisa => x86_64

hardwaremodel => x86_64

hostname => vm1

id => root

interfaces => eth0,eth1,lo

ipaddress => 10.0.2.15

ipaddress_eth0 => 10.0.2.15

ipaddress_eth1 => 192.168.56.101

ipaddress_lo => 127.0.0.1

is_virtual => true

kernel => Linux

kernelmajversion => 2.6

kernelrelease => 2.6.32-431.5.1.el6.x86_64

kernelversion => 2.6.32

lib => /var/lib/puppet/lib/facter:/var/lib/puppet/facts

macaddress => 08:00:27:C0:8D:B6

macaddress_eth0 => 08:00:27:C0:8D:B6

macaddress_eth1 => 08:00:27:31:0A:74

manufacturer => innotek GmbH

memoryfree => 311.51 MB

memoryfree_mb => 311.51

memorysize => 490.29 MB

memorysize_mb => 490.29

memorytotal => 490.29 MB

mtu_eth0 => 1500

mtu_eth1 => 1500

mtu_lo => 16436

netmask => 255.255.255.0

netmask_eth0 => 255.255.255.0

netmask_eth1 => 255.255.255.0

netmask_lo => 255.0.0.0

network_eth0 => 10.0.2.0

network_eth1 => 192.168.56.0

network_lo => 127.0.0.0

operatingsystem => CentOS

operatingsystemmajrelease => 6

operatingsystemrelease => 6.5

osfamily => RedHat

path => /sbin:/usr/sbin:/bin:/usr/bin

physicalprocessorcount => 1

processor0 => Intel(R) Core(TM) i3-2130 CPU @ 3.40GHz

processor1 => Intel(R) Core(TM) i3-2130 CPU @ 3.40GHz

processorcount => 2

productname => VirtualBox

ps => ps -ef

puppetversion => 3.4.3

rubysitedir => /usr/lib/ruby/site_ruby/1.8

rubyversion => 1.8.7

selinux => false

serialnumber => 0

sshdsakey => AAAAB3NzaC1kc3MAAACBAKZzqRqybGg8RwKqMxloDUpJEti02q2mYq2BrNxB9AEkEpFYaTRxSu+aQWsNYz+ghI0dyhKDo0nbkE8htPh9jX7ewcVp7txPKZxiVof/UTx5gZaeLNmbBGSS4mWn/YidKblfPiNodaKinYfgfiI54p0eDVQPG/nTHbe017O23zUZAAAAFQDxxgb+7+bjvdTZNqLmFJxO/UVAywAAAIBa9U1nm7xNnKz0Ricn3b8MReX2XrBHCsEb8udx/qDm+tSbIngJynmr2I1F7qFpb2VyGUJaR09Poe6BMrHPyGoVLzNhSV2n/Kztgc9TWO0ie0h6C4T4wl1rC1FQtk486eqge1PQazruRSGq1Oxv6KIrWOSrVUiCrXtFZ+wuWaoniAAAAIEAlbGgt6clf9y1xdEno4ZcwZ7542jCw2LXUqVf7/3Pip6wLOak/DNwcD29KEPkSP4dR/0jfbKuZ7u7SbFNbTao+1RmyRSbPN1SJr9hxh+15RWN6zwpzaaz7p+YzIQUcMyMdgg+G0owFO949TuyzenDXkTPkSSIqj6Qw0KN8VqsiTA=

sshfp_dsa => SSHFP 2 1 6c30a8cd63c93332dc9fb4f01d3e37d43bb6db93

SSHFP 2 2 3478ea419cd51e58860d7ff4524393866ef339f3cbaeeb5e2c494f6a4dea2cf1

sshfp_rsa => SSHFP 1 1 69ad5764ece1bc85142909d17b4caa9d1b8db972

SSHFP 1 2 c12b3dff41cd300d2318af64d4591b1ef978762f00b1b1f2d080dc07cf3e2150

sshrsakey => AAAAB3NzaC1yc2EAAAABIwAAAQEAxmScYmGYeQWkWvPOfNCmLCViISvYi7ASKQtEOqw5CC+I1DaUSRy3kQLJLwyD8hjeqfG6vzEJYw1EZxDcUgHhQUiWjU4hRD7M+ptojhKOLwAdqR6wUFCrKalDv5u59tgx6nq8CHfPrHNmK0MQxH0yOcxG58ZJDHV2/9V6Z2UyCNRcXUCFfV1jAndrTWt2ffF+vLYoCyBp1Jfys+id9m4jFz51GvTxBZ9V464amR4QCm1iNYfS4wM1mjsIK6WmoatUzN2deuV+/pO7SxcgtteLywVSs6aLhGYvQWPMG5yfiCoMW62+XtCmLcy1t6rqHgeElwSyvZfKX+Y7A3WUd//WJQ==

swapfree => 954.57 MB

swapfree_mb => 954.57

swapsize => 991.99 MB

swapsize_mb => 991.99

timezone => CST

type => Other

uniqueid => a8c06538

uptime => 14:51 hours

uptime_days => 0

uptime_hours => 14

uptime_seconds => 53478

uuid => 1FC4C0D5-0336-46BD-A1F3-B3792346E877

virtual => virtualbox

[root@rango ~]# mco facts operatingsystem -v # use mco facts to show each node's operating system

Discovering hosts using the mc method for 2 second(s) .... 1

Report for fact: operatingsystem

CentOS found 1 times

vm1.sysu

---- rpc stats ----

Nodes: 1 / 1

Pass / Fail: 1 / 0

Start Time: Fri Mar 21 10:56:55 +0800 2014

Discovery Time: 2003.16ms

Agent Time: 60.49ms

Total Time: 2063.65ms

[root@rango ~]# mco facts operatingsystem # use mco facts to count nodes by operating system

Report for fact: operatingsystem

CentOS found 1 times

Finished processing 1 / 1 hosts in 54.61 ms

# check vm1's free memory

[root@rango ~]# mco facts -v --with-fact hostname='vm1.sysu' memoryfree

Discovering hosts using the mc method for 2 second(s) .... 0

No request sent, we did not discover any nodes.Report for fact: memoryfree

---- rpc stats ----

Nodes: 0 / 0

Pass / Fail: 0 / 0

Start Time: Fri Mar 21 10:58:37 +0800 2014

Discovery Time: 2002.65ms

Agent Time: 0.00ms

Total Time: 2002.65ms

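Note: no node was discovered here because of the filter, not because vm1 is a virtual machine. vm1's hostname fact is vm1 (see the facts listed above), while vm1.sysu is its MCollective identity, so --with-fact hostname='vm1.sysu' matches nothing. Filter with --with-fact hostname=vm1, or by identity with -I vm1.sysu, instead.

6.3 Targeting Hosts by Metadata

6.3.1 Targeting Hosts by Default Facter Metadata

1. Trigger the puppetd daemon on all nodes running CentOS 6.5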


[root@rango ~]# mco puppet -v runonce rpc --np -F operatingsystemrelease='6.5' -F operatingsystem='CentOS'

Discovering hosts using the mc method for 2 second(s) .... 1

vm1.sysu : OK

{:summary=>"Signalled the running Puppet Daemon"}

---- rpc stats ----

Nodes: 1 / 1

Pass / Fail: 1 / 0

Start Time: Fri Mar 21 11:01:49 +0800 2014

Discovery Time: 2003.97ms

Agent Time: 64.14ms

Total Time: 2068.11ms

2. Trigger the puppetd daemon on all CentOS nodes running kernel version 2.6.32

[root@rango ~]# mco puppet -v runonce rpc --np -F kernelversion='2.6.32' -F operatingsystem='CentOS'

Discovering hosts using the mc method for 2 second(s) .... 1

vm1.sysu : Puppet is currently applying a catalog, cannot run now

{:summary=>"Puppet is currently applying a catalog, cannot run now"}

---- rpc stats ----

Nodes: 1 / 1

Pass / Fail: 0 / 1

Start Time: Fri Mar 21 11:03:23 +0800 2014

Discovery Time: 2004.44ms

Agent Time: 25.51ms

Total Time: 2029.94ms

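Note: the message "Puppet is currently applying a catalog, cannot run now" appears because the previous command had already triggered vm1's puppetd daemon, which was still applying its catalog.

6.3.2 Targeting Hosts by Custom Facter Metadata

1. Define custom facts my_apply1 and my_apply2 on vm1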

[root@vm1 mcollective]# facter -p | grep my_apply

my_apply1 => apache

my_apply2 => mysql

2. Define custom facts my_apply2 and my_apply3 on vm2

[root@vm2 mcollective]# facter -p | grep my_apply

my_apply2 => mysql

my_apply3 => php

3. Check the nodes' custom facts from the MCollective client

[root@rango facter]# mco inventory vm1.sysu | grep my_apply

my_apply1 => apache

my_apply2 => mysql

[root@rango facter]# mco inventory vm2.sysu | grep my_apply

my_apply2 => mysql

my_apply3 => php

4. Target hosts by custom fact to trigger an update

[root@rango facter]# mco puppet -v runonce --with-fact my_apply3='php' # select the nodes whose fact my_apply3 is php and trigger their puppetd daemon

Discovering hosts using the mc method for 2 second(s) .... 1

* [ ============================================================> ] 1 / 1

vm2.sysu : OK

{:summary=> "Started a background Puppet run using the 'puppet agent --onetime --daemonize --color=false --splay --splaylimit 30' command"}

---- rpc stats ----

Nodes: 1 / 1

Pass / Fail: 1 / 0

Start Time: Thu Oct 03 23:33:54 +0800 2013

Discovery Time: 2005.35ms

Agent Time: 1078.86ms

Total Time: 3084.21ms
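The steps above do not show how my_apply1 to my_apply3 were defined. One option, assuming Facter 1.7 or newer, is an external fact: a text file of key=value lines in /etc/facter/facts.d/. The helper name `set_external_fact` is hypothetical. If the nodes use factsource = yaml, regenerate /etc/mcollective/facts.yaml afterwards so that MCollective sees the new facts.

```shell
#!/bin/sh
# set_external_fact NAME VALUE [DIR]
# Writes DIR/NAME.txt containing "NAME=VALUE". Assumes Facter >= 1.7, which
# reads key=value text files from /etc/facter/facts.d as external facts.
set_external_fact() {
  dir=${3:-/etc/facter/facts.d}
  mkdir -p "$dir" || return 1
  printf '%s=%s\n' "$1" "$2" > "$dir/$1.txt"
}
```

On vm2, for example: `set_external_fact my_apply2 mysql; set_external_fact my_apply3 php`, then confirm with `facter -p | grep my_apply`.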

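7 Summary

The MCollective + middleware architecture lets you push Puppet updates more efficiently, securely and flexibly, and the same architecture can be applied to similar centralized operations environments.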

――Rango Chen