Deploying Oracle RAC 11g with ASM on Red Hat Enterprise Linux 5.4 in VMware Server

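Part 1: Preparing the environment

1. Hardware configuration

Host PC: Core i3, 8 GB RAM, 500 GB disk

Each VM: 1 core, 2 GB RAM, 25 GB disk

2. Software and versions

OS: Red Hat Enterprise Linux 5.4 x64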

Grid Infrastructure 11gR2

Oracle 11gR2

3. Installation plan

Users: grid and oracle

Software components:

| Software | OS user | Primary group | Secondary groups | Base directory | Home directory |
|---|---|---|---|---|---|
| Grid Infrastructure | grid | oinstall | asmadmin, asmdba, asmoper, oper, dba | /u01/app/grid | /u01/app/11.2.0/grid |
| Oracle Database | oracle | oinstall | dba, asmdba, oper | /u01/app/oracle | $ORACLE_BASE/product/11.2.0/db_1 |

User groups:

| Group | Member users | Description |
|---|---|---|
| asmadmin | grid | ASM administrator (OSASM) group |
| asmdba | grid, oracle | ASM database administrator (OSDBA for ASM) group |
| asmoper | grid | ASM operator (OSOPER for ASM) group |
| oper | grid, oracle | Database operator (OSOPER) group |
| dba | grid, oracle | Database administrator (OSDBA) group |
| oinstall | grid, oracle | Oracle Inventory group (oinstall) |

Network:

| Node | Public IP | VIP | Private IP | SCAN IP |
|---|---|---|---|---|
| RAC1 | 172.20.10.3 | 172.20.10.5 | 10.0.0.1 | 172.20.10.7 (cluster-wide) |
| RAC2 | 172.20.10.4 | 172.20.10.6 | 10.0.0.2 | |

Host Ethernet adapter "VMware Network Adapter VMnet1": 172.20.10.11

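Part 2: Install VMware Server and the Linux OS

Step I: Installing VMware Server is omitted here; simply click Next through the wizard.

Step II: Install Red Hat Linux 5.4

1. Log in to VMware Server, click Virtual Machines -> "Create Virtual Machine", enter "RAC1" in "Name" -> "Next", as shown below:

(Figure: logging in)

(Figure: creating the VM)

2. Select Linux as the OS and choose Red Hat Enterprise Linux 5 64-bit under Version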

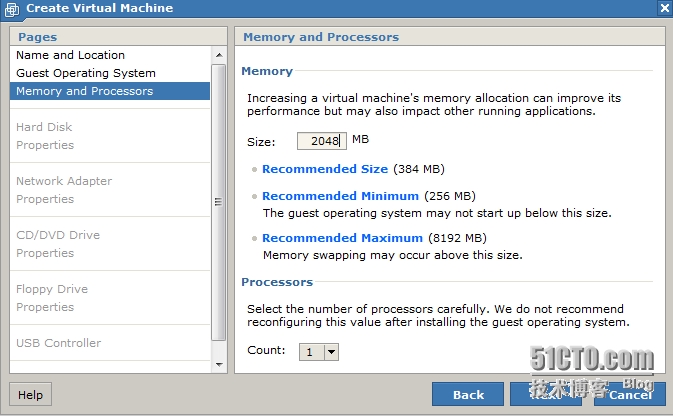

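3. Allocate 2048 MB of memory

4. Allocate the virtual disk (25 GB, per the plan above)

5. Configure the network adapter in "HostOnly" mode

6. Choose the OS ISO image: select "Use ISO Image"

7. Browse to the directory containing the ISO image

8. Select the ISO file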

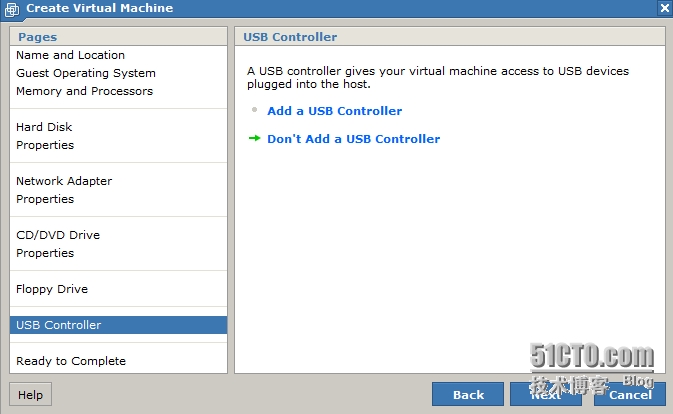

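9. For the floppy drive, select "Don't Add a Floppy Drive", and also select "Don't Add a USB Controller"

10. On "Ready to Complete", click "Finish"; the VM powers on automatically and the installation starts

11. Add a second network adapter

In the right-hand pane, under "Commands", click "Add Hardware"; in the Hardware Type dialog select Network Adapter and set Network Connection to HostOnly.

12. Click the VM, and in its sidebar select Console -> "Open the console in a new window" to install the OS through VMware Remote Console. The detailed OS installation steps are omitted.

This completes the OS installation with VMware.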

Step III: Configure the system environment

Configure a local yum repository (see "Configure a local yum repository" at the end of this document) and install the RPM packages Oracle requires:

yum install binutils binutils-devel compat-libstdc++-33 elfutils-libelf elfutils-libelf-devel gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers ksh libaio libaio-devel libgcc libstdc++ libstdc++-devel make sysstat unixODBC unixODBC-devel
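Before or after running yum, a small check can show which required packages are still missing. This is a minimal sketch assuming the RHEL 5 package names listed above, with compat-libstdc++-33 taken as the RHEL 5 name of the C++ compatibility library; `missing_pkgs` and `REQUIRED_PKGS` are illustrative names, not part of any Oracle tool.

```shell
# Sketch: list which of the RPMs named in the yum command above are not installed yet.
# Assumption: RHEL 5 package names; compat-libstdc++-33 is taken as the RHEL 5 name
# of the C++ compatibility library. missing_pkgs is a hypothetical helper.
REQUIRED_PKGS="binutils binutils-devel compat-libstdc++-33 elfutils-libelf
elfutils-libelf-devel gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers
ksh libaio libaio-devel libgcc libstdc++ libstdc++-devel make sysstat
unixODBC unixODBC-devel"

# Print every package that `rpm -q` cannot find; return 1 if any are missing.
missing_pkgs() {
    local pkg rc=0
    for pkg in "$@"; do
        if ! rpm -q "$pkg" >/dev/null 2>&1; then
            echo "$pkg"
            rc=1
        fi
    done
    return "$rc"
}
```

For example, `missing_pkgs $REQUIRED_PKGS` prints the packages still to be installed; the variable is deliberately left unquoted so that it splits into one argument per package.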

/etc/sysctl.conf

Comment out the existing kernel.shmmax and kernel.shmall entries, then add:

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 2097152

kernel.shmmax = 1073741824

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

Run /sbin/sysctl -p to apply the settings.
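After `sysctl -p`, the running values can be compared with the file. The sketch below assumes the settings are kept in `key = value` form as above and maps each key to its `/proc/sys` path (dots become slashes); `check_sysctl` and the `PROC_SYS` override are hypothetical names. Because Oracle's figures are minimums, a `DIFF` line for a value that is already higher is not necessarily a problem.

```shell
# Sketch: compare "key = value" lines with the running kernel values in /proc/sys.
# Assumption: PROC_SYS is a hypothetical override so the check can run against a test tree.
PROC_SYS=${PROC_SYS:-/proc/sys}

# Usage: check_sysctl FILE  -> prints OK / DIFF / MISSING per key, returns 1 on any mismatch.
check_sysctl() {
    local key val path want have rc=0
    while IFS='=' read -r key val || [ -n "$key" ]; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        case "$key" in ''|'#'*) continue ;; esac       # skip blank and comment lines
        path="$PROC_SYS/$(printf '%s' "$key" | tr . /)" # fs.file-max -> fs/file-max
        if [ ! -r "$path" ]; then
            echo "MISSING $key"; rc=1; continue
        fi
        want=$(echo $val)              # unquoted on purpose: squeeze spaces
        have=$(echo $(cat "$path"))    # /proc uses tabs between multi-value fields
        if [ "$want" = "$have" ]; then
            echo "OK $key"
        else
            echo "DIFF $key: running '$have', wanted '$want'"; rc=1
        fi
    done < "$1"
    return "$rc"
}
```

Run it as `check_sysctl /etc/sysctl.conf`.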

/etc/security/limits.conf

Append at the end:

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536
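Appending these lines by hand twice leaves duplicates. A minimal idempotent sketch, assuming the same eight entries and the default path /etc/security/limits.conf; `add_oracle_limits` is a hypothetical name.

```shell
# Sketch: append the grid/oracle limits above only if each exact line is not present yet.
# Assumption: default target is /etc/security/limits.conf; pass another path to test.
add_oracle_limits() {
    local file="${1:-/etc/security/limits.conf}" user line
    for user in grid oracle; do
        for line in "$user soft nproc 2047" "$user hard nproc 16384" \
                    "$user soft nofile 1024" "$user hard nofile 65536"; do
            # -x: whole-line match, -F: fixed string (no regex interpretation)
            grep -qxF "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
        done
    done
}
```

Run it as root (`add_oracle_limits`); running it again adds nothing.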

/etc/pam.d/login

Add:

session required pam_limits.so

Create /etc/profile.d/oracleset.sh:

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

Create the users and groups (oracle and grid)

Users and passwords:

oracle: FANfan

grid: FANfan

groupadd -g 501 oinstall

groupadd -g 502 dba

groupadd -g 503 oper

groupadd -g 504 asmadmin

groupadd -g 505 asmoper

groupadd -g 506 asmdba

useradd -u 500 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid

useradd -u 501 -g oinstall -G dba,asmdba,oper oracle

echo "password" |passwd oracle --stdin

echo "password" |passwd grid --stdin
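To confirm that the accounts match the planning table, the output of `id -nG` can be compared with the required groups. A minimal sketch; `check_groups` is a hypothetical helper.

```shell
# Sketch: check that a user belongs to every listed group, using `id -nG`.
# check_groups is a hypothetical helper; a nonexistent user fails every group.
check_groups() {
    local user="$1" g rc=0 have
    shift
    # Pad with spaces so each group name is matched as a whole word.
    have=" $(id -nG "$user" 2>/dev/null) "
    for g in "$@"; do
        case "$have" in
            *" $g "*) ;;
            *) echo "$user is not in group $g"; rc=1 ;;
        esac
    done
    return "$rc"
}
```

With the commands above, `check_groups grid oinstall asmadmin asmdba asmoper oper dba` and `check_groups oracle oinstall dba asmdba oper` should print nothing and return 0.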

Directories:

mkdir -p /u01/app/oraInventory

mkdir -p /u01/app/11.2.0/grid

chown -R grid:oinstall /u01/

mkdir -p /u01/app/oracle

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/app
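The ownership and modes set above can be verified with GNU `stat`, as shipped with RHEL. A sketch with a hypothetical `check_dir` helper.

```shell
# Sketch: verify owner:group and octal mode of a directory (GNU coreutils stat).
# Usage: check_dir PATH OWNER:GROUP MODE   (check_dir is a hypothetical helper)
check_dir() {
    local actual
    actual=$(stat -c '%U:%G %a' "$1" 2>/dev/null) || { echo "missing: $1"; return 1; }
    if [ "$actual" != "$2 $3" ]; then
        echo "$1: have '$actual', want '$2 $3'"
        return 1
    fi
}
```

After the commands above, `check_dir /u01/app/11.2.0/grid grid:oinstall 775` and `check_dir /u01/app/oracle oracle:oinstall 775` should both succeed.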

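Disable iptables and SELinux:

service iptables stop

chkconfig iptables off

Set SELINUX=disabled in /etc/selinux/config (this takes effect after a reboot; setenforce 0 switches SELinux to permissive mode immediately).

.bash_profile settings for each user: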

#grid config

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export ORACLE_SID=+ASM1

export PATH=$ORACLE_HOME/bin:/usr/sbin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export ORACLE_HOSTNAME=rac1.localdomain

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

In both the grid and oracle profiles, set ORACLE_HOSTNAME to the node's own host name.

#Oracle config

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export ORACLE_UNQNAME=orcl1

export ORACLE_SID=orcl1

export PATH=$ORACLE_HOME/bin:/usr/sbin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export ORACLE_HOSTNAME=rac1.localdomain

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

Configure /etc/hosts (same content on both nodes):

127.0.0.1 localhost.localdomain localhost

# Public

172.20.10.3 rac1.localdomain rac1

172.20.10.4 rac2.localdomain rac2

# Private

10.0.0.1 rac1-priv.localdomain rac1-priv

10.0.0.2 rac2-priv.localdomain rac2-priv

# Virtual

172.20.10.5 rac1-vip.localdomain rac1-vip

172.20.10.6 rac2-vip.localdomain rac2-vip

# SCAN

172.20.10.7 scan.localdomain scan

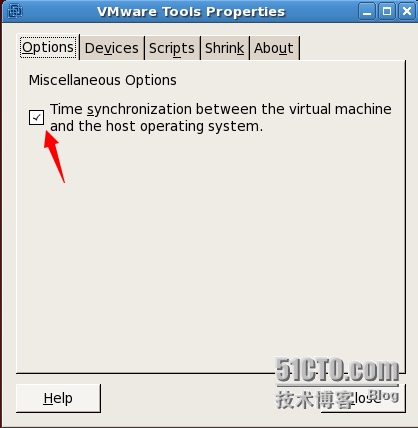
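Because /etc/hosts must be identical on both nodes, it can help to generate it from a single table. A sketch assuming the addresses and the localdomain suffix from the network plan; `gen_hosts`, `NODES`, `DOMAIN` and `SCAN_IP` are illustrative names.

```shell
# Sketch: print the /etc/hosts block above from one table of node addresses.
# Assumption: values come from the network plan; adjust NODES/SCAN_IP for your site.
DOMAIN=localdomain
SCAN_IP=172.20.10.7
# Columns: node name, public IP, VIP, private IP
NODES='rac1 172.20.10.3 172.20.10.5 10.0.0.1
rac2 172.20.10.4 172.20.10.6 10.0.0.2'

gen_hosts() {
    echo "127.0.0.1 localhost.localdomain localhost"
    echo "# Public"
    echo "$NODES" | while read -r name pub vip priv; do echo "$pub $name.$DOMAIN $name"; done
    echo "# Private"
    echo "$NODES" | while read -r name pub vip priv; do echo "$priv $name-priv.$DOMAIN $name-priv"; done
    echo "# Virtual"
    echo "$NODES" | while read -r name pub vip priv; do echo "$vip $name-vip.$DOMAIN $name-vip"; done
    echo "# SCAN"
    echo "$SCAN_IP scan.$DOMAIN scan"
}
```

Review the output first (for example `gen_hosts > /tmp/hosts.rac`), then copy it into /etc/hosts on each node.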

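Install VMware Tools

Switch to the root user.

Change into the VMware Tools installation directory and run:

./vmware-install.pl (press Enter to accept every default)

[root@rac1 ~]# vmware-toolbox

Select the time synchronization option (between the virtual machine and the host).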

Either configure NTP, or make sure it is not configured so the Oracle Cluster Time Synchronization Service (ctssd) can synchronize the times of the RAC nodes. To deconfigure NTP, do the following:

# service ntpd stop

Shutting down ntpd: [ OK ]

# chkconfig ntpd off

# mv /etc/ntp.conf /etc/ntp.conf.orig

# rm /var/run/ntpd.pid

If you want to use NTP, you must add the "-x" option to the following line in the "/etc/sysconfig/ntpd" file:

OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"

Then restart NTP.

# service ntpd restart
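If NTP is kept, a quick check can confirm that the OPTIONS line carries `-x` as required above. A sketch assuming the RHEL 5 file layout shown above; `ntp_has_slew` is a hypothetical helper.

```shell
# Sketch: succeed only if the OPTIONS= line in the ntpd sysconfig file contains -x.
# Assumption: RHEL 5 layout (/etc/sysconfig/ntpd); ntp_has_slew is a hypothetical helper.
ntp_has_slew() {
    local file="${1:-/etc/sysconfig/ntpd}"
    # -x must stand alone: preceded by "=", a quote or whitespace, followed by a quote,
    # whitespace or end of line.
    grep -Eq '^OPTIONS=(.*["[:space:]])?-x(["[:space:]]|$)' "$file"
}
```

Run `ntp_has_slew && service ntpd restart` after editing the file.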

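Add the shared disks:

Shut down the VM:

1. shutdown -h now

2. In the right-hand pane, click "Add Hardware"

3. Select "Hard Disk" -> "Next" -> "Create a New Virtual Disk" -> "Next"

4. Click "Browse" to store the .vmdk file in a separate directory of its own, and select "Persistent"

5. Select SCSI 1 as the virtual device node, with device numbers starting from 1 (SCSI 1:1)

6. Click "Next" -> "Finish"

Repeat steps 2 to 6 to add five disks in total.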

Edit the VM's .vmx file

Add the following:

scsi1:1.redo = ""

scsi1:2.redo = ""

scsi1:3.redo = ""

scsi1:4.redo = ""

scsi1:5.redo = ""

scsi1:1.deviceType = "disk"

scsi1:2.deviceType = "disk"

scsi1:3.deviceType = "disk"

scsi1:4.deviceType = "disk"

scsi1:5.deviceType = "disk"

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.dataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

disk.EnableUUID = "TRUE"

reslck.timeout = "600"

The reslck.timeout parameter is important: the default is reportedly about 20 seconds, and when it is exceeded the VM may hang or shut itself down with an error.
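The same lines repeat for every shared disk, so they can be generated rather than typed. A sketch that reproduces the settings above for N disks on controller scsi1; `gen_vmx_shared` is a hypothetical name. Append its output to the .vmx file only while the VM is powered off.

```shell
# Sketch: print the shared-disk .vmx settings above for N disks on scsi1 (default 5).
# gen_vmx_shared is a hypothetical helper; values are copied from the list above.
gen_vmx_shared() {
    local n="${1:-5}" i=1
    while [ "$i" -le "$n" ]; do
        echo "scsi1:$i.redo = \"\""
        i=$((i + 1))
    done
    i=1
    while [ "$i" -le "$n" ]; do
        echo "scsi1:$i.deviceType = \"disk\""
        i=$((i + 1))
    done
    # Settings shared by all disks.
    cat <<'EOF'
disk.locking = "FALSE"
diskLib.dataCacheMaxSize = "0"
diskLib.dataCacheMaxReadAheadSize = "0"
diskLib.dataCacheMinReadAheadSize = "0"
diskLib.dataCachePageSize = "4096"
diskLib.maxUnsyncedWrites = "0"
disk.EnableUUID = "TRUE"
reslck.timeout = "600"
EOF
}
```

For example, `gen_vmx_shared 5 >> RAC1.vmx`, where the actual .vmx file name depends on how the VM was created.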

After adding the disks, power on the VM and check the new hardware.

Log in and create a partition on each new disk:

[root@rac1 ~]# ls -ls /dev/sd*

0 brw-r----- 1 root disk 8, 0 Mar 7 15:28 /dev/sda

0 brw-r----- 1 root disk 8, 1 Mar 7 15:28 /dev/sda1

0 brw-r----- 1 root disk 8, 2 Mar 7 15:28 /dev/sda2

0 brw-r----- 1 root disk 8, 16 Mar 7 15:28 /dev/sdb

0 brw-r----- 1 root disk 8, 32 Mar 7 15:28 /dev/sdc

0 brw-r----- 1 root disk 8, 48 Mar 7 15:28 /dev/sdd

0 brw-r----- 1 root disk 8, 64 Mar 7 15:28 /dev/sde

0 brw-r----- 1 root disk 8, 80 Mar 7 15:28 /dev/sdf

[root@rac1 ~]# fdisk /dev/sdb

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel. Changes will remain in memory only,

until you decide to write them. After that, of course, the previous

content won't be recoverable.

The number of cylinders for this disk is set to 1305.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

Command (m for help): p

Disk /dev/sdb: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

Command (m for help): m

Command action

a toggle a bootable flag

b edit bsd disklabel

c toggle the dos compatibility flag

d delete a partition

l list known partition types

m print this menu

n add a new partition

o create a new empty DOS partition table

p print the partition table

q quit without saving changes

s create a new empty Sun disklabel

t change a partition's system id

u change display/entry units

v verify the partition table

w write table to disk and exit

x extra functionality (experts only)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-1305, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-1305, default 1305):

Using default value 1305

Command (m for help): p

Disk /dev/sdb: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 1305 10482381 83 Linux

Command (m for help): w

(Use w to write the partition table and exit; q would quit without saving the new partition.)

Repeat for all five disks (/dev/sdb through /dev/sdf).
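The interactive fdisk session above (n, p, 1, accept both defaults, w) can be repeated for all five disks by feeding the same keystrokes to fdisk. This is a sketch assuming /dev/sdb to /dev/sdf are the new, empty shared disks; because it is destructive, it only prints what it would do unless `DRY_RUN=0` is set. `partition_shared_disks` and `DRY_RUN` are hypothetical names.

```shell
# Sketch: create one primary partition spanning each given disk, non-interactively.
# Assumption: the disks are new and empty. DRY_RUN=1 (the default) only prints.
partition_shared_disks() {
    local dev
    for dev in "$@"; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "would partition $dev"
        else
            # Same answers as the session above: n, p, 1, default start, default end, w
            printf 'n\np\n1\n\n\nw\n' | fdisk "$dev"
        fi
    done
}
```

After checking the device names with `ls -l /dev/sd*`, run `DRY_RUN=0 partition_shared_disks /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf` as root on one node only.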

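Install ASMLib (oracleasm). The oracleasm kernel-module package must match the running kernel (uname -r; 2.6.18-164.el5 on a default RHEL 5.4 install):

1. rpm -ivh oracleasm-support-2.1.8-1.el5.x86_64.rpm

2. rpm -ivh oracleasm-2.6.18-164.el5-2.0.5-1.el5.x86_64.rpm

3. rpm -ivh oracleasmlib-2.0.4-1.el5.x86_64.rpm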

Configure ASMLib:

[root@rac1 rac_soft]# /etc/init.d/oracleasm configure -i

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

Initializing the Oracle ASMLib driver: [ OK ]

Scanning the system for Oracle ASMLib disks: [ OK ]

Initialize oracleasm:

[root@rac1 kernel]# /usr/sbin/oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Mounting ASMlib driver filesystem: /dev/oracleasm

[root@rac1 kernel]#

Create the ASM disks:

[root@rac1 ~]# /etc/init.d/oracleasm createdisk DISK1 /dev/sdb1

Marking disk "DISK1" as an ASM disk: [ OK ]

[root@rac1 ~]# /etc/init.d/oracleasm createdisk DISK2 /dev/sdc1

Marking disk "DISK2" as an ASM disk: [ OK ]

[root@rac1 ~]# /etc/init.d/oracleasm createdisk DISK3 /dev/sdd1

Marking disk "DISK3" as an ASM disk: [ OK ]

[root@rac1 ~]# /etc/init.d/oracleasm createdisk DISK4 /dev/sde1

Marking disk "DISK4" as an ASM disk: [ OK ]

[root@rac1 ~]# /etc/init.d/oracleasm createdisk DISK5 /dev/sdf1

Marking disk "DISK5" as an ASM disk: [ OK ]

[root@rac1 ~]#
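The five createdisk commands above follow one pattern, so a loop can produce them. A sketch assuming the partitions are passed in the order that maps to DISK1..DISK5; it prints the commands unless `DRY_RUN=0`. `create_asm_disks` and `DRY_RUN` are hypothetical names.

```shell
# Sketch: label each partition as DISK1, DISK2, ... in argument order.
# DRY_RUN=1 (the default) prints the oracleasm commands instead of running them.
create_asm_disks() {
    local i=1 part cmd
    for part in "$@"; do
        cmd="/etc/init.d/oracleasm createdisk DISK$i $part"
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "$cmd"
        else
            $cmd    # unquoted on purpose: split into command and arguments
        fi
        i=$((i + 1))
    done
}
```

For example, `DRY_RUN=0 create_asm_disks /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1` as root.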

Check the ASM status:

[root@rac1 ~]# oracleasm status

Checking if ASM is loaded: yes

Checking if /dev/oracleasm is mounted: yes

[root@rac1 ~]# oracleasm listdisks

DISK1

DISK2

DISK3

DISK4

DISK5

[root@rac1 ~]#

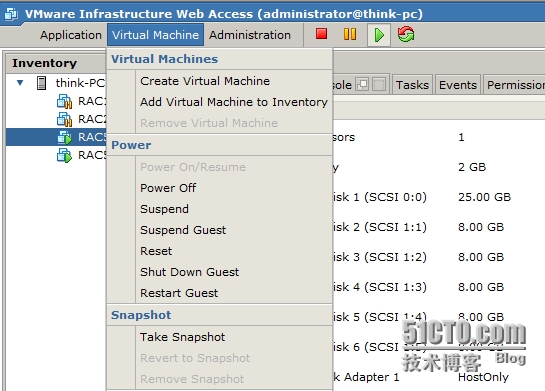
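To confirm that every label is visible on a node (for example on the second node after `oracleasm scandisks`), the listdisks output can be compared with the expected names. A sketch with a hypothetical `expect_asm_disks` helper.

```shell
# Sketch: read `oracleasm listdisks` output on stdin and report any expected label
# that is absent. expect_asm_disks is a hypothetical helper.
expect_asm_disks() {
    local have d rc=0
    have=$(cat)
    for d in "$@"; do
        if ! printf '%s\n' "$have" | grep -qx "$d"; then
            echo "missing ASM disk: $d"
            rc=1
        fi
    done
    return "$rc"
}
```

For example, `oracleasm listdisks | expect_asm_disks DISK1 DISK2 DISK3 DISK4 DISK5` on each node.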

Step IV: Clone the VM

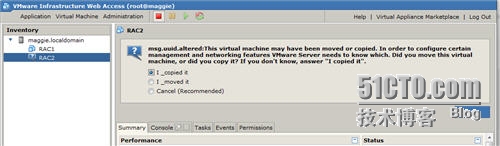

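VMware Server cannot clone a VM directly: copy the VM files manually, then register the copy by adding its .vmx file to the inventory. (On ESXi or Workstation, simply use the Clone command in the menu.) After adding the .vmx file, power on the VM.

1. Add the VM: click Virtual Machines and select "Add Virtual Machine to Inventory"

2. In the VM directory, select the copied .vmx file, power on the VM, and choose "copy it" when prompted

3. Configure the network

Configure eth0 and eth1 (172.20.10.4 and 10.0.0.2 on RAC2, per the network plan), and delete the eth0.bak and eth1.bak files.

4. Change the following variables in the grid and oracle users' .bash_profile:

Grid:

export ORACLE_SID=+ASM1 -> change to +ASM2

export ORACLE_HOSTNAME=rac1.localdomain -> change to rac2.localdomain

Oracle:

export ORACLE_UNQNAME=orcl1 -> change to orcl2

export ORACLE_SID=orcl1 -> change to orcl2

export ORACLE_HOSTNAME=rac1.localdomain -> change to rac2.localdomain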
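The profile edits above can be applied with sed on node 2. A sketch assuming the copied files contain exactly the node 1 values shown earlier (+ASM1, orcl1, rac1.localdomain); `profile_for_node2` is a hypothetical name, and GNU `sed -i` edits the file in place.

```shell
# Sketch: turn a copy of node 1's .bash_profile into node 2's, in place (GNU sed -i).
# Assumption: the file holds the exact values shown earlier; profile_for_node2 is hypothetical.
profile_for_node2() {
    # In a basic regex "+" is literal, so +ASM1 matches as written.
    sed -i -e 's/^export ORACLE_SID=+ASM1$/export ORACLE_SID=+ASM2/' \
           -e 's/=orcl1$/=orcl2/' \
           -e 's/^export ORACLE_HOSTNAME=rac1\.localdomain$/export ORACLE_HOSTNAME=rac2.localdomain/' \
           "$1"
}
```

As root on rac2, for example: `profile_for_node2 /home/grid/.bash_profile` and `profile_for_node2 /home/oracle/.bash_profile`.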

5. Change the host name:

[root@rac2 ~]# cat /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=rac2.localdomain

[root@rac2 ~]#

Part 3: Install Grid Infrastructure

Run the installer as the grid user.

Install Grid Infrastructure

Version: 11.2.0.1.0

./runInstaller

Step 1: Select Installation Option: "Install and Configure Grid Infrastructure for a Cluster" -> "Next"

Step 2: Installation Type: "Advanced Installation" -> "Next"

Step 3: Product Languages: "English" -> "Next"

Step 4: Grid Plug and Play: Cluster Name "rac-cluster", SCAN Name "scan.localdomain", SCAN Port "1521"; clear the "Configure GNS" check box, because no DNS is configured here.

Step 5: Cluster Node Information

1. Click "Add" and enter Hostname "rac2.localdomain" and Virtual IP Name "rac2-vip.localdomain"

2. Click "SSH Connectivity" and enter the OS password; click "Setup" to configure passwordless SSH between the nodes; click "Test", and once the test succeeds click "Next"

Step 6: Network Interface Usage: set the interface types to Public (eth0, 172.20.10.0) and Private (eth1, 10.0.0.0)

Step 7: Storage Option: select "Automatic Storage Management (ASM)" -> "Next"

Step 8: Create ASM Disk Group: enter "DATA" as the Disk Group Name, choose "External" redundancy, and under Add Disks select "All Disks" and check every ASM disk -> "Next"

Step 9: ASM Password: configure this for your environment; here one password is used for everything: select "Use same passwords for these accounts" and enter the password -> "Next"

Step 10: Failure Isolation: keep the default "Do not use IPMI" -> "Next"

Step 11: Operating System Groups: this configures OS authentication for managing ASM; keep the defaults -> "Next"

Step 12: Installation Location: keep the defaults, since they are already set in the grid user's .bash_profile -> "Next"

Step 13: Create Inventory: keep the default -> "Next"

Step 14: Prerequisite Checks: once the checks pass, the installer moves on to Step 15, Summary -> "Finish" to start the installation; then run the root scripts

Note:

Run orainstRoot.sh and then root.sh on each of the two nodes (root.sh takes more than ten minutes). You can follow the root.sh log and progress with

tail -f /u01/app/11.2.0/grid/cfgtoollogs/crsconfig/rootcrs_rac2.log

(the log file name contains the node's host name, e.g. rootcrs_rac1.log on rac1).

Caution:

You must run the root.sh script on the first node and wait for it to finish. You can run root.sh scripts concurrently on all other nodes except for the last node on which you run the script. Like the first node, the root.sh script on the last node must be run separately.
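The ordering rule in the caution can be expressed as a small planner: the first node alone, any middle nodes in parallel, the last node alone. This sketch only prints the order and does not run root.sh; `rootsh_plan` is a hypothetical name.

```shell
# Sketch: print the root.sh run order for the given nodes (first, middle, last).
# rootsh_plan is a hypothetical helper; it does not execute anything.
rootsh_plan() {
    local middle=""
    [ $# -ge 1 ] || return 1
    echo "step 1 (alone): $1"
    shift
    [ $# -ge 1 ] || return 0
    # Collect every node except the last one as the parallel middle group.
    while [ $# -gt 1 ]; do
        middle="${middle:+$middle }$1"
        shift
    done
    if [ -n "$middle" ]; then
        echo "step 2 (in parallel): $middle"
        echo "step 3 (alone): $1"
    else
        echo "step 2 (alone): $1"
    fi
}
```

For this two-node cluster, `rootsh_plan rac1 rac2` simply yields rac1 first, then rac2.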

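After Grid Infrastructure is installed, create the listener:

Run netca.

Enter the listener name LISTENER, under "Select Subnet" choose the public IP subnet (selected by default), then click Next and Finish.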

Part 4: Install Oracle Database

Switch to the oracle user:

1. Install the Oracle Database software

Version 11.2.0.1.0

[oracle@rac1 database]$ ./runInstaller

Step 1: Configure Security Updates: click "Next"

Step 2: Installation Option: select "Install database software only" -> "Next"

Step 3: Grid Options: keep the default "Real Application Clusters database installation" and configure passwordless SSH: click "SSH Connectivity", enter the OS password, click "Setup" to configure passwordless SSH between the nodes, click "Test", and once the test succeeds click "Next"

Step 4: Product Languages: "English" + "Simplified Chinese" -> "Next"

Step 5: Database Edition: select "Enterprise Edition" -> "Next"

Step 6: Installation Location: Oracle Base "/u01/app/oracle", Software Location "/u01/app/oracle/product/11.2.0/db_1" -> "Next"

Step 7: Operating System Groups: set Database Administrator (OSDBA) Group to 'dba' and Database Operator (OSOPER) Group to 'oper' -> "Next"

Step 8: Prerequisite Checks: if the checks pass, the installer goes straight to Step 9; if not, investigate each failed item

Step 9: Summary: click "Finish"

Step 10: Install Product (this takes about half an hour). At the end the "Execute Configuration Scripts" dialog appears; run root.sh on both nodes, RAC1 and RAC2.

Step 11: When "The installation of Oracle Database was successful" appears, click "Close"

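2. Create the database

Use DBCA to create the database.

As the oracle user:

Step 1: Run dbca and select "Oracle Real Application Clusters database" -> "Next"

Step 2: Select "Create a Database" -> "Next"

Step 3: Select "Data Warehouse" -> "Next"

Step 4: Database Identification: Configuration Type "Admin-Managed", Global Database Name "orcl", and select both nodes RAC1 and RAC2 with "Select All" -> "Next"

Note: the alternative Policy-Managed type is worth reading up on.

Step 5: Management Options: enabled by default; you can disable them if you prefer -> "Next"

Step 6: Set the passwords for the Oracle system accounts as appropriate; here one password is used for all of them -> "Next"

Step 7: Storage Type: select "ASM" -> "Next". In the ASM Credentials dialog that appears, enter the ASMSNMP password ("Specify ASMSNMP password specific to ASM") -> "OK"

Step 8: Recovery Configuration: enable "Specify Flash Recovery Area" and "Enable Archiving" -> "Next"

Step 9: Database Content: just click "Next"

Step 10: Initialization Parameters: under Character Sets, choose "Choose from the list of character sets" -> "ZHS16GBK - GBK 16-bit Simplified Chinese" (so the database supports Simplified Chinese) -> "Next"

Step 11: Database Storage: configure as needed -> "Next"

Step 12: Creation Options: "Create Database" -> "Finish" (you can also select "Generate Database Creation Scripts" and keep the scripts as a reference for creating databases silently later)

The database has now been created.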

Part 5: Verify the installation

1. Log in to either node and check the status of both RAC instances:

SQL> select instance_name, instance_number, status from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER STATUS

---------------- --------------- ------------

orcl1 1 OPEN

orcl2 2 OPEN

SQL>
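To script this check, the query output can be parsed instead of read by eye. A sketch assuming rows of the form NAME NUMBER STATUS as above; `all_instances_open` is a hypothetical helper, and the commented sqlplus call shows one possible way to feed it.

```shell
# Sketch: read gv$instance rows (NAME NUMBER STATUS) on stdin; fail if none are found
# or if any instance is not OPEN. Header and dash lines are skipped.
# Example (as oracle, on either node):
#   printf 'set heading off feedback off\nselect instance_name, instance_number, status from gv$instance;\n' \
#     | sqlplus -s "/ as sysdba" | all_instances_open
all_instances_open() {
    awk 'NF == 3 && $2 ~ /^[0-9]+$/ {
             n++
             if ($3 != "OPEN") { print $1 " is " $3; bad = 1 }
         }
         END { exit (n == 0 || bad) }'
}
```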

2. Check the listener

On rac1:

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ lsnrctl status

On rac2:

[root@rac2 ~]# su - grid

[grid@rac2 ~]$ lsnrctl status

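As root on either node, check the network interfaces.

eth0 carries additional virtual interfaces, for example:

eth0:1

eth0:2

one for the VIP and one for the SCAN IP.

3. Shut down one node and check the database status

On rac1, run shutdown -h now to power it off.

From a client, log in with PL/SQL Developer and check the cluster status: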

SQL> select instance_name,instance_number,status from gv$instance;

INSTANCE_NAME INSTANCE_NUMBER STATUS

---------------- --------------- ------------

orcl2 2 OPEN

SQL>

Check rac2's network interfaces:

eth0 Link encap:Ethernet HWaddr 00:0C:29:CE:8C:7C

inet addr:172.20.10.4 Bcast:172.20.10.255 Mask:255.255.255.0

inet6 addr:fe80::20c:29ff:fece:8c7c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:5344 errors:0 dropped:0 overruns:0 frame:0

TX packets:3779 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:769323 (751.2 KiB) TX bytes:670356 (654.6 KiB)

Base address:0x2400 Memory:d8920000-d8940000

eth0:1 Link encap:Ethernet HWaddr 00:0C:29:CE:8C:7C

inet addr:172.20.10.6 Bcast:172.20.10.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Base address:0x2400 Memory:d8920000-d8940000

eth0:2 Link encap:Ethernet HWaddr 00:0C:29:CE:8C:7C

inet addr:172.20.10.7 Bcast:172.20.10.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Base address:0x2400 Memory:d8920000-d8940000

eth0:3 Link encap:Ethernet HWaddr 00:0C:29:CE:8C:7C

inet addr:172.20.10.5 Bcast:172.20.10.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Base address:0x2400 Memory:d8920000-d8940000

eth1 Link encap:Ethernet HWaddr 00:0C:29:CE:8C:86

inet addr:10.0.0.2 Bcast:10.255.255.255 Mask:255.0.0.0

inet6 addr:fe80::20c:29ff:fece:8c86/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:97053 errors:0 dropped:0 overruns:0 frame:0

TX packets:119807 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:41771418 (39.8 MiB) TX bytes:76194041 (72.6 MiB)

Base address:0x2440 Memory:d8940000-d8960000

This shows that rac1's VIP (172.20.10.5) and the SCAN IP (172.20.10.7) have failed over to rac2.
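The failover can also be confirmed from a script by listing which addresses sit on the eth0:N alias interfaces. A sketch for the RHEL 5 `ifconfig` output format shown above; `vip_addresses` is a hypothetical helper.

```shell
# Sketch: from RHEL 5-style ifconfig output on stdin, print "alias address" for every
# alias interface such as eth0:1. vip_addresses is a hypothetical helper.
vip_addresses() {
    awk '/^[^ \t]+:[0-9]+[ \t]/      { alias = $1; next }   # header line of an alias
         alias != "" && /inet addr:/ { sub(/.*inet addr:/, ""); print alias, $1; alias = "" }
         /^[^ \t]/                   { alias = "" }'        # any other interface header
}
```

On rac2 with rac1 down, `/sbin/ifconfig | vip_addresses` should list 172.20.10.5, 172.20.10.6 and 172.20.10.7, matching the output above.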

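Part 6: Supplementary notes

1. Configure a local yum repository

Local repository for Red Hat 5.4 (a .repo file under /etc/yum.repos.d/, with the installation DVD mounted at /mnt/cdrom):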

[rhel-debuginfo]

name=Red Hat Enterprise Linux $releasever -$basearch - Debug

baseurl=file:///mnt/cdrom/Server

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
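The repository file above can be written in one step. A sketch assuming the DVD is mounted at /mnt/cdrom (for example with `mount /dev/cdrom /mnt/cdrom`) and using rhel-debuginfo.repo only as a default file name; `write_local_repo` is a hypothetical name. The heredoc delimiter is quoted so that `$releasever` and `$basearch` are written literally for yum to expand.

```shell
# Sketch: write the local DVD repository definition shown above.
# Assumptions: DVD mounted at /mnt/cdrom; target file name is a default choice.
write_local_repo() {
    local file="${1:-/etc/yum.repos.d/rhel-debuginfo.repo}"
    # Quoted 'EOF' keeps $releasever and $basearch literal for yum.
    cat > "$file" <<'EOF'
[rhel-debuginfo]
name=Red Hat Enterprise Linux $releasever - $basearch - Debug
baseurl=file:///mnt/cdrom/Server
enabled=1
gpgcheck=1
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
EOF
}
```

Afterwards, `yum clean all` followed by `yum list` should show packages from the DVD.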

2. ASM operations

Check the ASM status:

[root@rac1 ~]# oracleasm status

Checking if ASM is loaded: yes

Checking if /dev/oracleasm is mounted: yes

[root@rac1 ~]# oracleasm listdisks

Related views:

V$ASM_DISKGROUP, V$ASM_DISK, V$ASM_USER, etc.

As the grid user, connect to the ASM instance with SQL*Plus:

sqlplus / as sysasm

Show each ASM disk's group number, disk number, status, name, and mount date:

select GROUP_NUMBER, DISK_NUMBER, MOUNT_STATUS, HEADER_STATUS, MODE_STATUS, NAME, MOUNT_DATE from v$asm_disk;

GROUP_NUMBER DISK_NUMBER MOUNT_S HEADER_STATU MODE_ST NAME MOUNT_DAT

------------ ----------- ------- ------------ ------- ----- ---------

1 0 CACHED MEMBER ONLINE DISK1 21-APR-14

1 1 CACHED MEMBER ONLINE DISK2 21-APR-14

1 2 CACHED MEMBER ONLINE DISK3 21-APR-14

1 3 CACHED MEMBER ONLINE DISK4 21-APR-14

1 4 CACHED MEMBER ONLINE DISK5 21-APR-14

Check each disk group's redundancy type, state, and usable file space:

SQL> select name, state, type, USABLE_FILE_MB from V$ASM_DISKGROUP;

NAME STATE TYPE USABLE_FILE_MB

------------------------------ ----------- ------ --------------

DATA MOUNTED EXTERN 38701

Once a disk group's redundancy level has been set, it cannot be changed.

For adding, dropping, or altering disk groups, see the Oracle documentation.

3. Grid Infrastructure operations

Start the cluster components:

$ srvctl start nodeapps -n rac1

$ srvctl start asm -n rac1

$ srvctl start instance -d orcl -i orcl1

$ emctl start dbconsole

Check the ASM status:

[grid@rac1 ~]$ srvctl status asm

Start ASM:

[grid@rac2 ~]$ srvctl start asm

Check the listener status:

[grid@rac1 ~]$ lsnrctl status

Check the status of the cluster resources:

crs_stat -t

crsctl stat res -t

Note:

ora.gsd is OFFLINE by default if there is no 9i database in the cluster.

ora.oc4j is OFFLINE in 11.2.0.1 because Database Workload Management (DBWLM) is unavailable. These can be ignored in 11gR2 RAC.

Check the processes:

As the oracle user:

ps aux | grep ora_

Check the cluster status:

As the grid user:

crsctl check crs

crs_stat -v

Check the server status of a single node:

[grid@rac1 ~]$ srvctl status server -n rac1

Check the cluster listener status:

[grid@rac2 ~]$ srvctl status listener

Listener LISTENER is enabled

Listener LISTENER is running on node(s): rac1,rac2

Remove a database from Grid Infrastructure:

srvctl remove database -d <db_unique_name> [-f] [-y]

srvctl remove database -d orcl -f

Check the status of all cluster nodes; this single command replaces checking each node separately:

crsctl check cluster -all
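The read-only status commands above can be bundled into one report for the grid user. A sketch; `cluster_report` is a hypothetical name, and any command that is not on PATH (for example when run as the wrong user) is reported and skipped instead of aborting the report.

```shell
# Sketch: run the read-only cluster status commands above, one section per command.
# cluster_report is a hypothetical helper; missing commands are reported, not fatal.
cluster_report() {
    local cmd
    for cmd in "crsctl check cluster -all" "srvctl status asm" \
               "srvctl status listener" "crsctl stat res -t"; do
        echo "== $cmd"
        if command -v "${cmd%% *}" >/dev/null 2>&1; then
            $cmd    # unquoted on purpose: split into command and arguments
        else
            echo "${cmd%% *}: not found in PATH"
        fi
    done
}
```

Run it as the grid user, whose PATH includes $ORACLE_HOME/bin from the .bash_profile above.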