heartbeat + pacemaker: Automatic Failover for PostgreSQL Streaming Replication (Part 1)

heartbeat + pacemaker + postgres_streaming_replication

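Overview:

This document describes how to implement automatic failover of PostgreSQL streaming replication with heartbeat + pacemaker. It includes a summary of heartbeat/pacemaker concepts, the complete environment setup, and the problems encountered along the way.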

1. Introduction

Heartbeat

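Starting with version 3, the original heartbeat project was split into several sub-projects (independent components): heartbeat, cluster-glue and resource-agents.

Main functions of each component:

heartbeat: the cluster messaging layer; it maintains information about every node in the cluster and handles communication between the nodes.

cluster-glue: contains the LRM (Local Resource Manager) and STONITH; it is a middle layer that connects heartbeat to the CRM (Cluster Resource Manager).

resource-agents: the resource scripts, which the LRM calls to start, stop and monitor each resource.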

Heartbeat internal component diagram:

Pacemaker

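Pacemaker is the Cluster Resource Manager (CRM). It manages the whole HA cluster, and clients manage and monitor the cluster through pacemaker.

Common cluster management tools:

(1) Command line

crm shell/pcs

(2) Graphical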

pygui/hawk/lcmc/pcs

Hawk: http://clusterlabs.org/wiki/Hawk

Lcmc: http://www.drbd.org/mc/lcmc/

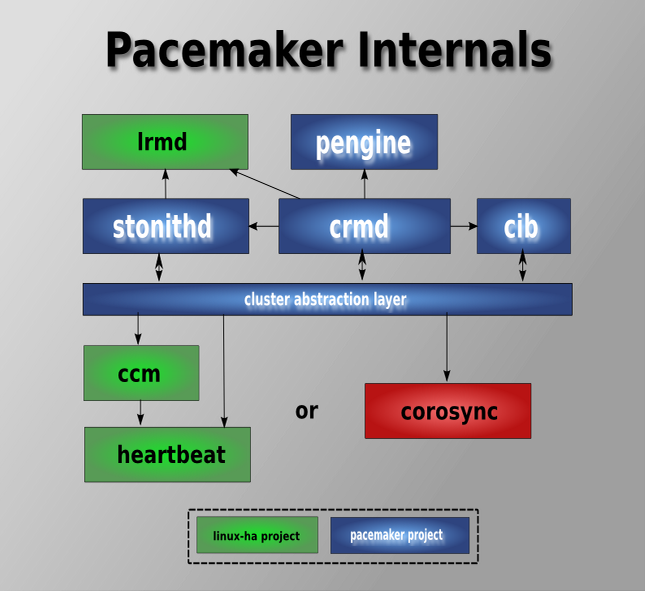

Pacemaker internal component and module diagram:

2. Environment

2.1 OS

    # cat /etc/issue
    CentOS release 6.4 (Final)
    Kernel \r on an \m

    # uname -a
    Linux node1 2.6.32-358.el6.x86_64 #1 SMP Fri Feb 22 00:31:26 UTC 2013 x86_64 x86_64 x86_64 GNU/Linux

2.2 IP

node1:

eth0 192.168.100.161/24 GW 192.168.100.1 --- real address

eth1 2.2.2.1/24 --- heartbeat address

eth2 192.168.2.1/24 --- streaming replication address

node2:

eth0 192.168.100.162/24 GW 192.168.100.1 --- real address

eth1 2.2.2.2/24 --- heartbeat address

eth2 192.168.2.2/24 --- streaming replication address

Virtual addresses:

eth0:0 192.168.100.163/24 ---vip-master

eth0:0 192.168.100.164/24 ---vip-slave

eth2:0 192.168.2.3/24 ---vip-rep

2.3 Software versions

    # rpm -qa | grep heartbeat
    heartbeat-devel-3.0.3-2.3.el5
    heartbeat-debuginfo-3.0.3-2.3.el5
    heartbeat-3.0.3-2.3.el5
    heartbeat-libs-3.0.3-2.3.el5
    heartbeat-devel-3.0.3-2.3.el5
    heartbeat-3.0.3-2.3.el5
    heartbeat-debuginfo-3.0.3-2.3.el5
    heartbeat-libs-3.0.3-2.3.el5

    # rpm -qa | grep pacemaker
    pacemaker-libs-1.0.12-1.el5.centos
    pacemaker-1.0.12-1.el5.centos
    pacemaker-debuginfo-1.0.12-1.el5.centos
    pacemaker-debuginfo-1.0.12-1.el5.centos
    pacemaker-1.0.12-1.el5.centos
    pacemaker-libs-1.0.12-1.el5.centos
    pacemaker-libs-devel-1.0.12-1.el5.centos
    pacemaker-libs-devel-1.0.12-1.el5.centos

    # rpm -qa | grep resource-agent
    resource-agents-1.0.4-1.1.el5

    # rpm -qa | grep cluster-glue
    cluster-glue-libs-1.0.6-1.6.el5
    cluster-glue-libs-1.0.6-1.6.el5
    cluster-glue-1.0.6-1.6.el5
    cluster-glue-libs-devel-1.0.6-1.6.el5

PostgreSQL version: 9.1.4

3. Installation

3.1 Set up the YUM repository

    # wget -O /etc/yum.repos.d/pacemaker.repo http://clusterlabs.org/rpm/epel-5/clusterlabs.repo

3.2 Install heartbeat/pacemaker

Install libesmtp (a pacemaker dependency, taken here from an EPEL mirror):

    # wget ftp://ftp.univie.ac.at/systems/linux/fedora/epel/5/x86_64/libesmtp-1.0.4-5.el5.x86_64.rpm
    # wget ftp://ftp.univie.ac.at/systems/linux/fedora/epel/5/i386/libesmtp-1.0.4-5.el5.i386.rpm
    # rpm -ivh libesmtp-1.0.4-5.el5.x86_64.rpm
    # rpm -ivh libesmtp-1.0.4-5.el5.i386.rpm

Install heartbeat and pacemaker:

    # yum install heartbeat* pacemaker*

List the available OCF resource agents (pgsql is among them):

    # crm ra list ocf
    AoEtarget AudibleAlarm CTDB ClusterMon Delay Dummy EvmsSCC Evmsd
    Filesystem HealthCPU HealthSMART ICP IPaddr IPaddr2 IPsrcaddr IPv6addr
    LVM LinuxSCSI MailTo ManageRAID ManageVE Pure-FTPd Raid1 Route
    SAPDatabase SAPInstance SendArp ServeRAID SphinxSearchDaemon Squid Stateful SysInfo
    SystemHealth VIPArip VirtualDomain WAS WAS6 WinPopup Xen Xinetd
    anything apache conntrackd controld db2 drbd eDir88 exportfs
    fio iSCSILogicalUnit iSCSITarget ids iscsi jboss ldirectord mysql
    mysql-proxy nfsserver nginx o2cb oracle oralsnr pgsql ping
    pingd portblock postfix proftpd rsyncd scsi2reservation sfex syslog-ng
    tomcat vmware

Disable heartbeat at boot:

    # chkconfig heartbeat off

3.3 Install PostgreSQL

The installation directory is /opt/pgsql.

{installation steps omitted}

Configure environment variables for the postgres user:

    [postgres@node1 ~]$ cat .bash_profile
    # .bash_profile

    # Get the aliases and functions
    if [ -f ~/.bashrc ]; then
            . ~/.bashrc
    fi

    # User specific environment and startup programs
    export PATH=/opt/pgsql/bin:$PATH:$HOME/bin
    export PGDATA=/opt/pgsql/data
    export PGUSER=postgres
    export PGPORT=5432
    export LD_LIBRARY_PATH=/opt/pgsql/lib:$LD_LIBRARY_PATH

4. Configuration

4.1 Configure /etc/hosts

    # vim /etc/hosts
    192.168.100.161 node1
    192.168.100.162 node2
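Both nodes need these two entries. A small idempotent helper avoids duplicate lines if the setup is repeated. This is a sketch: `add_host_entry` is a hypothetical name, and the file is a parameter so it can be tried on a scratch copy before editing /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts-format file unless NAME is
# already mapped on a non-comment line, so repeated runs add no duplicates.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On each node, `add_host_entry /etc/hosts 192.168.100.161 node1` and `add_host_entry /etc/hosts 192.168.100.162 node2` would produce the two lines above.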

4.2 Configure heartbeat

Create the configuration file:

    # cp /usr/share/doc/heartbeat-3.0.3/ha.cf /etc/ha.d/

Edit the configuration:

    # vim /etc/ha.d/ha.cf
    logfile /var/log/ha-log
    logfacility local0
    keepalive 2
    deadtime 30
    warntime 10
    initdead 120
    # on node2, change this to 2.2.2.1
    ucast eth1 2.2.2.2
    auto_failback off
    node node1
    node node2
    # from heartbeat 3.0.4 on, use "crm respawn" instead
    pacemaker respawn
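The only line that differs between the two nodes is the `ucast` peer address. A minimal sketch that prints the file for a given node name, assuming the node names and eth1 addresses from section 2.2; `gen_ha_cf` is a hypothetical helper, not part of heartbeat, and it avoids hand-editing the copy that is later synced to node2.

```shell
#!/bin/sh
# Hypothetical helper: print the ha.cf used in this setup for NODE, with the
# peer's eth1 heartbeat address filled in (node1 -> 2.2.2.2, node2 -> 2.2.2.1).
gen_ha_cf() {
  local node=$1 peer_ip
  case $node in
    node1) peer_ip=2.2.2.2 ;;
    node2) peer_ip=2.2.2.1 ;;
    *) echo "unknown node: $node" >&2; return 1 ;;
  esac
  cat <<EOF
logfile /var/log/ha-log
logfacility local0
keepalive 2
deadtime 30
warntime 10
initdead 120
ucast eth1 $peer_ip
auto_failback off
node node1
node node2
pacemaker respawn
EOF
}
```

For example, `gen_ha_cf "$(uname -n)" > /etc/ha.d/ha.cf` on each node, provided `uname -n` returns node1 or node2.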

4.3 Generate the authentication key

    # (echo -ne "auth 1\n1 sha1 "; dd if=/dev/urandom bs=512 count=1 | openssl sha1) > /etc/ha.d/authkeys
    1+0 records in
    1+0 records out
    512 bytes (512 B) copied, 0.00032444 s, 1.6 MB/s
    # chmod 0600 /etc/ha.d/authkeys
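Depending on the OpenSSL version, `openssl sha1` reading from stdin may print the digest with a `(stdin)= ` prefix, which then ends up in authkeys. A variant, offered as an assumption rather than the author's command, uses coreutils `sha1sum` and keeps only the hex digest; `gen_authkeys` is a hypothetical helper.

```shell
#!/bin/sh
# Variant of the authkeys command above (not the author's exact command):
# sha1sum prints "<hex>  -" and awk keeps only the 40-character digest.
gen_authkeys() {
  local out=$1 key
  key=$(head -c 512 /dev/urandom | sha1sum | awk '{print $1}')
  # umask 077 creates a new file as 0600; chmod also covers an existing file.
  ( umask 077; printf 'auth 1\n1 sha1 %s\n' "$key" > "$out" )
  chmod 0600 "$out"
}
```

Usage: `gen_authkeys /etc/ha.d/authkeys`, then copy the file to node2 as in the next step.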

4.4 Copy the configuration to node2

    [root@node1 ~]# cd /etc/ha.d/
    [root@node1 ha.d]# scp authkeys ha.cf node2:/etc/ha.d/

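4.5 Download and replace the resource agent

The pgsql resource agent shipped with resource-agents 1.0.4 is too old: it does not support some of the parameters used in pgsql.crm (the replication-related ones such as rep_mode, node_list and master_ip), so download the current version and replace it.

Download from: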

https://github.com/ClusterLabs/resource-agents

    # unzip resource-agents-master.zip
    # cd resource-agents-master/heartbeat/
    # cp pgsql /usr/lib/ocf/resource.d/heartbeat/
    # cp ocf-shellfuncs.in /usr/lib/ocf/lib/heartbeat/ocf-shellfuncs
    # cp ocf-rarun /usr/lib/ocf/lib/heartbeat/ocf-rarun
    # chmod 755 /usr/lib/ocf/resource.d/heartbeat/pgsql
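Overwriting the packaged pgsql RA makes rolling back harder, so it may be worth keeping the originals. The sketch below performs the same three copies as the commands above and keeps a `.orig` copy of any file it replaces; `install_pgsql_ra` is a hypothetical helper, and both the source directory and the OCF root are parameters (the default /usr/lib/ocf is the one used in this setup).

```shell
#!/bin/sh
# Hypothetical helper: copy pgsql, ocf-shellfuncs.in and ocf-rarun from an
# unpacked resource-agents-master/heartbeat directory into an OCF root,
# keeping a one-time .orig backup of every file that gets overwritten.
install_pgsql_ra() {
  local src=$1 ocf_root=${2:-/usr/lib/ocf} p from to
  # Each entry is "<file in src>:<destination relative to the OCF root>".
  for p in pgsql:resource.d/heartbeat/pgsql \
           ocf-shellfuncs.in:lib/heartbeat/ocf-shellfuncs \
           ocf-rarun:lib/heartbeat/ocf-rarun; do
    from=$src/${p%%:*}
    to=$ocf_root/${p#*:}
    [ -f "$from" ] || { echo "missing $from" >&2; return 1; }
    mkdir -p "$(dirname "$to")"
    # Back up only once, so a second run keeps the packaged original.
    if [ -f "$to" ] && [ ! -f "$to.orig" ]; then
      cp -p "$to" "$to.orig"
    fi
    cp "$from" "$to"
  done
  chmod 755 "$ocf_root/resource.d/heartbeat/pgsql"
}
```

Usage: `cd resource-agents-master/heartbeat && install_pgsql_ra "$PWD"`. The copied ocf-shellfuncs still needs the OCF_ROOT edit.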

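Edit ocf-shellfuncs. Because it was copied from the template ocf-shellfuncs.in, the @OCF_ROOT_DIR@ placeholder was never substituted by a build, so comment that line out and set OCF_ROOT explicitly: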

    if [ -z "$OCF_ROOT" ]; then
    #    : ${OCF_ROOT=@OCF_ROOT_DIR@}
        : ${OCF_ROOT=/usr/lib/ocf}
    fi
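The same edit can be made non-interactively, which helps when repeating it on node2. A sketch using GNU sed (`sed -i` and `\n` in the replacement are GNU extensions); `patch_ocf_root` is a hypothetical name. It comments out the placeholder line and adds the fixed OCF_ROOT right after it.

```shell
#!/bin/sh
# Hypothetical helper (GNU sed): comment out the unexpanded
#   : ${OCF_ROOT=@OCF_ROOT_DIR@}
# line in ocf-shellfuncs and add ": ${OCF_ROOT=<root>}" right after it.
# The commented line no longer matches, so a second run changes nothing.
patch_ocf_root() {
  local file=$1 root=${2:-/usr/lib/ocf}
  sed -i "s|^\([[:space:]]*\): \${OCF_ROOT=@OCF_ROOT_DIR@}|\1# : \${OCF_ROOT=@OCF_ROOT_DIR@}\n\1: \${OCF_ROOT=$root}|" "$file"
}
```

Usage: `patch_ocf_root /usr/lib/ocf/lib/heartbeat/ocf-shellfuncs`.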

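Features of the pgsql resource agent:

● Master failover

When the master goes down, the RA detects the failure, marks the master as stopped, and then promotes the slave to be the new master.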

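● Switching between synchronous and asynchronous replication

With synchronous replication, if the slave goes down or the LAN has a problem, transactions that contain writes hang (their commits wait for an acknowledgement from the standby that never arrives), which effectively stops the service. To prevent this, the RA dynamically switches from synchronous to asynchronous replication.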

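● Automatic detection of the newest data at initial startup

When Pacemaker is started on two or more nodes at the same time, the RA compares the most recent replay location on each node to find the node with the newest data, and that node becomes the master. If Pacemaker is started on only one node, or that node's Pacemaker is the first one started, that node also becomes the master. The RA decides based on the state the data was in before the nodes were stopped.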
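The "newest data" check compares WAL positions. In PostgreSQL 9.1, functions such as pg_last_xlog_replay_location() return a position as two hexadecimal numbers "X/Y", which cannot be compared as plain strings. The sketch below is an illustration only, not the pgsql RA's code: the hypothetical `xlog_newer` compares two such positions numerically, and the equally hypothetical `xlog_to_baseline` formats one as the 16-digit hex string that appears in the pgsql-master-baseline attribute (0000000003000078) in the status output later in this article.

```shell
#!/bin/sh
# Illustration only (not the pgsql RA's code). A 9.1 WAL location "X/Y" is two
# hex numbers: X is the log id, Y the byte offset within it.
# xlog_newer A B: succeed if location A is strictly newer than location B.
xlog_newer() {
  local a_hi=$((0x${1%/*})) a_lo=$((0x${1#*/}))
  local b_hi=$((0x${2%/*})) b_lo=$((0x${2#*/}))
  [ "$a_hi" -gt "$b_hi" ] || { [ "$a_hi" -eq "$b_hi" ] && [ "$a_lo" -gt "$b_lo" ]; }
}

# xlog_to_baseline X/Y: print the position as 16 zero-padded upper-case hex
# digits, the format seen in the pgsql-master-baseline attribute.
xlog_to_baseline() {
  printf '%08X%08X\n' "$((0x${1%/*}))" "$((0x${1#*/}))"
}
```

For example, `xlog_newer 0/3000078 0/2FFFFFF && echo "first location is newer"`.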

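● Read load balancing

Because slave nodes can serve read-only transactions, reads can be load-balanced by assigning an additional virtual IP to the slave (vip-slave in this setup).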

4.6 Start heartbeat

Start:

    [root@node1 ~]# service heartbeat start
    [root@node2 ~]# service heartbeat start

Check the status:

    [root@node1 ~]# crm status
    ============
    Last updated: Fri Jan 24 08:02:54 2014
    Stack: Heartbeat
    Current DC: node2 (43a4f083-c5d3-4c66-a387-b05d79b5dd89) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    0 Resources configured.
    ============

    Online: [ node1 node2 ]

{heartbeat started successfully}
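When scripting checks like this one, the "Online:" line can be tested directly. A minimal sketch, assuming the crm_mon 1.0.x text layout shown above; `node_online` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `crm_mon -1` (or `crm status`) output on stdin and
# succeed if NODE is listed on the "Online: [ ... ]" line.
node_online() {
  awk -v n="$1" '
    /^Online: \[/ {
      gsub(/^Online: \[|\]$/, "")      # leave only the node names
      for (i = 1; i <= NF; i++) if ($i == n) found = 1
    }
    END { exit !found }'
}
```

Usage: `crm_mon -1 | node_online node2 && echo "node2 is online"`.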

Test:

Disable STONITH and create a virtual IP resource named vip:

    [root@node1 ~]# crm configure property stonith-enabled="false"
    [root@node1 ~]# crm configure
    crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 \
    >     params \
    >         ip="192.168.100.90" \
    >         nic="eth0" \
    >         cidr_netmask="24" \
    >     op start timeout="60s" interval="0s" on-fail="stop" \
    >     op monitor timeout="60s" interval="10s" on-fail="restart" \
    >     op stop timeout="60s" interval="0s" on-fail="block"
    crm(live)configure# commit
    crm(live)configure# quit
    bye

    [root@node2 heartbeat]# crm_mon -1
    ============
    Last updated: Fri Jan 24 08:23:09 2014
    Stack: Heartbeat
    Current DC: node2 (43a4f083-c5d3-4c66-a387-b05d79b5dd89) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    1 Resources configured.
    ============

    Online: [ node1 node2 ]

    vip (ocf::heartbeat:IPaddr2): Started node1

{the vip resource is running on node1}

    [root@node1 ~]# ping 192.168.100.90
    PING 192.168.100.90 (192.168.100.90) 56(84) bytes of data.
    64 bytes from 192.168.100.90: icmp_seq=1 ttl=64 time=0.060 ms
    64 bytes from 192.168.100.90: icmp_seq=2 ttl=64 time=0.111 ms
    64 bytes from 192.168.100.90: icmp_seq=3 ttl=64 time=0.123 ms

Simulate a failure of node1:

    [root@node1 ~]# service heartbeat stop
    [root@node2 heartbeat]# crm_mon -1
    ============
    Last updated: Fri Jan 24 08:22:22 2014
    Stack: Heartbeat
    Current DC: node2 (43a4f083-c5d3-4c66-a387-b05d79b5dd89) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    1 Resources configured.
    ============

    Online: [ node2 ]
    OFFLINE: [ node1 ]

    vip (ocf::heartbeat:IPaddr2): Started node2

{node2 took over the vip resource}
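Instead of re-running crm_mon by hand during a failover test, the move can be polled. This sketch assumes the one-line resource format shown above (`vip (ocf::heartbeat:IPaddr2): Started node2`); `wait_for_resource` is a hypothetical helper, and the timeout is in seconds.

```shell
#!/bin/sh
# Hypothetical helper: poll `crm_mon -1` once per second until resource RSC is
# reported as "Started NODE"; give up after TIMEOUT seconds (default 60).
wait_for_resource() {
  local rsc=$1 node=$2 timeout=${3:-60} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    if crm_mon -1 2>/dev/null | awk -v r="$rsc" -v n="$node" '
         $1 == r && /\(ocf::/ && $(NF-1) == "Started" && $NF == n { found = 1 }
         END { exit !found }'; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

Usage: `wait_for_resource vip node2 60 || echo "vip did not move to node2 within 60s"`.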

Bring node1 back:

    [root@node1 ~]# service heartbeat start
    [root@node2 heartbeat]# crm_mon -1
    ============
    Last updated: Fri Jan 24 08:23:09 2014
    Stack: Heartbeat
    Current DC: node2 (43a4f083-c5d3-4c66-a387-b05d79b5dd89) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    1 Resources configured.
    ============

    Online: [ node1 node2 ]

    vip (ocf::heartbeat:IPaddr2): Started node1

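{node1 took the vip resource back; the auto_failback setting in ha.cf does not govern pacemaker-managed resources, and no resource-stickiness has been configured at this point}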

Delete the resource:

    [root@node1 ~]# crm_resource -D -r vip -t primitive
    [root@node1 ~]# crm_resource -L
    NO resources configured

4.7 Configure streaming replication

Configure postgresql.conf and pg_hba.conf on node1 and node2:

postgresql.conf:

    listen_addresses = '*'
    port = 5432
    wal_level = hot_standby
    archive_mode = on
    archive_command = 'test ! -f /opt/archivelog/%f && cp %p /opt/archivelog/%f'
    max_wal_senders = 4
    wal_keep_segments = 50
    hot_standby = on

pg_hba.conf:

    host replication postgres 192.168.2.0/24 trust
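Two details of this archive_command are easy to miss: the directory /opt/archivelog must already exist on both nodes and be writable by the postgres user, and PostgreSQL treats a non-zero exit status as "not archived yet" and retries later. The function below mimics the command's semantics on ordinary files, for illustration only (`archive_wal` and its third argument are hypothetical): %p is the WAL file's path relative to the data directory, %f its bare file name, and the `test ! -f` guard refuses to overwrite a segment that was already archived.

```shell
#!/bin/sh
# Model of: archive_command = 'test ! -f /opt/archivelog/%f && cp %p /opt/archivelog/%f'
# $1 plays the role of %p, $2 of %f; $3 (hypothetical) overrides the archive dir.
# Exit status 0 = archived; non-zero = PostgreSQL keeps the segment and retries.
archive_wal() {
  local p=$1 f=$2 archive_dir=${3:-/opt/archivelog}
  test ! -f "$archive_dir/$f" && cp "$p" "$archive_dir/$f"
}
```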

4.8 Configure pacemaker

{pacemaker can be configured in several ways, e.g. crmsh, hb_gui or pcs; this walkthrough uses crmsh}

Start heartbeat on node1 and stop it on node2.

Write the crm configuration script:

    [root@node1 ~]# cat pgsql.crm
    property \
        no-quorum-policy="ignore" \
        stonith-enabled="false" \
        crmd-transition-delay="0s"

    rsc_defaults \
        resource-stickiness="INFINITY" \
        migration-threshold="1"

    ms msPostgresql pgsql \
        meta \
            master-max="1" \
            master-node-max="1" \
            clone-max="2" \
            clone-node-max="1" \
            notify="true"

    clone clnPingCheck pingCheck

    group master-group \
        vip-master \
        vip-rep \
        meta \
            ordered="false"

    primitive vip-master ocf:heartbeat:IPaddr2 \
        params \
            ip="192.168.100.163" \
            nic="eth0" \
            cidr_netmask="24" \
        op start timeout="60s" interval="0s" on-fail="stop" \
        op monitor timeout="60s" interval="10s" on-fail="restart" \
        op stop timeout="60s" interval="0s" on-fail="block"

    primitive vip-rep ocf:heartbeat:IPaddr2 \
        params \
            ip="192.168.2.3" \
            nic="eth2" \
            cidr_netmask="24" \
        meta \
            migration-threshold="0" \
        op start timeout="60s" interval="0s" on-fail="restart" \
        op monitor timeout="60s" interval="10s" on-fail="restart" \
        op stop timeout="60s" interval="0s" on-fail="block"

    primitive vip-slave ocf:heartbeat:IPaddr2 \
        params \
            ip="192.168.100.164" \
            nic="eth0" \
            cidr_netmask="24" \
        meta \
            resource-stickiness="1" \
        op start timeout="60s" interval="0s" on-fail="restart" \
        op monitor timeout="60s" interval="10s" on-fail="restart" \
        op stop timeout="60s" interval="0s" on-fail="block"

    primitive pgsql ocf:heartbeat:pgsql \
        params \
            pgctl="/opt/pgsql/bin/pg_ctl" \
            psql="/opt/pgsql/bin/psql" \
            pgdata="/opt/pgsql/data/" \
            start_opt="-p 5432" \
            rep_mode="sync" \
            node_list="node1 node2" \
            restore_command="cp /opt/archivelog/%f %p" \
            primary_conninfo_opt="keepalives_idle=60 keepalives_interval=5 keepalives_count=5" \
            master_ip="192.168.2.3" \
            stop_escalate="0" \
        op start timeout="60s" interval="0s" on-fail="restart" \
        op monitor timeout="60s" interval="7s" on-fail="restart" \
        op monitor timeout="60s" interval="2s" on-fail="restart" role="Master" \
        op promote timeout="60s" interval="0s" on-fail="restart" \
        op demote timeout="60s" interval="0s" on-fail="stop" \
        op stop timeout="60s" interval="0s" on-fail="block" \
        op notify timeout="60s" interval="0s"

    primitive pingCheck ocf:pacemaker:pingd \
        params \
            name="default_ping_set" \
            host_list="192.168.100.1" \
            multiplier="100" \
        op start timeout="60s" interval="0s" on-fail="restart" \
        op monitor timeout="60s" interval="10s" on-fail="restart" \
        op stop timeout="60s" interval="0s" on-fail="ignore"

    location rsc_location-1 vip-slave \
        rule 200: pgsql-status eq "HS:sync" \
        rule 100: pgsql-status eq "PRI" \
        rule -inf: not_defined pgsql-status \
        rule -inf: pgsql-status ne "HS:sync" and pgsql-status ne "PRI"

    location rsc_location-2 msPostgresql \
        rule -inf: not_defined default_ping_set or default_ping_set lt 100

    colocation rsc_colocation-1 inf: msPostgresql clnPingCheck
    colocation rsc_colocation-2 inf: master-group msPostgresql:Master

    order rsc_order-1 0: clnPingCheck msPostgresql
    order rsc_order-2 0: msPostgresql:promote master-group:start symmetrical=false
    order rsc_order-3 0: msPostgresql:demote master-group:stop symmetrical=false
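The location rules for vip-slave are easiest to read as a lookup from a node's pgsql-status attribute to a score. The function below is only a shell model of rsc_location-1 for illustration (Pacemaker evaluates the rules itself, and `vip_slave_score` is hypothetical). Each status matches exactly one rule: the sync standby scores 200, the primary 100, and any other or missing status -INFINITY, so vip-slave runs on the sync standby when one exists and falls back to the primary otherwise.

```shell
#!/bin/sh
# Model of rsc_location-1 (illustration only; Pacemaker evaluates the rules
# itself): print the score vip-slave gets on a node with the given pgsql-status.
# Call with no argument to model "not_defined pgsql-status".
vip_slave_score() {
  case ${1-} in
    "")      printf '%s\n' -INFINITY ;;  # rule -inf: not_defined pgsql-status
    HS:sync) printf '%s\n' 200 ;;        # rule 200: pgsql-status eq "HS:sync"
    PRI)     printf '%s\n' 100 ;;        # rule 100: pgsql-status eq "PRI"
    *)       printf '%s\n' -INFINITY ;;  # rule -inf: neither HS:sync nor PRI
  esac
}
```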

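Load the configuration script (crm warns that several operation timeouts are smaller than the advised values):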

    [root@node1 ~]# crm configure load update pgsql.crm
    WARNING: pgsql: specified timeout 60s for stop is smaller than the advised 120
    WARNING: pgsql: specified timeout 60s for start is smaller than the advised 120
    WARNING: pgsql: specified timeout 60s for notify is smaller than the advised 90
    WARNING: pgsql: specified timeout 60s for demote is smaller than the advised 120
    WARNING: pgsql: specified timeout 60s for promote is smaller than the advised 120
    WARNING: pingCheck: specified timeout 60s for start is smaller than the advised 90
    WARNING: pingCheck: specified timeout 60s for stop is smaller than the advised 100

After a while, check the HA status:

    [root@node1 ~]# crm_mon -Afr1
    ============
    Last updated: Mon Jan 27 05:23:59 2014
    Stack: Heartbeat
    Current DC: node1 (30b7dc95-25c5-40d7-b1e4-7eaf2d5cdf07) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    4 Resources configured.
    ============

    Online: [ node1 ]
    OFFLINE: [ node2 ]

    Full list of resources:

    vip-slave (ocf::heartbeat:IPaddr2): Started node1
     Resource Group: master-group
         vip-master (ocf::heartbeat:IPaddr2): Started node1
         vip-rep (ocf::heartbeat:IPaddr2): Started node1
     Master/Slave Set: msPostgresql
         Masters: [ node1 ]
         Stopped: [ pgsql:0 ]
     Clone Set: clnPingCheck
         Started: [ node1 ]
         Stopped: [ pingCheck:1 ]

    Node Attributes:
    * Node node1:
        + default_ping_set : 100
        + master-pgsql:1 : 1000
        + pgsql-data-status : LATEST
        + pgsql-master-baseline : 0000000003000078
        + pgsql-status : PRI

    Migration summary:
    * Node node1:

Note: right after startup pgsql runs as a slave; after a while it is automatically promoted to master.

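Once all resources are running normally on node1, take a base backup on node2 (pg_basebackup requires the target data directory to be empty or nonexistent):

    [postgres@node2 data]$ pg_basebackup -h 192.168.2.3 -U postgres -D /opt/pgsql/data/ -P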
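Because pg_basebackup refuses to write into a data directory that exists and is not empty, the old /opt/pgsql/data on node2 has to be moved aside or emptied first. A hedged wrapper that checks this before calling pg_basebackup with the same options as above; `basebackup_from_master` is hypothetical, and the PG_BASEBACKUP override is an assumption that exists only so the check can be tried without a server.

```shell
#!/bin/sh
# Hypothetical wrapper: refuse to run pg_basebackup into a non-empty data
# directory, then take the base backup through vip-rep with the options above.
# PG_BASEBACKUP may point to another binary (assumption, useful for dry runs).
basebackup_from_master() {
  local datadir=${1:-/opt/pgsql/data} host=${2:-192.168.2.3}
  if [ -d "$datadir" ] && [ -n "$(ls -A "$datadir")" ]; then
    echo "refusing: $datadir is not empty; move the old data aside first" >&2
    return 1
  fi
  "${PG_BASEBACKUP:-pg_basebackup}" -h "$host" -U postgres -D "$datadir" -P
}
```

Run as postgres on node2: `basebackup_from_master /opt/pgsql/data`.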

Start heartbeat on node2:

    [root@node2 ~]# service heartbeat start

After a while, check the cluster status:

    [root@node1 ~]# crm_mon -Afr1
    ============
    Last updated: Mon Jan 27 05:27:22 2014
    Stack: Heartbeat
    Current DC: node1 (30b7dc95-25c5-40d7-b1e4-7eaf2d5cdf07) - partition with quorum
    Version: 1.0.12-unknown
    2 Nodes configured, unknown expected votes
    4 Resources configured.
    ============

    Online: [ node1 node2 ]

    Full list of resources:

    vip-slave (ocf::heartbeat:IPaddr2): Started node2
     Resource Group: master-group
         vip-master (ocf::heartbeat:IPaddr2): Started node1
         vip-rep (ocf::heartbeat:IPaddr2): Started node1
     Master/Slave Set: msPostgresql
         Masters: [ node1 ]
         Slaves: [ node2 ]
     Clone Set: clnPingCheck
         Started: [ node1 node2 ]

    Node Attributes:
    * Node node1:
        + default_ping_set : 100
        + master-pgsql:1 : 1000
        + pgsql-data-status : LATEST
        + pgsql-master-baseline : 0000000003000078
        + pgsql-status : PRI
    * Node node2:
        + default_ping_set : 100
        + master-pgsql:0 : 100
        + pgsql-data-status : STREAMING|SYNC
        + pgsql-status : HS:sync

    Migration summary:
    * Node node2:
    * Node node1:

{vip-slave has moved from node1 to node2, and streaming replication is healthy: node2 reports pgsql-status HS:sync}
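The replication state of each node can also be read from the "Node Attributes" section in a script, for example to check that node2 reports HS:sync. A sketch, assuming the `+ name : value` layout shown above; `node_attr` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `crm_mon -Afr1` output on stdin and print the value
# of attribute ATTR (default pgsql-status) listed under "* Node NODE:".
node_attr() {
  awk -v n="$1" -v a="${2:-pgsql-status}" '
    /^[ \t]*\* Node / { cur = $3; sub(/:$/, "", cur) }   # "* Node node2:" -> node2
    cur == n && $1 == "+" && $2 == a { print $4; exit }'
}
```

Usage: `[ "$(crm_mon -Afr1 | node_attr node2)" = HS:sync ] && echo "node2 is a sync standby"`.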